Junda Cheng

A Wavelet-based Stereo Matching Framework for Solving Frequency Convergence Inconsistency

May 23, 2025

Abstract: We find that the EPE evaluation metrics of RAFT-Stereo converge inconsistently in the low- and high-frequency regions, resulting in high-frequency degradation (e.g., edges and thin objects) during the iterative process. The underlying reason for the limited performance of current iterative methods is that they optimize all frequency components together without distinguishing between high and low frequencies. We propose a wavelet-based stereo matching framework (Wavelet-Stereo) for solving frequency convergence inconsistency. Specifically, we first explicitly decompose an image into high- and low-frequency components using the discrete wavelet transform. Then, the high-frequency and low-frequency components are fed into two different multi-scale frequency feature extractors. Finally, we propose a novel LSTM-based high-frequency preservation update operator containing an iterative frequency adapter to provide adaptive refined high-frequency features at different iteration steps by fine-tuning the initial high-frequency features. By processing high- and low-frequency components separately, our framework can simultaneously refine high-frequency information in edges and low-frequency information in smooth regions, which is especially suitable for challenging scenes with fine details and textures in the distance. Extensive experiments demonstrate that our Wavelet-Stereo outperforms the state-of-the-art methods and ranks 1st on both the KITTI 2015 and KITTI 2012 leaderboards for almost all metrics. We will provide code and pre-trained models to encourage further exploration, application, and development of our framework (https://github.com/SIA-IDE/Wavelet-Stereo).
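The decomposition step in this abstract can be illustrated with a one-level 2D Haar transform, the simplest discrete wavelet transform. This is a minimal sketch, not the Wavelet-Stereo implementation: the abstract does not say which wavelet the authors use, and the function name `haar_dwt2` and the sub-band names are chosen here for illustration only.

```python
def haar_dwt2(image):
    """One-level orthonormal 2D Haar DWT of a list-of-lists image.

    Assumes even height and width. Returns four half-resolution sub-bands:
    approx (low frequency) plus row, column, and diagonal detail
    (high frequency).
    """
    rows, cols = len(image), len(image[0])
    if rows % 2 or cols % 2:
        raise ValueError("height and width must be even")
    approx, row_detail, col_detail, diag_detail = [], [], [], []
    for r in range(0, rows, 2):
        a_row, r_row, c_row, d_row = [], [], [], []
        for c in range(0, cols, 2):
            # The 2x2 block: top-left, top-right, bottom-left, bottom-right.
            tl, tr = image[r][c], image[r][c + 1]
            bl, br = image[r + 1][c], image[r + 1][c + 1]
            a_row.append((tl + tr + bl + br) / 2.0)  # local average
            r_row.append((tl + tr - bl - br) / 2.0)  # change between the two rows
            c_row.append((tl - tr + bl - br) / 2.0)  # change between the two columns
            d_row.append((tl - tr - bl + br) / 2.0)  # diagonal change
        approx.append(a_row)
        row_detail.append(r_row)
        col_detail.append(c_row)
        diag_detail.append(d_row)
    return approx, row_detail, col_detail, diag_detail


# A vertical edge between columns 0 and 1 shows up only in the column detail.
image = [[0, 8, 8, 8]] * 4
approx, row_detail, col_detail, diag_detail = haar_dwt2(image)
```

In this toy input the smooth content lands in `approx`, while the edge is isolated in `col_detail`, which is the kind of separation the two feature extractors described above would operate on.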

BANet: Bilateral Aggregation Network for Mobile Stereo Matching

Mar 05, 2025

Abstract: State-of-the-art stereo matching methods typically use costly 3D convolutions to aggregate a full cost volume, but their computational demands make mobile deployment challenging. Directly applying 2D convolutions for cost aggregation often results in edge blurring, detail loss, and mismatches in textureless regions. Some complex operations, like deformable convolutions and iterative warping, can partially alleviate this issue; however, they are not mobile-friendly, limiting their deployment on mobile devices. In this paper, we present a novel bilateral aggregation network (BANet) for mobile stereo matching that produces high-quality results with sharp edges and fine details using only 2D convolutions. Specifically, we first separate the full cost volume into detailed and smooth volumes using a spatial attention map, then perform detailed and smooth aggregations accordingly, ultimately fusing both to obtain the final disparity map. Additionally, to accurately identify high-frequency detailed regions and low-frequency smooth/textureless regions, we propose a new scale-aware spatial attention module. Experimental results demonstrate that our BANet-2D significantly outperforms other mobile-friendly methods, achieving 35.3% higher accuracy on the KITTI 2015 leaderboard than MobileStereoNet-2D, with faster runtime on mobile devices. The extended 3D version, BANet-3D, achieves the highest accuracy among all real-time methods on high-end GPUs. Code: https://github.com/gangweiX/BANet.
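One plausible reading of the cost-volume separation described in this abstract is an element-wise split: attention weights `A` select the detailed part and `1 - A` the smooth part. The sketch below assumes that form; the abstract does not give the formula, and `split_cost_volume` is a hypothetical helper, not BANet code.

```python
def split_cost_volume(cost_volume, attention):
    """Split a D x H x W cost volume with an H x W attention map in [0, 1].

    Assumed form (not confirmed by the abstract):
    detailed = A * C and smooth = (1 - A) * C, applied to every disparity slice.
    """
    detailed, smooth = [], []
    for cost_slice in cost_volume:  # one H x W slice per disparity hypothesis
        detailed.append([[a * c for a, c in zip(a_row, c_row)]
                         for a_row, c_row in zip(attention, cost_slice)])
        smooth.append([[(1.0 - a) * c for a, c in zip(a_row, c_row)]
                       for a_row, c_row in zip(attention, cost_slice)])
    return detailed, smooth


# Toy volume with 2 disparities, 1 row, 2 columns.
cost = [[[1.0, 2.0]], [[3.0, 4.0]]]
attention = [[0.25, 1.0]]  # the second pixel is treated as fully "detailed"
detailed, smooth = split_cost_volume(cost, attention)
```

Under this assumption the two volumes sum back to the original, so nothing is lost by the split; each branch can then be aggregated with its own 2D convolutions before fusion.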

StereoGen: High-quality Stereo Image Generation from a Single Image

Jan 15, 2025

Abstract: State-of-the-art supervised stereo matching methods have achieved impressive results on various benchmarks. However, these data-driven methods generalize poorly to real-world scenarios due to the lack of real-world annotated data. In this paper, we propose StereoGen, a novel pipeline for high-quality stereo image generation. This pipeline uses arbitrary single images as left images and pseudo disparities generated by a monocular depth estimation model to synthesize high-quality corresponding right images. Unlike previous methods that fill the occluded area in warped right images with random backgrounds or use convolutions to selectively take nearby pixels, we fine-tune a diffusion inpainting model to recover the background. Images generated by our model possess better details and undamaged semantic structures. In addition, we propose Training-free Confidence Generation and Adaptive Disparity Selection. The former suppresses the negative effect of harmful pseudo ground truth during stereo training, while the latter helps generate a wider disparity distribution and better synthetic images. Experiments show that models trained under our pipeline achieve state-of-the-art zero-shot generalization results among all published methods. The code will be available upon publication of the paper.
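The synthesis step in this abstract relies on warping the left image by its disparity, which leaves holes where the background was hidden in the left view. A minimal single-row sketch follows, assuming rectified images where a left pixel at column `x` with disparity `d` appears at column `x - d` in the right view. The helper `forward_warp_row` is illustrative only and does not come from the StereoGen code.

```python
def forward_warp_row(left_row, disparity_row):
    """Forward-warp one image row from the left view to the right view.

    Returns the warped row (None where no left pixel landed) and a hole mask.
    When several pixels land on the same column, the one with the larger
    disparity (closer to the camera) wins.
    """
    width = len(left_row)
    right_row = [None] * width
    best_disp = [-1.0] * width
    for x, (value, disp) in enumerate(zip(left_row, disparity_row)):
        target = x - int(round(disp))
        if 0 <= target < width and disp > best_disp[target]:
            right_row[target] = value
            best_disp[target] = disp
    hole_mask = [value is None for value in right_row]
    return right_row, hole_mask


# A two-pixel foreground object (disparity 2) in front of a flat background.
left_row = [10, 20, 30, 40, 50, 60]
disparity_row = [0, 0, 2, 2, 0, 0]
right_row, hole_mask = forward_warp_row(left_row, disparity_row)
```

The `True` entries in `hole_mask` mark the region that an inpainting model, such as the fine-tuned diffusion model mentioned above, would have to fill.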

MonSter: Marry Monodepth to Stereo Unleashes Power

Jan 15, 2025

Abstract: Stereo matching recovers depth from image correspondences. Existing methods struggle to handle ill-posed regions with limited matching cues, such as occlusions and textureless areas. To address this, we propose MonSter, a novel method that leverages the complementary strengths of monocular depth estimation and stereo matching. MonSter integrates monocular depth and stereo matching into a dual-branch architecture in which the two branches iteratively improve each other. Confidence-based guidance adaptively selects reliable stereo cues for monodepth scale-shift recovery. The refined monodepth in turn guides stereo effectively in ill-posed regions. Such iterative mutual enhancement enables MonSter to evolve monodepth priors from coarse object-level structures to pixel-level geometry, fully unlocking the potential of stereo matching. As shown in Fig.1, MonSter ranks 1st across the five most commonly used leaderboards (SceneFlow, KITTI 2012, KITTI 2015, Middlebury, and ETH3D), achieving up to 49.5% improvement (Bad 1.0 on ETH3D) over the previous best method. Comprehensive analysis verifies the effectiveness of MonSter in ill-posed regions. In terms of zero-shot generalization, MonSter significantly and consistently outperforms state-of-the-art methods across the board. The code is publicly available at: https://github.com/Junda24/MonSter.
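Scale-shift recovery means finding a scale `s` and shift `t` so that `s * mono + t` matches the stereo estimate. MonSter does this inside a learned network; as a rough intuition only, the sketch below uses a classic closed-form weighted least-squares fit, with confidence values acting as weights. The function name and the toy numbers are assumptions made for illustration.

```python
def fit_scale_shift(mono, stereo, confidence):
    """Weighted least squares for s, t minimizing sum w * (s * m + t - d) ** 2.

    mono, stereo, and confidence are flat lists of equal length; pixels with
    confidence 0 do not influence the fit.
    """
    sw = sum(confidence)
    swm = sum(w * m for w, m in zip(confidence, mono))
    swd = sum(w * d for w, d in zip(confidence, stereo))
    swmm = sum(w * m * m for w, m in zip(confidence, mono))
    swmd = sum(w * m * d for w, m, d in zip(confidence, mono, stereo))
    denom = sw * swmm - swm * swm
    if denom == 0:
        raise ValueError("need at least two confident pixels with distinct mono values")
    scale = (sw * swmd - swm * swd) / denom
    shift = (swd - scale * swm) / sw
    return scale, shift


# Stereo follows 2 * mono + 0.5 except for one unreliable pixel, which gets weight 0.
mono = [1.0, 2.0, 3.0, 4.0]
stereo = [2.5, 4.5, 6.5, 100.0]
confidence = [1.0, 1.0, 1.0, 0.0]
scale, shift = fit_scale_shift(mono, stereo, confidence)
```

Zeroing the weight of the outlier keeps it from distorting the fit, which mirrors the idea of selecting only reliable stereo cues.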

Leveraging Consistent Spatio-Temporal Correspondence for Robust Visual Odometry

Dec 22, 2024

Abstract: Recent approaches to visual odometry (VO) have significantly improved performance by using deep networks to predict optical flow between video frames. However, existing methods still suffer from noisy and inconsistent flow matching, making it difficult to handle challenging scenarios and long-sequence estimation. To overcome these challenges, we introduce Spatio-Temporal Visual Odometry (STVO), a novel deep network architecture that effectively leverages inherent spatio-temporal cues to enhance the accuracy and consistency of multi-frame flow matching. With more accurate and consistent flow matching, STVO can achieve better pose estimation through bundle adjustment (BA). Specifically, STVO introduces two components: 1) the Temporal Propagation Module, which uses multi-frame information to extract and propagate temporal cues across adjacent frames, maintaining temporal consistency; 2) the Spatial Activation Module, which uses geometric priors from the depth maps to enhance spatial consistency while filtering out excessive noise and incorrect matches. Our STVO achieves state-of-the-art performance on the TUM-RGBD, EuRoC MAV, ETH3D and KITTI Odometry benchmarks. Notably, it improves accuracy by 77.8% on the ETH3D benchmark and 38.9% on the KITTI Odometry benchmark over the previous best methods.

RoMeO: Robust Metric Visual Odometry

Dec 16, 2024

Abstract: Visual odometry (VO) aims to estimate camera poses from visual inputs, a fundamental building block for many applications such as VR/AR and robotics. This work focuses on monocular RGB VO, where the input is a monocular RGB video without IMU or 3D sensors. Existing approaches lack robustness under this challenging scenario and fail to generalize to unseen data (especially outdoors); they also cannot recover metric-scale poses. We propose Robust Metric Visual Odometry (RoMeO), a novel method that resolves these issues by leveraging priors from pre-trained depth models. RoMeO incorporates both monocular metric depth and multi-view stereo (MVS) models to recover metric scale, simplify correspondence search, provide better initialization and regularize optimization. Effective strategies are proposed to inject noise during training and adaptively filter noisy depth priors, which ensure the robustness of RoMeO on in-the-wild data. As shown in Fig.1, RoMeO advances the state-of-the-art (SOTA) by a large margin across 6 diverse datasets covering both indoor and outdoor scenes. Compared to the current SOTA, DPVO, RoMeO reduces both the relative (trajectory scale aligned with GT) and absolute trajectory errors by >50%. The performance gain also transfers to the full SLAM pipeline (with global BA & loop closure). Code will be released upon acceptance.

IGEV++: Iterative Multi-range Geometry Encoding Volumes for Stereo Matching

Sep 01, 2024

Abstract: Stereo matching is a core component in many computer vision and robotics systems. Despite significant advances over the last decade, handling matching ambiguities in ill-posed regions and large disparities remains an open challenge. In this paper, we propose a new deep network architecture, called IGEV++, for stereo matching. The proposed IGEV++ builds Multi-range Geometry Encoding Volumes (MGEV) that encode coarse-grained geometry information for ill-posed regions and large disparities and fine-grained geometry information for details and small disparities. To construct MGEV, we introduce an adaptive patch matching module that efficiently and effectively computes matching costs for large disparity ranges and/or ill-posed regions. We further propose a selective geometry feature fusion module to adaptively fuse multi-range and multi-granularity geometry features in MGEV. We then index the fused geometry features and input them to ConvGRUs to iteratively update the disparity map. MGEV efficiently handles large disparities and ill-posed regions, such as occlusions and textureless regions, and enjoys rapid convergence during iterations. Our IGEV++ achieves the best performance on the Scene Flow test set across all disparity ranges, up to 768px. Our IGEV++ also achieves state-of-the-art accuracy on the Middlebury, ETH3D, KITTI 2012, and KITTI 2015 benchmarks. Specifically, IGEV++ achieves a 3.23% 2-pixel outlier rate (Bad 2.0) on the large-disparity benchmark, Middlebury, representing error reductions of 31.9% and 54.8% compared to RAFT-Stereo and GMStereo, respectively. We also present a real-time version of IGEV++ that achieves the best performance among all published real-time methods on the KITTI benchmarks. The code is publicly available at https://github.com/gangweiX/IGEV-plusplus
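The metrics quoted in these abstracts can be made concrete with a short sketch. It assumes the usual definitions: EPE is the mean absolute disparity error over valid pixels, Bad τ is the percentage of valid pixels whose error exceeds τ pixels, and an "error reduction" is the relative drop with respect to a baseline. Official benchmark toolkits may differ in details such as masking, so treat the helper names and conventions here as illustrative.

```python
def disparity_errors(pred, gt, valid=None):
    """Absolute disparity errors over valid pixels (flat lists)."""
    if valid is None:
        valid = [True] * len(gt)
    errors = [abs(p - g) for p, g, v in zip(pred, gt, valid) if v]
    if not errors:
        raise ValueError("no valid pixels to evaluate")
    return errors


def epe(pred, gt, valid=None):
    """End-point error: mean absolute disparity error in pixels."""
    errors = disparity_errors(pred, gt, valid)
    return sum(errors) / len(errors)


def bad_rate(pred, gt, threshold=2.0, valid=None):
    """Percentage of valid pixels with error above `threshold` pixels."""
    errors = disparity_errors(pred, gt, valid)
    return 100.0 * sum(e > threshold for e in errors) / len(errors)


def relative_reduction(baseline, ours):
    """Relative error reduction in percent with respect to a baseline error."""
    return 100.0 * (baseline - ours) / baseline


# Toy prediction with one large error among four pixels.
pred = [10.0, 12.5, 30.0, 7.0]
gt = [10.5, 12.0, 33.0, 7.0]
mean_error = epe(pred, gt)
bad_2 = bad_rate(pred, gt, threshold=2.0)
```

Note that a single large error dominates EPE but counts only once in Bad 2.0, which is why the two metrics can rank methods differently.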

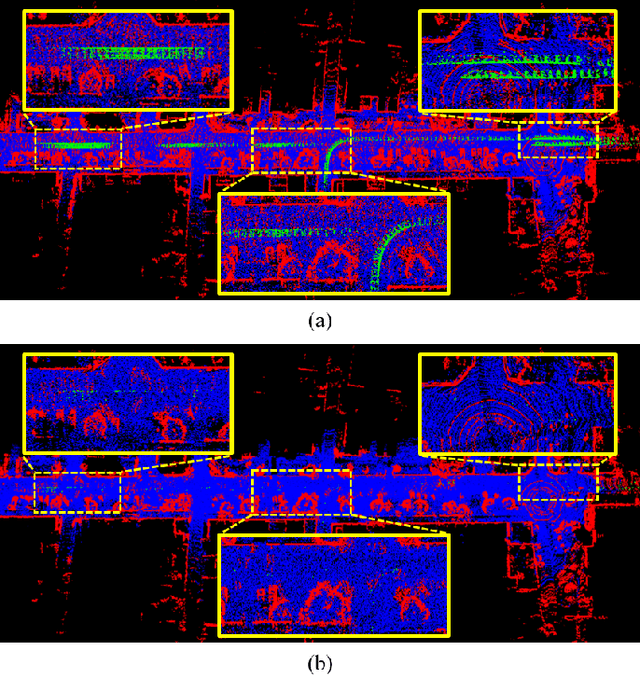

A Fast Dynamic Point Detection Method for LiDAR-Inertial Odometry in Driving Scenarios

Jul 04, 2024

Abstract: Existing 3D point-based dynamic point detection and removal methods have a significant time overhead, making them difficult to adapt to LiDAR-inertial odometry (LIO) systems. This paper proposes a label-consistency-based dynamic point detection and removal method for handling moving vehicles and pedestrians in autonomous driving scenarios, and embeds it into a self-designed LiDAR-inertial odometry system. Experimental results on three public datasets demonstrate that our method performs dynamic point detection and removal with extremely low computational overhead (i.e., 1~9 ms) in LIO systems, while achieving preservation and rejection rates comparable to state-of-the-art methods and significantly enhancing the accuracy of pose estimation. We have released the source code of this work for the development of the community.

MC-Stereo: Multi-peak Lookup and Cascade Search Range for Stereo Matching

Nov 04, 2023

Abstract: Stereo matching is a fundamental task in scene comprehension. In recent years, methods based on iterative optimization have shown promise in stereo matching. However, the current iteration framework employs a single-peak lookup, which struggles to handle the multi-peak problem effectively. Additionally, the fixed search range used during the iteration process limits the final convergence. To address these issues, we present a novel iterative optimization architecture called MC-Stereo. This architecture mitigates the multi-peak distribution problem in matching through a multi-peak lookup strategy, and integrates the coarse-to-fine concept into the iterative framework via a cascade search range. Furthermore, given that feature representation learning is crucial for successful learning-based stereo matching, we introduce a pre-trained network to serve as the feature extractor, enhancing the front end of the stereo matching pipeline. Based on these improvements, MC-Stereo ranks first among all publicly available methods on the KITTI-2012 and KITTI-2015 benchmarks, and also achieves state-of-the-art performance on ETH3D. The code will be open sourced after the publication of this paper.

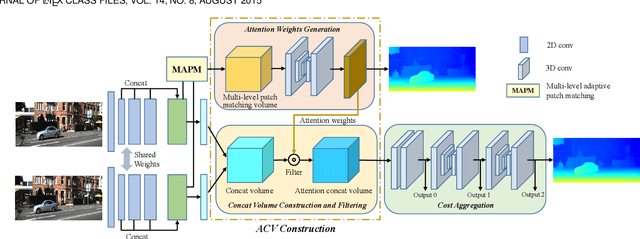

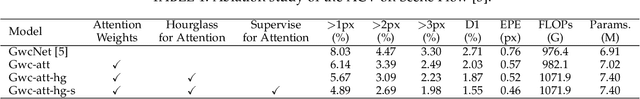

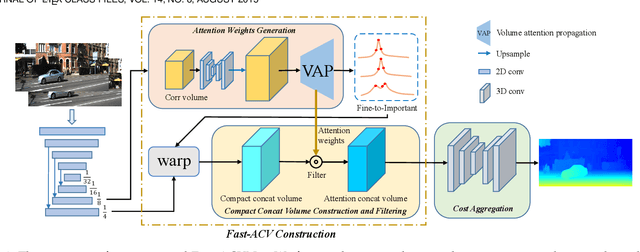

Accurate and Efficient Stereo Matching via Attention Concatenation Volume

Sep 27, 2022

Abstract: Stereo matching is a fundamental building block for many vision and robotics applications. An informative and concise cost volume representation is vital for stereo matching of high accuracy and efficiency. In this paper, we present a novel cost volume construction method, named attention concatenation volume (ACV), which generates attention weights from correlation clues to suppress redundant information and enhance matching-related information in the concatenation volume. The ACV can be seamlessly embedded into most stereo matching networks; the resulting networks can use a more lightweight aggregation network while achieving higher accuracy. We further design a fast version of ACV to enable real-time performance, named Fast-ACV, which generates high-likelihood disparity hypotheses and the corresponding attention weights from low-resolution correlation clues to significantly reduce computational and memory cost while maintaining satisfactory accuracy. The core idea of our Fast-ACV is volume attention propagation (VAP), which can automatically select accurate correlation values from an upsampled correlation volume and propagate these accurate values to the surrounding pixels with ambiguous correlation clues. Furthermore, we design a highly accurate network, ACVNet, and a real-time network, Fast-ACVNet, based on our ACV and Fast-ACV respectively, which achieve state-of-the-art performance on several benchmarks (i.e., our ACVNet ranks 2nd on KITTI 2015 and Scene Flow, and 3rd on KITTI 2012 and ETH3D among all the published methods; our Fast-ACVNet outperforms almost all state-of-the-art real-time methods on Scene Flow, KITTI 2012 and 2015 while also having better generalization ability).
