Xiaobao Wei

RSATalker: Realistic Socially-Aware Talking Head Generation for Multi-Turn Conversation

Jan 15, 2026

Abstract:Talking head generation is increasingly important in virtual reality (VR), especially for social scenarios involving multi-turn conversation. Existing approaches face notable limitations: mesh-based 3D methods can model dual-person dialogue but lack realistic textures, while large-model-based 2D methods produce natural appearances but incur prohibitive computational costs. Recently, 3D Gaussian Splatting (3DGS) based methods achieve efficient and realistic rendering but remain speaker-only and ignore social relationships. We introduce RSATalker, the first framework that leverages 3DGS for realistic and socially-aware talking head generation with support for multi-turn conversation. Our method first drives mesh-based 3D facial motion from speech, then binds 3D Gaussians to mesh facets to render high-fidelity 2D avatar videos. To capture interpersonal dynamics, we propose a socially-aware module that encodes social relationships, including blood and non-blood as well as equal and unequal, into high-level embeddings through a learnable query mechanism. We design a three-stage training paradigm and construct the RSATalker dataset with speech-mesh-image triplets annotated with social relationships. Extensive experiments demonstrate that RSATalker achieves state-of-the-art performance in both realism and social awareness. The code and dataset will be released.

ParkGaussian: Surround-view 3D Gaussian Splatting for Autonomous Parking

Jan 04, 2026

Abstract:Parking is a critical task for autonomous driving systems (ADS), with unique challenges in crowded parking slots and GPS-denied environments. However, existing works focus on 2D parking slot perception, mapping, and localization, while 3D reconstruction, which is crucial for capturing complex spatial geometry in parking scenarios, remains underexplored. Naively improving the visual quality of reconstructed parking scenes does not directly benefit autonomous parking, as the key entry point for parking is the slot perception module. To address these limitations, we curate the first benchmark named ParkRecon3D, specifically designed for parking scene reconstruction. It includes sensor data from four surround-view fisheye cameras with calibrated extrinsics and dense parking slot annotations. We then propose ParkGaussian, the first framework that integrates 3D Gaussian Splatting (3DGS) for parking scene reconstruction. To further improve the alignment between reconstruction and downstream parking slot detection, we introduce a slot-aware reconstruction strategy that leverages existing parking perception methods to enhance the synthesis quality of slot regions. Experiments on ParkRecon3D demonstrate that ParkGaussian achieves state-of-the-art reconstruction quality and better preserves perception consistency for downstream tasks. The code and dataset will be released at: https://github.com/wm-research/ParkGaussian

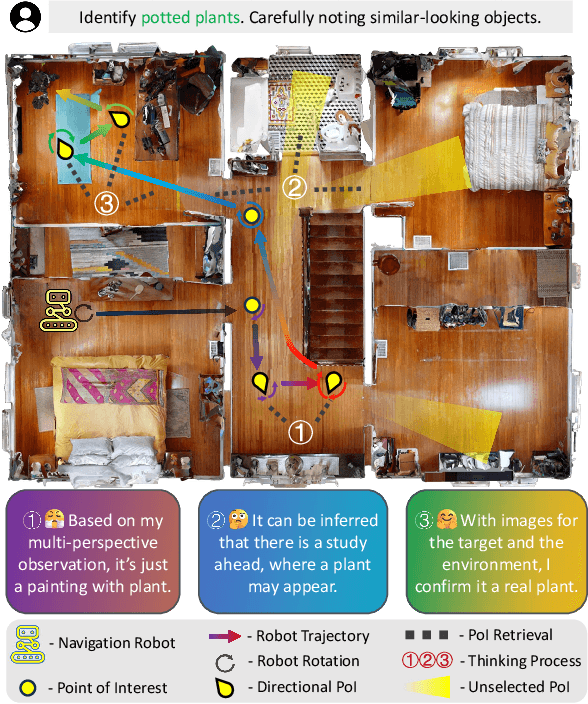

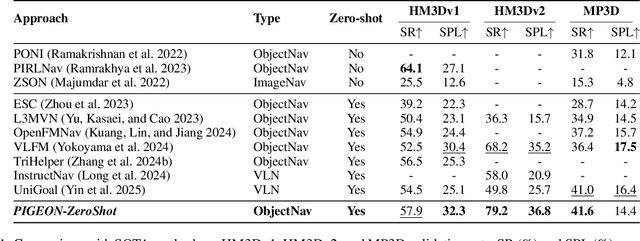

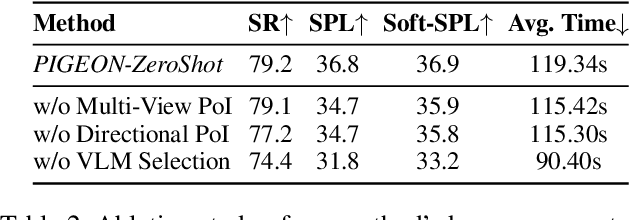

PIGEON: VLM-Driven Object Navigation via Points of Interest Selection

Nov 17, 2025

Abstract:Navigating to a specified object in an unknown environment is a fundamental yet challenging capability of embodied intelligence. However, current methods struggle to balance decision frequency with intelligence, resulting in decisions lacking foresight or discontinuous actions. In this work, we propose PIGEON: Point of Interest Guided Exploration for Object Navigation with VLM, maintaining a lightweight and semantically aligned snapshot memory during exploration as semantic input for the exploration strategy. We use a large Visual-Language Model (VLM), named PIGEON-VL, to select Points of Interest (PoI) formed during exploration and then employ a lower-level planner for action output, increasing the decision frequency. Additionally, this PoI-based decision-making enables the generation of Reinforcement Learning with Verifiable Reward (RLVR) data suitable for simulators. Experiments on classic object navigation benchmarks demonstrate that our zero-shot transfer method achieves state-of-the-art performance, while RLVR further enhances the model's semantic guidance capabilities, enabling deep reasoning during real-time navigation.

Rethinking Driving World Model as Synthetic Data Generator for Perception Tasks

Oct 22, 2025

Abstract:Recent advancements in driving world models enable controllable generation of high-quality RGB videos or multimodal videos. Existing methods primarily focus on metrics related to generation quality and controllability. However, they often overlook the evaluation of downstream perception tasks, which are really crucial for the performance of autonomous driving. Existing methods usually leverage a training strategy that first pretrains on synthetic data and finetunes on real data, resulting in twice the epochs compared to the baseline (real data only). When we double the epochs in the baseline, the benefit of synthetic data becomes negligible. To thoroughly demonstrate the benefit of synthetic data, we introduce Dream4Drive, a novel synthetic data generation framework designed for enhancing the downstream perception tasks. Dream4Drive first decomposes the input video into several 3D-aware guidance maps and subsequently renders the 3D assets onto these guidance maps. Finally, the driving world model is fine-tuned to produce the edited, multi-view photorealistic videos, which can be used to train the downstream perception models. Dream4Drive enables unprecedented flexibility in generating multi-view corner cases at scale, significantly boosting corner case perception in autonomous driving. To facilitate future research, we also contribute a large-scale 3D asset dataset named DriveObj3D, covering the typical categories in driving scenarios and enabling diverse 3D-aware video editing. We conduct comprehensive experiments to show that Dream4Drive can effectively boost the performance of downstream perception models under various training epochs. Project: https://wm-research.github.io/Dream4Drive/

OmniIndoor3D: Comprehensive Indoor 3D Reconstruction

May 27, 2025

Abstract:We propose a novel framework for comprehensive indoor 3D reconstruction using Gaussian representations, called OmniIndoor3D. This framework enables accurate appearance, geometry, and panoptic reconstruction of diverse indoor scenes captured by a consumer-level RGB-D camera. Since 3DGS is primarily optimized for photorealistic rendering, it lacks the precise geometry critical for high-quality panoptic reconstruction. Therefore, OmniIndoor3D first combines multiple RGB-D images to create a coarse 3D reconstruction, which is then used to initialize the 3D Gaussians and guide the 3DGS training. To decouple the optimization conflict between appearance and geometry, we introduce a lightweight MLP that adjusts the geometric properties of 3D Gaussians. The introduced lightweight MLP serves as a low-pass filter for geometry reconstruction and significantly reduces noise in indoor scenes. To improve the distribution of Gaussian primitives, we propose a densification strategy guided by panoptic priors to encourage smoothness on planar surfaces. Through the joint optimization of appearance, geometry, and panoptic reconstruction, OmniIndoor3D provides comprehensive 3D indoor scene understanding, which facilitates accurate and robust robotic navigation. We perform thorough evaluations across multiple datasets, and OmniIndoor3D achieves state-of-the-art results in appearance, geometry, and panoptic reconstruction. We believe our work bridges a critical gap in indoor 3D reconstruction. The code will be released at: https://ucwxb.github.io/OmniIndoor3D/

A Wavelet-based Stereo Matching Framework for Solving Frequency Convergence Inconsistency

May 23, 2025

Abstract:We find that the EPE evaluation metrics of RAFT-stereo converge inconsistently in the low and high frequency regions, resulting in high-frequency degradation (e.g., edges and thin objects) during the iterative process. The underlying reason for the limited performance of current iterative methods is that they optimize all frequency components together without distinguishing between high and low frequencies. We propose a wavelet-based stereo matching framework (Wavelet-Stereo) for solving frequency convergence inconsistency. Specifically, we first explicitly decompose an image into high and low frequency components using discrete wavelet transform. Then, the high-frequency and low-frequency components are fed into two different multi-scale frequency feature extractors. Finally, we propose a novel LSTM-based high-frequency preservation update operator containing an iterative frequency adapter to provide adaptive refined high-frequency features at different iteration steps by fine-tuning the initial high-frequency features. By processing high and low frequency components separately, our framework can simultaneously refine high-frequency information in edges and low-frequency information in smooth regions, which is especially suitable for challenging scenes with fine details and textures in the distance. Extensive experiments demonstrate that our Wavelet-Stereo outperforms the state-of-the-art methods and ranks 1st on both the KITTI 2015 and KITTI 2012 leaderboards for almost all metrics. We will provide code and pre-trained models to encourage further exploration, application, and development of our innovative framework (https://github.com/SIA-IDE/Wavelet-Stereo).
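
The frequency split described in this abstract can be illustrated with a single-level 2D Haar transform. This is only a minimal sketch: the abstract does not say which wavelet or library Wavelet-Stereo uses, so the Haar basis, the function name `haar_dwt2`, and the band names are assumptions chosen for illustration.

```python
def haar_dwt2(image):
    """Single-level 2D Haar transform (illustrative, not the paper's code).

    `image` is a list of equal-length rows with even height and width.
    Returns four half-resolution bands:
      approx   - low-frequency content (scaled local average)
      col_diff - differences between left and right pixels
      row_diff - differences between top and bottom pixels
      diag     - diagonal differences
    """
    rows, cols = len(image), len(image[0])
    if rows % 2 or cols % 2:
        raise ValueError("image height and width must be even")

    approx, col_diff, row_diff, diag = [], [], [], []
    for r in range(0, rows, 2):
        bands = ([], [], [], [])
        for c in range(0, cols, 2):
            # The 2x2 block of pixels handled in this step.
            a, b = image[r][c], image[r][c + 1]
            d, e = image[r + 1][c], image[r + 1][c + 1]
            # Orthonormal Haar: each coefficient is a signed sum divided by 2.
            bands[0].append((a + b + d + e) / 2)
            bands[1].append((a - b + d - e) / 2)
            bands[2].append((a + b - d - e) / 2)
            bands[3].append((a - b - d + e) / 2)
        approx.append(bands[0])
        col_diff.append(bands[1])
        row_diff.append(bands[2])
        diag.append(bands[3])
    return approx, col_diff, row_diff, diag


# A flat region has no high-frequency content; a vertical edge shows up
# only in the left/right difference band.
flat = haar_dwt2([[3, 3], [3, 3]])
edge = haar_dwt2([[0, 1], [0, 1]])
```

In this sketch the `approx` band plays the role of the low-frequency component and the three difference bands together play the role of the high-frequency component that the abstract routes to separate feature extractors.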

ManipDreamer: Boosting Robotic Manipulation World Model with Action Tree and Visual Guidance

Apr 23, 2025

Abstract:While recent advancements in robotic manipulation video synthesis have shown promise, significant challenges persist in ensuring effective instruction-following and achieving high visual quality. Recent methods, like RoboDreamer, utilize linguistic decomposition to divide instructions into separate lower-level primitives, conditioning the world model on these primitives to achieve compositional instruction-following. However, these separate primitives do not consider the relationships that exist between them. Furthermore, recent methods neglect valuable visual guidance, including depth and semantic guidance, both crucial for enhancing visual quality. This paper introduces ManipDreamer, an advanced world model based on the action tree and visual guidance. To better learn the relationships between instruction primitives, we represent the instruction as an action tree and assign embeddings to tree nodes, so that each instruction can acquire its embeddings by navigating through the action tree. The instruction embeddings can be used to guide the world model. To enhance visual quality, we combine depth and semantic guidance by introducing a visual guidance adapter compatible with the world model. This visual adapter enhances both the temporal and physical consistency of video generation. Based on the action tree and visual guidance, ManipDreamer significantly boosts the instruction-following ability and visual quality. Comprehensive evaluations on robotic manipulation benchmarks reveal that ManipDreamer achieves large improvements in video quality metrics in both seen and unseen tasks, with PSNR improved from 19.55 to 21.05, SSIM improved from 0.7474 to 0.7982, and Flow Error reduced from 3.506 to 3.201 in unseen tasks, compared to the recent RoboDreamer model. Additionally, our method increases the success rate of robotic manipulation tasks by 2.5% on average across 6 RLBench tasks.
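
To put the unseen-task numbers quoted above on a common scale, the short sketch below converts each before/after pair into a relative improvement. Only the six metric values come from the abstract; the helper name `relative_gain` and the treatment of Flow Error as lower-is-better are assumptions made for this illustration.

```python
# Unseen-task metrics quoted in the abstract: (RoboDreamer, ManipDreamer, higher_is_better).
reported = {
    "PSNR": (19.55, 21.05, True),
    "SSIM": (0.7474, 0.7982, True),
    "Flow Error": (3.506, 3.201, False),  # assumed lower-is-better
}


def relative_gain(before, after, higher_is_better):
    """Fractional improvement over the baseline, positive when the metric gets better."""
    change = (after - before) / before
    return change if higher_is_better else -change


# Percentage improvement per metric, rounded to two decimals.
gains = {name: round(100 * relative_gain(*values), 2) for name, values in reported.items()}

for name, pct in gains.items():
    print(f"{name}: {pct}% relative improvement")
```

Under these assumptions the three metrics improve by roughly 7.7%, 6.8%, and 8.7% respectively, so the gains are of similar relative size across fidelity and motion consistency.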

EmbodiedOcc++: Boosting Embodied 3D Occupancy Prediction with Plane Regularization and Uncertainty Sampler

Apr 13, 2025

Abstract:Online 3D occupancy prediction provides a comprehensive spatial understanding of embodied environments. While the innovative EmbodiedOcc framework utilizes 3D semantic Gaussians for progressive indoor occupancy prediction, it overlooks the geometric characteristics of indoor environments, which are primarily characterized by planar structures. This paper introduces EmbodiedOcc++, enhancing the original framework with two key innovations: a Geometry-guided Refinement Module (GRM) that constrains Gaussian updates through plane regularization, along with a Semantic-aware Uncertainty Sampler (SUS) that enables more effective updates in overlapping regions between consecutive frames. GRM regularizes the position update to align with surface normals. It determines the adaptive regularization weight using curvature-based and depth-based constraints, allowing semantic Gaussians to align accurately with planar surfaces while adapting in complex regions. To effectively improve geometric consistency from different views, SUS adaptively selects proper Gaussians to update. Comprehensive experiments on the EmbodiedOcc-ScanNet benchmark demonstrate that EmbodiedOcc++ achieves state-of-the-art performance across different settings. Our method demonstrates improved edge accuracy and retains more geometric details while ensuring computational efficiency, which is essential for online embodied perception. The code will be released at: https://github.com/PKUHaoWang/EmbodiedOcc2.
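
One way to picture "regularizing the position update to align with surface normals" is to blend a raw update with its projection onto the normal, using a weight in [0, 1]. The sketch below is an assumption made for illustration, not the formula used by EmbodiedOcc++: the function name `regularize_update`, the linear blend, and the fixed scalar weight are all hypothetical, and the abstract's curvature-based and depth-based weight computation is not modeled.

```python
import math


def regularize_update(delta, normal, weight):
    """Blend a 3D position update with its component along a surface normal.

    weight = 0 leaves the update unchanged; weight = 1 keeps only the part
    along the normal, removing motion within the plane. Hypothetical sketch.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    length = math.sqrt(sum(x * x for x in normal))
    if length == 0.0:
        raise ValueError("normal must be non-zero")
    unit = [x / length for x in normal]

    # Scalar length of the update along the normal, then the projected vector.
    along = sum(d * n for d, n in zip(delta, unit))
    projected = [along * n for n in unit]

    # Linear blend between the raw update and its normal-aligned projection.
    return [(1.0 - weight) * d + weight * p for d, p in zip(delta, projected)]


# On a floor with normal +z, a fully regularized update keeps only the z motion.
flat_region = regularize_update([1.0, 2.0, 3.0], [0.0, 0.0, 1.0], 1.0)
complex_region = regularize_update([1.0, 2.0, 3.0], [0.0, 0.0, 1.0], 0.0)
```

In this reading, a high weight would suit planar regions and a low weight would leave updates free in geometrically complex regions, which matches the adaptive behavior the abstract describes only at a conceptual level.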

DNRSelect: Active Best View Selection for Deferred Neural Rendering

Jan 21, 2025

Abstract:Deferred neural rendering (DNR) is an emerging computer graphics pipeline designed for high-fidelity rendering and robotic perception. However, DNR heavily relies on datasets composed of numerous ray-traced images and demands substantial computational resources. It remains under-explored how to reduce the reliance on high-quality ray-traced images while maintaining the rendering fidelity. In this paper, we propose DNRSelect, which integrates a reinforcement learning-based view selector and a 3D texture aggregator for deferred neural rendering. We first propose a novel view selector for deferred neural rendering based on reinforcement learning, which is trained on easily obtained rasterized images to identify the optimal views. By acquiring only a few ray-traced images for these selected views, the selector enables DNR to achieve high-quality rendering. To further enhance spatial awareness and geometric consistency in DNR, we introduce a 3D texture aggregator that fuses pyramid features from depth maps and normal maps with UV maps. Given that acquiring ray-traced images is more time-consuming than generating rasterized images, DNRSelect minimizes the need for ray-traced data by using only a few selected views while still achieving high-fidelity rendering results. We conduct detailed experiments and ablation studies on the NeRF-Synthetic dataset to demonstrate the effectiveness of DNRSelect. The code will be released.

GraphAvatar: Compact Head Avatars with GNN-Generated 3D Gaussians

Dec 18, 2024

Abstract:Rendering photorealistic head avatars from arbitrary viewpoints is crucial for various applications like virtual reality. Although previous methods based on Neural Radiance Fields (NeRF) can achieve impressive results, they lack fidelity and efficiency. Recent methods using 3D Gaussian Splatting (3DGS) have improved rendering quality and real-time performance but still require significant storage overhead. In this paper, we introduce a method called GraphAvatar that utilizes Graph Neural Networks (GNN) to generate 3D Gaussians for the head avatar. Specifically, GraphAvatar trains a geometric GNN and an appearance GNN to generate the attributes of the 3D Gaussians from the tracked mesh. Therefore, our method can store the GNN models instead of the 3D Gaussians, significantly reducing the storage overhead to just 10MB. To reduce the impact of face-tracking errors, we also present a novel graph-guided optimization module to refine face-tracking parameters during training. Finally, we introduce a 3D-aware enhancer for post-processing to enhance the rendering quality. We conduct comprehensive experiments to demonstrate the advantages of GraphAvatar, surpassing existing methods in visual fidelity and storage consumption. The ablation study sheds light on the trade-offs between rendering quality and model size. The code will be released at: https://github.com/ucwxb/GraphAvatar