Tianle Xu

Panoramic Direct LiDAR-assisted Visual Odometry

Sep 14, 2024

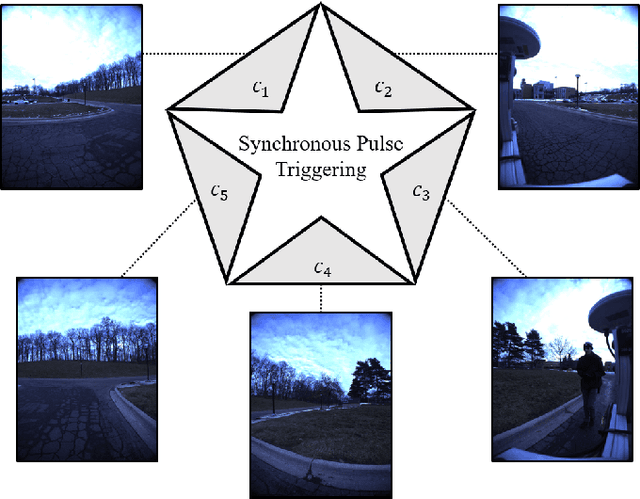

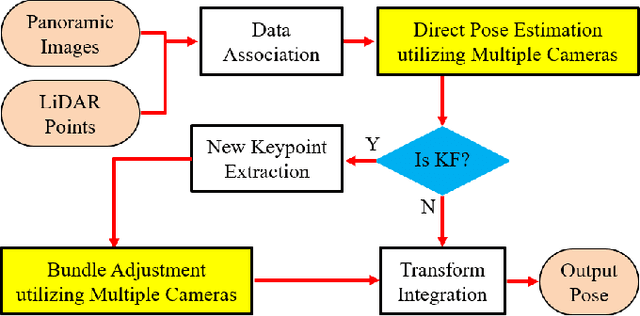

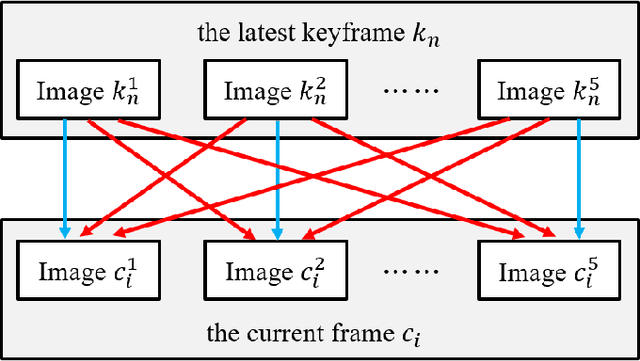

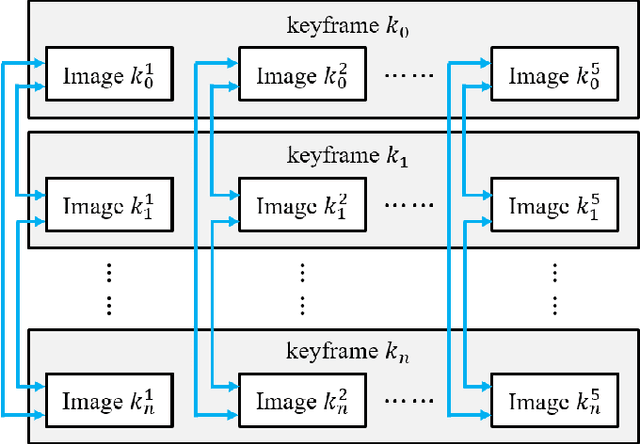

Abstract: Enhancing visual odometry by exploiting sparse depth measurements from LiDAR is a promising way to improve tracking accuracy. Most existing works use a monocular pinhole camera, but they can suffer from poor robustness because the limited field of view (FOV) provides less information. This paper proposes a panoramic direct LiDAR-assisted visual odometry that fully associates 360-degree FOV LiDAR points with 360-degree FOV panoramic image data. Panoramic images provide more information, which can compensate for inaccurate pose estimation caused by insufficient texture or motion blur in a single view. In addition to constraints between a specific view at different times, constraints can also be built between different views at the same moment. Experimental results on public datasets demonstrate the benefit of the large FOV of our panoramic direct LiDAR-assisted visual odometry compared with state-of-the-art approaches.

* 6 pages, 6 figures
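A minimal sketch of the kind of direct (photometric) residual a panoramic LiDAR-assisted visual odometry evaluates: a LiDAR point is projected into two panoramas and their intensities are compared. It assumes an equirectangular panorama with a camera frame of x right, y down, z forward, world-to-camera poses given as `(R, t)`, and grayscale images stored as lists of rows. The helper names (`project_equirect`, `sample_bilinear`, `photometric_residual`) are hypothetical, and the paper's actual panoramic camera model and residual may differ.

```python
import math

def transform(R, t, p):
    """Apply a rigid transform p_c = R p + t (R: 3x3 list of rows, t and p: 3-vectors)."""
    return [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]

def project_equirect(p_c, width, height):
    """Map a camera-frame point (x right, y down, z forward) to continuous pixel
    coordinates (u, v) in a width x height equirectangular image (assumed model)."""
    x, y, z = p_c
    lon = math.atan2(x, z)                  # azimuth in [-pi, pi], 0 = straight ahead
    lat = math.atan2(y, math.hypot(x, z))   # elevation in [-pi/2, pi/2], positive = down
    u = (lon / (2.0 * math.pi) + 0.5) * width
    v = (lat / math.pi + 0.5) * height
    return u % width, v                     # wrap so azimuth +/-pi meet at the same seam

def sample_bilinear(img, u, v):
    """Bilinearly interpolate a row-major grayscale image at (u, v), treating integer
    coordinates as pixel positions; wraps horizontally (360-degree) and clamps vertically."""
    h, w = len(img), len(img[0])
    u0, v0 = math.floor(u), math.floor(v)
    du, dv = u - u0, v - v0

    def px(r, c):
        return img[min(max(r, 0), h - 1)][c % w]

    top = (1 - du) * px(v0, u0) + du * px(v0, u0 + 1)
    bot = (1 - du) * px(v0 + 1, u0) + du * px(v0 + 1, u0 + 1)
    return (1 - dv) * top + dv * bot

def photometric_residual(p_w, pose_ref, pose_cur, img_ref, img_cur):
    """Intensity difference of one world-frame LiDAR point seen in two panoramas.
    Each pose is a hypothetical (R, t) pair mapping world coordinates into that camera."""
    h, w = len(img_ref), len(img_ref[0])
    u_r, v_r = project_equirect(transform(*pose_ref, p_w), w, h)
    u_c, v_c = project_equirect(transform(*pose_cur, p_w), w, h)
    return sample_bilinear(img_cur, u_c, v_c) - sample_bilinear(img_ref, u_r, v_r)

if __name__ == "__main__":
    identity = ([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]], [0.0, 0.0, 0.0])
    img = [[float(r * 8 + c) for c in range(8)] for r in range(4)]
    print(project_equirect([0.0, 0.0, 1.0], 8, 4))
    print(photometric_residual([0.3, -0.2, 1.0], identity, identity, img, img))
```

Wrapping `u` modulo the image width is what lets the 360-degree image be treated as continuous across its left/right seam; a direct method would then adjust the current pose to minimize the sum of squared residuals over many LiDAR points.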

Semi-Elastic LiDAR-Inertial Odometry

Jul 15, 2023

Abstract: Existing LiDAR-inertial state estimation methods treat the state at the beginning of the current sweep as equal to the state at the end of the previous sweep. However, if the previous state is inaccurate, the current state cannot consistently satisfy the constraints from both LiDAR and IMU, which in turn yields local inconsistency in the estimated states (e.g., a zigzag trajectory or high-frequency oscillations in velocity). To address this issue, this paper proposes a semi-elastic LiDAR-inertial state estimation method. Our method gives the state sufficient flexibility to be optimized to the correct value, thereby helping to ensure improved accuracy, consistency, and robustness of state estimation. We integrate the proposed method into an optimization-based LiDAR-inertial odometry (LIO) framework. Experimental results on four public datasets demonstrate that our method outperforms existing state-of-the-art LiDAR-inertial odometry systems in terms of accuracy. In addition, our semi-elastic LiDAR-inertial state estimation method better enhances accuracy, consistency, and robustness. We have released the source code of this work to contribute to advancements in LiDAR-inertial state estimation and benefit the broader research community.
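A toy 1D illustration of the difference between a rigid and an elastic sweep-start state, assuming scalar positions and quadratic costs. This is a generic soft-constraint example, not the paper's semi-elastic formulation. In the rigid model the start position `b` is frozen to the previous sweep's end estimate; with a weighted prior instead, the optimizer can move `b` when the IMU displacement and the LiDAR end-position measurement disagree with it. The function names and weights are hypothetical.

```python
def sweep_cost(b, e, p_prev_end, d_imu, z_lidar, w_prior, w_imu, w_lidar):
    """Weighted sum of squared prior, IMU-displacement and LiDAR-position residuals."""
    return (w_prior * (b - p_prev_end) ** 2
            + w_imu * (e - b - d_imu) ** 2
            + w_lidar * (e - z_lidar) ** 2)

def solve_sweep_elastic(p_prev_end, d_imu, z_lidar, w_prior, w_imu, w_lidar):
    """Minimize sweep_cost over both the start position b and the end position e."""
    # Normal equations A [b, e]^T = rhs, from setting both partial derivatives to zero.
    a00, a01 = w_prior + w_imu, -w_imu
    a10, a11 = -w_imu, w_imu + w_lidar
    r0 = w_prior * p_prev_end - w_imu * d_imu
    r1 = w_imu * d_imu + w_lidar * z_lidar
    det = a00 * a11 - a01 * a10  # = w_prior*w_imu + w_prior*w_lidar + w_imu*w_lidar > 0
    b = (r0 * a11 - a01 * r1) / det  # Cramer's rule
    e = (a00 * r1 - a10 * r0) / det
    return b, e

def solve_sweep_rigid(p_prev_end, d_imu, z_lidar, w_imu, w_lidar):
    """Same problem with the hard equality b == p_prev_end (start state frozen)."""
    b = p_prev_end
    e = (w_imu * (b + d_imu) + w_lidar * z_lidar) / (w_imu + w_lidar)
    return b, e

if __name__ == "__main__":
    # Synthetic values: previous end estimate 0.0, IMU displacement 1.0, LiDAR end position 1.5.
    b_el, e_el = solve_sweep_elastic(0.0, 1.0, 1.5, w_prior=1.0, w_imu=10.0, w_lidar=10.0)
    b_rg, e_rg = solve_sweep_rigid(0.0, 1.0, 1.5, w_imu=10.0, w_lidar=10.0)
    print(b_el, e_el)  # b ≈ 0.417, e ≈ 1.458
    print(b_rg, e_rg)  # b = 0.0, e = 1.25
```

With these synthetic values the IMU and LiDAR measurements are consistent with a start position of 0.5, while the previous end estimate is 0.0. The elastic solution moves `b` toward 0.5 and leaves IMU and LiDAR residuals of about 0.04, whereas the rigid solution must split the error between them (residuals of 0.25 each).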

LIW-OAM: Lidar-Inertial-Wheel Odometry and Mapping

Feb 28, 2023

Abstract: LiDAR-inertial odometry and mapping (LI-OAM), which fuses complementary information from a LiDAR and an Inertial Measurement Unit (IMU), is an attractive solution for pose estimation and mapping. In LI-OAM, both pose and velocity are state variables that need to be solved. However, the widely used Iterative Closest Point (ICP) algorithm can only provide constraints on pose, while velocity can only be constrained by IMU pre-integration. As a result, the velocity estimates tend to be updated along with the pose results. In this paper, we propose LIW-OAM, an accurate and robust LiDAR-inertial-wheel odometry and mapping system that fuses measurements from a LiDAR, an IMU and a wheel encoder in a bundle adjustment (BA) based optimization framework. The wheel encoder provides velocity measurements as an important observation, which helps LI-OAM produce a more accurate state prediction. In addition, constraining the velocity variable with wheel-encoder observations during optimization further improves the accuracy of state estimation. Experimental results on two public datasets demonstrate that our system outperforms all state-of-the-art LI-OAM systems, achieving smaller absolute trajectory error (ATE), and that embedding a wheel encoder can greatly improve the performance of BA-based LI-OAM.
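A minimal sketch of a wheel-encoder velocity residual that could sit alongside ICP and IMU pre-integration factors in a BA-style optimizer. It assumes the encoder frame coincides with the body frame (x forward, so no lever arm or extrinsic rotation), a non-holonomic vehicle with zero lateral and vertical velocity, and a body-to-world rotation `R_wb`. The helper names are hypothetical, and LIW-OAM's actual residual and noise model may differ.

```python
import math

def mat_t_vec(R, v):
    """Return R^T v for a 3x3 matrix R (list of rows) and a 3-vector v."""
    return [sum(R[r][c] * v[r] for r in range(3)) for c in range(3)]

def yaw_rotation(yaw):
    """Body-to-world rotation for a pure yaw angle (radians) about the z axis."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def wheel_velocity_residual(R_wb, v_w, wheel_speed):
    """Estimated body-frame velocity minus the wheel-encoder measurement.

    Assumes the measured velocity is (wheel_speed, 0, 0) in the body frame, i.e.
    the vehicle neither slides sideways nor moves vertically.
    """
    v_b = mat_t_vec(R_wb, v_w)  # rotate the world-frame velocity state into the body frame
    measured = [wheel_speed, 0.0, 0.0]
    return [est - meas for est, meas in zip(v_b, measured)]

if __name__ == "__main__":
    # Vehicle yawed 90 degrees, moving along world +y at 2 m/s, encoder reads 2 m/s.
    print(wheel_velocity_residual(yaw_rotation(math.pi / 2), [0.0, 2.0, 0.0], 2.0))
```

Because this residual depends directly on the velocity state, it constrains velocity in a way that ICP, which only observes pose, cannot; in an optimizer it would typically be weighted by the inverse of the encoder's measurement covariance.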
