Chen Ye

Stroke Modeling Enables Vectorized Character Generation with Large Vectorized Glyph Model

Nov 14, 2025

Abstract: Vectorized glyphs are widely used in poster design, network animation, art display, and various other fields due to their scalability and flexibility. In typography, they are often seen as special sequences composed of ordered strokes. This concept extends to the token sequence prediction abilities of large language models (LLMs), enabling vectorized character generation through stroke modeling. In this paper, we propose a novel Large Vectorized Glyph Model (LVGM) designed to generate vectorized Chinese glyphs by predicting the next stroke. First, we encode strokes into discrete latent variables called stroke embeddings. We then train our LVGM by fine-tuning DeepSeek LLM to predict the next stroke embedding. Given only a few strokes, it can generate complete characters, semantically elegant words, and even unseen verses in vectorized form. Moreover, we release a new large-scale, stroke-based Chinese SVG dataset containing 907,267 samples for dynamically vectorized glyph generation. Experimental results show that our model exhibits scaling behavior with data size. Our generated vectorized glyphs have been validated by experts and relevant individuals.

PanTS: The Pancreatic Tumor Segmentation Dataset

Jul 02, 2025

Abstract: PanTS is a large-scale, multi-institutional dataset curated to advance research in pancreatic CT analysis. It contains 36,390 CT scans from 145 medical centers, with expert-validated, voxel-wise annotations of over 993,000 anatomical structures, covering pancreatic tumors, pancreas head, body, and tail, and 24 surrounding anatomical structures such as vascular/skeletal structures and abdominal/thoracic organs. Each scan includes metadata such as patient age, sex, diagnosis, contrast phase, in-plane spacing, slice thickness, etc. AI models trained on PanTS achieve significantly better performance in pancreatic tumor detection, localization, and segmentation compared to those trained on existing public datasets. Our analysis indicates that these gains are directly attributable to the 16x larger-scale tumor annotations and indirectly supported by the 24 additional surrounding anatomical structures. As the largest and most comprehensive resource of its kind, PanTS offers a new benchmark for developing and evaluating AI models in pancreatic CT analysis.

Convex Hull-based Algebraic Constraint for Visual Quadric SLAM

Mar 03, 2025

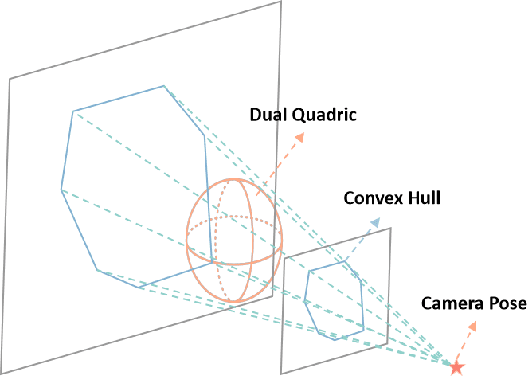

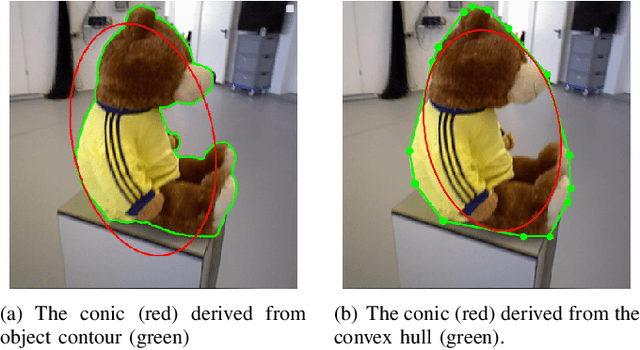

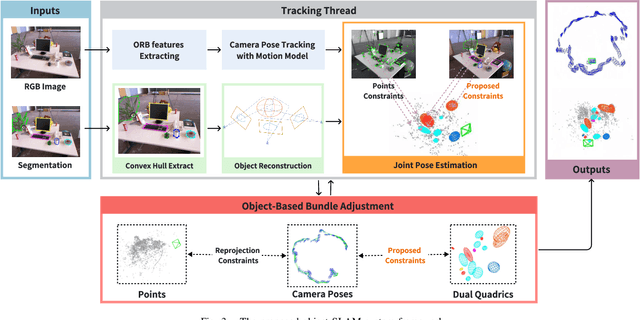

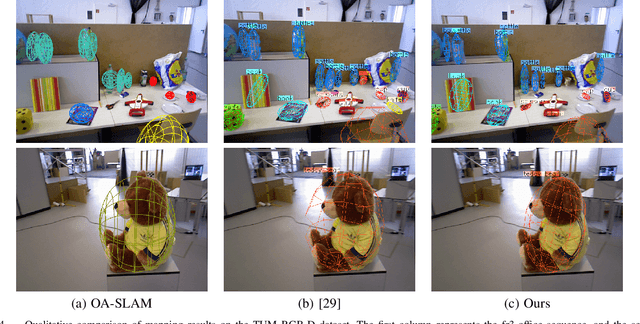

Abstract: Using quadrics as the object representation has the benefits of both generality and closed-form projection derivation between image and world spaces. Although numerous constraints have been proposed for dual quadric reconstruction, we found that many of them are imprecise and provide minimal improvements to localization. After scrutinizing the existing constraints, we introduce a concise yet more precise convex hull-based algebraic constraint for object landmarks, which is applied to object reconstruction, frontend pose estimation, and backend bundle adjustment. This constraint is designed to fully leverage precise semantic segmentation, effectively mitigating mismatches between complex-shaped object contours and dual quadrics. Experiments on public datasets demonstrate that our approach is applicable to both monocular and RGB-D SLAM and achieves better object mapping and localization than existing quadric SLAM methods. The implementation of our method is available at https://github.com/tiev-tongji/convexhull-based-algebraic-constraint.

MVC-VPR: Mutual Learning of Viewpoint Classification and Visual Place Recognition

Dec 13, 2024

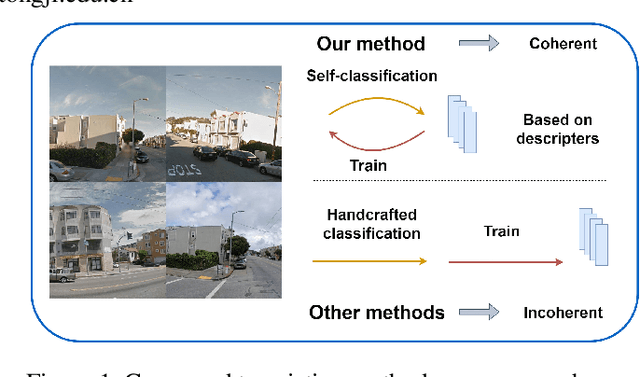

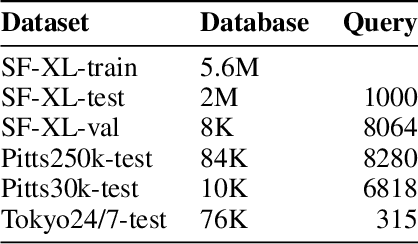

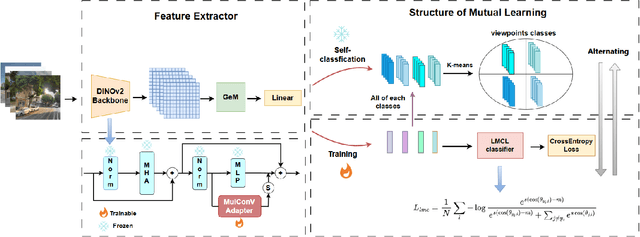

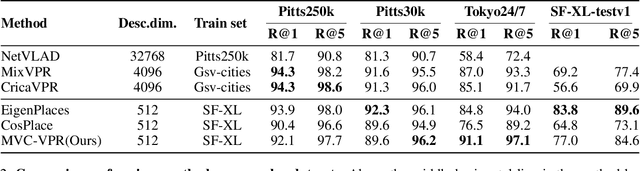

Abstract: Visual Place Recognition (VPR) aims to robustly identify locations by leveraging image retrieval based on descriptors encoded from environmental images. However, drastic appearance changes between images captured from different viewpoints at the same location produce incoherent supervision signals for descriptor learning, which severely hinders VPR performance. Previous work proposes classifying images based on manually defined rules or ground truth labels for viewpoints, followed by descriptor training based on the classification results. However, not all datasets have ground truth viewpoint labels, and manually defined rules may be suboptimal, leading to degraded descriptor performance. To address these challenges, we introduce the mutual learning of viewpoint self-classification and VPR. Starting from coarse classification based on geographical coordinates, we progress to finer classification of viewpoints using simple clustering techniques. The dataset is partitioned in an unsupervised manner while simultaneously training a descriptor extractor for place recognition. Experimental results show that this approach almost perfectly partitions the dataset based on viewpoints, thus achieving mutually reinforcing effects. Our method even outperforms state-of-the-art (SOTA) methods that partition datasets using ground truth labels.

Exploring Mathematical Extrapolation of Large Language Models with Synthetic Data

Jun 04, 2024

Abstract: Large Language Models (LLMs) have shown excellent performance in language understanding, text generation, code synthesis, and many other tasks, yet they still struggle with complex multi-step reasoning problems, such as mathematical reasoning. In this paper, through a newly proposed arithmetical puzzle problem, we show that the model can perform well on multi-step reasoning tasks via fine-tuning on high-quality synthetic data. Experimental results with the open-llama-3B model on three different test datasets show that the model not only reaches a zero-shot pass@1 of 0.44 on the in-domain dataset, but also demonstrates certain generalization capabilities on the out-of-domain datasets. Specifically, this paper designs two out-of-domain datasets by separately extending the numerical range and the composing components of the arithmetical puzzle problem. The fine-tuned models show encouraging performance on these two far more difficult tasks, with zero-shot pass@1 of 0.33 and 0.35, respectively.
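The abstract reports results as zero-shot pass@1 but does not say how the metric was estimated. As a minimal sketch, assuming the widely used unbiased pass@k estimator (n samples per problem, c of them correct), the computation could look like the following. The function names `pass_at_k` and `mean_pass_at_1` are illustrative and not taken from the paper.

```python
from math import comb
from typing import Sequence


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem: n samples drawn, c correct."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # comb(n - c, k) / comb(n, k) is the probability that a random
    # size-k subset of the n samples contains no correct answer.
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_1(correct_flags: Sequence[bool]) -> float:
    """Dataset-level pass@1 when each problem receives exactly one answer."""
    if not correct_flags:
        raise ValueError("need at least one problem")
    return sum(1 for flag in correct_flags if flag) / len(correct_flags)
```

With k = 1 the estimator reduces to c / n, so a dataset-level pass@1 of 0.44 would mean that, on average, 44% of single sampled answers are correct.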

POWQMIX: Weighted Value Factorization with Potentially Optimal Joint Actions Recognition for Cooperative Multi-Agent Reinforcement Learning

May 15, 2024

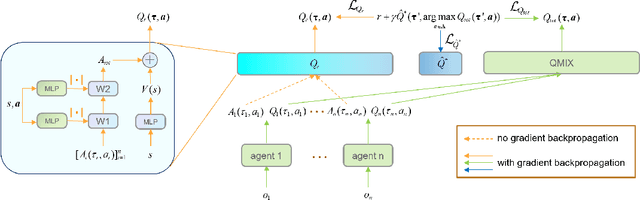

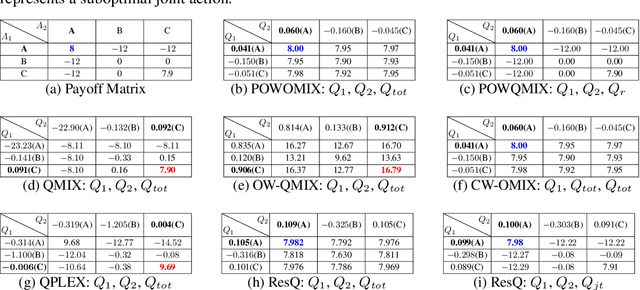

Abstract: Value function factorization methods are commonly used in cooperative multi-agent reinforcement learning, with QMIX receiving significant attention. Many QMIX-based methods introduce monotonicity constraints between the joint action value and individual action values to achieve decentralized execution. However, such constraints limit the representation capacity of value factorization, restricting the joint action values it can represent and hindering the learning of the optimal policy. To address this challenge, we propose the Potentially Optimal joint actions Weighted QMIX (POWQMIX) algorithm, which recognizes the potentially optimal joint actions and assigns higher weights to the corresponding losses of these joint actions during training. We theoretically prove that with such a weighted training approach the optimal policy is guaranteed to be recovered. Experiments in matrix games, predator-prey, and StarCraft II Multi-Agent Challenge environments demonstrate that our algorithm outperforms the state-of-the-art value-based multi-agent reinforcement learning methods.

LOG-LIO2: A LiDAR-Inertial Odometry with Efficient Uncertainty Analysis

May 02, 2024

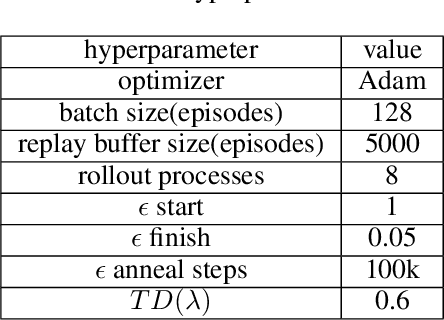

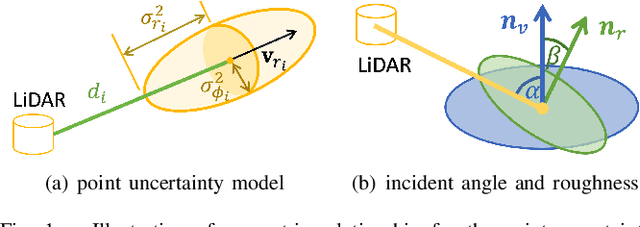

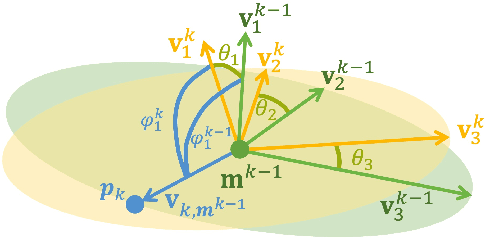

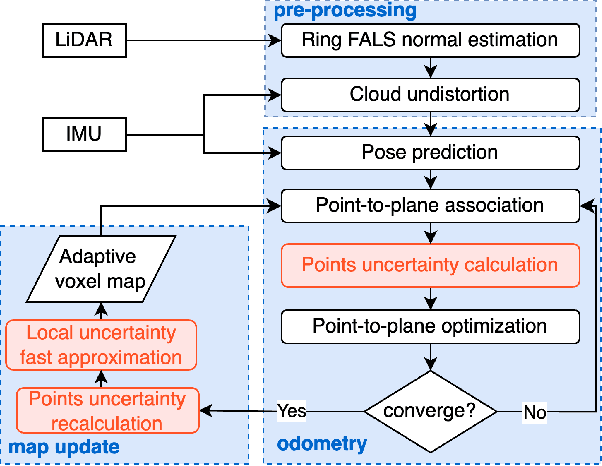

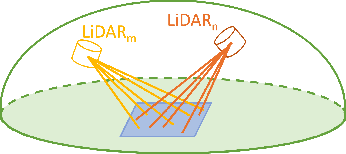

Abstract: Uncertainty in LiDAR measurements, stemming from factors such as range sensing, is crucial for LIO (LiDAR-Inertial Odometry) systems as it affects the accurate weighting in the loss function. While recent LIO systems address uncertainty related to range sensing, the impact of incident angle on uncertainty is often overlooked by the community. Moreover, the existing uncertainty propagation methods suffer from computational inefficiency. This paper proposes a comprehensive point uncertainty model that accounts for both the uncertainties from LiDAR measurements and surface characteristics, along with an efficient local uncertainty analytical method for LiDAR-based state estimation problems. We employ a projection operator that separates the uncertainty into the ray direction and its orthogonal plane. Then, we derive incremental Jacobian matrices of eigenvalues and eigenvectors w.r.t. points, which enables a fast approximation of uncertainty propagation. This approach eliminates the requirement for redundant traversal of points, significantly reducing the time complexity of uncertainty propagation from $\mathcal{O} (n)$ to $\mathcal{O} (1)$ when a new point is added. Simulations and experiments on public datasets are conducted to validate the accuracy and efficiency of our formulations. The proposed methods have been integrated into an LIO system, which is available at https://github.com/tiev-tongji/LOG-LIO2.
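The $\mathcal{O}(n)$ to $\mathcal{O}(1)$ reduction rests on updating quantities incrementally rather than re-traversing all points. The sketch below is not the paper's incremental Jacobian derivation; it illustrates the same general idea on a simpler quantity, assuming a standard Welford-style update of the mean and covariance of 3D points. The class name `RunningCovariance` is hypothetical.

```python
class RunningCovariance:
    """Mean and scatter matrix of 3D points, updated in O(1) per new point."""

    def __init__(self):
        self.n = 0
        self.mean = [0.0, 0.0, 0.0]
        # Sum of outer products of deviations from the mean (3x3 matrix).
        self.scatter = [[0.0] * 3 for _ in range(3)]

    def add(self, p):
        """Fold one point into the statistics without revisiting old points."""
        self.n += 1
        # Deviation from the mean before the update.
        delta_old = [p[i] - self.mean[i] for i in range(3)]
        self.mean = [self.mean[i] + delta_old[i] / self.n for i in range(3)]
        # Deviation from the mean after the update.
        delta_new = [p[i] - self.mean[i] for i in range(3)]
        for i in range(3):
            for j in range(3):
                self.scatter[i][j] += delta_old[i] * delta_new[j]

    def covariance(self):
        """Sample covariance (divides by n - 1)."""
        if self.n < 2:
            raise ValueError("need at least two points")
        return [[s / (self.n - 1) for s in row] for row in self.scatter]
```

Each call to `add` touches only a fixed number of entries, so the cost per new point does not grow with the number of points already accumulated.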

N$^{3}$-Mapping: Normal Guided Neural Non-Projective Signed Distance Fields for Large-scale 3D Mapping

Jan 07, 2024

Abstract: Accurate and dense mapping in large-scale environments is essential for various robot applications. Recently, implicit neural signed distance fields (SDFs) have shown promising advances in this task. However, most existing approaches employ projective distances from range data as SDF supervision, introducing approximation errors and thus degrading the mapping quality. To address this problem, we introduce N$^{3}$-Mapping, an implicit neural mapping system featuring normal-guided neural non-projective signed distance fields. Specifically, we directly sample points along the surface normal, instead of the ray, to obtain more accurate non-projective distance values from range data. These distance values are then used as supervision to train the implicit map. For large-scale mapping, we apply a voxel-oriented sliding window mechanism to alleviate the forgetting issue with a bounded memory footprint. In addition, considering the uneven distribution of measured point clouds, a hierarchical sampling strategy is designed to improve training efficiency. Experiments demonstrate that our method effectively mitigates SDF approximation errors and achieves state-of-the-art mapping quality compared to existing approaches.
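To see why projective distances introduce approximation error, consider a sample on a sensor ray in front of a surface that is assumed to be locally planar. The distance measured along the ray overestimates the perpendicular distance whenever the ray is not aligned with the surface normal. The following is a minimal geometric sketch under that planar assumption; it is not the paper's sampling procedure, and the function names are illustrative.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    norm = math.sqrt(dot(v, v))
    if norm == 0.0:
        raise ValueError("zero-length vector")
    return tuple(x / norm for x in v)


def projective_distance(sample_depth, hit_depth):
    """Distance along the ray from a sample to the measured surface hit."""
    return hit_depth - sample_depth


def planar_true_distance(sample_depth, hit_depth, ray_dir, normal):
    """Perpendicular distance from the same sample to a locally planar surface.

    Assumes the surface near the hit point is a plane with the given normal.
    """
    r = normalize(ray_dir)
    n = normalize(normal)
    # The along-ray offset is scaled by the cosine of the incidence angle.
    return (hit_depth - sample_depth) * abs(dot(r, n))
```

Under this assumption the two distances agree only at normal incidence; at a 60 degree incidence angle the projective distance is twice the perpendicular one.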

Progressive Dual Priori Network for Generalized Breast Tumor Segmentation

Oct 20, 2023

Abstract: To promote the generalization ability of breast tumor segmentation models, as well as to improve the segmentation performance for breast tumors with small size, low contrast, and irregular shape, we propose a progressive dual priori network (PDPNet) to segment breast tumors from dynamic enhanced magnetic resonance images (DCE-MRI) acquired at different sites. The PDPNet first crops tumor regions with a coarse-segmentation based localization module, then progressively refines the breast tumor mask using the weak semantic priori and cross-scale correlation prior knowledge. To validate the effectiveness of PDPNet, we compared it with several state-of-the-art methods on multi-center datasets. The results showed that, compared with the suboptimal method, the DSC, SEN, KAPPA and HD95 of PDPNet improved by 3.63\%, 8.19\%, 5.52\%, and 3.66\%, respectively. In addition, through ablations, we demonstrated that the proposed localization module can decrease the influence of normal tissues and therefore improve the generalization ability of the model. The weak semantic priors allow focusing on tumor regions to avoid missing small tumors and low-contrast tumors. The cross-scale correlation priors are beneficial for promoting the shape-aware ability for irregular tumors. Thus, integrating them in a unified framework improved the multi-center breast tumor segmentation performance.
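DSC in the reported metrics is the Dice similarity coefficient, $2|A \cap B| / (|A| + |B|)$ for a predicted mask $A$ and a reference mask $B$. A minimal sketch on flattened binary masks is shown below; the function name and the convention of returning 1.0 when both masks are empty are assumptions, not details from the paper.

```python
def dice_coefficient(pred, target):
    """Dice similarity coefficient between two flattened binary masks."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of voxels")
    # Voxels labeled foreground in both masks.
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    # Total foreground voxels across both masks.
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    if total == 0:
        # Assumed convention: two empty masks count as a perfect match.
        return 1.0
    return 2.0 * intersection / total
```

For example, a prediction `[1, 1, 0, 0]` against a reference `[1, 0, 1, 0]` shares one foreground voxel out of four labeled in total, giving a DSC of 0.5.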

How to Fine-tune the Model: Unified Model Shift and Model Bias Policy Optimization

Sep 22, 2023

Abstract: Designing and deriving effective model-based reinforcement learning (MBRL) algorithms with a performance improvement guarantee is challenging, mainly because of the high coupling between model learning and policy optimization. Many prior methods that rely on return discrepancy to guide model learning ignore the impacts of model shift, which can lead to performance deterioration due to excessive model updates. Other methods use a performance difference bound to explicitly consider model shift. However, these methods rely on a fixed threshold to constrain model shift, resulting in a heavy dependence on the threshold and a lack of adaptability during the training process. In this paper, we theoretically derive an optimization objective that can unify model shift and model bias and then formulate a fine-tuning process. This process adaptively adjusts the model updates to get a performance improvement guarantee while avoiding model overfitting. Based on these, we develop a straightforward algorithm, USB-PO (Unified model Shift and model Bias Policy Optimization). Empirical results show that USB-PO achieves state-of-the-art performance on several challenging benchmark tasks.