Alessandro Saffiotti

Planning for Learning Object Properties

Jan 15, 2023

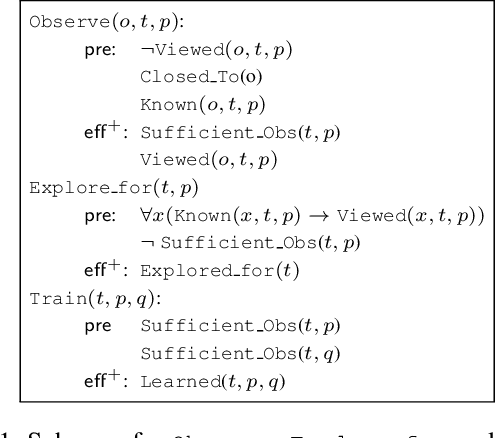

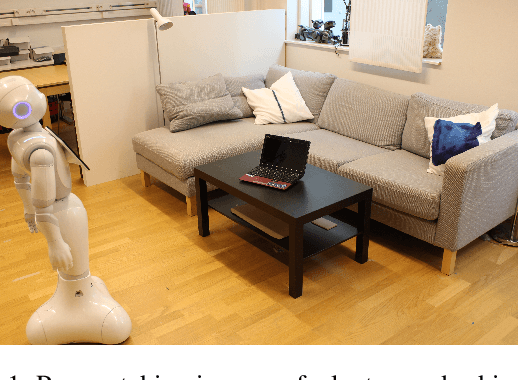

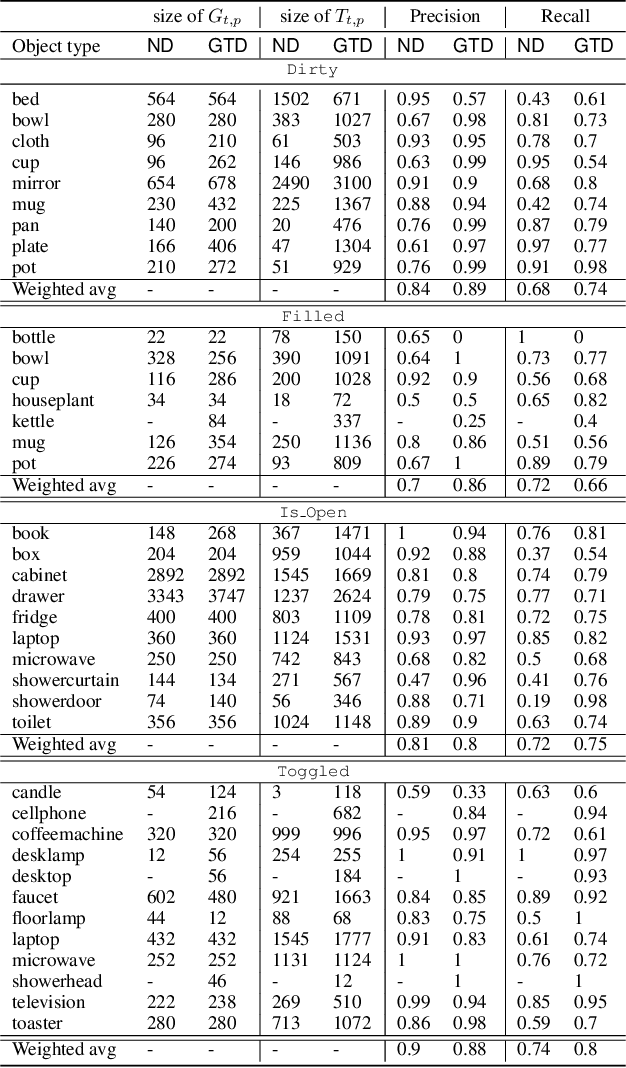

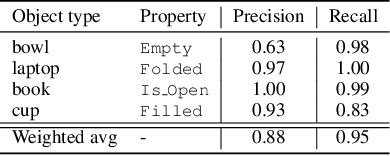

Abstract: Autonomous agents embedded in a physical environment need the ability to recognize objects and their properties from sensory data. Such a perceptual ability is often implemented by supervised machine learning models, which are pre-trained using a set of labelled data. In real-world, open-ended deployments, however, it is unrealistic to assume that a pre-trained model is available for all possible environments. Therefore, agents need to dynamically learn, adapt, and extend their perceptual abilities online, in an autonomous way, by exploring and interacting with the environment where they operate. This paper describes a way to do so, by exploiting symbolic planning. Specifically, we formalize the problem of automatically training a neural network to recognize object properties as a symbolic planning problem (using PDDL). We use planning techniques to produce a strategy for automating the training dataset creation and the learning process. Finally, we provide an experimental evaluation in both a simulated and a real environment, which shows that the proposed approach is able to successfully learn how to recognize new object properties.

Two ways to make your robot proactive: reasoning about human intentions, or reasoning about possible futures

May 11, 2022

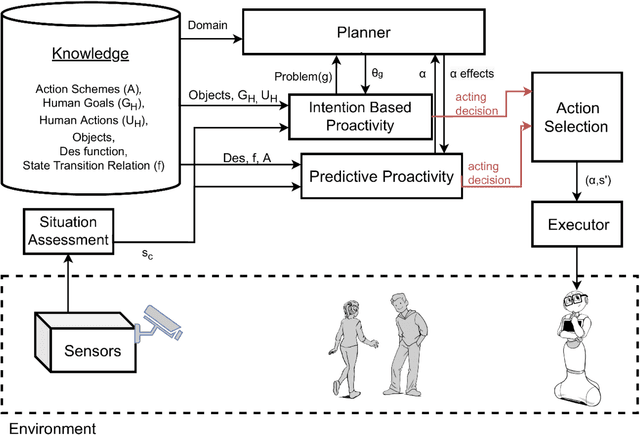

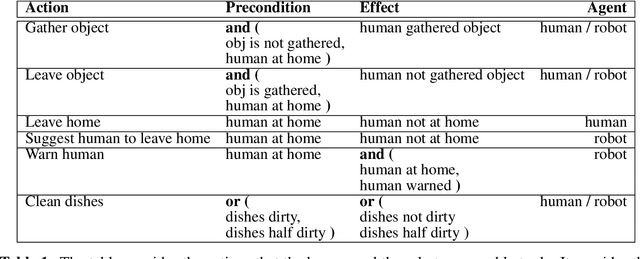

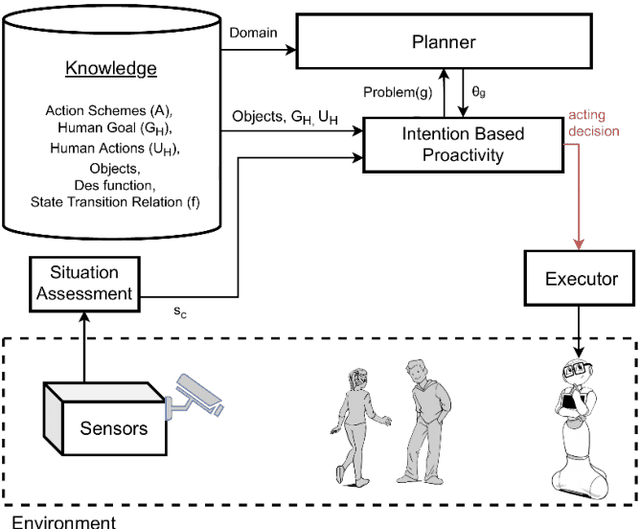

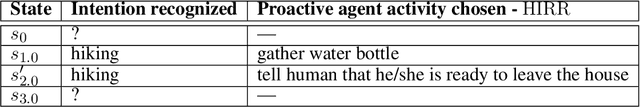

Abstract: Robots sharing their space with humans need to be proactive in order to be helpful. Proactive robots are able to act on their own initiative in an anticipatory way to benefit humans. In this work, we investigate two ways to make robots proactive. One way is to recognize humans' intentions and to act to fulfill them, like opening the door that you are about to cross. The other way is to reason about possible future threats or opportunities and to act to prevent or to foster them, like recommending that you take an umbrella since rain has been forecast. In this paper, we present approaches to realize these two types of proactive behavior. We then present an integrated system that can generate proactive robot behavior by reasoning on both factors: intentions and predictions. We illustrate our system on a sample use case including a domestic robot and a human. We first run this use case with the two separate proactive systems, intention-based and prediction-based, and then run it with our integrated system. The results show that the integrated system is able to take into account a broader variety of aspects that are needed for proactivity.

Composing Complex and Hybrid AI Solutions

Feb 25, 2022

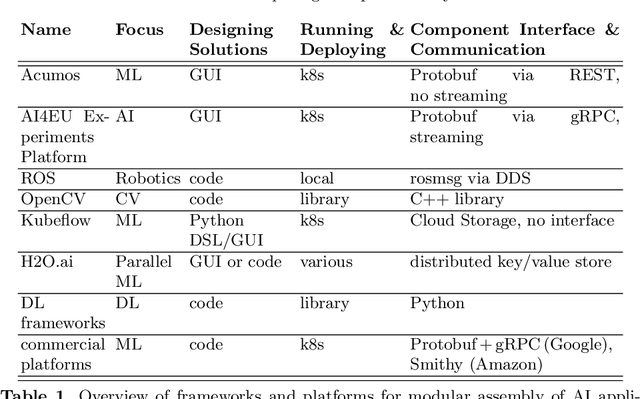

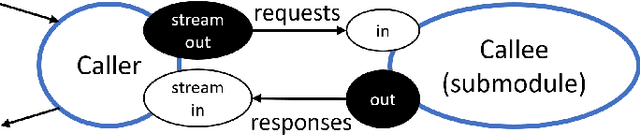

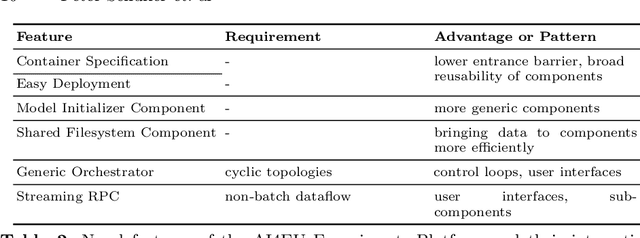

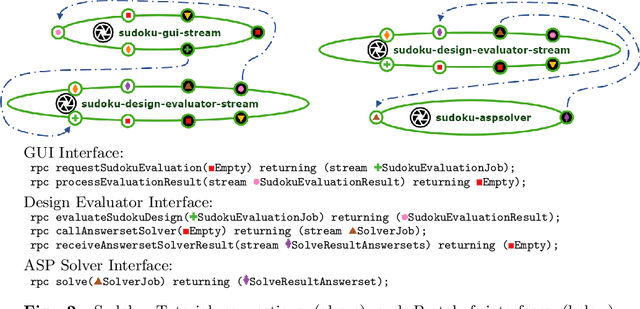

Abstract: Progress in several areas of computer science has been enabled by comfortable and efficient means of experimentation, clear interfaces, and interchangeable components, for example using OpenCV for computer vision or ROS for robotics. We describe an extension of the Acumos system towards enabling the above features for general AI applications. Originally, Acumos was created for telecommunication purposes, mainly for creating linear pipelines of machine learning components. Our extensions include support for more generic components with gRPC/Protobuf interfaces, automatic orchestration of graphically assembled solutions including control loops, sub-component topologies, and event-based communication, and provisions for assembling solutions which contain user interfaces and shared storage areas. We provide examples of deployable solutions and their interfaces. The framework is deployed at http://aiexp.ai4europe.eu/ and its source code is managed as an open source Eclipse project.

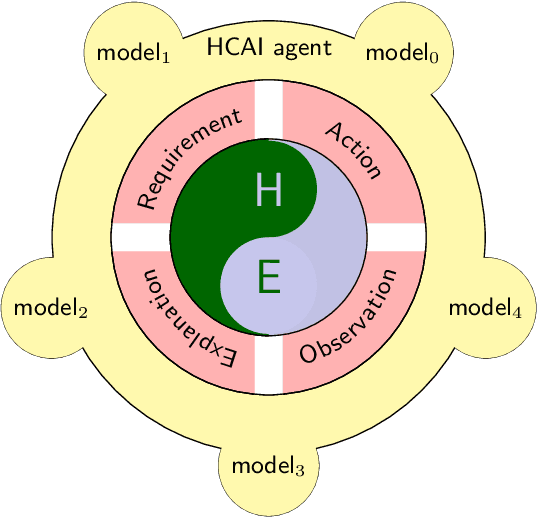

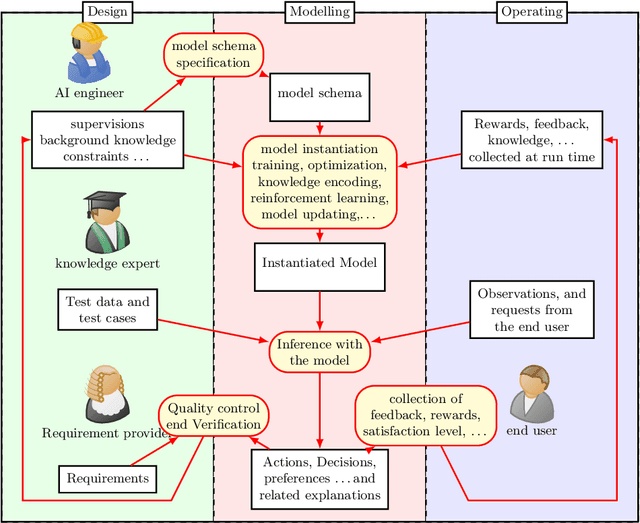

On some Foundational Aspects of Human-Centered Artificial Intelligence

Dec 29, 2021

Abstract: The burgeoning of AI has prompted recommendations that AI techniques should be "human-centered". However, there is no clear definition of what is meant by Human Centered Artificial Intelligence, or for short, HCAI. This paper aims to improve this situation by addressing some foundational aspects of HCAI. To do so, we introduce the term HCAI agent to refer to any physical or software computational agent equipped with AI components and that interacts and/or collaborates with humans. This article identifies five main conceptual components that participate in an HCAI agent: Observations, Requirements, Actions, Explanations and Models. We see the notion of HCAI agent, together with its components and functions, as a way to bridge the technical and non-technical discussions on human-centered AI. In this paper, we focus our analysis on scenarios consisting of a single agent operating in dynamic environments in the presence of humans.

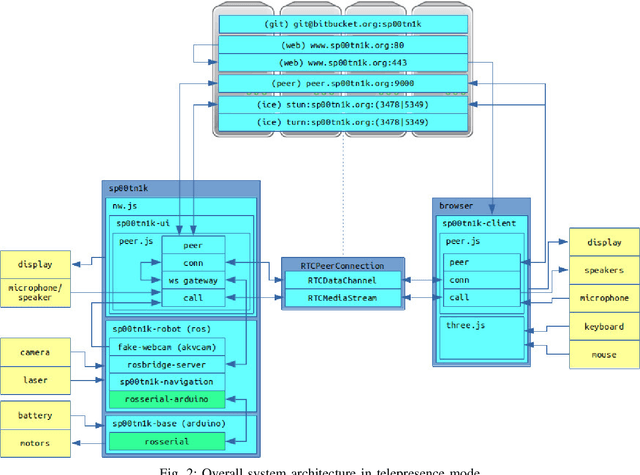

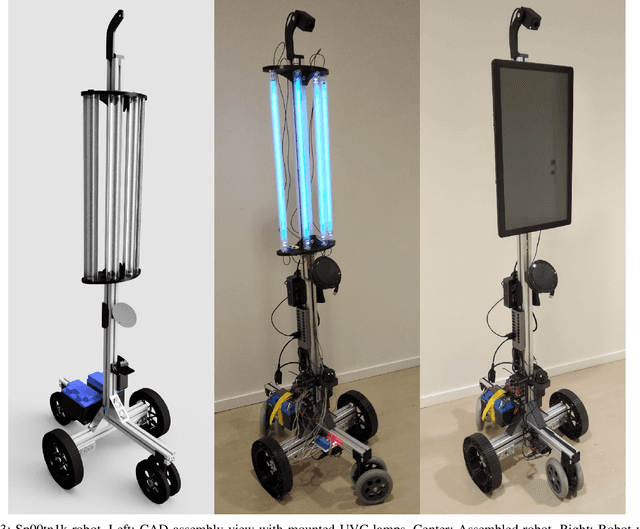

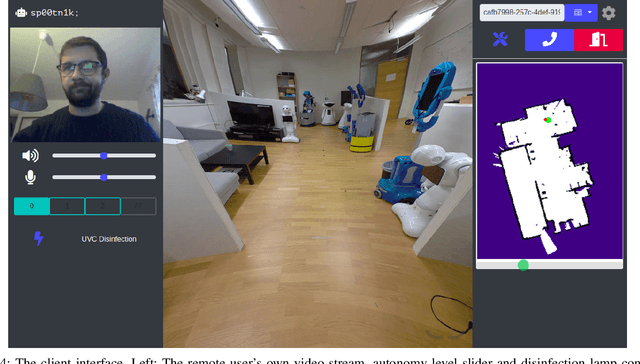

An Open-Source Modular Robotic System for Telepresence and Remote Disinfection

Feb 02, 2021

Abstract: In a pandemic, contact between humans needs to be avoided wherever possible. Robots can take over an increasing number of tasks to protect people from being exposed to others. One such task is the disinfection of environments in which infection spread is particularly likely or bears increased risks. It has been shown that UVC light is effective in neutralizing a variety of pathogens, among others the virus causing COVID-19, SARS-CoV-2. Another function which can reduce the need for physical proximity between humans is interaction via telepresence, i.e., the remote embodiment of a person controlling the robot. This work presents a modular mobile robot for telepresence and disinfection with UVC lamps. Both operation modes are supported by adaptable autonomy navigation features for facilitating efficient task execution. The platform's primary contributions are its hardware and software design, which combine consumer-grade components and 3D-printed mounting with open-source software frameworks.

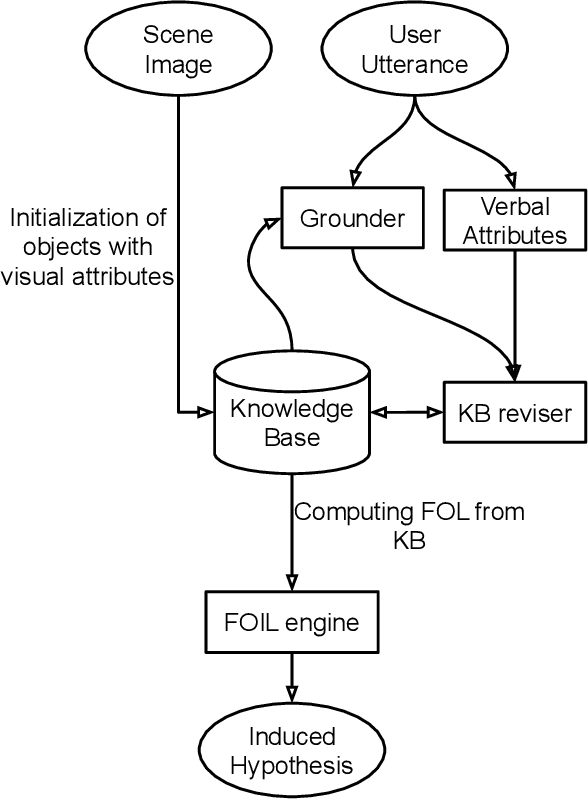

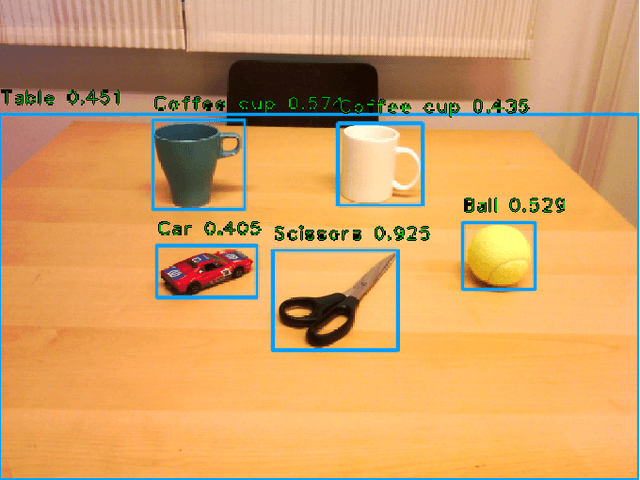

Towards Abstract Relational Learning in Human Robot Interaction

Nov 20, 2020

Abstract: Humans have a rich representation of the entities in their environment. Entities are described by their attributes, and entities that share attributes are often semantically related. For example, if two books have "Natural Language Processing" as the value of their 'title' attribute, we can expect that their 'topic' attribute will also be equal, namely, "NLP". Humans tend to generalize such observations, and infer sufficient conditions under which the 'topic' attribute of any entity is "NLP". If robots need to interact successfully with humans, they need to represent entities, attributes, and generalizations in a similar way. This results in a contextualized cognitive agent that can adapt its understanding, where context provides sufficient conditions for a correct understanding. In this work, we address the problem of how to obtain these representations through human-robot interaction. We integrate visual perception and natural language input to incrementally build a semantic model of the world, and then use inductive reasoning to infer logical rules that capture generic semantic relations, true in this model. These relations can be used to enrich the human-robot interaction, to populate a knowledge base with inferred facts, or to remove uncertainty in the robot's sensory inputs.

Robby is Not a Robber: On the Use of Institutions for Learning Normative Behavior

Aug 01, 2019

Abstract: Future robots should follow human social norms in order to be useful and accepted in human society. In this paper, we leverage already existing social knowledge in human societies by capturing it in our framework through the notion of social norms. We show how norms can be used to guide a reinforcement learning agent towards achieving normative behavior and apply the same set of norms over different domains. Thus, we are able to: (1) provide a way to intuitively encode social knowledge (through norms); (2) guide learning towards normative behaviors (through an automatic norm reward system); and (3) achieve a transfer of learning by abstracting policies. Finally, (4) the method is not dependent on a particular RL algorithm. We show how our approach can be seen as a means to achieve abstract representation and learn procedural knowledge based on the declarative semantics of norms and discuss possible implications of this in some areas of cognitive science.

Anticipation in collaborative music performance using fuzzy systems: a case study

Jun 05, 2019

Abstract: In order to collaborate and co-create with humans, an AI system must be capable of both reactive and anticipatory behavior. We present a case study of such a system in the domain of musical improvisation. We consider a duo consisting of a human pianist accompanied by an off-the-shelf virtual drummer, and we design an AI system to control the performance parameters of the drummer (e.g., patterns, intensity, or complexity) as a function of what the human pianist is playing. The AI system utilizes a model elicited from the musicians and encoded through fuzzy logic. This paper outlines the methodology, design, and development process of this system. An evaluation in public concerts is upcoming. This case study is seen as a step in the broader investigation of anticipation and creative processes in mixed human-robot, or "anthrobotic" systems.

Learning from Implicit Information in Natural Language Instructions for Robotic Manipulations

Apr 30, 2019

Abstract: Human-robot interaction often occurs in the form of instructions given from a human to a robot. For a robot to successfully follow instructions, a common representation of the world and objects in it should be shared between humans and the robot so that the instructions can be grounded. Achieving this representation can be done via learning, where both the world representation and the language grounding are learned simultaneously. However, in robotics this can be a difficult task due to the cost and scarcity of data. In this paper, we tackle the problem by separately learning the world representation of the robot and the language grounding. While this approach can address the challenges in getting sufficient data, it may give rise to inconsistencies between the two learned components. Therefore, we further propose Bayesian learning to resolve such inconsistencies between the natural language grounding and a robot's world representation by exploiting spatio-relational information that is implicitly present in instructions given by a human. Moreover, we demonstrate the feasibility of our approach on a scenario involving a robotic arm in the physical world.

Norms, Institutions, and Robots

Jul 30, 2018

Abstract: Interactions within human societies are usually regulated by social norms. If robots are to be accepted into human society, it is essential that they are aware of and capable of reasoning about social norms. In this paper, we focus on how to represent social norms in societies with humans and robots, and how artificial agents such as robots can reason about social norms in order to plan appropriate behavior. We use the notion of institution as a way to formally define and encapsulate norms. We provide a formal framework built around the notion of institution. The framework distinguishes between abstract norms and their semantics in a concrete domain, hence allowing the use of the same institution across physical domains and agent types. It also provides a formal computational framework for norm verification, planning, and plan execution in a domain.
