Amy Loutfi

Örebro University, Örebro, Sweden

Towards a Comprehensive Theory of Reservoir Computing

Nov 18, 2025

Abstract: In reservoir computing, an input sequence is processed by a recurrent neural network, the reservoir, which transforms it into a spatial pattern that a shallow readout network can then exploit for tasks such as memorization and time-series prediction or classification. Echo state networks (ESNs) are a model class in which the reservoir is a traditional artificial neural network. This class contains many model types, each with its own set of hyperparameters. Selecting models and parameter settings for particular applications requires a theory for predicting and comparing performances. Here, we demonstrate that recent developments of perceptron theory can be used to predict the memory capacity and accuracy of a wide variety of ESN models, including reservoirs with linear neurons, sigmoid nonlinear neurons, different types of recurrent matrices, and different types of readout networks. Across thirty variants of ESNs, we show that empirical results consistently confirm the theory's predictions. As a practical demonstration, the theory is used to optimize the memory capacity of an ESN over the entire joint parameter space. Further, guided by the theory, we propose a novel ESN model with a readout network that does not require training, and which outperforms earlier ESN models without training. Finally, we characterize the geometry of the readout networks in ESNs, which reveals that many ESN models exhibit a regular simplex geometry similar to that observed in the output weights of deep neural networks.

Efficient Hyperdimensional Computing with Modular Composite Representations

Nov 12, 2025

Abstract: The modular composite representation (MCR) is a computing model that represents information with high-dimensional integer vectors using modular arithmetic. Originally proposed as a generalization of the binary spatter code model, it aims to provide higher representational power while remaining a lighter alternative to models requiring high-precision components. Despite this potential, MCR has received limited attention. Systematic analyses of its trade-offs and comparisons with other models are lacking, sustaining the perception that its added complexity outweighs the improved expressivity. In this work, we revisit MCR by presenting its first extensive evaluation, demonstrating that it achieves a unique balance of capacity, accuracy, and hardware efficiency. Experiments measuring capacity demonstrate that MCR outperforms binary and integer vectors while approaching complex-valued representations at a fraction of their memory footprint. Evaluation on 123 datasets confirms consistent accuracy gains and shows that MCR can match the performance of binary spatter codes using up to 4x less memory. We investigate the hardware realization of MCR by showing that it maps naturally to digital logic and by designing the first dedicated accelerator. Evaluations on basic operations and 7 selected datasets demonstrate a speedup of up to 3 orders of magnitude and significant energy reductions compared to a software implementation. When matched for accuracy against binary spatter codes, MCR achieves on average 3.08x faster execution and 2.68x lower energy consumption. These findings demonstrate that, although MCR requires more sophisticated operations than binary spatter codes, its modular arithmetic and higher per-component precision enable lower dimensionality. When realized with dedicated hardware, this results in a faster, more energy-efficient, and high-precision alternative to existing models.
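To make the idea of integer vectors with modular arithmetic concrete, here is a minimal sketch of binding and unbinding in an MCR-style model. It is an illustration under stated assumptions, not the paper's implementation: binding is taken to be component-wise addition modulo `modulus`, unbinding the matching subtraction, and dissimilarity a circular Manhattan distance. The function names, the dimension of 1024, and the modulus of 16 are all hypothetical choices, and bundling is omitted.

```python
import random

def random_vector(dim, modulus, rng):
    """Draw a random integer vector with components in [0, modulus)."""
    return [rng.randrange(modulus) for _ in range(dim)]

def bind(a, b, modulus):
    """Bind two vectors by component-wise modular addition (assumed operation)."""
    return [(x + y) % modulus for x, y in zip(a, b)]

def unbind(c, a, modulus):
    """Invert bind() by component-wise modular subtraction."""
    return [(x - y) % modulus for x, y in zip(c, a)]

def distance(a, b, modulus):
    """Circular Manhattan distance: per-component shortest way around the ring."""
    total = 0
    for x, y in zip(a, b):
        d = (x - y) % modulus
        total += min(d, modulus - d)
    return total

# Hypothetical parameters chosen only for this illustration.
DIM, MOD = 1024, 16
rng = random.Random(0)

role = random_vector(DIM, MOD, rng)
filler = random_vector(DIM, MOD, rng)

bound = bind(role, filler, MOD)        # role-filler pair
recovered = unbind(bound, role, MOD)   # query the pair with the role
```

Because modular subtraction exactly undoes modular addition, `recovered` equals `filler` component for component, while `bound` is far from both of its inputs under `distance`.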

Relative Navigation and Dynamic Target Tracking for Autonomous Underwater Proximity Operations

Aug 23, 2025

Abstract: Estimating a target's 6-DoF motion in underwater proximity operations is difficult because the chaser lacks target-side proprioception and the available relative observations are sparse, noisy, and often partial (e.g., Ultra-Short Baseline (USBL) positions). Without a motion prior, factor-graph maximum a posteriori estimation is underconstrained: consecutive target states are weakly linked and orientation can drift. We propose a generalized constant-twist motion prior defined on the tangent space of Lie groups that enforces temporally consistent trajectories across all degrees of freedom; in SE(3) it couples translation and rotation in the body frame. We present a ternary factor and derive its closed-form Jacobians based on standard Lie group operations, enabling drop-in use for trajectories on arbitrary Lie groups. We evaluate two deployment modes: (A) an SE(3)-only representation that regularizes orientation even when only position is measured, and (B) a mode with boundary factors that switches the target representation between SE(3) and 3D position while applying the same generalized constant-twist prior across representation changes. Validation on a real-world dynamic docking scenario dataset shows consistent ego-target trajectory estimation through USBL-only and optical relative measurement segments, with improved relative tracking accuracy compared to the noisy measurements of the target. Because the construction relies on standard Lie group primitives, it is portable across state manifolds and sensing modalities.

Interactive Double Deep Q-network: Integrating Human Interventions and Evaluative Predictions in Reinforcement Learning of Autonomous Driving

Apr 28, 2025

Abstract: Integrating human expertise with machine learning is crucial for applications demanding high accuracy and safety, such as autonomous driving. This study introduces Interactive Double Deep Q-network (iDDQN), a Human-in-the-Loop (HITL) approach that enhances Reinforcement Learning (RL) by merging human insights directly into the RL training process, improving model performance. Our proposed iDDQN method modifies the Q-value update equation to integrate human and agent actions, establishing a collaborative approach for policy development. Additionally, we present an offline evaluative framework that simulates the agent's trajectory as if no human intervention had occurred, to assess the effectiveness of human interventions. Empirical results in simulated autonomous driving scenarios demonstrate that iDDQN outperforms established approaches, including Behavioral Cloning (BC), HG-DAgger, Deep Q-Learning from Demonstrations (DQfD), and vanilla DRL in leveraging human expertise for improving performance and adaptability.

Evaluation of Coordination Strategies for Underground Automated Vehicle Fleets in Mixed Traffic

Apr 27, 2025

Abstract: This study investigates the efficiency and safety outcomes of implementing different adaptive coordination models for automated vehicle (AV) fleets, managed by a centralized coordinator that dynamically responds to human-controlled vehicle behavior. The simulated scenarios replicate an underground mining environment characterized by narrow tunnels with limited connectivity. To address the unique challenges of such settings, we propose a novel metric, Path Overlap Density (POD), to predict the efficiency and potentially the safety performance of AV fleets. The study also explores the impact of map features on AV fleet performance. The results demonstrate that both AV fleet coordination strategies and underground tunnel network characteristics significantly influence overall system performance. While map features are critical for optimizing efficiency, adaptive coordination strategies are essential for ensuring safe operations.

REvolve: Reward Evolution with Large Language Models for Autonomous Driving

Jun 03, 2024

Abstract: Designing effective reward functions is crucial to training reinforcement learning (RL) algorithms. However, this design is non-trivial, even for domain experts, due to the subjective nature of certain tasks that are hard to quantify explicitly. In recent works, large language models (LLMs) have been used for reward generation from natural language task descriptions, leveraging their extensive instruction tuning and commonsense understanding of human behavior. In this work, we hypothesize that LLMs, guided by human feedback, can be used to formulate human-aligned reward functions. Specifically, we study this in the challenging setting of autonomous driving (AD), wherein notions of "good" driving are tacit and hard to quantify. To this end, we introduce REvolve, an evolutionary framework that uses LLMs for reward design in AD. REvolve creates and refines reward functions by utilizing human feedback to guide the evolution process, effectively translating implicit human knowledge into explicit reward functions for training (deep) RL agents. We demonstrate that agents trained on REvolve-designed rewards align closely with human driving standards, thereby outperforming other state-of-the-art baselines.

Cybersecurity and Embodiment Integrity for Modern Robots: A Conceptual Framework

Jan 15, 2024

Abstract: Modern robots are stepping away from monolithic entities built using ad-hoc sensors and actuators, due to new technologies and communication paradigms, such as the Internet of Things (IoT) and the Robot Operating System (ROS). Using such paradigms, robots can be built by acquiring heterogeneous standard devices and putting them in communication with each other. This approach brings high degrees of modularity, but it also makes it uncertain whether cybersecurity assurances and guarantees on the integrity of the embodiment can be provided. In this paper, we first illustrate how cyberattacks on different devices can have radically different consequences on the robot's ability to complete its tasks and preserve its embodiment. We also claim that modern robots should be self-aware with respect to such aspects, and formulate the different characteristics that robots should integrate to do so. Then, we show that achieving these propositions requires that robots possess at least three properties that conceptually link devices and tasks. Last, we reflect on how these three properties could be achieved in a larger conceptual framework.

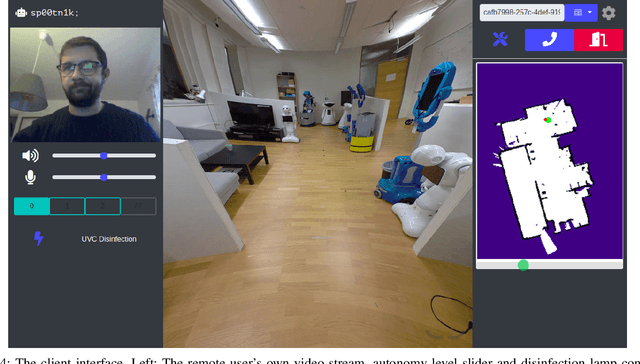

Levels of Automation for a Mobile Robot Teleoperated by a Caregiver

Jul 21, 2021

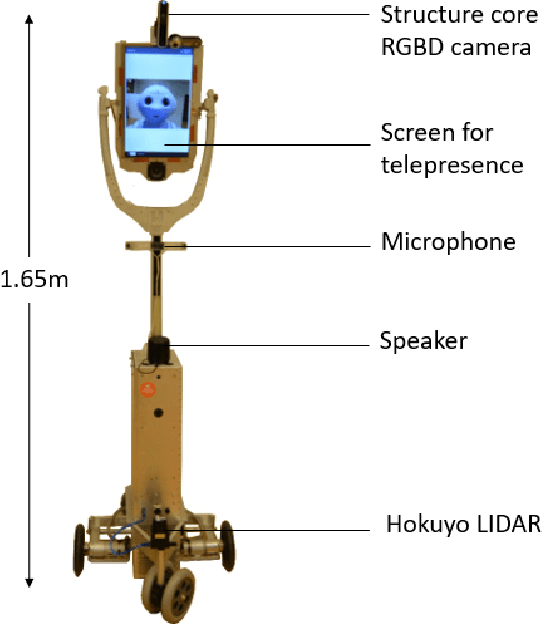

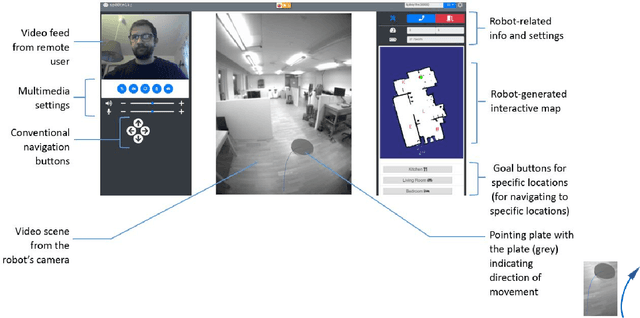

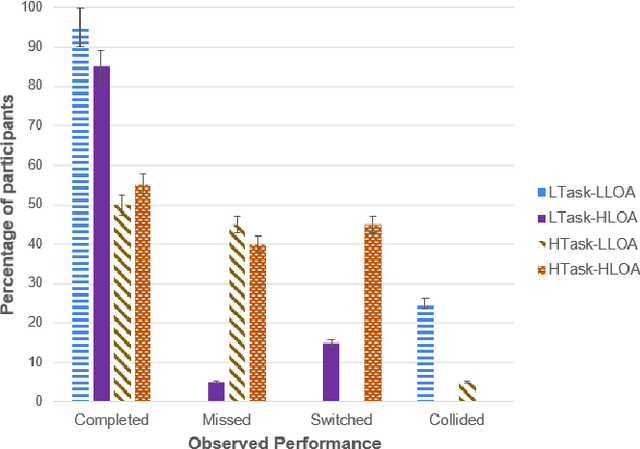

Abstract: Caregivers in eldercare can benefit from telepresence robots that allow them to perform a variety of tasks remotely. In order for such robots to be operated effectively and efficiently by non-technical users, it is important to examine if and how the robotic system's level of automation (LOA) impacts their performance. The objective of this work was to develop suitable LOA modes for a mobile robotic telepresence (MRP) system for eldercare and assess their influence on users' performance, workload, awareness of the environment and usability at two different levels of task complexity. For this purpose, two LOA modes were implemented on the MRP platform: assisted teleoperation (low LOA mode) and autonomous navigation (high LOA mode). The system was evaluated in a user study with 20 participants, who, in the role of the caregiver, navigated the robot through a home-like environment to perform various control and perception tasks. Results revealed that performance improved at high LOA when the task complexity was low. However, when task complexity increased, lower LOA improved performance. This opposite trend was also observed in the results for workload and situation awareness. We discuss the results in terms of the LOAs' impact on users' attitude towards automation and implications for usability.

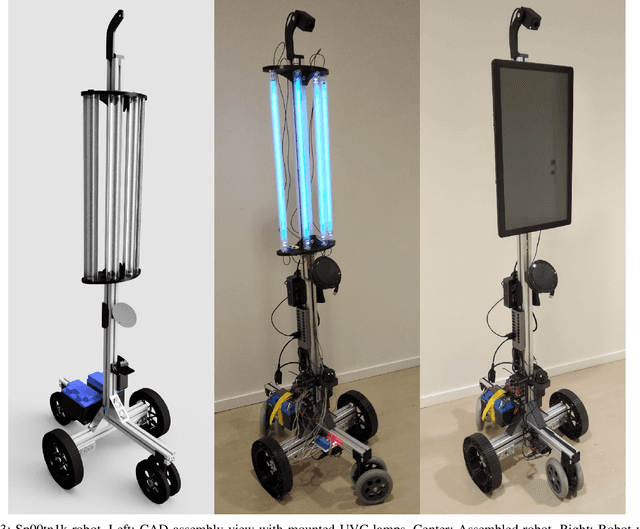

An Open-Source Modular Robotic System for Telepresence and Remote Disinfection

Feb 02, 2021

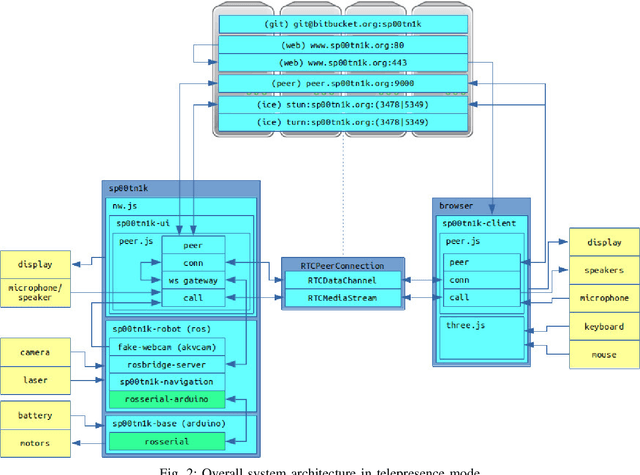

Abstract: In a pandemic, contact between humans needs to be avoided wherever possible. Robots can take over an increasing number of tasks to protect people from being exposed to others. One such task is the disinfection of environments in which infection spread is particularly likely or bears increased risks. It has been shown that UVC light is effective in neutralizing a variety of pathogens, including SARS-CoV-2, the virus that causes COVID-19. Another function which can reduce the need for physical proximity between humans is interaction via telepresence, i.e., the remote embodiment of a person controlling the robot. This work presents a modular mobile robot for telepresence and disinfection with UVC lamps. Both operation modes are supported by adaptable autonomy navigation features for facilitating efficient task execution. The platform's primary contributions are its hardware and software design, which combine consumer-grade components and 3D-printed mounting with open-source software frameworks.

Reinforcement Learning Approaches in Social Robotics

Sep 21, 2020

Abstract: There is a growing body of literature that formulates social human-robot interactions as sequential decision-making tasks. In such cases, reinforcement learning arises naturally, since interaction is a key component in both reinforcement learning and social robotics. This article surveys reinforcement learning approaches in social robotics. We propose a taxonomy that categorizes reinforcement learning methods in social robotics according to the nature of the reward function. We discuss the benefits and challenges of such methods and outline possible future directions.
