Zihui Zhang

LogoSP: Local-global Grouping of Superpoints for Unsupervised Semantic Segmentation of 3D Point Clouds

Jun 09, 2025

Abstract:We study the problem of unsupervised 3D semantic segmentation on raw point clouds without needing human labels in training. Existing methods usually formulate this problem as learning per-point local features followed by a simple grouping strategy, lacking the ability to discover additional and possibly richer semantic priors beyond local features. In this paper, we introduce LogoSP to learn 3D semantics from both local and global point features. The key to our approach is to discover 3D semantic information by grouping superpoints according to their global patterns in the frequency domain, thus generating highly accurate semantic pseudo-labels for training a segmentation network. Extensive experiments on two indoor datasets and one outdoor dataset show that LogoSP surpasses all existing unsupervised methods by large margins, achieving state-of-the-art performance for unsupervised 3D semantic segmentation. Notably, our investigation into the learned global patterns reveals that they truly represent meaningful 3D semantics in the absence of human labels during training.

GrabS: Generative Embodied Agent for 3D Object Segmentation without Scene Supervision

Apr 16, 2025

Abstract:We study the hard problem of 3D object segmentation in complex point clouds without requiring human labels of 3D scenes for supervision. By relying on the similarity of pretrained 2D features or external signals such as motion to group 3D points as objects, existing unsupervised methods are usually limited to identifying simple objects like cars, or their segmented objects are often inferior due to the lack of objectness in pretrained features. In this paper, we propose a new two-stage pipeline called GrabS. The core concept of our method is to learn generative and discriminative object-centric priors as a foundation from object datasets in the first stage, and then design an embodied agent to learn to discover multiple objects by querying against the pretrained generative priors in the second stage. We extensively evaluate our method on two real-world datasets and a newly created synthetic dataset, demonstrating remarkable segmentation performance, clearly surpassing all existing unsupervised methods.

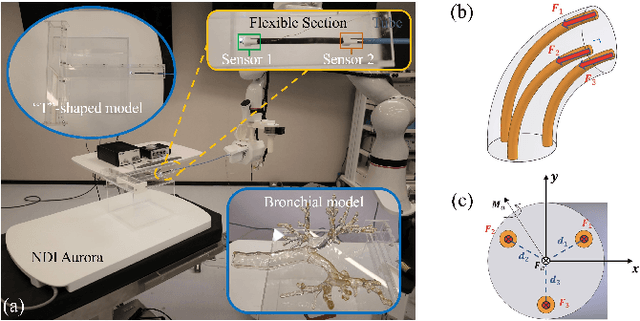

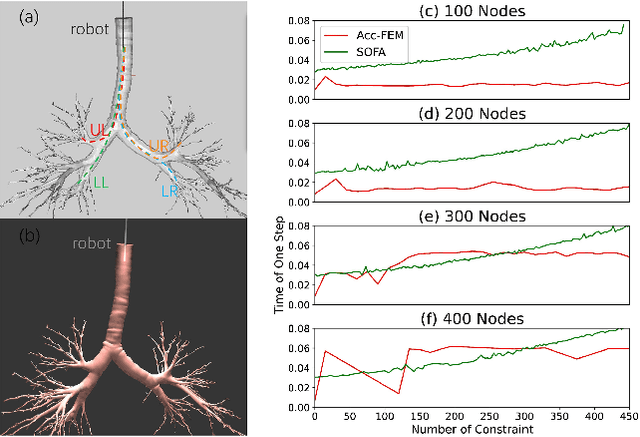

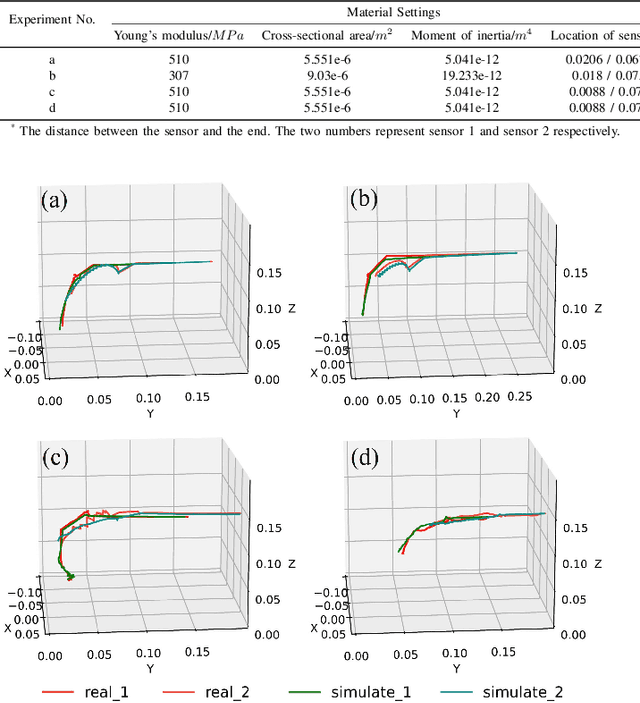

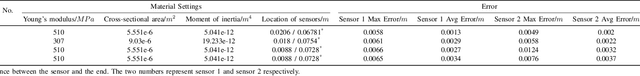

Accelerated Quasi-Static FEM for Real-Time Modeling of Continuum Robots with Multiple Contacts and Large Deformation

Mar 10, 2025

Abstract:Continuum robots offer high flexibility and multiple degrees of freedom, making them ideal for navigating narrow lumens. However, accurately modeling their behavior under large deformations and frequent environmental contacts remains challenging. Current methods for solving the deformation of these robots, such as the Model Order Reduction and Gauss-Seidel (GS) methods, suffer from significant drawbacks. They experience reduced computational speed as the number of contact points increases and struggle to balance speed with model accuracy. To overcome these limitations, we introduce a novel finite element method (FEM) named Acc-FEM. Acc-FEM employs a large deformation quasi-static finite element model and integrates an accelerated solver scheme to handle multi-contact simulations efficiently. Additionally, it utilizes parallel computing with Graphics Processing Units (GPU) for real-time updates of the finite element models and collision detection. Extensive numerical experiments demonstrate that Acc-FEM significantly improves computational efficiency in modeling continuum robots with multiple contacts while achieving satisfactory accuracy, addressing the deficiencies of existing methods.

BATseg: Boundary-aware Multiclass Spinal Cord Tumor Segmentation on 3D MRI Scans

Dec 09, 2024

Abstract:Spinal cord tumors significantly contribute to neurological morbidity and mortality. Precise morphometric quantification, encompassing the size, location, and type of such tumors, holds promise for optimizing treatment planning strategies. Although recent methods have demonstrated excellent performance in medical image segmentation, they primarily focus on discerning shapes with relatively large morphology such as brain tumors, ignoring the challenging problem of identifying spinal cord tumors, which tend to have tiny sizes and diverse locations and shapes. To tackle this hard problem of multiclass spinal cord tumor segmentation, we propose a new method, called BATseg, to learn a tumor surface distance field by applying our new multiclass boundary-aware loss function. To verify the effectiveness of our approach, we also introduce the first large-scale spinal cord tumor dataset. It comprises gadolinium-enhanced T1-weighted 3D MRI scans from 653 patients and contains the four most common spinal cord tumor types: astrocytomas, ependymomas, hemangioblastomas, and spinal meningiomas. Extensive experiments on our dataset and another public kidney tumor segmentation dataset show that our proposed method achieves superior performance for multiclass tumor segmentation.

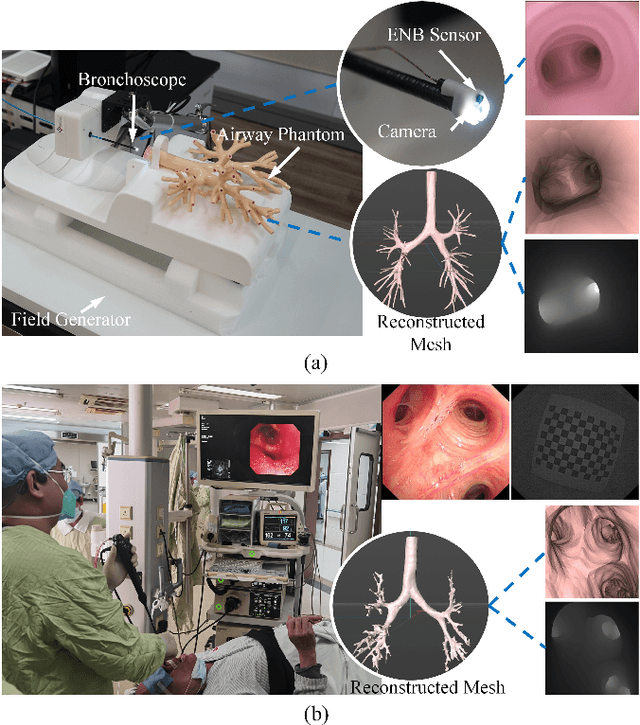

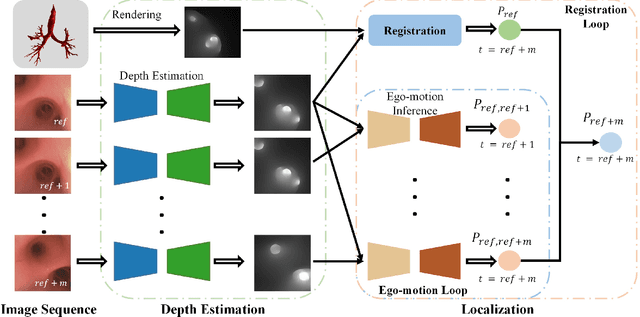

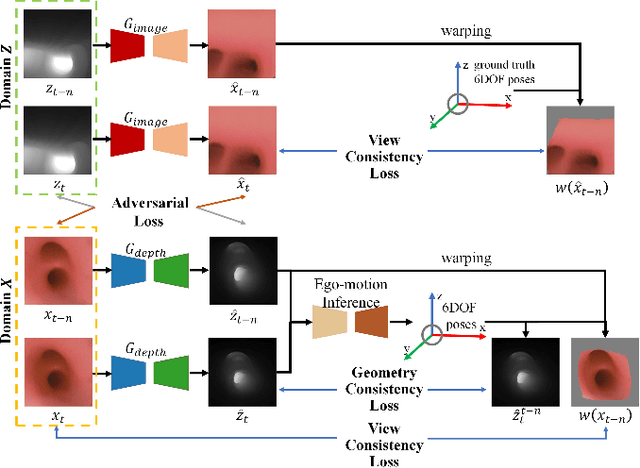

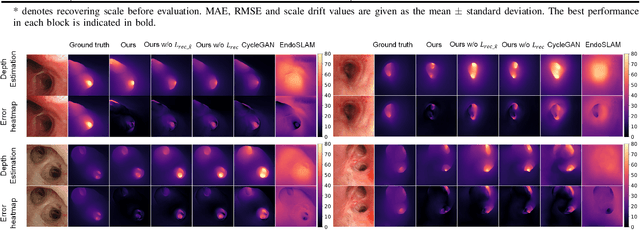

DD-VNB: A Depth-based Dual-Loop Framework for Real-time Visually Navigated Bronchoscopy

Mar 15, 2024

Abstract:Real-time 6 DOF localization of bronchoscopes is crucial for enhancing intervention quality. However, current vision-based technologies struggle to balance generalization to unseen data against computational speed. In this study, we propose a Depth-based Dual-Loop framework for real-time Visually Navigated Bronchoscopy (DD-VNB) that can generalize across patient cases without the need for retraining. The DD-VNB framework integrates two key modules: depth estimation and dual-loop localization. To address the domain gap among patients, we propose a knowledge-embedded depth estimation network that maps endoscope frames to depth, ensuring generalization by eliminating patient-specific textures. The network embeds view synthesis knowledge into a cycle adversarial architecture for scale-constrained monocular depth estimation. For real-time performance, our localization module embeds a fast ego-motion estimation network into the loop of depth registration. The ego-motion inference network estimates the pose change of the bronchoscope at high frequency, while depth registration against the pre-operative 3D model provides the absolute pose periodically. Specifically, the relative pose changes are fed into the registration process as the initial guess to boost its accuracy and speed. Experiments on phantom and in-vivo data from patients demonstrate the effectiveness of our framework: 1) monocular depth estimation outperforms SOTA, 2) localization achieves an Absolute Tracking Error (ATE) of 4.7 $\pm$ 3.17 mm in phantom and 6.49 $\pm$ 3.88 mm in patient data, 3) with a frame rate approaching video capture speed, 4) without the necessity of case-wise network retraining. The framework's superior speed and accuracy demonstrate its promising clinical potential for real-time bronchoscopic navigation.
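The abstract reports ATE as a mean $\pm$ standard deviation in millimetres but does not spell out how it is computed. A minimal sketch of one common reading, assuming ATE is the per-frame Euclidean distance between estimated and ground-truth positions that are already expressed in the same coordinate frame; the function name and the sample trajectories are hypothetical and not taken from the paper:

```python
import math
from statistics import mean, pstdev


def absolute_tracking_error(estimated, ground_truth):
    """Return (mean, std) of the per-frame Euclidean position error.

    Assumes both trajectories are already aligned to the same frame
    and use the same length unit (e.g. mm).
    """
    if len(estimated) != len(ground_truth):
        raise ValueError("trajectories must have the same number of frames")
    errors = [math.dist(e, g) for e, g in zip(estimated, ground_truth)]
    # Population standard deviation over all frames (an assumption).
    return mean(errors), pstdev(errors)


# Hypothetical bronchoscope tip positions in mm.
est = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (0.0, 0.0, 10.0)]
gt = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 0.0, 4.0)]
ate_mean, ate_std = absolute_tracking_error(est, gt)
print(f"ATE = {ate_mean:.2f} +/- {ate_std:.2f} mm")
```

Whether the paper uses the population or sample standard deviation, or aligns trajectories before measuring, is not stated in the abstract, so those choices here are illustrative only.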

LMSanitator: Defending Prompt-Tuning Against Task-Agnostic Backdoors

Aug 26, 2023

Abstract:Prompt-tuning has emerged as an attractive paradigm for deploying large-scale language models due to its strong downstream task performance and efficient multitask serving ability. Despite its wide adoption, we empirically show that prompt-tuning is vulnerable to downstream task-agnostic backdoors, which reside in the pretrained models and can affect arbitrary downstream tasks. The state-of-the-art backdoor detection approaches cannot defend against task-agnostic backdoors since they hardly converge when reversing the backdoor triggers. To address this issue, we propose LMSanitator, a novel approach for detecting and removing task-agnostic backdoors on Transformer models. Instead of directly inverting the triggers, LMSanitator aims to invert the predefined attack vectors (pretrained models' output when the input is embedded with triggers) of the task-agnostic backdoors, which achieves much better convergence performance and backdoor detection accuracy. LMSanitator further leverages prompt-tuning's property of freezing the pretrained model to perform accurate and fast output monitoring and input purging during the inference phase. Extensive experiments on multiple language models and NLP tasks illustrate the effectiveness of LMSanitator. For instance, LMSanitator achieves 92.8% backdoor detection accuracy on 960 models and decreases the attack success rate to less than 1% in most scenarios.

GrowSP: Unsupervised Semantic Segmentation of 3D Point Clouds

May 25, 2023

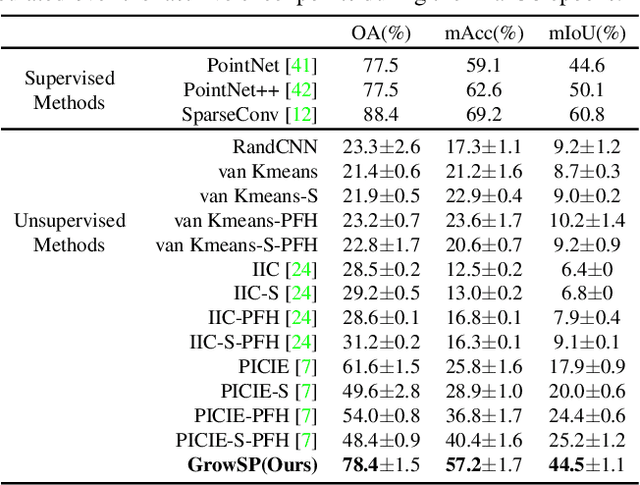

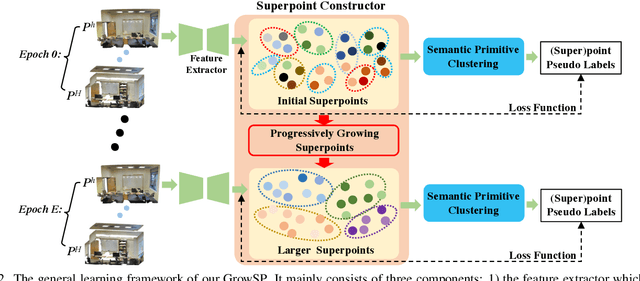

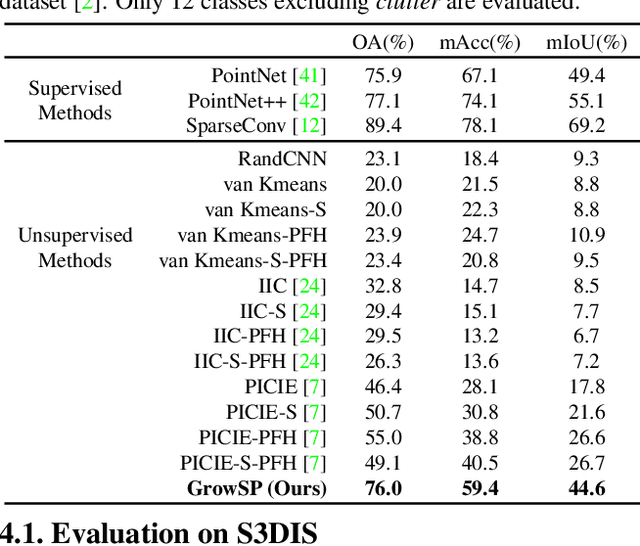

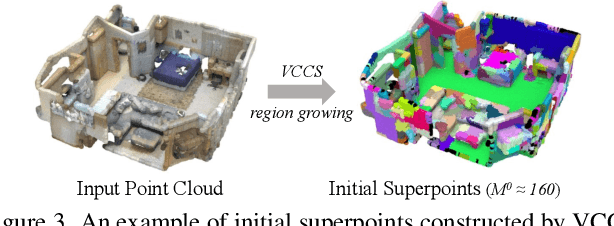

Abstract:We study the problem of 3D semantic segmentation from raw point clouds. Unlike existing methods which primarily rely on a large amount of human annotations for training neural networks, we propose the first purely unsupervised method, called GrowSP, to successfully identify complex semantic classes for every point in 3D scenes, without needing any type of human labels or pretrained models. The key to our approach is to discover 3D semantic elements via progressive growing of superpoints. Our method consists of three major components, 1) the feature extractor to learn per-point features from input point clouds, 2) the superpoint constructor to progressively grow the sizes of superpoints, and 3) the semantic primitive clustering module to group superpoints into semantic elements for the final semantic segmentation. We extensively evaluate our method on multiple datasets, demonstrating superior performance over all unsupervised baselines and approaching the classic fully-supervised PointNet. We hope our work could inspire more advanced methods for unsupervised 3D semantic learning.
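The third component described above, grouping superpoints into semantic elements, can be illustrated with a toy example. This is only a sketch under strong assumptions: per-point features and superpoint ids are given as inputs, superpoint features are plain averages, and the grouping is ordinary k-means with a deterministic initialisation. It omits feature learning and the progressive growing of superpoints, and all function names are hypothetical rather than GrowSP's actual implementation:

```python
import math


def superpoint_features(point_feats, superpoint_ids):
    """Average the per-point features inside each superpoint."""
    sums, counts = {}, {}
    for feat, sp in zip(point_feats, superpoint_ids):
        acc = sums.setdefault(sp, [0.0] * len(feat))
        for i, value in enumerate(feat):
            acc[i] += value
        counts[sp] = counts.get(sp, 0) + 1
    return {sp: [v / counts[sp] for v in acc] for sp, acc in sums.items()}


def kmeans(vectors, k, iters=20):
    """Plain Lloyd's k-means; the first k vectors seed the centroids."""
    centroids = [list(v) for v in vectors[:k]]
    assign = [0] * len(vectors)
    for _ in range(iters):
        # Assignment step: nearest centroid by Euclidean distance.
        assign = [
            min(range(k), key=lambda c: math.dist(v, centroids[c]))
            for v in vectors
        ]
        # Update step: recompute each non-empty cluster's centroid.
        for c in range(k):
            members = [v for v, a in zip(vectors, assign) if a == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return assign


def group_superpoints(point_feats, superpoint_ids, k):
    """Cluster superpoints, then give every point its superpoint's cluster."""
    sp_feats = superpoint_features(point_feats, superpoint_ids)
    sp_keys = sorted(sp_feats)
    assign = kmeans([sp_feats[sp] for sp in sp_keys], k)
    sp_to_cluster = dict(zip(sp_keys, assign))
    return [sp_to_cluster[sp] for sp in superpoint_ids]


# Hypothetical 2D features for six points split into four superpoints.
point_feats = [(0.0, 0.0), (0.2, 0.0), (5.0, 5.0), (5.2, 5.0), (0.1, 0.1), (5.1, 5.1)]
superpoint_ids = [0, 0, 1, 1, 2, 3]
labels = group_superpoints(point_feats, superpoint_ids, k=2)
print(labels)
```

In this toy input, superpoints 0 and 2 lie near the origin and superpoints 1 and 3 lie near (5, 5), so the two clusters play the role of two semantic elements.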

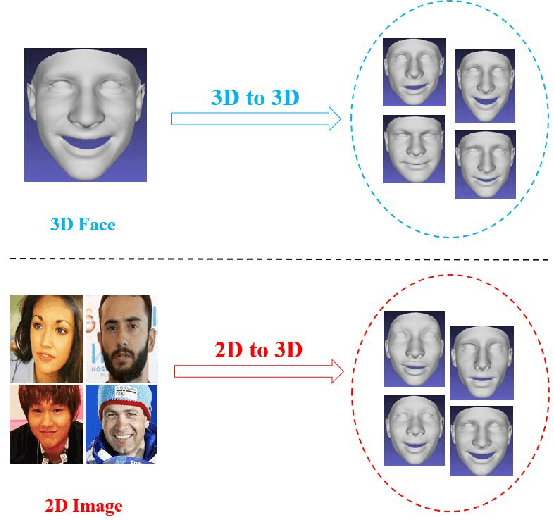

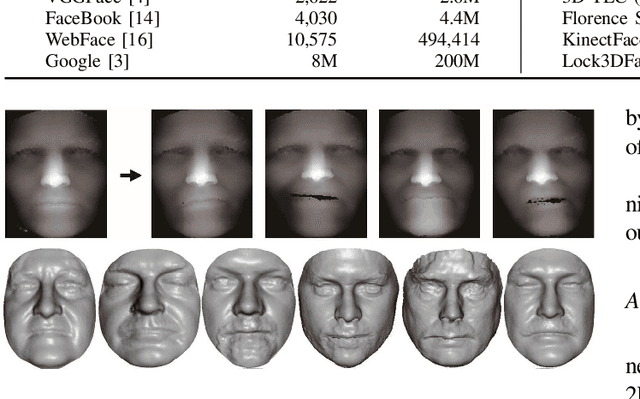

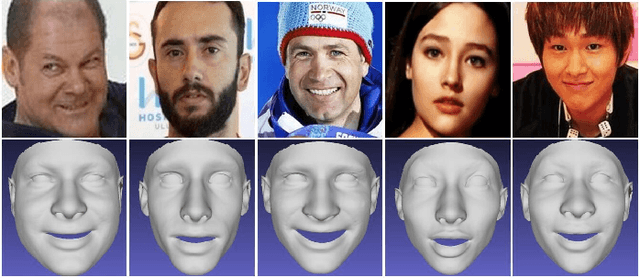

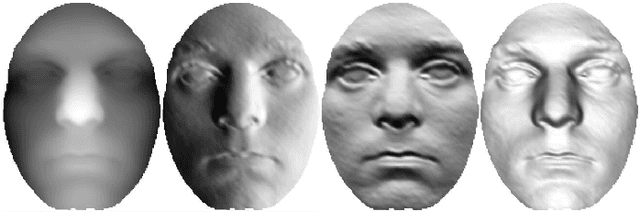

Reconstructing A Large Scale 3D Face Dataset for Deep 3D Face Identification

Oct 16, 2020

Abstract:Deep learning methods have brought many breakthroughs to computer vision, especially in 2D face recognition. However, the bottleneck of deep learning based 3D face recognition is that it is difficult to collect millions of 3D faces, whether for industry or academia. In view of this situation, there are many methods to generate more 3D faces from existing 3D faces through 3D face data augmentation, which are used to train deep 3D face recognition models. However, to the best of our knowledge, there is no method to generate 3D faces from 2D face images for training deep 3D face recognition models. This letter focuses on the role of reconstructed 3D facial surfaces in 3D face identification and proposes a framework of 2D-aided deep 3D face identification. In particular, we propose to reconstruct millions of 3D face scans from a large scale 2D face database (i.e., VGGFace2), using a deep learning based 3D face reconstruction method (i.e., ExpNet). Then, we adopt a two-phase training approach: in the first phase, we use millions of face images to pre-train the deep convolutional neural network (DCNN), and in the second phase, we use normal component images (NCI) of reconstructed 3D face scans to train the DCNN. Extensive experimental results illustrate that the proposed approach can greatly improve the rank-1 score of 3D face identification on the FRGC v2.0, the Bosphorus, and the BU-3DFE 3D face databases, compared to the model trained by 2D face images. Finally, our proposed approach achieves state-of-the-art rank-1 scores on the FRGC v2.0 (97.6%), Bosphorus (98.4%), and BU-3DFE (98.8%) databases. The experimental results show that the reconstructed 3D facial surfaces are useful and that our 2D-aided deep 3D face identification framework is meaningful given the scarcity of 3D faces.
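The rank-1 score quoted above is the fraction of probe scans whose closest gallery entry has the correct identity. A minimal sketch of that metric, assuming each face is already reduced to a feature vector and that cosine similarity is the matching score; the abstract does not state the similarity measure, and the function names and toy vectors are hypothetical:

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank1_score(gallery, probes):
    """Fraction of probes whose most similar gallery entry shares their identity.

    gallery, probes: lists of (identity, feature_vector) pairs.
    """
    hits = 0
    for true_id, feat in probes:
        best_id, _ = max(gallery, key=lambda item: cosine(feat, item[1]))
        hits += best_id == true_id
    return hits / len(probes)


# Hypothetical 2D embeddings for two enrolled identities.
gallery = [("A", (1.0, 0.0)), ("B", (0.0, 1.0))]
probes = [("A", (0.9, 0.1)), ("B", (0.2, 0.8)), ("A", (0.3, 0.7))]
score = rank1_score(gallery, probes)
print(f"rank-1 = {score:.3f}")
```

Here the third probe belongs to identity A but sits closer to B's gallery vector, so two of the three probes are matched correctly.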
