Qingyao Tian

BREATH-VL: Vision-Language-Guided 6-DoF Bronchoscopy Localization via Semantic-Geometric Fusion

Jan 07, 2026

Abstract:Vision-language models (VLMs) have recently shown remarkable performance in navigation and localization tasks by leveraging large-scale pretraining for semantic understanding. However, applying VLMs to 6-DoF endoscopic camera localization presents several challenges: 1) the lack of large-scale, high-quality, densely annotated, and localization-oriented vision-language datasets in real-world medical settings; 2) limited capability for fine-grained pose regression; and 3) high computational latency when extracting temporal features from past frames. To address these issues, we first construct the BREATH dataset, the largest in-vivo endoscopic localization dataset to date, collected in the complex human airway. Building on this dataset, we propose BREATH-VL, a hybrid framework that integrates semantic cues from VLMs with geometric information from vision-based registration methods for accurate 6-DoF pose estimation. Our motivation lies in the complementary strengths of both approaches: VLMs offer generalizable semantic understanding, while registration methods provide precise geometric alignment. To further enhance the VLM's ability to capture temporal context, we introduce a lightweight context-learning mechanism that encodes motion history as linguistic prompts, enabling efficient temporal reasoning without expensive video-level computation. Extensive experiments demonstrate that the vision-language module delivers robust semantic localization in challenging surgical scenes. Building on this, BREATH-VL outperforms state-of-the-art vision-only localization methods in both accuracy and generalization, reducing translational error by 25.5% compared with the best-performing baseline, while achieving competitive computational latency.

EndoMatcher: Generalizable Endoscopic Image Matcher via Multi-Domain Pre-training for Robot-Assisted Surgery

Aug 07, 2025

Abstract:Generalizable dense feature matching in endoscopic images is crucial for robot-assisted tasks, including 3D reconstruction, navigation, and surgical scene understanding. Yet, it remains a challenge due to difficult visual conditions (e.g., weak textures, large viewpoint variations) and a scarcity of annotated data. To address these challenges, we propose EndoMatcher, a generalizable endoscopic image matcher via large-scale, multi-domain data pre-training. To address difficult visual conditions, EndoMatcher employs a two-branch Vision Transformer to extract multi-scale features, enhanced by dual interaction blocks for robust correspondence learning. To overcome data scarcity and improve domain diversity, we construct Endo-Mix6, the first multi-domain dataset for endoscopic matching. Endo-Mix6 consists of approximately 1.2M real and synthetic image pairs across six domains, with correspondence labels generated using Structure-from-Motion and simulated transformations. The diversity and scale of Endo-Mix6 introduce new challenges in training stability due to significant variations in dataset sizes, distribution shifts, and error imbalance. To address them, a progressive multi-objective training strategy is employed to promote balanced learning and improve representation quality across domains. This enables EndoMatcher to generalize across unseen organs and imaging conditions in a zero-shot fashion. Extensive zero-shot matching experiments demonstrate that EndoMatcher increases the number of inlier matches by 140.69% and 201.43% on the Hamlyn and Bladder datasets over state-of-the-art methods, respectively, and improves the Matching Direction Prediction Accuracy (MDPA) by 9.40% on the Gastro-Matching dataset, achieving dense and accurate matching under challenging endoscopic conditions. The code is publicly available at https://github.com/Beryl2000/EndoMatcher.

Harnessing Foundation Models for Robust and Generalizable 6-DOF Bronchoscopy Localization

May 30, 2025

Abstract:Vision-based 6-DOF bronchoscopy localization offers a promising solution for accurate and cost-effective interventional guidance. However, existing methods struggle with 1) limited generalization across patient cases due to scarce labeled data, and 2) poor robustness under visual degradation, as bronchoscopy procedures frequently involve artifacts such as occlusions and motion blur that impair visual information. To address these challenges, we propose PANSv2, a generalizable and robust bronchoscopy localization framework. Motivated by PANS, which leverages multiple visual cues for pose likelihood measurement, PANSv2 integrates depth estimation, landmark detection, and centerline constraints into a unified pose optimization framework that evaluates pose probability and solves for the optimal bronchoscope pose. To further enhance generalization capabilities, we leverage the endoscopic foundation model EndoOmni for depth estimation and the video foundation model EndoMamba for landmark detection, incorporating both spatial and temporal analyses. Pretrained on diverse endoscopic datasets, these models provide stable and transferable visual representations, enabling reliable performance across varied bronchoscopy scenarios. Additionally, to improve robustness to visual degradation, we introduce an automatic re-initialization module that detects tracking failures and re-establishes pose using landmark detections once clear views are available. Experimental results on a bronchoscopy dataset encompassing 10 patient cases show that PANSv2 achieves the highest tracking success rate, with an 18.1% improvement in SR-5 (percentage of absolute trajectory error under 5 mm) compared to existing methods, showing potential for real clinical use.
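
The SR-5 metric mentioned in this abstract can be illustrated with a minimal sketch. It assumes the error is the per-frame Euclidean distance in millimetres between estimated and ground-truth camera positions, which the abstract does not spell out; the function name `success_rate` and the sample trajectories are hypothetical.

```python
import math


def success_rate(estimated, ground_truth, threshold_mm=5.0):
    """Percentage of frames whose position error is strictly below threshold_mm.

    Assumption: positions are (x, y, z) tuples in millimetres, one per frame.
    """
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("trajectories must be non-empty and equally long")
    hits = sum(
        1
        for est, gt in zip(estimated, ground_truth)
        if math.dist(est, gt) < threshold_mm
    )
    return 100.0 * hits / len(estimated)


# Hypothetical four-frame trajectory with errors of 0, 5, 6 and about 1.73 mm.
est = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (0.0, 0.0, 6.0), (1.0, 1.0, 1.0)]
gt = [(0.0, 0.0, 0.0)] * 4
sr5 = success_rate(est, gt)
print(sr5)  # 50.0: two of the four frames are under 5 mm
```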

EndoMamba: An Efficient Foundation Model for Endoscopic Videos

Feb 26, 2025

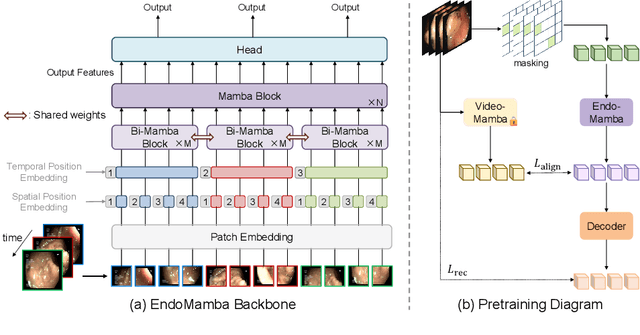

Abstract:Endoscopic video-based tasks, such as visual navigation and surgical phase recognition, play a crucial role in minimally invasive surgeries by providing real-time assistance. While recent video foundation models have shown promise, their applications are hindered by (1) computational inefficiencies and (2) suboptimal performance caused by limited data for pre-training in endoscopy. To address these issues, we present EndoMamba, a foundation model designed for real-time inference while learning generalized spatiotemporal representations. First, to mitigate computational inefficiencies, we propose the EndoMamba backbone, optimized for real-time inference. Inspired by recent advancements in state space models, EndoMamba integrates Bidirectional Mamba blocks for spatial modeling within individual frames and vanilla Mamba blocks for past-to-present reasoning across the temporal domain. This design enables both strong spatiotemporal modeling and efficient inference in online video streams. Second, we propose a self-supervised hierarchical pre-training diagram to enhance EndoMamba's representation learning using endoscopic videos and incorporating general video domain knowledge. Specifically, our approach combines masked reconstruction with auxiliary supervision, leveraging low-level reconstruction to capture spatial-temporal structures and high-level alignment to transfer broader knowledge from a pretrained general-video domain foundation model. Extensive experiments on four downstream tasks--classification, segmentation, surgical phase recognition, and localization--demonstrate that EndoMamba outperforms existing foundation models and task-specific methods while maintaining real-time inference speed. The source code will be released upon acceptance.

Enhancing Bronchoscopy Depth Estimation through Synthetic-to-Real Domain Adaptation

Nov 07, 2024

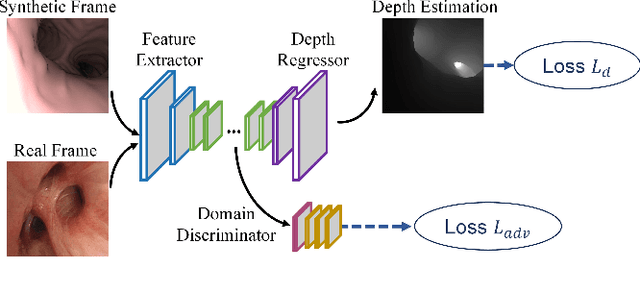

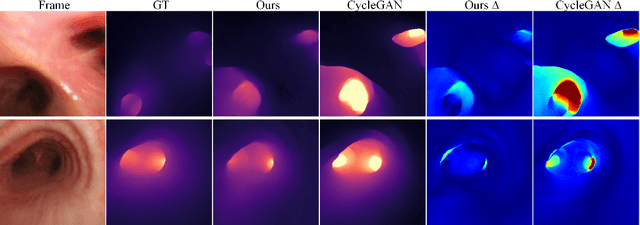

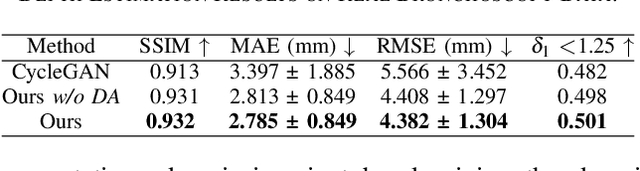

Abstract:Monocular depth estimation has shown promise in general imaging tasks, aiding in localization and 3D reconstruction. While effective in various domains, its application to bronchoscopic images is hindered by the lack of labeled data, challenging the use of supervised learning methods. In this work, we propose a transfer learning framework that leverages synthetic data with depth labels for training and adapts domain knowledge for accurate depth estimation in real bronchoscope data. Our network demonstrates improved depth prediction on real footage using domain adaptation compared to training solely on synthetic data, validating our approach.

Multi-Stage Airway Segmentation in Lung CT Based on Multi-scale Nested Residual UNet

Oct 24, 2024

Abstract:Accurate and complete segmentation of airways in chest CT images is essential for the quantitative assessment of lung diseases and the facilitation of pulmonary interventional procedures. Although deep learning has led to significant advancements in medical image segmentation, maintaining airway continuity remains particularly challenging. This difficulty arises primarily from the small and dispersed nature of airway structures, as well as class imbalance in CT scans. To address these challenges, we designed a Multi-scale Nested Residual U-Net (MNR-UNet), incorporating multi-scale inputs and Residual Multi-scale Modules (RMM) into a nested residual framework to enhance information flow, effectively capturing the intricate details of small airways and mitigating gradient vanishing. Building on this, we developed a three-stage segmentation pipeline to optimize the training of the MNR-UNet. The first two stages prioritize high accuracy and sensitivity, while the third stage focuses on repairing airway breakages to balance topological completeness and correctness. To further address class imbalance, we introduced a weighted Breakage-Aware Loss (wBAL) to heighten focus on challenging samples, penalizing breakages and thereby extending the length of the airway tree. Additionally, we proposed a hierarchical evaluation framework to offer more clinically meaningful analysis. Validation on both in-house and public datasets demonstrates that our approach achieves superior performance in detecting more accurate airway voxels and identifying additional branches, significantly improving airway topological completeness. The code will be released publicly following the publication of the paper.

EndoOmni: Zero-Shot Cross-Dataset Depth Estimation in Endoscopy by Robust Self-Learning from Noisy Labels

Sep 11, 2024

Abstract:Single-image depth estimation is essential for endoscopy tasks such as localization, reconstruction, and augmented reality. Most existing methods in surgical scenes focus on in-domain depth estimation, limiting their real-world applicability. This constraint stems from the scarcity and inferior labeling quality of medical data for training. In this work, we present EndoOmni, the first foundation model for zero-shot cross-domain depth estimation for endoscopy. To harness the potential of diverse training data, we refine the advanced self-learning paradigm that employs a teacher model to generate pseudo-labels, guiding a student model trained on large-scale labeled and unlabeled data. To address training disturbance caused by inherent noise in depth labels, we propose a robust training framework that leverages both depth labels and estimated confidence from the teacher model to jointly guide the student model training. Moreover, we propose a weighted scale-and-shift invariant loss to adaptively adjust learning weights based on label confidence, thus imposing learning bias towards cleaner label pixels while reducing the influence of highly noisy pixels. Experiments on zero-shot relative depth estimation show that EndoOmni improves on state-of-the-art methods in medical imaging by 41% and on existing foundation models by 25% in terms of absolute relative error on specific datasets. Furthermore, our model provides strong initialization for fine-tuning to metric depth estimation, maintaining superior performance in both in-domain and out-of-domain scenarios. The source code will be publicly available.
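
The idea of a confidence-weighted scale-and-shift invariant loss can be sketched as follows. This is not the paper's formulation, which the abstract does not give; it is a generic version that assumes a closed-form weighted least-squares alignment of the prediction to the label, followed by a confidence-weighted mean absolute error. The function name `weighted_ssi_loss` and the sample values are hypothetical.

```python
def weighted_ssi_loss(pred, target, conf):
    """Confidence-weighted scale-and-shift invariant L1 loss over flat pixel lists.

    Assumption: scale s and shift t minimise sum_i conf_i * (s*pred_i + t - target_i)^2.
    """
    sw = sum(conf)
    sp = sum(w * p for w, p in zip(conf, pred))
    sd = sum(w * d for w, d in zip(conf, target))
    spp = sum(w * p * p for w, p in zip(conf, pred))
    spd = sum(w * p * d for w, p, d in zip(conf, pred, target))
    det = sw * spp - sp * sp
    if det == 0.0:
        raise ValueError("alignment is degenerate (e.g. constant prediction)")
    scale = (sw * spd - sp * sd) / det
    shift = (spp * sd - sp * spd) / det
    # Low-confidence pixels contribute little to both the alignment and the loss.
    residual = sum(
        w * abs(scale * p + shift - d) for w, p, d in zip(conf, pred, target)
    )
    return residual / sw


# Hypothetical pixels: the label equals 2*pred + 1 except for one noisy pixel,
# which the teacher confidence marks as unreliable (weight 0).
pred = [1.0, 2.0, 3.0, 4.0]
target = [3.0, 5.0, 7.0, 100.0]
loss_trusting_all = weighted_ssi_loss(pred, target, [1.0, 1.0, 1.0, 1.0])
loss_downweighted = weighted_ssi_loss(pred, target, [1.0, 1.0, 1.0, 0.0])
```

With the noisy pixel given zero confidence, the remaining pixels align exactly and the loss vanishes, whereas uniform weights let the outlier distort both the alignment and the loss.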

PANS: Probabilistic Airway Navigation System for Real-time Robust Bronchoscope Localization

Jul 08, 2024

Abstract:Accurate bronchoscope localization is essential for pulmonary interventions, by providing six degrees of freedom (DOF) in airway navigation. However, the robustness of current vision-based methods is often compromised in clinical practice, and they struggle to perform in real-time and to generalize across cases unseen during training. To overcome these challenges, we propose a novel Probabilistic Airway Navigation System (PANS), leveraging a Monte Carlo method with pose hypotheses and likelihoods to achieve robust and real-time bronchoscope localization. Specifically, PANS incorporates diverse visual representations (e.g., odometry and landmarks) by leveraging two key modules: Depth-based Motion Inference (DMI) and Bronchial Semantic Analysis (BSA). To generate the pose hypotheses of the bronchoscope for PANS, we devise the DMI to accurately propagate the estimation of pose hypotheses over time. Moreover, to estimate an accurate pose likelihood, we devise the BSA module to effectively distinguish between similar bronchial regions in endoscopic images, along with a novel metric to assess the congruence between estimated depth maps and the segmented airway structure. Under this probabilistic formulation, PANS is capable of achieving 6-DOF bronchoscope localization with superior accuracy and robustness. Extensive experiments on the collected pulmonary intervention dataset comprising 10 clinical cases confirm the advantage of PANS over state-of-the-art methods, in terms of both robustness and generalization in localizing deeper airway branches and the efficiency of real-time inference. The proposed PANS shows its potential to be a reliable tool in the operating room, promising to enhance the quality and safety of pulmonary interventions.
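
The Monte Carlo formulation with pose hypotheses and likelihoods can be illustrated with a toy particle filter. This sketch is not PANS itself: it tracks a single hypothetical coordinate (depth along an airway centerline, in mm) rather than a 6-DOF pose, and it replaces the DMI and BSA modules with Gaussian stand-ins. All names and noise values are assumptions.

```python
import math
import random


def particle_filter_step(particles, motion, observation, rng,
                         motion_noise=0.5, obs_noise=1.0):
    """One predict / weight / resample cycle over 1D pose hypotheses."""
    # Predict: propagate each hypothesis by the motion estimate plus noise
    # (stand-in for a motion-inference module).
    moved = [p + motion + rng.gauss(0.0, motion_noise) for p in particles]
    # Weight: Gaussian likelihood of the observation under each hypothesis
    # (stand-in for a semantic or depth-based likelihood).
    weights = [
        math.exp(-0.5 * ((observation - p) / obs_noise) ** 2) for p in moved
    ]
    if sum(weights) == 0.0:
        weights = [1.0] * len(moved)  # all hypotheses implausible: keep them all
    # Resample: draw hypotheses in proportion to their likelihood.
    return rng.choices(moved, weights=weights, k=len(moved))


rng = random.Random(0)
particles = [rng.uniform(0.0, 100.0) for _ in range(500)]  # unknown start
true_pos = 20.0
for _ in range(10):
    true_pos += 2.0  # the scope advances 2 mm per step
    particles = particle_filter_step(particles, 2.0, true_pos, rng)
estimate = sum(particles) / len(particles)
```

Starting from hypotheses spread over the whole range, the resampling step concentrates them around the observed position, so the mean of the particles tracks the true position after a few steps.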

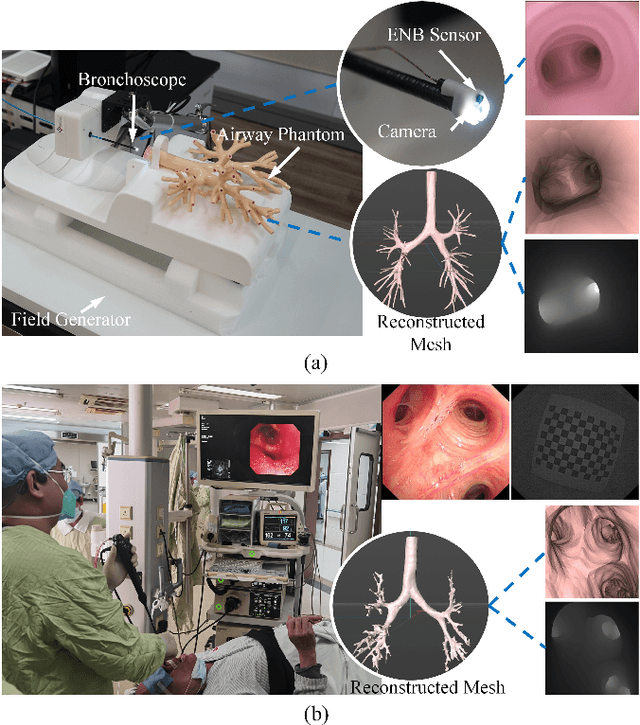

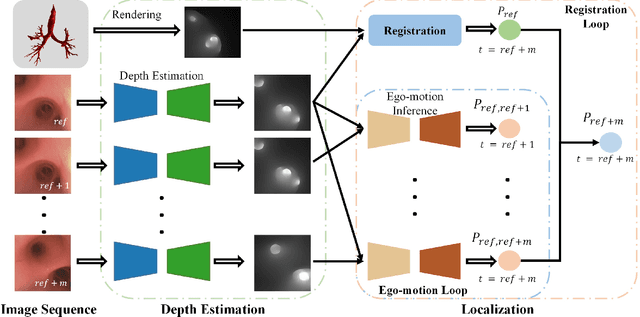

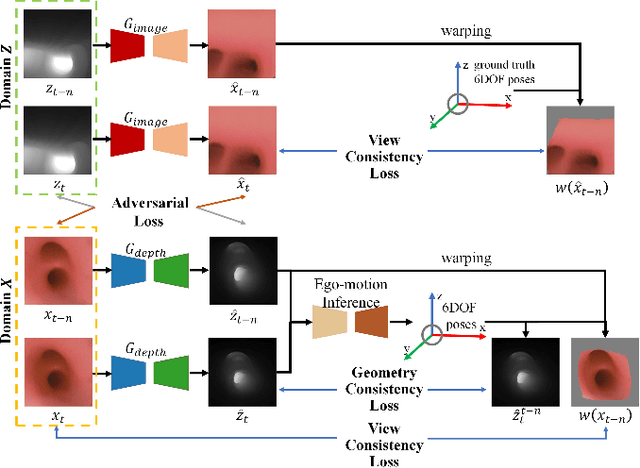

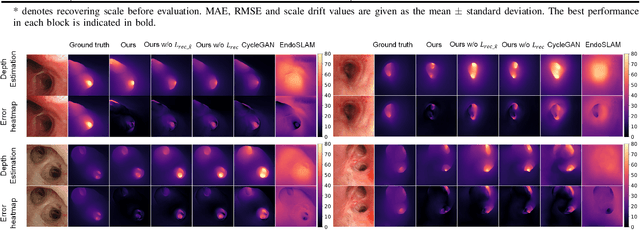

DD-VNB: A Depth-based Dual-Loop Framework for Real-time Visually Navigated Bronchoscopy

Mar 15, 2024

Abstract:Real-time 6-DOF localization of bronchoscopes is crucial for enhancing intervention quality. However, current vision-based technologies struggle to balance generalization to unseen data against computational speed. In this study, we propose a Depth-based Dual-Loop framework for real-time Visually Navigated Bronchoscopy (DD-VNB) that can generalize across patient cases without the need for re-training. The DD-VNB framework integrates two key modules: depth estimation and dual-loop localization. To address the domain gap among patients, we propose a knowledge-embedded depth estimation network that maps endoscope frames to depth, ensuring generalization by eliminating patient-specific textures. The network embeds view synthesis knowledge into a cycle adversarial architecture for scale-constrained monocular depth estimation. For real-time performance, our localization module embeds a fast ego-motion estimation network into the loop of depth registration. The ego-motion inference network estimates the pose change of the bronchoscope at high frequency, while depth registration against the pre-operative 3D model provides absolute pose periodically. Specifically, the relative pose changes are fed into the registration process as the initial guess to boost its accuracy and speed. Experiments on phantom and in-vivo data from patients demonstrate the effectiveness of our framework: 1) monocular depth estimation outperforms SOTA, 2) localization achieves an Absolute Tracking Error (ATE) of 4.7 ± 3.17 mm in phantom and 6.49 ± 3.88 mm in patient data, 3) with a frame-rate approaching video capture speed, 4) without the necessity of case-wise network retraining. The framework's superior speed and accuracy demonstrate its promising clinical potential for real-time bronchoscopic navigation.

BronchoCopilot: Towards Autonomous Robotic Bronchoscopy via Multimodal Reinforcement Learning

Mar 03, 2024

Abstract:Bronchoscopy plays a significant role in the early diagnosis and treatment of lung diseases. This process requires physicians to maneuver the flexible endoscope to reach distal lesions, and demands substantial expertise when examining the airways of the upper lung lobe. With the development of artificial intelligence and robotics, reinforcement learning (RL) methods have been applied to the manipulation of interventional surgical robots. However, unlike human physicians who utilize multimodal information, most current RL methods rely on a single modality, limiting their performance. In this paper, we propose BronchoCopilot, a multimodal RL agent designed to acquire manipulation skills for autonomous bronchoscopy. BronchoCopilot specifically integrates images from the bronchoscope camera and estimated robot poses, aiming for a higher success rate within challenging airway environments. We employ auxiliary reconstruction tasks to compress multimodal data and utilize attention mechanisms to achieve an efficient latent representation of this data, serving as input for the RL module. This framework adopts a stepwise training and fine-tuning approach to mitigate training difficulty. Our evaluation in a realistic simulation environment reveals that BronchoCopilot, by effectively harnessing multimodal information, attains a success rate of approximately 90% in fifth-generation airways with consistent movements. Additionally, it demonstrates a robust capacity to adapt to diverse cases.
