Lujie Li

Enhancing Bronchoscopy Depth Estimation through Synthetic-to-Real Domain Adaptation

Nov 07, 2024

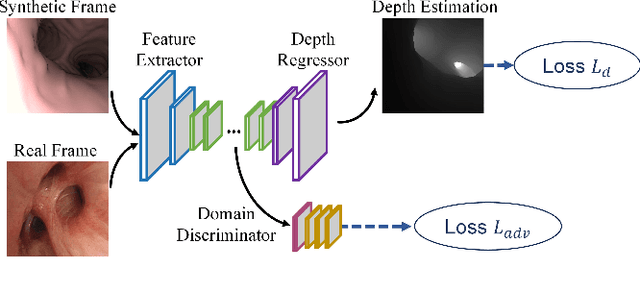

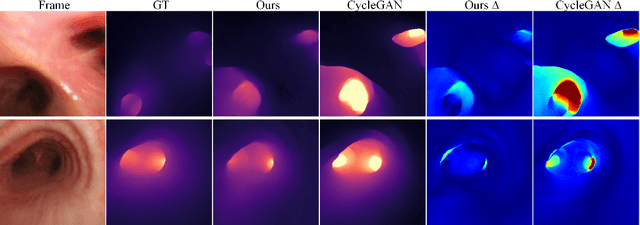

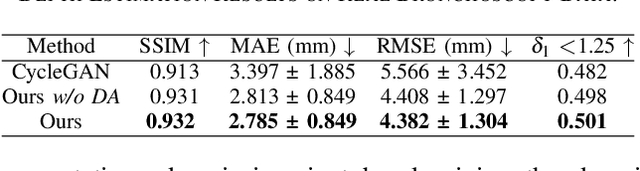

Abstract: Monocular depth estimation has shown promise in general imaging tasks, aiding in localization and 3D reconstruction. While effective in various domains, its application to bronchoscopic images is hindered by the lack of labeled data, challenging the use of supervised learning methods. In this work, we propose a transfer learning framework that leverages synthetic data with depth labels for training and adapts domain knowledge for accurate depth estimation in real bronchoscope data. Our network demonstrates improved depth prediction on real footage using domain adaptation compared to training solely on synthetic data, validating our approach.

EndoOmni: Zero-Shot Cross-Dataset Depth Estimation in Endoscopy by Robust Self-Learning from Noisy Labels

Sep 11, 2024

Abstract: Single-image depth estimation is essential for endoscopy tasks such as localization, reconstruction, and augmented reality. Most existing methods in surgical scenes focus on in-domain depth estimation, limiting their real-world applicability. This constraint stems from the scarcity and inferior labeling quality of medical data for training. In this work, we present EndoOmni, the first foundation model for zero-shot cross-domain depth estimation for endoscopy. To harness the potential of diverse training data, we refine the advanced self-learning paradigm that employs a teacher model to generate pseudo-labels, guiding a student model trained on large-scale labeled and unlabeled data. To address training disturbance caused by inherent noise in depth labels, we propose a robust training framework that leverages both depth labels and estimated confidence from the teacher model to jointly guide the student model training. Moreover, we propose a weighted scale-and-shift invariant loss to adaptively adjust learning weights based on label confidence, thus imposing learning bias towards cleaner label pixels while reducing the influence of highly noisy pixels. Experiments on zero-shot relative depth estimation show that our EndoOmni improves on state-of-the-art methods in medical imaging by 41% and on existing foundation models by 25% in terms of absolute relative error on specific datasets. Furthermore, our model provides strong initialization for fine-tuning to metric depth estimation, maintaining superior performance in both in-domain and out-of-domain scenarios. The source code will be publicly available.
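The confidence-weighted scale-and-shift invariant loss mentioned in this abstract can be illustrated with a minimal sketch. This is not the EndoOmni implementation: the function name `weighted_ssi_loss`, the closed-form weighted least-squares alignment, and the mean-absolute-residual form are all assumptions chosen to show the general idea that low-confidence pixels contribute less to both the alignment and the loss.

```python
import numpy as np

def weighted_ssi_loss(pred, target, conf, eps=1e-8):
    """Hypothetical confidence-weighted scale-and-shift invariant loss.

    pred, target: relative and reference depth maps of equal shape.
    conf: per-pixel confidence in [0, 1] (assumed to come from a teacher model).
    """
    p = np.asarray(pred, dtype=np.float64).ravel()
    d = np.asarray(target, dtype=np.float64).ravel()
    w = np.asarray(conf, dtype=np.float64).ravel()

    # Weighted least squares: find scale s and shift t minimizing
    # sum_i w_i * (s * p_i + t - d_i)^2 via the 2x2 normal equations.
    a00 = np.sum(w * p * p)
    a01 = np.sum(w * p)
    a11 = np.sum(w)
    b0 = np.sum(w * p * d)
    b1 = np.sum(w * d)
    det = a00 * a11 - a01 * a01
    if abs(det) < eps:
        # Degenerate case (e.g. constant prediction): skip the alignment.
        scale, shift = 1.0, 0.0
    else:
        scale = (a11 * b0 - a01 * b1) / det
        shift = (a00 * b1 - a01 * b0) / det

    # Confidence-weighted mean absolute error after alignment.
    residual = np.abs(scale * p + shift - d)
    return float(np.sum(w * residual) / (a11 + eps))

# Toy data: the target is an affine function of the prediction,
# except for one corrupted "noisy label" pixel.
pred = np.linspace(0.1, 1.0, 10)
clean = 2.0 * pred + 1.0
noisy = clean.copy()
noisy[3] += 5.0
uniform = np.ones_like(pred)
masked = uniform.copy()
masked[3] = 0.0  # zero confidence on the corrupted pixel

loss_clean = weighted_ssi_loss(pred, clean, uniform)
loss_masked = weighted_ssi_loss(pred, noisy, masked)
loss_unweighted = weighted_ssi_loss(pred, noisy, uniform)
```

In this toy case, giving the corrupted pixel zero confidence recovers a near-zero loss, whereas uniform weights let the outlier distort both the fitted scale and shift and the resulting loss.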

PANS: Probabilistic Airway Navigation System for Real-time Robust Bronchoscope Localization

Jul 08, 2024

Abstract: Accurate bronchoscope localization is essential for pulmonary interventions, by providing six degrees of freedom (DOF) in airway navigation. However, the robustness of current vision-based methods is often compromised in clinical practice, and they struggle to perform in real time and to generalize across cases unseen during training. To overcome these challenges, we propose a novel Probabilistic Airway Navigation System (PANS), leveraging a Monte Carlo method with pose hypotheses and likelihoods to achieve robust and real-time bronchoscope localization. Specifically, our PANS incorporates diverse visual representations (e.g., odometry and landmarks) through two key modules: the Depth-based Motion Inference (DMI) and the Bronchial Semantic Analysis (BSA). To generate the pose hypotheses of the bronchoscope for PANS, we devise the DMI to accurately propagate the estimation of pose hypotheses over time. Moreover, to estimate an accurate pose likelihood, we devise the BSA module to effectively distinguish between similar bronchial regions in endoscopic images, along with a novel metric to assess the congruence between estimated depth maps and the segmented airway structure. Under this probabilistic formulation, our PANS is capable of achieving 6-DOF bronchoscope localization with superior accuracy and robustness. Extensive experiments on the collected pulmonary intervention dataset comprising 10 clinical cases confirm the advantage of our PANS over state-of-the-art methods, in terms of both robustness and generalization in localizing deeper airway branches and the efficiency of real-time inference. The proposed PANS shows its potential to be a reliable tool in the operating room, promising to enhance the quality and safety of pulmonary interventions.
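The Monte Carlo formulation described in this abstract, with pose hypotheses propagated over time and reweighted by likelihoods, resembles a generic particle filter. The sketch below is a textbook particle filter step under simplifying assumptions, not the PANS implementation: the state is a plain vector (a real 6-DOF pose would need proper handling of rotations), `motion` stands in for an odometry estimate such as the DMI output, and `likelihood_fn` stands in for an observation score such as the BSA output. All names are hypothetical.

```python
import numpy as np

def particle_filter_step(particles, weights, motion, likelihood_fn,
                         motion_noise, rng):
    """One predict-update-resample step of a generic particle filter.

    particles: (N, D) array of pose hypotheses (vector state, an assumption).
    weights: (N,) normalized hypothesis weights.
    motion: (D,) estimated motion since the previous frame.
    likelihood_fn: maps (N, D) particles to (N,) non-negative likelihoods.
    """
    n = len(particles)

    # Predict: propagate every hypothesis with the motion estimate plus noise.
    noise = rng.normal(0.0, motion_noise, size=particles.shape)
    particles = particles + motion + noise

    # Update: reweight hypotheses by how well they explain the observation.
    weights = weights * likelihood_fn(particles)
    total = weights.sum()
    if total <= 0.0:
        weights = np.full(n, 1.0 / n)  # all hypotheses rejected: reset
    else:
        weights = weights / total

    # Point estimate: weighted mean of the hypotheses.
    estimate = np.average(particles, axis=0, weights=weights)

    # Resample when the effective sample size drops below half of N.
    n_eff = 1.0 / np.sum(weights ** 2)
    if n_eff < 0.5 * n:
        idx = rng.choice(n, size=n, p=weights)
        particles = particles[idx]
        weights = np.full(n, 1.0 / n)

    return particles, weights, estimate

# Toy 1-D example: the true position advances by 1.0 per frame.
rng = np.random.default_rng(0)
particles = rng.normal(0.0, 1.0, size=(500, 1))
weights = np.full(500, 1.0 / 500)
true_pos = 0.0
for _ in range(5):
    true_pos += 1.0

    def likelihood(p, center=true_pos):
        # Gaussian likelihood around the (toy) observed position.
        return np.exp(-0.5 * ((p[:, 0] - center) / 0.2) ** 2)

    particles, weights, estimate = particle_filter_step(
        particles, weights, np.array([1.0]), likelihood, 0.1, rng)
```

Resampling only when the effective sample size is low is one common design choice; it limits the loss of hypothesis diversity that resampling at every frame can cause.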

BronchoTrack: Airway Lumen Tracking for Branch-Level Bronchoscopic Localization

Feb 20, 2024

Abstract: Localizing the bronchoscope in real time is essential for ensuring intervention quality. However, most existing methods struggle to balance speed and generalization. To address these challenges, we present BronchoTrack, an innovative real-time framework for accurate branch-level localization, encompassing lumen detection, tracking, and airway association. To achieve real-time performance, we employ a benchmark lightweight detector for efficient lumen detection. We are the first to introduce multi-object tracking to bronchoscopic localization, mitigating temporal confusion in lumen identification caused by rapid bronchoscope movement and complex airway structures. To ensure generalization across patient cases, we propose a training-free detection-airway association method based on a semantic airway graph that encodes the hierarchy of bronchial tree structures. Experiments on nine patient datasets demonstrate BronchoTrack's localization accuracy of 85.64%, while accessing up to the 4th generation of airways. Furthermore, we tested BronchoTrack in an in-vivo animal study using a porcine model, where it successfully localized the bronchoscope into the 8th generation airway. Experimental evaluation underscores BronchoTrack's real-time performance with satisfactory accuracy and generalization, demonstrating its potential for clinical applications.
