Zhijie Wang

Matrix Completion Via Reweighted Logarithmic Norm Minimization

Dec 24, 2025

Abstract:Low-rank matrix completion (LRMC) has demonstrated remarkable success in a wide range of applications. To address the NP-hard nature of the rank minimization problem, the nuclear norm is commonly used as a convex and computationally tractable surrogate for the rank function. However, this approach often yields suboptimal solutions due to the excessive shrinkage of singular values. In this letter, we propose a novel reweighted logarithmic norm as a more effective nonconvex surrogate, which provides a closer approximation than many existing alternatives. We efficiently solve the resulting optimization problem by employing the alternating direction method of multipliers (ADMM). Experimental results on image inpainting demonstrate that the proposed method achieves superior performance compared to state-of-the-art LRMC approaches, both in terms of visual quality and quantitative metrics.
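The "excessive shrinkage" mentioned in the abstract can be illustrated with a small sketch. It is not the paper's reweighted logarithmic norm or its ADMM solver: it only compares the standard nuclear-norm proximal step (uniform soft-thresholding) with one generic reweighted step obtained by linearizing a `log(sigma + eps)` penalty. The function names, `tau`, and `eps` are assumptions chosen for illustration.

```python
import numpy as np

def nuclear_norm_shrink(sigma, tau):
    """Proximal step for the nuclear norm: every singular value loses tau."""
    return np.maximum(sigma - tau, 0.0)

def log_reweighted_shrink(sigma, tau, eps=1e-2):
    """One reweighted step for a generic log surrogate (illustrative assumption).

    Linearizing log(sigma + eps) gives weights 1 / (sigma + eps), so large
    singular values are shrunk less and small ones are shrunk more.
    """
    weights = 1.0 / (sigma + eps)
    return np.maximum(sigma - tau * weights, 0.0)

# Hypothetical singular values of an observed matrix.
sigma = np.array([10.0, 5.0, 1.0, 0.1])
tau = 0.5

nuclear = nuclear_norm_shrink(sigma, tau)
reweighted = log_reweighted_shrink(sigma, tau)

print("nuclear   :", nuclear)
print("reweighted:", reweighted)
```

In this toy setting the largest singular value is reduced by 0.5 under the nuclear norm but by only about 0.05 under the reweighted step, while the smallest value is set to zero in both cases.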

RaSA: Rank-Sharing Low-Rank Adaptation

Mar 16, 2025

Abstract:Low-rank adaptation (LoRA) has been prominently employed for parameter-efficient fine-tuning of large language models (LLMs). However, the limited expressive capacity of LoRA, stemming from the low-rank constraint, has been recognized as a bottleneck, particularly in rigorous tasks like code generation and mathematical reasoning. To address this limitation, we introduce Rank-Sharing Low-Rank Adaptation (RaSA), an innovative extension that enhances the expressive capacity of LoRA by leveraging partial rank sharing across layers. By forming a shared rank pool and applying layer-specific weighting, RaSA effectively increases the number of ranks without augmenting parameter overhead. Our theoretically grounded and empirically validated approach demonstrates that RaSA not only maintains the core advantages of LoRA but also significantly boosts performance in challenging code and math tasks. Code, data and scripts are available at: https://github.com/zwhe99/RaSA.
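A rough sketch of the idea of a shared rank pool with layer-specific weighting follows. It is an illustration built only from the abstract's description, not the released implementation: the split into `r - k` private ranks plus a pool of `num_layers * k` shared ranks, the diagonal per-layer weights, and all variable names are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
num_layers, d_out, d_in = 4, 16, 16
r, k = 4, 1  # assumed: LoRA rank per layer, ranks each layer gives to the pool

# Layer-private low-rank factors with r - k ranks each.
B_private = [rng.normal(size=(d_out, r - k)) for _ in range(num_layers)]
A_private = [rng.normal(size=(r - k, d_in)) for _ in range(num_layers)]

# Shared pool of num_layers * k ranks, reused by every layer.
pool = num_layers * k
B_shared = rng.normal(size=(d_out, pool))
A_shared = rng.normal(size=(pool, d_in))

# Layer-specific weighting of the pool (one diagonal per layer).
D = [rng.normal(size=pool) for _ in range(num_layers)]

def layer_update(i):
    """Weight update for layer i: private part plus weighted shared part."""
    return B_private[i] @ A_private[i] + (B_shared * D[i]) @ A_shared

effective_rank = int(np.linalg.matrix_rank(layer_update(0)))
lora_params = num_layers * r * (d_in + d_out)
shared_params = (num_layers * (r - k) * (d_in + d_out)
                 + pool * (d_in + d_out) + num_layers * pool)

print("effective rank per layer:", effective_rank, "vs LoRA rank", r)
print("parameters:", shared_params, "vs LoRA", lora_params)
```

Under these assumptions each layer's update can reach a higher rank than plain LoRA with rank `r`, while the parameter count stays close to the LoRA budget (the only extra cost in this sketch is the small per-layer diagonal).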

WarmFed: Federated Learning with Warm-Start for Globalization and Personalization Via Personalized Diffusion Models

Mar 05, 2025

Abstract:Federated Learning (FL) stands as a prominent distributed learning paradigm among multiple clients to achieve a unified global model without privacy leakage. In contrast to FL, personalized federated learning aims to provide each client with a personalized model. However, previous FL frameworks have grappled with a dilemma: the choice between developing a singular global model at the server to bolster globalization or nurturing personalized models at the clients to accommodate personalization. Instead of making trade-offs, this paper starts from the pre-trained initialization, obtaining resilient global information and facilitating the development of both global and personalized models. Specifically, we propose a novel method called WarmFed to achieve this. WarmFed customizes Warm-Start through personalized diffusion models, which are generated by local efficient fine-tuning (LoRA). Building upon the Warm-Start, we advance a server-side fine-tuning strategy to derive the global model, and propose a dynamic self-distillation (DSD) to procure more resilient personalized models simultaneously. Comprehensive experiments underscore the substantial gains of our approach across both global and personalized models, achieved within just one-shot and five communication(s).

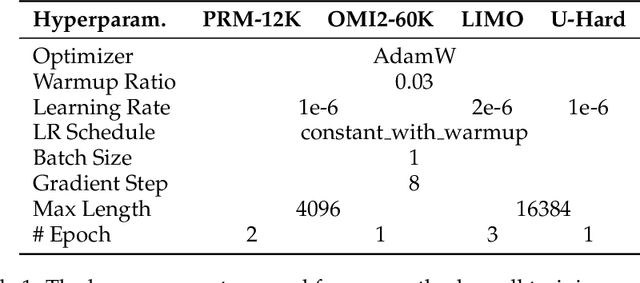

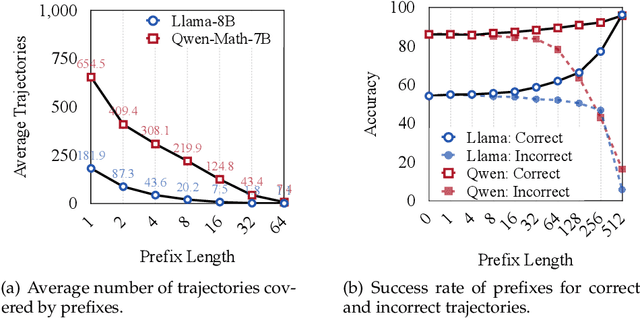

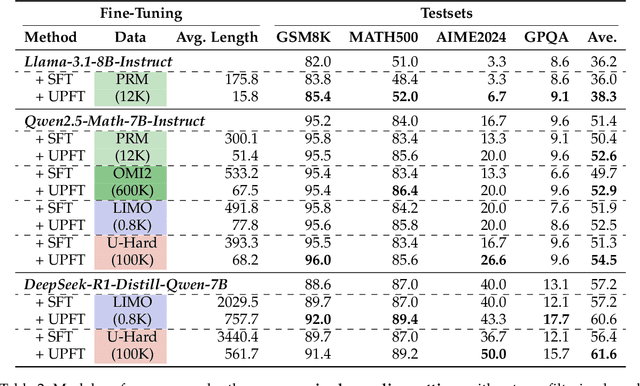

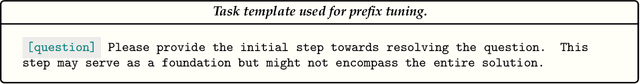

The First Few Tokens Are All You Need: An Efficient and Effective Unsupervised Prefix Fine-Tuning Method for Reasoning Models

Mar 04, 2025

Abstract:Improving the reasoning capabilities of large language models (LLMs) typically requires supervised fine-tuning with labeled data or computationally expensive sampling. We introduce Unsupervised Prefix Fine-Tuning (UPFT), which leverages the observation of Prefix Self-Consistency -- the shared initial reasoning steps across diverse solution trajectories -- to enhance LLM reasoning efficiency. By training exclusively on the initial prefix substrings (as few as 8 tokens), UPFT removes the need for labeled data or exhaustive sampling. Experiments on reasoning benchmarks show that UPFT matches the performance of supervised methods such as Rejection Sampling Fine-Tuning, while reducing training time by 75% and sampling cost by 99%. Further analysis reveals that errors tend to appear in later stages of the reasoning process and that prefix-based training preserves the model's structural knowledge. This work demonstrates how minimal unsupervised fine-tuning can unlock substantial reasoning gains in LLMs, offering a scalable and resource-efficient alternative to conventional approaches.

MFP-VTON: Enhancing Mask-Free Person-to-Person Virtual Try-On via Diffusion Transformer

Feb 03, 2025

Abstract:The garment-to-person virtual try-on (VTON) task, which aims to generate fitting images of a person wearing a reference garment, has made significant strides. However, obtaining a standard garment is often more challenging than using the garment already worn by the person. To improve ease of use, we propose MFP-VTON, a Mask-Free framework for Person-to-Person VTON. Recognizing the scarcity of person-to-person data, we adapt a garment-to-person model and dataset to construct a specialized dataset for this task. Our approach builds upon a pretrained diffusion transformer, leveraging its strong generative capabilities. During mask-free model fine-tuning, we introduce a Focus Attention loss to emphasize the garment of the reference person and the details outside the garment of the target person. Experimental results demonstrate that our model excels in both person-to-person and garment-to-person VTON tasks, generating high-fidelity fitting images.

IGR: Improving Diffusion Model for Garment Restoration from Person Image

Dec 16, 2024

Abstract:Garment restoration, the inverse of the virtual try-on task, focuses on restoring a standard garment from a person image, requiring accurate capture of garment details. However, existing methods often fail to preserve the identity of the garment or rely on complex processes. To address these limitations, we propose an improved diffusion model for restoring authentic garments. Our approach employs two garment extractors to independently capture low-level features and high-level semantics from the person image. Leveraging a pretrained latent diffusion model, these features are integrated into the denoising process through garment fusion blocks, which combine self-attention and cross-attention layers to align the restored garment with the person image. Furthermore, a coarse-to-fine training strategy is introduced to enhance the fidelity and authenticity of the generated garments. Experimental results demonstrate that our model effectively preserves garment identity and generates high-quality restorations, even in challenging scenarios such as complex garments or those with occlusions.

Towards Understanding Retrieval Accuracy and Prompt Quality in RAG Systems

Nov 29, 2024

Abstract:Retrieval-Augmented Generation (RAG) is a pivotal technique for enhancing the capability of large language models (LLMs) and has demonstrated promising efficacy across a diverse spectrum of tasks. While LLM-driven RAG systems show superior performance, they face unique challenges in stability and reliability. Their complexity hinders developers' efforts to design, maintain, and optimize effective RAG systems. Therefore, it is crucial to understand how RAG's performance is impacted by its design. In this work, we conduct an early exploratory study toward a better understanding of the mechanism of RAG systems, covering three code datasets, three QA datasets, and two LLMs. We focus on four design factors: retrieval document type, retrieval recall, document selection, and prompt techniques. Our study uncovers how each factor impacts system correctness and confidence, providing valuable insights for developing an accurate and reliable RAG system. Based on these findings, we present nine actionable guidelines for detecting defects and optimizing the performance of RAG systems. We hope our early exploration can inspire further advancements in engineering, improving and maintaining LLM-driven intelligent software systems for greater efficiency and reliability.

LADEV: A Language-Driven Testing and Evaluation Platform for Vision-Language-Action Models in Robotic Manipulation

Oct 07, 2024

Abstract:Building on the advancements of Large Language Models (LLMs) and Vision Language Models (VLMs), recent research has introduced Vision-Language-Action (VLA) models as an integrated solution for robotic manipulation tasks. These models take camera images and natural language task instructions as input and directly generate control actions for robots to perform specified tasks, greatly improving both decision-making capabilities and interaction with human users. However, the data-driven nature of VLA models, combined with their lack of interpretability, makes the assurance of their effectiveness and robustness a challenging task. This highlights the need for a reliable testing and evaluation platform. For this purpose, in this work, we propose LADEV, a comprehensive and efficient platform specifically designed for evaluating VLA models. We first present a language-driven approach that automatically generates simulation environments from natural language inputs, mitigating the need for manual adjustments and significantly improving testing efficiency. Then, to further assess the influence of language input on the VLA models, we implement a paraphrase mechanism that produces diverse natural language task instructions for testing. Finally, to expedite the evaluation process, we introduce a batch-style method for conducting large-scale testing of VLA models. Using LADEV, we conducted experiments on several state-of-the-art VLA models, demonstrating its effectiveness as a tool for evaluating these models. Our results showed that LADEV not only enhances testing efficiency but also establishes a solid baseline for evaluating VLA models, paving the way for the development of more intelligent and advanced robotic systems.

PromptCharm: Text-to-Image Generation through Multi-modal Prompting and Refinement

Mar 06, 2024

Abstract:The recent advancements in Generative AI have significantly advanced the field of text-to-image generation. The state-of-the-art text-to-image model, Stable Diffusion, is now capable of synthesizing high-quality images with a strong sense of aesthetics. Crafting text prompts that align with the model's interpretation and the user's intent thus becomes crucial. However, prompting remains challenging for novice users due to the complexity of the stable diffusion model and the non-trivial efforts required for iteratively editing and refining the text prompts. To address these challenges, we propose PromptCharm, a mixed-initiative system that facilitates text-to-image creation through multi-modal prompt engineering and refinement. To assist novice users in prompting, PromptCharm first automatically refines and optimizes the user's initial prompt. Furthermore, PromptCharm supports the user in exploring and selecting different image styles within a large database. To assist users in effectively refining their prompts and images, PromptCharm renders model explanations by visualizing the model's attention values. If the user notices any unsatisfactory areas in the generated images, they can further refine the images through model attention adjustment or image inpainting within the rich feedback loop of PromptCharm. To evaluate the effectiveness and usability of PromptCharm, we conducted a controlled user study with 12 participants and an exploratory user study with another 12 participants. These two studies show that participants using PromptCharm were able to create images with higher quality and better aligned with the user's expectations compared with using two variants of PromptCharm that lacked interaction or visualization support.

Look Before You Leap: An Exploratory Study of Uncertainty Measurement for Large Language Models

Jul 16, 2023

Abstract:The recent performance leap of Large Language Models (LLMs) opens up new opportunities across numerous industrial applications and domains. However, erroneous generations, such as false predictions, misinformation, and hallucination made by LLMs, have also raised severe concerns for the trustworthiness of LLMs, especially in safety-, security- and reliability-sensitive scenarios, potentially hindering real-world adoption. While uncertainty estimation has shown its potential for interpreting the prediction risks made by general machine learning (ML) models, little is known about whether and to what extent it can help explore an LLM's capabilities and counteract its undesired behavior. To bridge the gap, in this paper, we initiate an exploratory study on the risk assessment of LLMs from the lens of uncertainty. In particular, we experiment with twelve uncertainty estimation methods and four LLMs on four prominent natural language processing (NLP) tasks to investigate to what extent uncertainty estimation techniques could help characterize the prediction risks of LLMs. Our findings validate the effectiveness of uncertainty estimation for revealing LLMs' uncertain/non-factual predictions. In addition to general NLP tasks, we extensively conduct experiments with four LLMs for code generation on two datasets. We find that uncertainty estimation can potentially uncover buggy programs generated by LLMs. Insights from our study shed light on future design and development for reliable LLMs, facilitating further research toward enhancing the trustworthiness of LLMs.
