Zhichao Zhang

ELIQ: A Label-Free Framework for Quality Assessment of Evolving AI-Generated Images

Feb 03, 2026

Abstract: Generative text-to-image models are advancing at an unprecedented pace, continuously shifting the perceptual quality ceiling and rendering previously collected labels unreliable for newer generations of models. To address this, we present ELIQ, a Label-free Framework for Quality Assessment of Evolving AI-generated Images. Specifically, ELIQ focuses on visual quality and prompt-image alignment: it automatically constructs positive and aspect-specific negative pairs that cover both conventional distortions and AIGC-specific distortion modes, enabling transferable supervision without human annotations. Building on these pairs, ELIQ adapts a pre-trained multimodal model into a quality-aware critic via instruction tuning and predicts two-dimensional quality using lightweight gated fusion and a Quality Query Transformer. Experiments across multiple benchmarks demonstrate that ELIQ consistently outperforms existing label-free methods, generalizes from AI-generated content (AIGC) to user-generated content (UGC) scenarios without modification, and paves the way for scalable, label-free quality assessment under continuously evolving generative models. The code will be released upon publication.
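
The abstract does not specify how ELIQ's lightweight gated fusion is built. As a rough illustration only, assuming the common pattern of a learned scalar gate that blends two feature streams before a small two-output head (visual quality, alignment), a stdlib-only Python sketch could look like this. `gated_fusion`, `two_dim_head`, and all weights are hypothetical, and the Quality Query Transformer is not modeled.

```python
import math
from typing import List, Sequence


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def gated_fusion(a: Sequence[float], b: Sequence[float],
                 w_gate: Sequence[float], b_gate: float) -> List[float]:
    """Blend two equal-length feature vectors with a single scalar gate.

    Hypothetical sketch: the gate g is a logistic function of the
    concatenated features [a; b], and the output is g*a + (1 - g)*b.
    """
    if len(a) != len(b) or len(w_gate) != 2 * len(a):
        raise ValueError("dimension mismatch")
    concat = list(a) + list(b)
    g = sigmoid(sum(w * x for w, x in zip(w_gate, concat)) + b_gate)
    return [g * x + (1.0 - g) * y for x, y in zip(a, b)]


def two_dim_head(feat: Sequence[float], weights: Sequence[Sequence[float]],
                 bias: Sequence[float]) -> List[float]:
    """Hypothetical linear head: one weight row per output score."""
    return [sum(w * x for w, x in zip(row, feat)) + b0
            for row, b0 in zip(weights, bias)]


# Zero gate weights give g = 0.5, i.e. a plain average of the two streams.
fused = gated_fusion([1.0, 3.0], [3.0, 1.0], [0.0] * 4, 0.0)
scores = two_dim_head(fused, [[0.5, 0.5], [1.0, -1.0]], [0.0, 0.0])
print(fused, scores)  # [2.0, 2.0] [2.0, 0.0]
```

A single scalar gate adds only 2d + 1 parameters for d-dimensional features; a per-channel gate is a common, slightly heavier alternative.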

Decoupling Perception and Calibration: Label-Efficient Image Quality Assessment Framework

Jan 28, 2026

Abstract: Recent multimodal large language models (MLLMs) have demonstrated strong capabilities in image quality assessment (IQA). However, adapting such large-scale models is computationally expensive and still relies on substantial Mean Opinion Score (MOS) annotations. We argue that for MLLM-based IQA, the core bottleneck lies not in the quality perception capacity of MLLMs but in MOS scale calibration. We therefore propose LEAF, a Label-Efficient Image Quality Assessment Framework that distills perceptual quality priors from an MLLM teacher into a lightweight student regressor, enabling MOS calibration with minimal human supervision. Specifically, the teacher provides dense supervision through point-wise judgments and pair-wise preferences, together with an estimate of decision reliability. Guided by these signals, the student learns the teacher's quality perception patterns through joint distillation and is then calibrated on a small MOS subset to align with human annotations. Experiments on both user-generated and AI-generated IQA benchmarks demonstrate that our method significantly reduces the need for human annotations while maintaining strong correlations with MOS, making lightweight IQA practical under limited annotation budgets.
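
The abstract does not say how the student is calibrated on the MOS subset. The simplest version of the idea, assumed here purely for illustration, is an affine map fitted by least squares on the few labeled images and then applied to every student prediction. The function names and data are hypothetical.

```python
from statistics import mean
from typing import List, Sequence, Tuple


def fit_linear_calibration(pred: Sequence[float],
                           mos: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of mos ~= a * pred + b on a small labeled subset."""
    if len(pred) != len(mos) or len(pred) < 2:
        raise ValueError("need at least two paired samples")
    mp, mm = mean(pred), mean(mos)
    var = sum((p - mp) ** 2 for p in pred)
    if var == 0:
        raise ValueError("constant predictions: slope is undefined")
    cov = sum((p - mp) * (m - mm) for p, m in zip(pred, mos))
    a = cov / var
    return a, mm - a * mp


def calibrate(pred: Sequence[float], a: float, b: float) -> List[float]:
    """Map raw student scores onto the MOS scale."""
    return [a * p + b for p in pred]


# Hypothetical data: four images with MOS labels out of a larger unlabeled pool.
a, b = fit_linear_calibration([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
print(a, b)                         # 2.0 1.0
print(calibrate([0.5, 2.5], a, b))  # [2.0, 6.0]
```

Because an affine map with a > 0 preserves both ranking and linear correlation, it only aligns scale and offset; a calibration that also improves correlation with MOS would need a more expressive mapping or fine-tuning of the student itself.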

JFRFFNet: A Data-Model Co-Driven Graph Signal Denoising Model with Partial Prior Information

Sep 11, 2025

Abstract: Wiener filtering in the joint time-vertex fractional Fourier transform (JFRFT) domain has proven highly effective for denoising time-varying graph signals. Traditional filtering models use grid search to determine the transform-order pair and compute the filter coefficients, while learnable models optimize them by gradient descent; both require complete prior information about the graph signals. To overcome this limitation, this letter proposes a data-model co-driven denoising approach, termed neural-network-aided joint time-vertex fractional Fourier filtering (JFRFFNet), which embeds the JFRFT-domain Wiener filter model into a neural network and updates the transform-order pair and filter coefficients in a data-driven manner. This design enables effective denoising using only partial prior information. Experiments demonstrate that JFRFFNet achieves significant improvements in output signal-to-noise ratio compared with some state-of-the-art methods.
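
The abstract takes the JFRFT-domain Wiener filter as given. To show what computing filter coefficients from complete prior information means in the simplest setting, the sketch below makes strong assumptions: a single time snapshot of a signal on a cycle graph (whose graph Fourier basis can be chosen as the DFT basis), the fractional order fixed at 1 so the transform reduces to an ordinary Fourier transform, and oracle signal and noise power spectra. It illustrates only the per-frequency Wiener gain; the JFRFT itself, the order-pair search, and JFRFFNet's learned parameters are not implemented.

```python
import cmath
import math
import random
from typing import List, Sequence


def dft(x: Sequence[complex]) -> List[complex]:
    """Unnormalized DFT. On a cycle graph the DFT basis is a valid graph
    Fourier basis, so this doubles as a GFT (assumption of this sketch)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spec: Sequence[complex]) -> List[complex]:
    """Inverse of dft() above."""
    n = len(spec)
    return [sum(spec[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def wiener_gains(signal_power: Sequence[float],
                 noise_power: Sequence[float]) -> List[float]:
    """Per-frequency Wiener gain h_k = S_k / (S_k + N_k)."""
    return [s / (s + v) if s + v > 0 else 0.0
            for s, v in zip(signal_power, noise_power)]


def wiener_denoise(y: Sequence[float], signal_power: Sequence[float],
                   noise_power: Sequence[float]) -> List[float]:
    """Transform, scale each frequency bin by its Wiener gain, transform back."""
    spec = dft(y)
    gains = wiener_gains(signal_power, noise_power)
    return [v.real for v in idft([h * s for h, s in zip(gains, spec)])]


n, sigma = 16, 0.3
rng = random.Random(0)
clean = [math.cos(2 * math.pi * t / n) for t in range(n)]
noisy = [c + rng.gauss(0.0, sigma) for c in clean]

# Oracle priors ("complete prior information"): the clean spectrum is known,
# and white noise of variance sigma^2 has expected power n * sigma^2 per bin.
sig_pow = [abs(c) ** 2 for c in dft(clean)]
noise_pow = [n * sigma ** 2] * n
denoised = wiener_denoise(noisy, sig_pow, noise_pow)


def mse(u: Sequence[float]) -> float:
    """Mean squared error against the clean signal."""
    return sum((p - q) ** 2 for p, q in zip(u, clean)) / n


print(f"MSE before: {mse(noisy):.4f}, after: {mse(denoised):.4f}")
```

Replacing the oracle spectra with estimates, and the DFT with a fractional transform whose orders are searched or learned, is where the approaches described in the abstract differ.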

VQualA 2025 Challenge on Visual Quality Comparison for Large Multimodal Models: Methods and Results

Sep 11, 2025

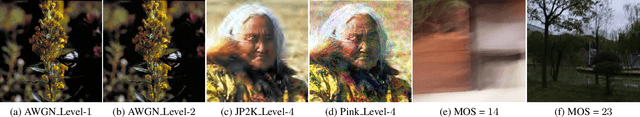

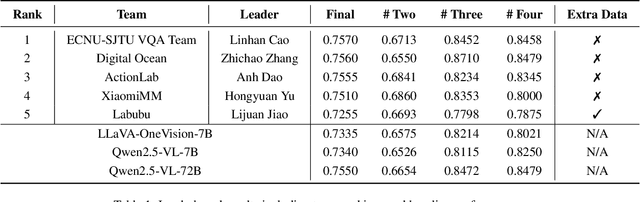

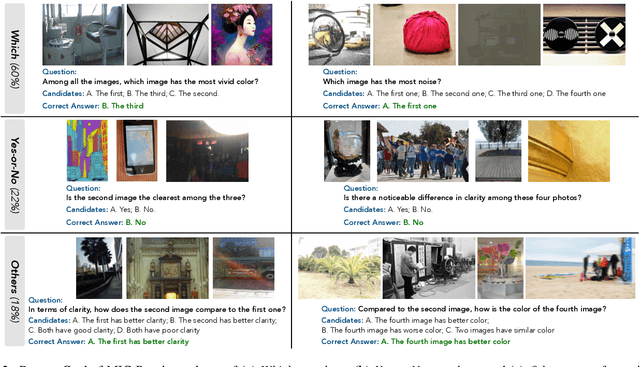

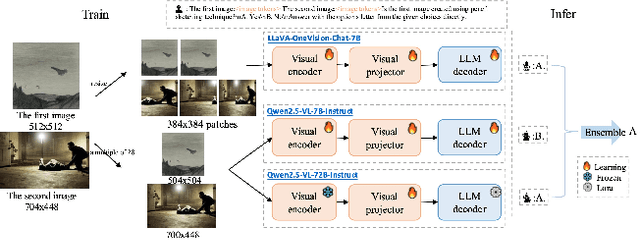

Abstract: This paper presents a summary of the VQualA 2025 Challenge on Visual Quality Comparison for Large Multimodal Models (LMMs), hosted as part of the ICCV 2025 Workshop on Visual Quality Assessment. The challenge aims to evaluate and enhance the ability of state-of-the-art LMMs to perform open-ended, detailed reasoning about visual quality differences across multiple images. To this end, the competition introduces a novel benchmark comprising thousands of coarse-to-fine-grained visual quality comparison tasks, spanning single images, pairs, and multi-image groups. Each task requires models to provide accurate quality judgments. The competition emphasizes holistic evaluation protocols, including 2AFC-based binary preference and multi-choice questions (MCQs). Around 100 participants submitted entries, with five models demonstrating the emerging capabilities of instruction-tuned LMMs on quality assessment. This challenge marks a significant step toward open-domain visual quality reasoning and comparison and serves as a catalyst for future research on interpretable and human-aligned quality evaluation systems.
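
The abstract names 2AFC-based binary preference and multi-choice questions as evaluation protocols but does not give the scoring rule. Assuming plain accuracy against a reference answer (an assumption, not the challenge's documented metric), the computation is short; `pick_preferred`, its tie-breaking rule, and the example data are hypothetical.

```python
from typing import Sequence


def pick_preferred(score_a: float, score_b: float) -> int:
    """Return 0 if image A is judged better, else 1 (ties go to A by assumption)."""
    return 0 if score_a >= score_b else 1


def choice_accuracy(model: Sequence[int], reference: Sequence[int]) -> float:
    """Fraction of items where the model's choice matches the reference answer.

    Works for 2AFC pairs (choices 0/1) and for multi-choice questions alike.
    """
    if len(model) != len(reference) or not model:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(m == r for m, r in zip(model, reference)) / len(model)


# Hypothetical model scores for four image pairs and the human-preferred image.
pairs = [(0.8, 0.3), (0.2, 0.6), (0.5, 0.4), (0.7, 0.9)]
human = [0, 1, 1, 1]
model = [pick_preferred(a, b) for a, b in pairs]
print(model, choice_accuracy(model, human))  # [0, 1, 0, 1] 0.75
```

With two options, chance-level accuracy is 0.5, which is the natural floor to compare 2AFC results against.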

Multiple-Parameter Graph Fractional Fourier Transform: Theory and Applications

Jul 31, 2025

Abstract: The graph fractional Fourier transform (GFRFT) applies a single global fractional order to all graph frequencies, which restricts its adaptability to diverse signal characteristics across the spectral domain. To address this limitation, we propose two types of multiple-parameter GFRFTs (MPGFRFTs) and establish their corresponding theoretical frameworks. We design a spectral compression strategy tailored for ultra-low compression ratios that preserves essential information even under extreme dimensionality reduction. To enhance flexibility, we introduce a learnable order vector scheme that enables adaptive compression and denoising, demonstrating strong performance on both graph signals and images. We also explore the application of MPGFRFTs to image encryption and decryption. Experimental results validate the versatility and superior performance of the proposed MPGFRFT framework across various graph signal processing tasks.
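
For reference, the single-order GFRFT mentioned in the abstract is commonly defined through an eigendecomposition of the graph Fourier transform matrix. One natural way to assign a separate order to each eigenvalue, which is what an order vector suggests, is sketched below. This is an illustrative form under stated assumptions, not necessarily either of the two MPGFRFT types proposed in the paper.

```latex
% Assumed setting: F is the N x N graph Fourier transform matrix,
% diagonalizable as F = P J P^{-1} with J = diag(\lambda_1, ..., \lambda_N).
\begin{align}
  F^{\alpha} &= P\, J^{\alpha} P^{-1}
    = P\,\operatorname{diag}\!\left(\lambda_1^{\alpha}, \dots, \lambda_N^{\alpha}\right) P^{-1}
    && \text{(single global order } \alpha\text{)} \\
  F^{\boldsymbol{\alpha}} &= P\,\operatorname{diag}\!\left(\lambda_1^{\alpha_1}, \dots, \lambda_N^{\alpha_N}\right) P^{-1},
    \quad \boldsymbol{\alpha} = (\alpha_1, \dots, \alpha_N)
    && \text{(one order per eigenvalue)}
\end{align}
```

Setting every alpha_k equal to alpha recovers the single-order GFRFT, alpha_k = 1 for all k recovers F itself, and alpha_k = 0 for all k gives the identity. For complex eigenvalues, each power lambda_k^{alpha_k} requires a choice of branch.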

NTIRE 2025 challenge on Text to Image Generation Model Quality Assessment

May 22, 2025

Abstract: This paper reports on the NTIRE 2025 challenge on Text to Image (T2I) generation model quality assessment, held in conjunction with the New Trends in Image Restoration and Enhancement Workshop (NTIRE) at CVPR 2025. The aim of this challenge is to address the fine-grained quality assessment of text-to-image generation models. The challenge evaluates text-to-image models from two aspects, image-text alignment and image structural distortion detection, and is accordingly divided into an alignment track and a structural track. The alignment track uses EvalMuse-40K, which contains around 40K AI-Generated Images (AIGIs) generated by 20 popular generative models. The alignment track had a total of 371 registered participants; 1,883 submissions were received in the development phase and 507 in the test phase. Finally, 12 participating teams submitted their models and fact sheets. The structural track uses EvalMuse-Structure, which contains 10,000 AIGIs with corresponding structural distortion masks. A total of 211 participants registered for the structural track; 1,155 submissions were received in the development phase and 487 in the test phase. Finally, 8 participating teams submitted their models and fact sheets. Almost all methods achieved better results than the baseline methods, and the winning methods in both tracks demonstrated superior prediction performance on T2I model quality assessment.

AGHI-QA: A Subjective-Aligned Dataset and Metric for AI-Generated Human Images

Apr 30, 2025

Abstract: The rapid development of text-to-image (T2I) generation approaches has attracted extensive interest in evaluating the quality of generated images, leading to various quality assessment methods for general-purpose T2I outputs. However, existing image quality assessment (IQA) methods are limited to providing global quality scores and fail to deliver fine-grained perceptual evaluations for structurally complex subjects such as humans, a critical shortcoming given the frequent anatomical and textural distortions in AI-generated human images (AGHIs). To address this gap, we introduce AGHI-QA, the first large-scale benchmark specifically designed for quality assessment of AGHIs. The dataset comprises 4,000 images generated from 400 carefully crafted text prompts using 10 state-of-the-art T2I models. We conduct a systematic subjective study to collect multidimensional annotations, including perceptual quality scores, text-image correspondence scores, and labels for visible and distorted body parts. Based on AGHI-QA, we evaluate the strengths and weaknesses of current T2I methods in generating human images across multiple dimensions. Furthermore, we propose AGHI-Assessor, a novel quality metric that integrates a large multimodal model (LMM) with domain-specific human features for precise quality prediction and identification of visible and distorted body parts in AGHIs. Extensive experimental results demonstrate that AGHI-Assessor achieves state-of-the-art performance, significantly outperforming existing IQA methods in multidimensional quality assessment and surpassing leading LMMs in detecting structural distortions in AGHIs.

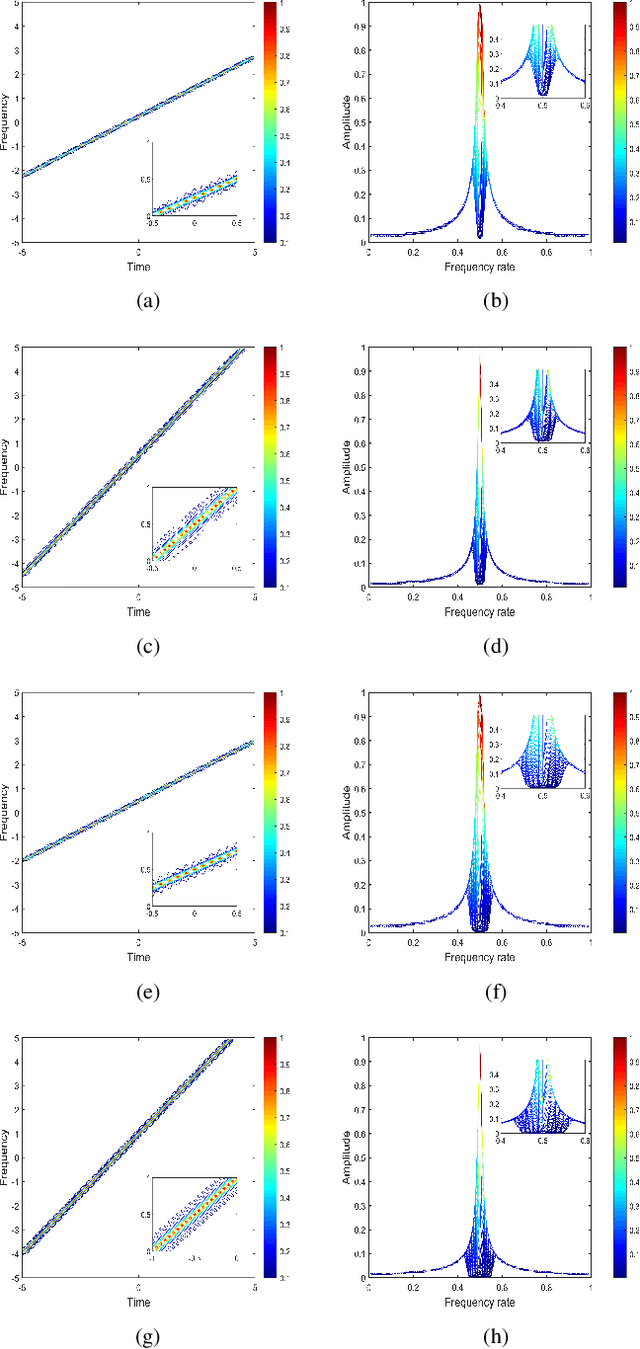

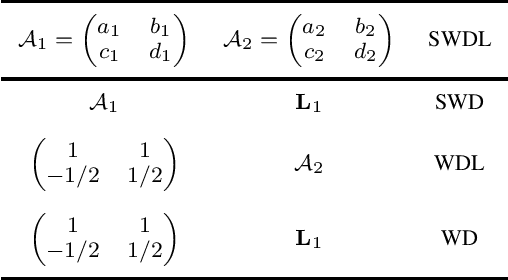

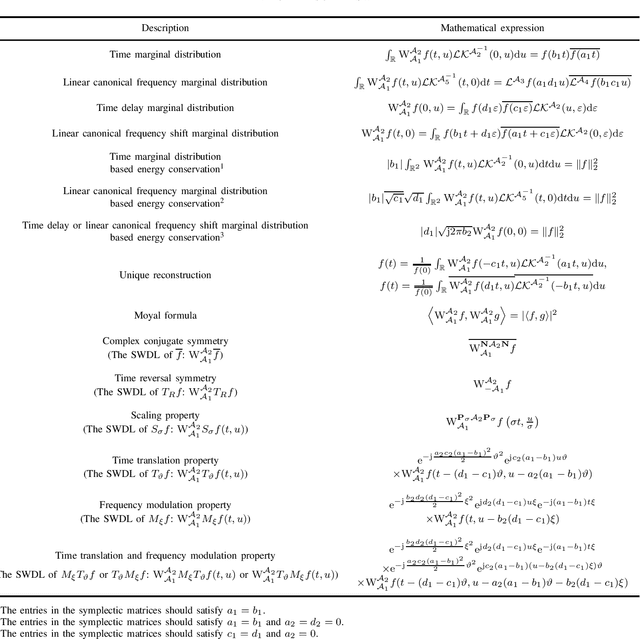

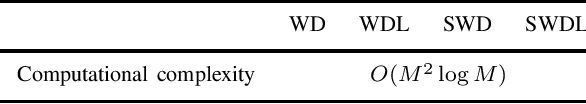

Symplectic Wigner Distribution in the Linear Canonical Transform Domain: Theory and Application

Mar 13, 2025

Abstract: This paper combines the chirp basis function transformation and the symplectic coordinate transformation to yield a novel Wigner distribution (WD) associated with the linear canonical transform (LCT), named the symplectic WD in the LCT domain (SWDL). The SWDL incorporates the merits of both the symplectic WD (SWD) and the WD in the LCT domain (WDL), achieving a stronger capability for extracting the frequency-rate features of linear frequency-modulated (LFM) signals while maintaining the same level of computational complexity. Several essential properties of the SWDL are derived, including marginal distributions, energy conservation, unique reconstruction, the Moyal formula, complex conjugate symmetry, time reversal symmetry, the scaling property, the time translation property, the frequency modulation property, and the combined time translation and frequency modulation property. Heisenberg's uncertainty principles of the SWDL are formulated, giving rise to three kinds of lower bounds, attainable respectively by a Gaussian-enveloped complex exponential signal, a Gaussian signal, and a Gaussian-enveloped chirp signal. The optimal symplectic matrices corresponding to the highest time-frequency resolution are obtained by solving the lower-bound minimization problem. The time-frequency resolution of the SWDL is compared with those of the SWD and WDL to demonstrate its superior time-frequency energy concentration for LFM signals. A synthesis example is also carried out to verify the feasibility and reliability of the theoretical analysis.
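
For readers outside time-frequency analysis, the two classical objects that the SWDL builds on are the Wigner distribution and the linear canonical transform. Their standard definitions are given below (LCT sign and normalization conventions vary across texts; one common convention is used), followed by a one-line derivation showing why the WD suits frequency-rate analysis of LFM signals: it concentrates on the line omega = omega_0 + k t, whose slope k is the frequency rate. The SWDL's own definition is not reproduced here.

```latex
% Classical Wigner distribution of a signal f(t)
W_f(t,\omega) = \int_{-\infty}^{\infty}
  f\!\left(t+\tfrac{\tau}{2}\right) f^{*}\!\left(t-\tfrac{\tau}{2}\right)
  e^{-j\omega\tau}\, d\tau

% Linear canonical transform with parameter matrix A = [a b; c d], ad - bc = 1, b \neq 0
\mathcal{L}_{A}[f](u) = \frac{1}{\sqrt{j2\pi b}} \int_{-\infty}^{\infty}
  f(t)\, \exp\!\left( j\,\frac{a t^{2} - 2ut + d u^{2}}{2b} \right) dt

% Worked example: for the LFM signal f(t) = e^{j(\omega_0 t + k t^2/2)},
% f(t+\tau/2) f^*(t-\tau/2) = e^{j(\omega_0 + k t)\tau}, hence
W_f(t,\omega) = 2\pi\, \delta\!\left(\omega - \omega_0 - k t\right)
```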

Standard Heisenberg's uncertainty principles of Cohen's class time-frequency distribution with specific kernels

Mar 13, 2025

Abstract: The time-frequency concentration and resolution of Cohen's class time-frequency distribution (CCTFD) have attracted much attention in time-frequency analysis. A variety of uncertainty principles of the CCTFD have therefore been derived, including the weak Heisenberg type, the Hardy type, the Nazarov type, and the local type. However, the standard Heisenberg type remains unresolved. In this study, we address the question of how the standard Heisenberg uncertainty principle of the CCTFD is affected by fundamental distribution properties. The investigated properties are Parseval's relation and the concise frequency-domain definition (i.e., only frequency variables appear explicitly in the tensor product), based on which we confine our attention to the CCTFD with specific kernels, namely the unit-modulus and v-independent time translation, reversal and scaling invariant kernel CCTFD (UMITRSK-CCTFD). We then extend the standard Heisenberg uncertainty principles of the Wigner distribution to the UMITRSK-CCTFD, yielding various types of attainable lower bounds on the uncertainty product in the UMITRSK-CCTFD domain. The derived results strengthen the existing weak Heisenberg type and fill the gap in the standard Heisenberg type.
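
The kernel-based definition of Cohen's class makes the "specific kernels" in the abstract concrete. Using one common normalization (conventions for the 2 pi factors vary across texts), the CCTFD, its reduction to the Wigner distribution, and the unit-modulus condition read as follows. The abstract's other kernel conditions (v-independence and the time translation, reversal, and scaling invariances) are not written out because their exact form depends on the paper's notation.

```latex
% Cohen's class with kernel \phi(\theta,\tau), normalized so that \phi \equiv 1 gives the WD
C_f(t,\omega) = \frac{1}{2\pi} \iiint
  f\!\left(u+\tfrac{\tau}{2}\right) f^{*}\!\left(u-\tfrac{\tau}{2}\right)
  \phi(\theta,\tau)\, e^{-j\theta t - j\tau\omega + j\theta u}\, du\, d\tau\, d\theta

% Check: with \phi \equiv 1, \int e^{j\theta(u-t)}\, d\theta = 2\pi\,\delta(u-t), so
C_f(t,\omega) = \int f\!\left(t+\tfrac{\tau}{2}\right) f^{*}\!\left(t-\tfrac{\tau}{2}\right)
  e^{-j\omega\tau}\, d\tau = W_f(t,\omega)

% Unit-modulus kernel condition
|\phi(\theta,\tau)| = 1 \quad \text{for all } (\theta,\tau)
```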

Graph Chirp Signal and Graph Fractional Vertex-Frequency Energy Distribution

Mar 10, 2025

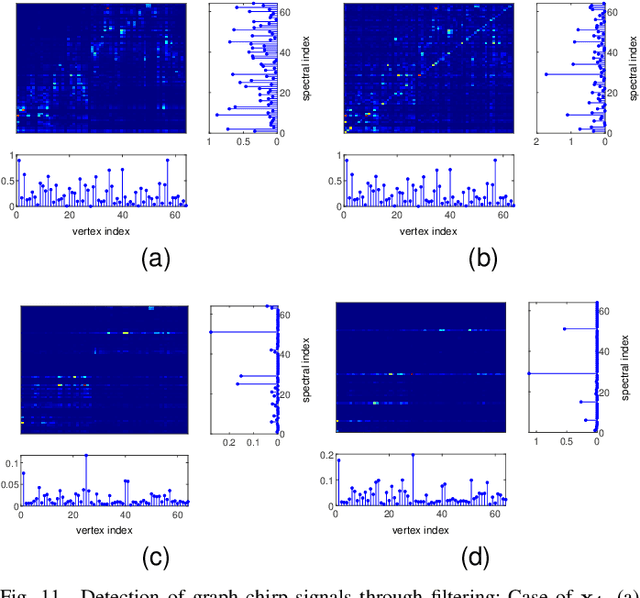

Abstract: Graph signal processing (GSP) has emerged as a powerful framework for analyzing data on irregular domains. In recent years, many classical signal processing (SP) techniques have been successfully extended to GSP. In classical SP, chirp signals play a crucial role in various applications. However, despite their importance, graph chirp signals have not been formally defined. Here, we define graph chirp signals and establish a comprehensive theoretical framework for their analysis. We propose the graph fractional vertex-frequency energy distribution (GFED), which provides a powerful tool for processing and analyzing graph chirp signals. We introduce the general fractional graph distribution (GFGD), a generalized vertex-frequency distribution, and the reduced-interference GFED, which can suppress cross-term interference and enhance signal clarity. Furthermore, we propose a novel method for detecting graph signals through GFED-domain filtering, facilitating robust detection and analysis of graph chirp signals in noisy environments. Moreover, when applied to real-world data, this method denoises more effectively than some state-of-the-art methods, further demonstrating its practical significance.
