Zhang Liu

Task Assignment and Exploration Optimization for Low Altitude UAV Rescue via Generative AI Enhanced Multi-agent Reinforcement Learning

Apr 18, 2025

Abstract:Artificial Intelligence (AI)-driven convolutional neural networks enhance rescue, inspection, and surveillance tasks performed by low-altitude uncrewed aerial vehicles (UAVs) and ground computing nodes (GCNs) in unknown environments. However, their high computational demands often exceed a single UAV's capacity, leading to system instability, further exacerbated by the limited and dynamic resources of GCNs. To address these challenges, this paper proposes a novel cooperation framework involving UAVs, ground-embedded robots (GERs), and high-altitude platforms (HAPs), which enable resource pooling through UAV-to-GER (U2G) and UAV-to-HAP (U2H) communications to provide computing services for UAV offloaded tasks. Specifically, we formulate the multi-objective optimization problem of task assignment and exploration optimization in UAVs as a dynamic long-term optimization problem. Our objective is to minimize task completion time and energy consumption while ensuring system stability over time. To achieve this, we first employ the Lyapunov optimization technique to transform the original problem, with stability constraints, into a per-slot deterministic problem. We then propose an algorithm named HG-MADDPG, which combines the Hungarian algorithm with a generative diffusion model (GDM)-based multi-agent deep deterministic policy gradient (MADDPG) approach. We first introduce the Hungarian algorithm as a method for exploration area selection, enhancing UAV efficiency in interacting with the environment. We then innovatively integrate the GDM and multi-agent deep deterministic policy gradient (MADDPG) to optimize task assignment decisions, such as task offloading and resource allocation. Simulation results demonstrate the effectiveness of the proposed approach, with significant improvements in task offloading efficiency, latency reduction, and system stability compared to baseline methods.
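The abstract above names the Hungarian algorithm as the method for exploration area selection. As a rough illustration only, the sketch below is a textbook Hungarian (Kuhn-Munkres) solver for a minimum-cost assignment. The cost matrix, read here as a hypothetical travel cost from each UAV (row) to each candidate area (column), and the function name `hungarian` are assumptions for illustration; the paper's actual cost definition and implementation are not given in the abstract.

```python
def hungarian(cost):
    """Minimum-cost assignment for an n x m cost matrix with n <= m.

    Returns (total_cost, assignment), where assignment[i] is the column
    (hypothetically: exploration area) chosen for row i (hypothetically: UAV).
    """
    n, m = len(cost), len(cost[0])
    INF = float("inf")
    u = [0.0] * (n + 1)      # row potentials (1-indexed)
    v = [0.0] * (m + 1)      # column potentials (1-indexed)
    p = [0] * (m + 1)        # p[j] = row currently matched to column j, 0 = none
    way = [0] * (m + 1)      # back-pointers along the augmenting path

    for i in range(1, n + 1):
        p[0] = i
        j0 = 0
        minv = [INF] * (m + 1)
        used = [False] * (m + 1)
        while True:
            used[j0] = True
            i0 = p[j0]
            delta = INF
            j1 = 0
            # Find the unused column with the smallest reduced cost.
            for j in range(1, m + 1):
                if not used[j]:
                    cur = cost[i0 - 1][j - 1] - u[i0] - v[j]
                    if cur < minv[j]:
                        minv[j] = cur
                        way[j] = j0
                    if minv[j] < delta:
                        delta = minv[j]
                        j1 = j
            # Shift the potentials so that at least one new edge becomes tight.
            for j in range(m + 1):
                if used[j]:
                    u[p[j]] += delta
                    v[j] -= delta
                else:
                    minv[j] -= delta
            j0 = j1
            if p[j0] == 0:
                break
        # Flip the matching along the augmenting path that was found.
        while j0:
            j1 = way[j0]
            p[j0] = p[j1]
            j0 = j1

    assignment = [-1] * n
    for j in range(1, m + 1):
        if p[j]:
            assignment[p[j] - 1] = j - 1
    total = sum(cost[i][assignment[i]] for i in range(n))
    return total, assignment


# Hypothetical costs: 3 UAVs (rows) x 3 candidate areas (columns).
example_cost = [[4, 1, 3],
                [2, 0, 5],
                [3, 2, 2]]
total, assignment = hungarian(example_cost)
```

For this made-up matrix the solver pairs UAV 0 with area 1, UAV 1 with area 0, and UAV 2 with area 2, for a total cost of 5.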

Why Reasoning Matters? A Survey of Advancements in Multimodal Reasoning (v1)

Apr 04, 2025

Abstract:Reasoning is central to human intelligence, enabling structured problem-solving across diverse tasks. Recent advances in large language models (LLMs) have greatly enhanced their reasoning abilities in arithmetic, commonsense, and symbolic domains. However, effectively extending these capabilities into multimodal contexts, where models must integrate both visual and textual inputs, continues to be a significant challenge. Multimodal reasoning introduces complexities, such as handling conflicting information across modalities, which require models to adopt advanced interpretative strategies. Addressing these challenges involves not only sophisticated algorithms but also robust methodologies for evaluating reasoning accuracy and coherence. This paper offers a concise yet insightful overview of reasoning techniques in both textual and multimodal LLMs. Through a thorough and up-to-date comparison, we clearly formulate core reasoning challenges and opportunities, highlighting practical methods for post-training optimization and test-time inference. Our work provides valuable insights and guidance, bridging theoretical frameworks and practical implementations, and sets clear directions for future research.

Generative AI for Lyapunov Optimization Theory in UAV-based Low-Altitude Economy Networking

Jan 27, 2025

Abstract:Lyapunov optimization theory has recently emerged as a powerful mathematical framework for solving complex stochastic optimization problems by transforming long-term objectives into a sequence of real-time short-term decisions while ensuring system stability. This theory is particularly valuable in unmanned aerial vehicle (UAV)-based low-altitude economy (LAE) networking scenarios, where it could effectively address inherent challenges of dynamic network conditions, multiple optimization objectives, and stability requirements. Recently, generative artificial intelligence (GenAI) has garnered significant attention for its unprecedented capability to generate diverse digital content. Extending beyond content generation, in this paper, we propose a framework integrating generative diffusion models with reinforcement learning to address Lyapunov optimization problems in UAV-based LAE networking. We begin by introducing the fundamentals of Lyapunov optimization theory and analyzing the limitations of both conventional methods and traditional AI-enabled approaches. We then examine various GenAI models and comprehensively analyze their potential contributions to Lyapunov optimization. Subsequently, we develop a Lyapunov-guided generative diffusion model-based reinforcement learning framework and validate its effectiveness through a UAV-based LAE networking case study. Finally, we outline several directions for future research.
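The transformation of a long-term objective into per-slot decisions that the abstract above describes is usually written in drift-plus-penalty form. The following is the standard textbook statement for a single queue, given only as orientation. The symbols $Q(t)$ (queue backlog), $a(t)$ (arrivals), $b(t)$ (service), $p(t)$ (per-slot penalty), $V$ (trade-off weight), and $B$ (a finite constant) are assumed notation, not taken from the paper.

```latex
\begin{align}
  Q(t+1) &= \max\{Q(t) - b(t),\, 0\} + a(t), \\
  L(Q(t)) &= \tfrac{1}{2}\, Q(t)^2, \\
  \Delta(Q(t)) &= \mathbb{E}\big[\, L(Q(t+1)) - L(Q(t)) \,\big|\, Q(t) \big]
     \;\le\; B + Q(t)\, \mathbb{E}\big[\, a(t) - b(t) \,\big|\, Q(t) \big], \\
  \text{per slot:}\quad & \min \;\; V\, p(t) + Q(t)\,\big(a(t) - b(t)\big).
\end{align}
```

Here $B$ upper-bounds $\tfrac{1}{2}\,\mathbb{E}[a(t)^2 + b(t)^2 \mid Q(t)]$. Minimizing the last expression in every slot trades the penalty against queue growth: a larger $V$ favors a lower penalty at the price of longer queues.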

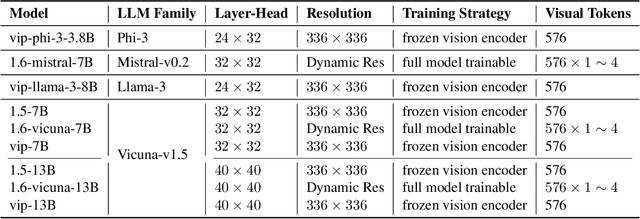

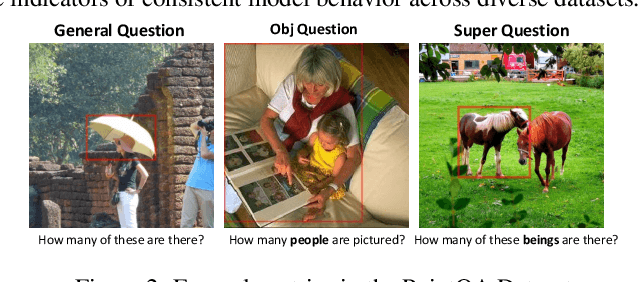

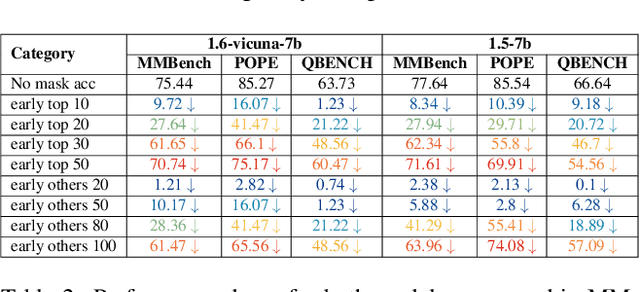

Unveiling Visual Perception in Language Models: An Attention Head Analysis Approach

Dec 24, 2024

Abstract:Recent advancements in Multimodal Large Language Models (MLLMs) have demonstrated remarkable progress in visual understanding. This impressive leap raises a compelling question: how can language models, initially trained solely on linguistic data, effectively interpret and process visual content? This paper aims to address this question with systematic investigation across 4 model families and 4 model scales, uncovering a unique class of attention heads that focus specifically on visual content. Our analysis reveals a strong correlation between the behavior of these attention heads, the distribution of attention weights, and their concentration on visual tokens within the input. These findings enhance our understanding of how LLMs adapt to multimodal tasks, demonstrating their potential to bridge the gap between textual and visual understanding. This work paves the way for the development of AI systems capable of engaging with diverse modalities.

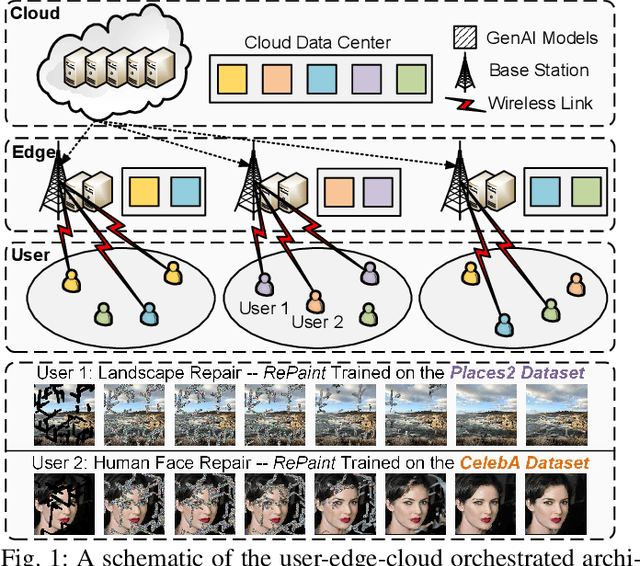

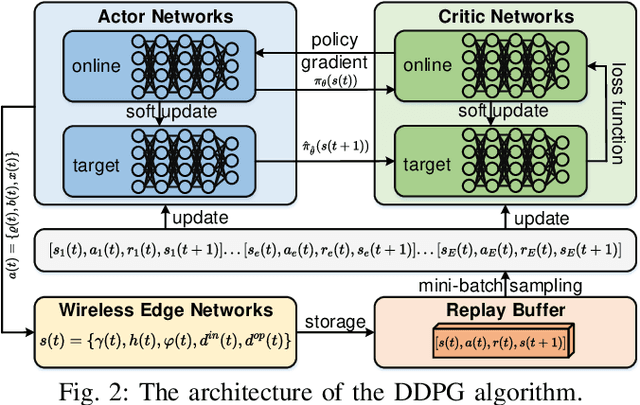

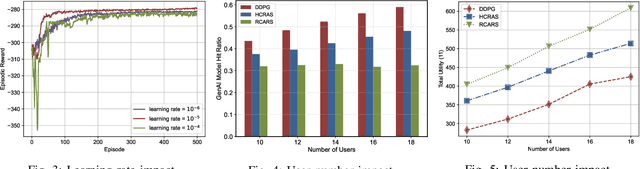

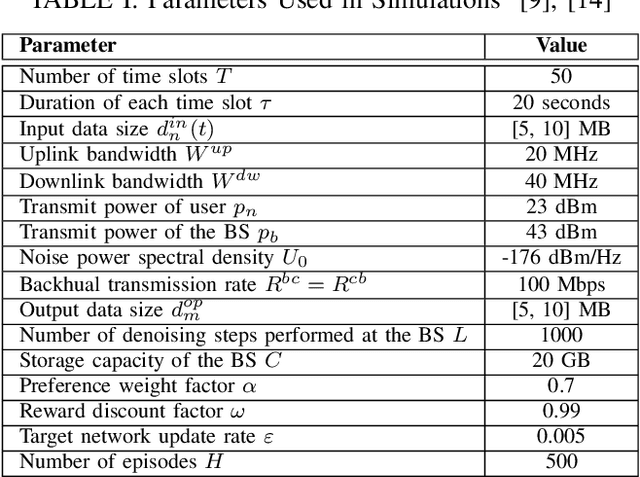

Joint Model Caching and Resource Allocation in Generative AI-Enabled Wireless Edge Networks

Nov 13, 2024

Abstract:With the rapid advancement of artificial intelligence (AI), generative AI (GenAI) has emerged as a transformative tool, enabling customized and personalized AI-generated content (AIGC) services. However, GenAI models with billions of parameters require substantial memory capacity and computational power for deployment and execution, presenting significant challenges to resource-limited edge networks. In this paper, we address the joint model caching and resource allocation problem in GenAI-enabled wireless edge networks. Our objective is to balance the trade-off between delivering high-quality AIGC and minimizing the delay in AIGC service provisioning. To tackle this problem, we employ a deep deterministic policy gradient (DDPG)-based reinforcement learning approach, capable of efficiently determining optimal model caching and resource allocation decisions for AIGC services in response to user mobility and time-varying channel conditions. Numerical results demonstrate that DDPG achieves a higher model hit ratio and provides superior-quality, lower-latency AIGC services compared to other benchmark solutions.

Two-Timescale Model Caching and Resource Allocation for Edge-Enabled AI-Generated Content Services

Nov 03, 2024

Abstract:Generative AI (GenAI) has emerged as a transformative technology, enabling customized and personalized AI-generated content (AIGC) services. In this paper, we address challenges of edge-enabled AIGC service provisioning, which remain underexplored in the literature. These services require executing GenAI models with billions of parameters, posing significant obstacles to resource-limited wireless edge. We subsequently introduce the formulation of joint model caching and resource allocation for AIGC services to balance a trade-off between AIGC quality and latency metrics. We obtain mathematical relationships of these metrics with the computational resources required by GenAI models via experimentation. Afterward, we decompose the formulation into a model caching subproblem on a long-timescale and a resource allocation subproblem on a short-timescale. Since the variables to be solved are discrete and continuous, respectively, we leverage a double deep Q-network (DDQN) algorithm to solve the former subproblem and propose a diffusion-based deep deterministic policy gradient (D3PG) algorithm to solve the latter. The proposed D3PG algorithm makes an innovative use of diffusion models as the actor network to determine optimal resource allocation decisions. Consequently, we integrate these two learning methods within the overarching two-timescale deep reinforcement learning (T2DRL) algorithm, the performance of which is studied through comparative numerical simulations.
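The abstract above assigns the discrete model caching subproblem to a double deep Q-network (DDQN). As a minimal sketch of the generic DDQN idea only, the function below computes the double-Q bootstrap target: the online network selects the next action and the target network evaluates it. The function name, the plain-list Q-value inputs, and the default discount factor are assumptions for illustration; the paper's state, action, and reward definitions are not specified in the abstract.

```python
def double_dqn_target(reward, next_q_online, next_q_target, gamma=0.99, done=False):
    """Generic double-DQN target for one transition (illustrative sketch).

    next_q_online: Q-values of the next state from the online network.
    next_q_target: Q-values of the next state from the target network.
    """
    if done:
        # Terminal transition: no bootstrapping.
        return reward
    # Action selection uses the online network ...
    best_action = max(range(len(next_q_online)), key=lambda a: next_q_online[a])
    # ... while action evaluation uses the target network.
    return reward + gamma * next_q_target[best_action]


# Hypothetical numbers: the online network prefers action 1,
# and the target network values that action at 2.0.
y = double_dqn_target(1.0, [0.2, 0.9, 0.5], [1.0, 2.0, 3.0], gamma=0.5)
```

Decoupling selection from evaluation in this way is what distinguishes double DQN from the original DQN target, which takes the maximum over the target network's own values.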

DNN Partitioning, Task Offloading, and Resource Allocation in Dynamic Vehicular Networks: A Lyapunov-Guided Diffusion-Based Reinforcement Learning Approach

Jun 11, 2024

Abstract:The rapid advancement of Artificial Intelligence (AI) has introduced Deep Neural Network (DNN)-based tasks to the ecosystem of vehicular networks. These tasks are often computation-intensive, requiring substantial computation resources, which are beyond the capability of a single vehicle. To address this challenge, Vehicular Edge Computing (VEC) has emerged as a solution, offering computing services for DNN-based tasks through resource pooling via Vehicle-to-Vehicle/Infrastructure (V2V/V2I) communications. In this paper, we formulate the problem of joint DNN partitioning, task offloading, and resource allocation in VEC as a dynamic long-term optimization. Our objective is to minimize the DNN-based task completion time while guaranteeing the system stability over time. To this end, we first leverage a Lyapunov optimization technique to decouple the original long-term optimization with stability constraints into a per-slot deterministic problem. Afterwards, we propose a Multi-Agent Diffusion-based Deep Reinforcement Learning (MAD2RL) algorithm, incorporating the innovative use of diffusion models to determine the optimal DNN partitioning and task offloading decisions. Furthermore, we integrate convex optimization techniques into MAD2RL as a subroutine to allocate computation resources, enhancing the learning efficiency. Through simulations under real-world movement traces of vehicles, we demonstrate the superior performance of our proposed algorithm compared to existing benchmark solutions.

GainNet: Coordinates the Odd Couple of Generative AI and 6G Networks

Jan 05, 2024

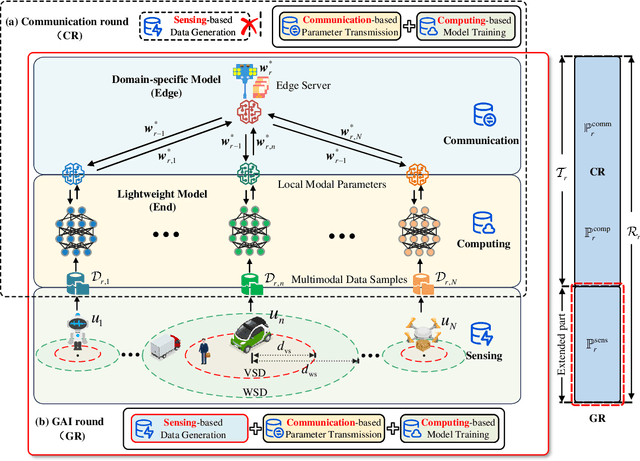

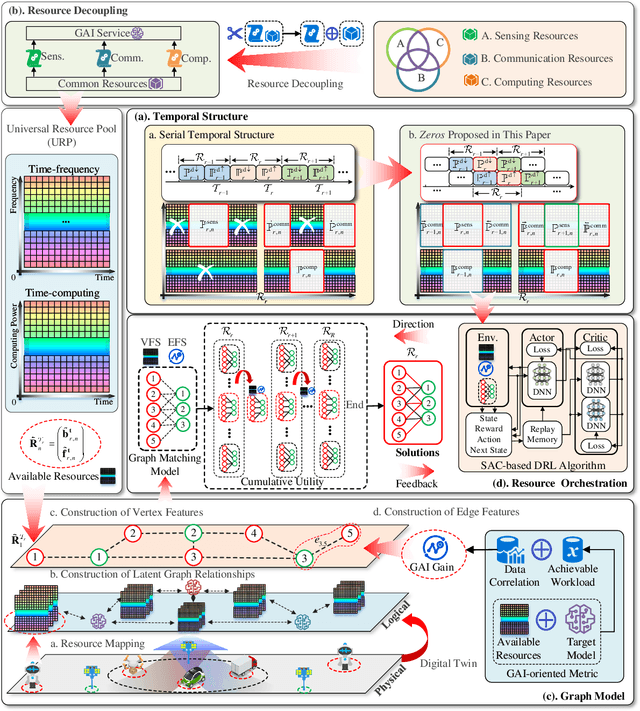

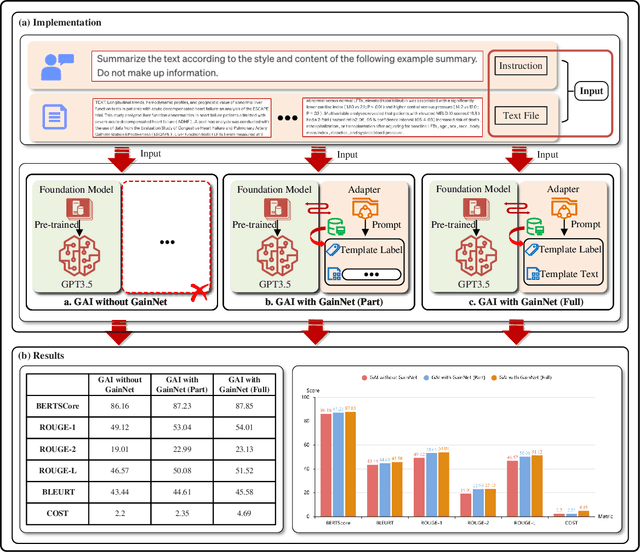

Abstract:The rapid expansion of AI-generated content (AIGC) reflects the iteration from assistive AI towards generative AI (GAI) with creativity. Meanwhile, the 6G networks will also evolve from the Internet-of-everything to the Internet-of-intelligence with hybrid heterogeneous network architectures. In the future, the interplay between GAI and the 6G will lead to new opportunities, where GAI can learn the knowledge of personalized data from the massive connected 6G end devices, while GAI's powerful generation ability can provide advanced network solutions for 6G network and provide 6G end devices with various AIGC services. However, they seem to be an odd couple, due to the contradiction of data and resources. To achieve a better-coordinated interplay between GAI and 6G, the GAI-native networks (GainNet), a GAI-oriented collaborative cloud-edge-end intelligence framework, is proposed in this paper. By deeply integrating GAI with 6G network design, GainNet realizes the positive closed-loop knowledge flow and sustainable-evolution GAI model optimization. On this basis, the GAI-oriented generic resource orchestration mechanism with integrated sensing, communication, and computing (GaiRom-ISCC) is proposed to guarantee the efficient operation of GainNet. Two simple case studies demonstrate the effectiveness and robustness of the proposed schemes. Finally, we envision the key challenges and future directions concerning the interplay between GAI models and 6G networks.

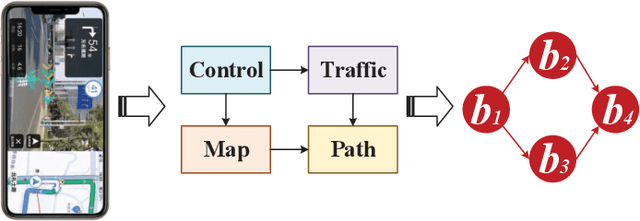

GA-DRL: Graph Neural Network-Augmented Deep Reinforcement Learning for DAG Task Scheduling over Dynamic Vehicular Clouds

Jul 03, 2023

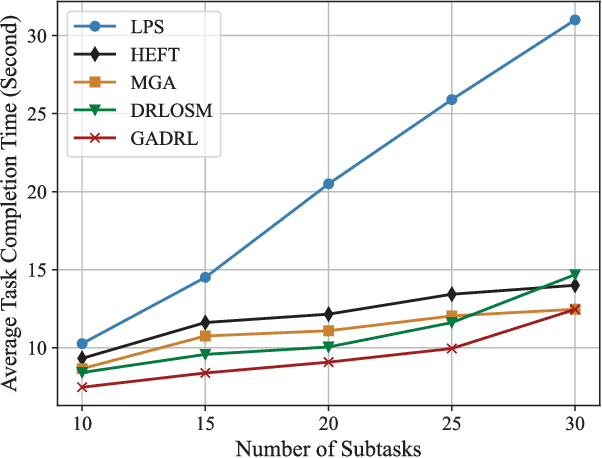

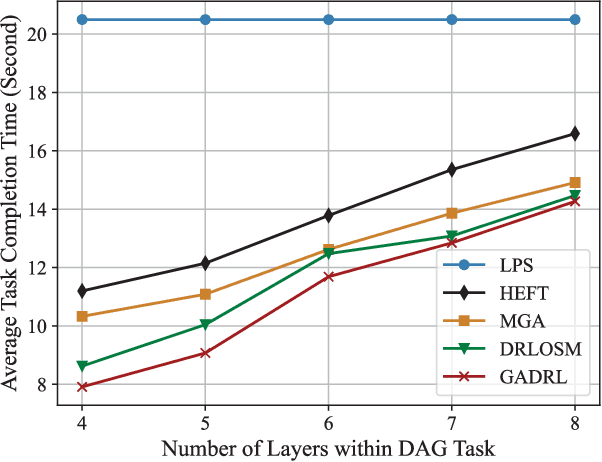

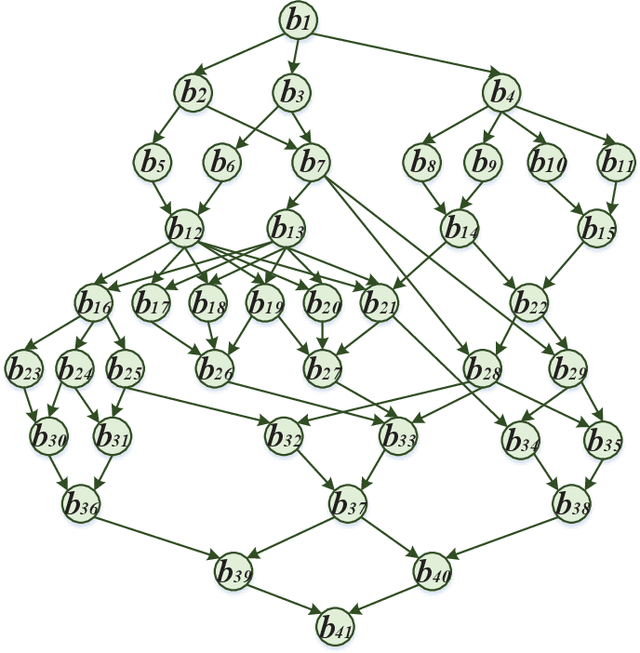

Abstract:Vehicular clouds (VCs) are modern platforms for processing of computation-intensive tasks over vehicles. Such tasks are often represented as directed acyclic graphs (DAGs) consisting of interdependent vertices/subtasks and directed edges. In this paper, we propose a graph neural network-augmented deep reinforcement learning scheme (GA-DRL) for scheduling DAG tasks over dynamic VCs. In doing so, we first model the VC-assisted DAG task scheduling as a Markov decision process. We then adopt a multi-head graph attention network (GAT) to extract the features of DAG subtasks. Our developed GAT enables a two-way aggregation of the topological information in a DAG task by simultaneously considering predecessors and successors of each subtask. We further introduce non-uniform DAG neighborhood sampling through codifying the scheduling priority of different subtasks, which makes our developed GAT generalizable to completely unseen DAG task topologies. Finally, we augment GAT into a double deep Q-network learning module to conduct subtask-to-vehicle assignment according to the extracted features of subtasks, while considering the dynamics and heterogeneity of the vehicles in VCs. Through simulating various DAG tasks under real-world movement traces of vehicles, we demonstrate that GA-DRL outperforms existing benchmarks in terms of DAG task completion time.

Anomaly Crossing: A New Method for Video Anomaly Detection as Cross-domain Few-shot Learning

Dec 14, 2021

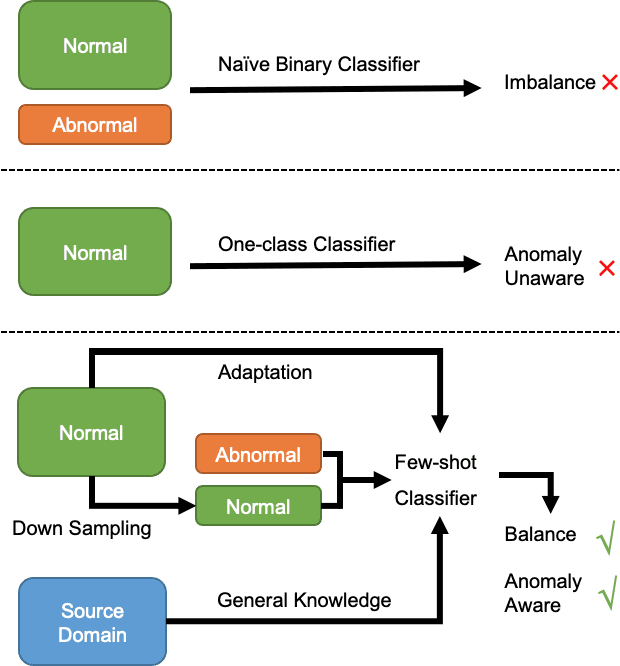

Abstract:Video anomaly detection aims to identify abnormal events that occurred in videos. Since anomalous events are relatively rare, it is not feasible to collect a balanced dataset and train a binary classifier to solve the task. Thus, most previous approaches learn only from normal videos using unsupervised or semi-supervised methods. Obviously, they are limited in capturing and utilizing discriminative abnormal characteristics, which leads to compromised anomaly detection performance. In this paper, to address this issue, we propose a new learning paradigm by making full use of both normal and abnormal videos for video anomaly detection. In particular, we formulate a new learning task: cross-domain few-shot anomaly detection, which can transfer knowledge learned from numerous videos in the source domain to help solve few-shot abnormality detection in the target domain. Concretely, we leverage self-supervised training on the target normal videos to reduce the domain gap and devise a meta context perception module to explore the video context of the event in the few-shot setting. Our experiments show that our method significantly outperforms baseline methods on DoTA and UCF-Crime datasets, and the new task contributes to a more practical training paradigm for anomaly detection.
