Xuwei Fan

Privacy-Aware Joint DNN Model Deployment and Partition Optimization for Delay-Efficient Collaborative Edge Inference

Feb 22, 2025

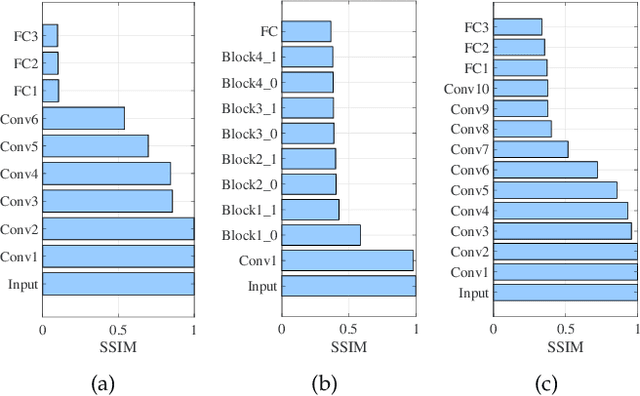

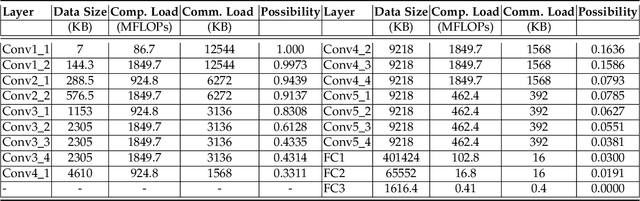

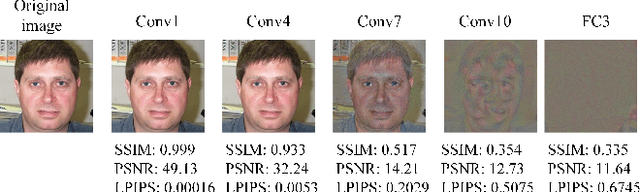

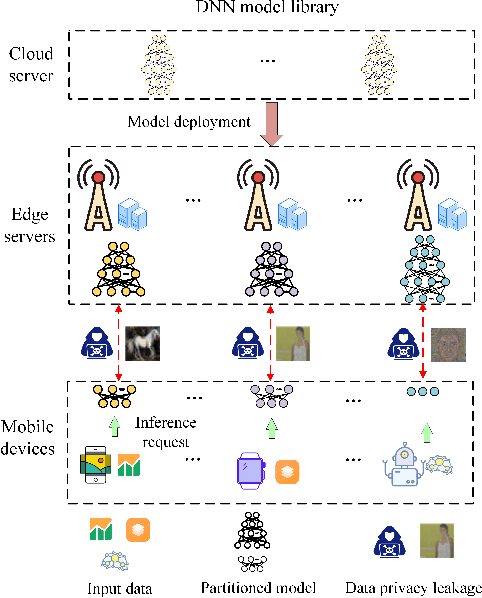

Abstract:Edge inference (EI) is a key solution to address the growing challenges of delayed response times, limited scalability, and privacy concerns in cloud-based Deep Neural Network (DNN) inference. However, deploying DNN models on resource-constrained edge devices faces more severe challenges, such as model storage limitations, dynamic service requests, and privacy risks. This paper proposes a novel framework for privacy-aware joint DNN model deployment and partition optimization to minimize long-term average inference delay under resource and privacy constraints. Specifically, the problem is formulated as a complex optimization problem considering model deployment, user-server association, and model partition strategies. To handle the NP-hardness and future uncertainties, a Lyapunov-based approach is introduced to transform the long-term optimization into a single-time-slot problem, ensuring system performance. Additionally, a coalition formation game model is proposed for edge server association, and a greedy-based algorithm is developed for model deployment within each coalition to efficiently solve the problem. Extensive simulations show that the proposed algorithms effectively reduce inference delay while satisfying privacy constraints, outperforming baseline approaches in various scenarios.

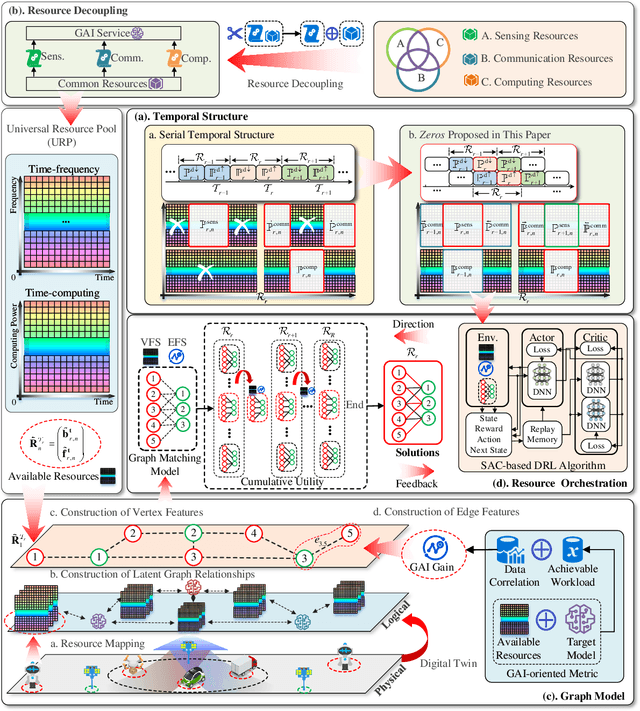

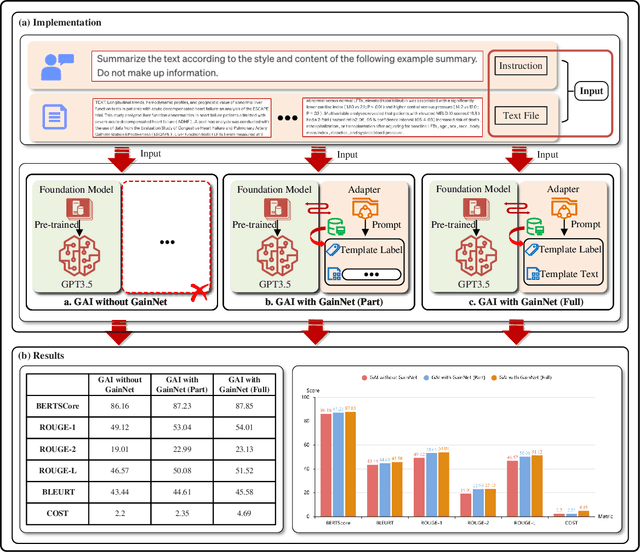

GainNet: Coordinates the Odd Couple of Generative AI and 6G Networks

Jan 05, 2024

Abstract:The rapid expansion of AI-generated content (AIGC) reflects the iteration from assistive AI towards generative AI (GAI) with creativity. Meanwhile, the 6G networks will also evolve from the Internet-of-everything to the Internet-of-intelligence with hybrid heterogeneous network architectures. In the future, the interplay between GAI and the 6G will lead to new opportunities, where GAI can learn the knowledge of personalized data from the massive connected 6G end devices, while GAI's powerful generation ability can provide advanced network solutions for 6G network and provide 6G end devices with various AIGC services. However, they seem to be an odd couple, due to the contradiction of data and resources. To achieve a better-coordinated interplay between GAI and 6G, the GAI-native networks (GainNet), a GAI-oriented collaborative cloud-edge-end intelligence framework, is proposed in this paper. By deeply integrating GAI with 6G network design, GainNet realizes the positive closed-loop knowledge flow and sustainable-evolution GAI model optimization. On this basis, the GAI-oriented generic resource orchestration mechanism with integrated sensing, communication, and computing (GaiRom-ISCC) is proposed to guarantee the efficient operation of GainNet. Two simple case studies demonstrate the effectiveness and robustness of the proposed schemes. Finally, we envision the key challenges and future directions concerning the interplay between GAI models and 6G networks.

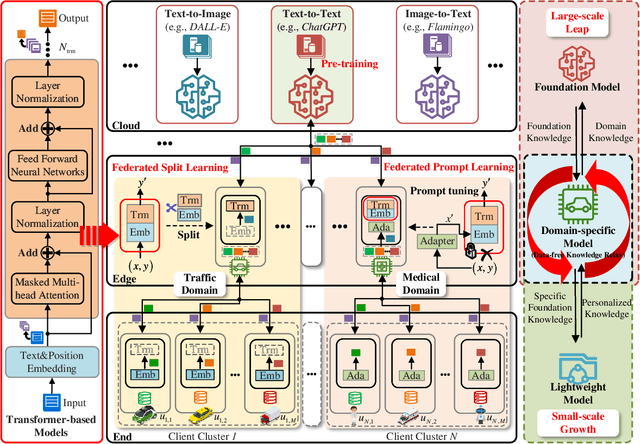

Towards Integrated Fine-tuning and Inference when Generative AI meets Edge Intelligence

Jan 05, 2024

Abstract:The high-performance generative artificial intelligence (GAI) represents the latest evolution of computational intelligence, while the blessing of future 6G networks also makes edge intelligence (EI) full of development potential. The inevitable encounter between GAI and EI can unleash new opportunities, where GAI's pre-training based on massive computing resources and large-scale unlabeled corpora can provide strong foundational knowledge for EI, while EI can harness fragmented computing resources to aggregate personalized knowledge for GAI. However, the natural contradictory features pose significant challenges to direct knowledge sharing. To address this, in this paper, we propose the GAI-oriented synthetical network (GaisNet), a collaborative cloud-edge-end intelligence framework that buffers contradiction leveraging data-free knowledge relay, where the bidirectional knowledge flow enables GAI's virtuous-cycle model fine-tuning and task inference, achieving mutualism between GAI and EI with seamless fusion and collaborative evolution. Experimental results demonstrate the effectiveness of the proposed mechanisms. Finally, we discuss the future challenges and directions in the interplay between GAI and EI.

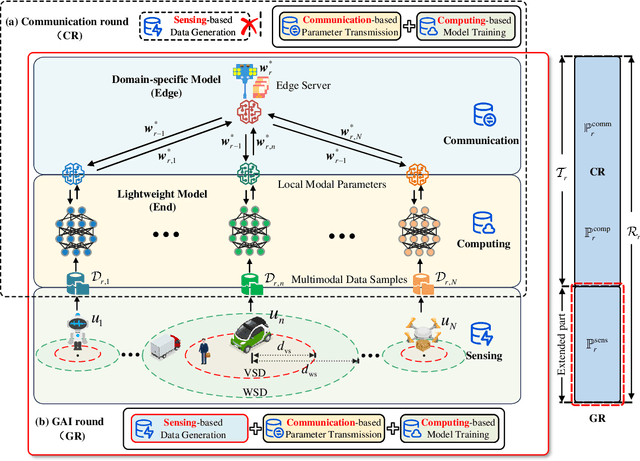

Integrated Sensing, Communication, and Computing for Cost-effective Multimodal Federated Perception

Nov 07, 2023

Abstract:Federated learning (FL) is a classic paradigm of 6G edge intelligence (EI), which alleviates privacy leaks and high communication pressure caused by traditional centralized data processing in the artificial intelligence of things (AIoT). The implementation of multimodal federated perception (MFP) services involves three sub-processes, including sensing-based multimodal data generation, communication-based model transmission, and computing-based model training, ultimately relying on available underlying multi-domain physical resources such as time, frequency, and computing power. How to reasonably coordinate the multi-domain resources scheduling among sensing, communication, and computing, therefore, is crucial to the MFP networks. To address the above issues, this paper investigates service-oriented resource management with integrated sensing, communication, and computing (ISCC). With the incentive mechanism of the MFP service market, the resources management problem is redefined as a social welfare maximization problem, where the idea of "expanding resources" and "reducing costs" is used to improve learning performance gain and reduce resource costs. Experimental results demonstrate the effectiveness and robustness of the proposed resource scheduling mechanisms.

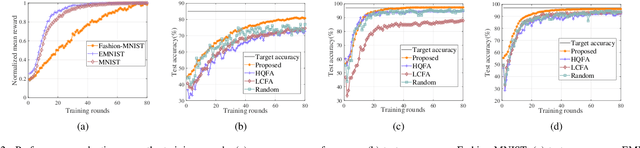

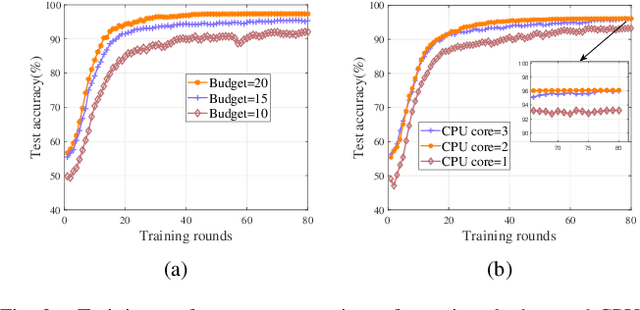

Learning-Based Client Selection for Federated Learning Services Over Wireless Networks with Constrained Monetary Budgets

Aug 08, 2022

Abstract:We investigate a data quality-aware dynamic client selection problem for multiple federated learning (FL) services in a wireless network, where each client has dynamic datasets for the simultaneous training of multiple FL services and each FL service demander has to pay for the clients with constrained monetary budgets. The problem is formalized as a non-cooperative Markov game over the training rounds. A multi-agent hybrid deep reinforcement learning-based algorithm is proposed to optimize the joint client selection and payment actions, while avoiding action conflicts. Simulation results indicate that our proposed algorithm can significantly improve the training performance.

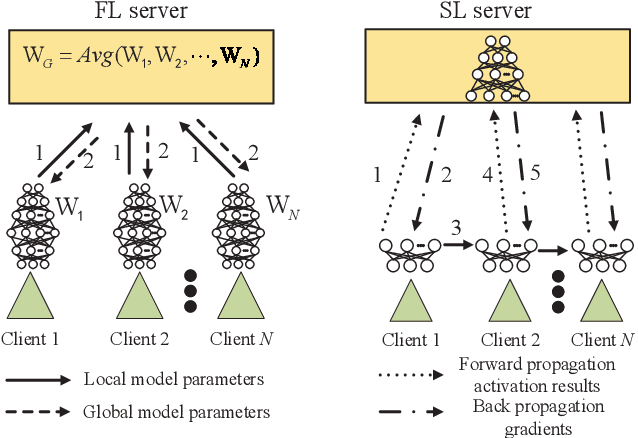

Hybrid Architectures for Distributed Machine Learning in Heterogeneous Wireless Networks

Jun 04, 2022

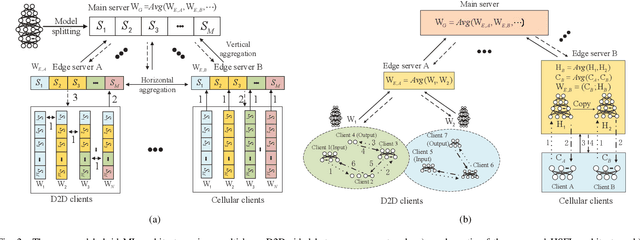

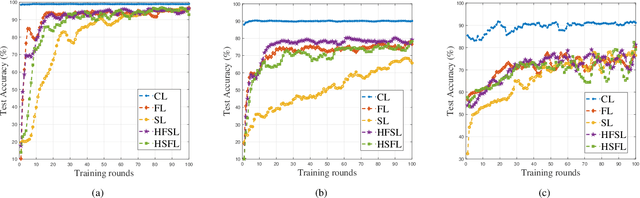

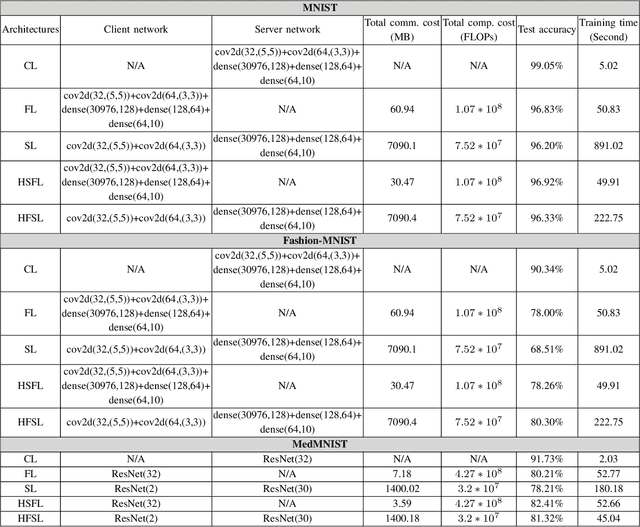

Abstract:The ever-growing data privacy concerns have transformed machine learning (ML) architectures from centralized to distributed, leading to federated learning (FL) and split learning (SL) as the two most popular privacy-preserving ML paradigms. However, implementing either conventional FL or SL alone with diverse network conditions (e.g., device-to-device (D2D) and cellular communications) and heterogeneous clients (e.g., heterogeneous computation/communication/energy capabilities) may face significant challenges, particularly poor architecture scalability and long training time. To this end, this article proposes two novel hybrid distributed ML architectures, namely, hybrid split FL (HSFL) and hybrid federated SL (HFSL), by combining the advantages of both FL and SL in D2D-enabled heterogeneous wireless networks. Specifically, the performance comparison and advantages of HSFL and HFSL are analyzed generally. Promising open research directions are presented to offer commendable reference for future research. Finally, primary simulations are conducted upon considering three datasets under non-independent and identically distributed settings, to verify the feasibility of our proposed architectures, which can significantly reduce communication/computation cost and training time, as compared with conventional FL and SL.
