Yi Liao

Deep learning-based neurodevelopmental assessment in preterm infants

Jan 17, 2026

Abstract:Preterm infants (born between 28 and 37 weeks of gestation) face elevated risks of neurodevelopmental delays, making early identification crucial for timely intervention. While deep learning-based volumetric segmentation of brain MRI scans offers a promising avenue for assessing neonatal neurodevelopment, achieving accurate segmentation of white matter (WM) and gray matter (GM) in preterm infants remains challenging due to their comparable signal intensities (isointense appearance) on MRI during early brain development. To address this, we propose a novel segmentation neural network, named Hierarchical Dense Attention Network. Our architecture incorporates a 3D spatial-channel attention mechanism combined with an attention-guided dense upsampling strategy to enhance feature discrimination in low-contrast volumetric data. Quantitative experiments demonstrate that our method achieves superior segmentation performance compared to state-of-the-art baselines, effectively tackling the challenge of isointense tissue differentiation. Furthermore, application of our algorithm confirms that WM and GM volumes in preterm infants are significantly lower than those in term infants, providing additional imaging evidence of the neurodevelopmental delays associated with preterm birth. The code is available at: https://github.com/ICL-SUST/HDAN.

What-If Analysis of Large Language Models: Explore the Game World Using Proactive Thinking

Sep 05, 2025

Abstract:Large language models (LLMs) excel at processing information reactively but lack the ability to systematically explore hypothetical futures. They cannot ask, "What if we take this action? How will it affect the final outcome?" and forecast the potential consequences before acting. This critical gap limits their utility in dynamic, high-stakes scenarios like strategic planning, risk assessment, and real-time decision making. To bridge this gap, we propose WiA-LLM, a new paradigm that equips LLMs with proactive thinking capabilities. Our approach integrates What-If Analysis (WIA), a systematic approach for evaluating hypothetical scenarios by changing input variables. By leveraging environmental feedback via reinforcement learning, WiA-LLM moves beyond reactive thinking. It dynamically simulates the outcomes of each potential action, enabling the model to anticipate future states rather than merely react to present conditions. We validate WiA-LLM in Honor of Kings (HoK), a complex multiplayer game environment characterized by rapid state changes and intricate interactions. The game's real-time state changes require precise multi-step consequence prediction, making it an ideal testbed for our approach. Experimental results demonstrate that WiA-LLM achieves a remarkable 74.2% accuracy in forecasting game-state changes (up to a twofold gain over baselines). The model shows particularly significant gains in high-difficulty scenarios where accurate foresight is critical. To our knowledge, this is the first work to formally explore and integrate what-if analysis capabilities within LLMs. WiA-LLM represents a fundamental advance toward proactive reasoning in LLMs, providing a scalable framework for robust decision-making in dynamic environments with broad implications for strategic applications.

Dynamic Accumulated Attention Map for Interpreting Evolution of Decision-Making in Vision Transformer

Mar 18, 2025

Abstract:Various Vision Transformer (ViT) models have been widely used for image recognition tasks. However, existing visual explanation methods cannot display the attention flow hidden inside the inner structure of ViT models, which explains how the final attention regions are formed inside a ViT for its decision-making. In this paper, a novel visual explanation approach, Dynamic Accumulated Attention Map (DAAM), is proposed to provide a tool that can visualize, for the first time, the attention flow from the top to the bottom through ViT networks. To this end, a novel decomposition module is proposed to construct and store the spatial feature information by unlocking the [class] token generated by the self-attention module of each ViT block. The module can also obtain the channel importance coefficients by decomposing the classification score for supervised ViT models. Because self-supervised ViT models lack a classification score, we propose dimension-wise importance weights to compute the channel importance coefficients. Such spatial features are linearly combined with the corresponding channel importance coefficients, forming the attention map for each block. The dynamic attention flow is revealed by block-wise accumulation of the attention maps. The contribution of this work focuses on visualizing the evolution dynamics of the decision-making attention for any intermediate block inside a ViT model by proposing a novel decomposition module and dimension-wise importance weights. The quantitative and qualitative analyses consistently validate the effectiveness and superior capacity of the proposed DAAM for interpreting not only ViT models with fully-connected layers as the classifier but also self-supervised ViT models. The code is available at https://github.com/ly9802/DynamicAccumulatedAttentionMap.
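
The two steps named in the abstract (a per-block linear combination of spatial features with channel importance coefficients, followed by block-wise accumulation) can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the nested-list data layout, and the choice of a plain running sum as the accumulation rule are all assumptions made for illustration.

```python
def block_attention_map(features, coefficients):
    """Linearly combine C spatial feature maps (each H x W) using C coefficients.

    Assumption: features are nested lists; the real method derives them
    from the [class] token of each ViT block.
    """
    height, width = len(features[0]), len(features[0][0])
    return [
        [sum(a * f[r][c] for a, f in zip(coefficients, features))
         for c in range(width)]
        for r in range(height)
    ]


def accumulate_attention(block_maps):
    """Return running element-wise sums; entry b covers blocks 0..b.

    Assumption: accumulation is a plain sum over blocks.
    """
    accumulated, running = [], None
    for current in block_maps:
        if running is None:
            running = [row[:] for row in current]
        else:
            running = [[x + y for x, y in zip(r1, r2)]
                       for r1, r2 in zip(running, current)]
        accumulated.append([row[:] for row in running])
    return accumulated
```

Reading the entries of `accumulate_attention(...)` in order gives one map per block, which is the sense in which the attention flow can be inspected at any intermediate depth.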

TGEA: An error-annotated dataset and benchmark tasks for text generation from pretrained language models

Mar 06, 2025

Abstract:In order to deeply understand the capability of pretrained language models in text generation and conduct a diagnostic evaluation, we propose TGEA, an error-annotated dataset with multiple benchmark tasks for text generation from pretrained language models (PLMs). We use carefully selected prompt words to guide GPT-2 to generate candidate sentences, from which we select 47K for error annotation. Crowdsourced workers manually check each of these sentences and detect 12K erroneous sentences. We create an error taxonomy to cover 24 types of errors occurring in these erroneous sentences according to the nature of errors with respect to linguistics and knowledge (e.g., common sense). For each erroneous span in PLM-generated sentences, we also detect another span that is closely associated with it. Each error is hence manually labeled with comprehensive annotations, including the span of the error, the associated span, the minimal correction to the error, the type of the error, and the rationale behind the error. Apart from the fully annotated dataset, we also present a detailed description of the data collection procedure, statistics and analysis of the dataset. This is the first dataset with comprehensive annotations for PLM-generated texts, which facilitates the diagnostic evaluation of PLM-based text generation. Furthermore, we use TGEA as a benchmark dataset and propose a series of automatic diagnosis tasks, including error detection, error type classification, associated span detection, and error rationale generation, to further promote future study of automatic error detection and correction in texts generated by pretrained language models.

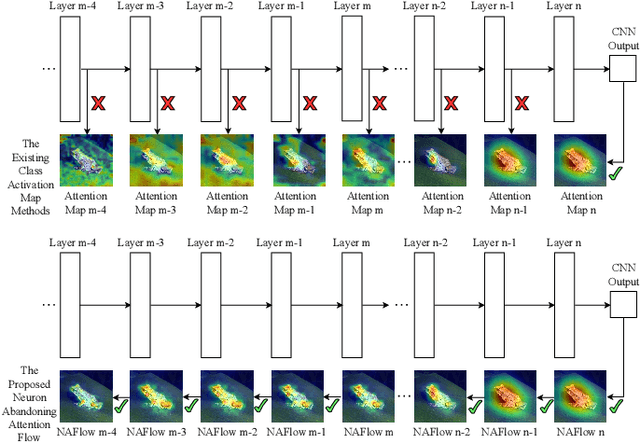

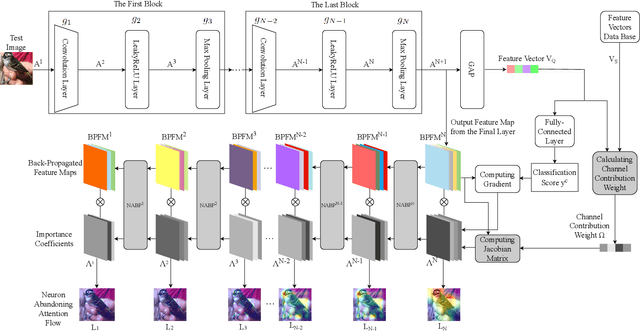

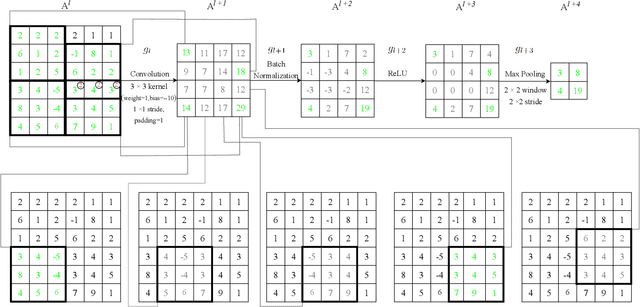

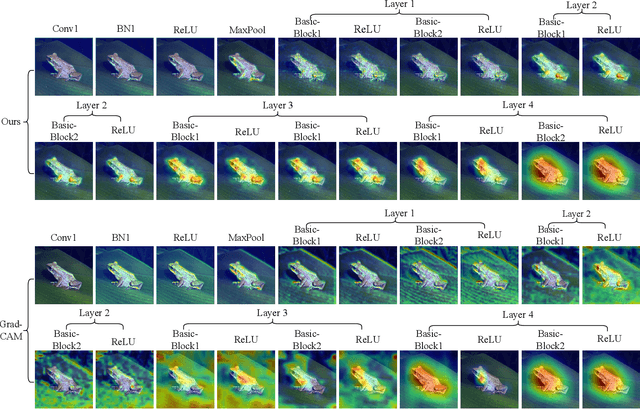

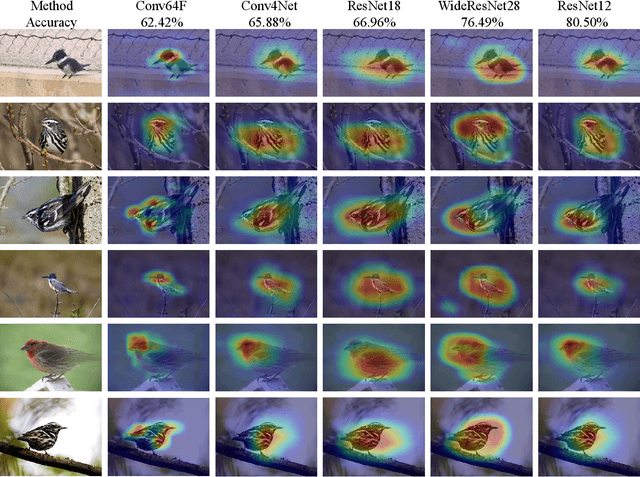

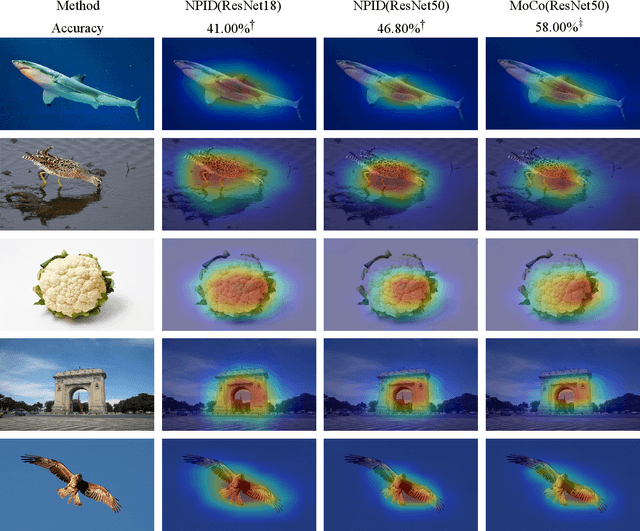

Neuron Abandoning Attention Flow: Visual Explanation of Dynamics inside CNN Models

Dec 02, 2024

Abstract:In this paper, we present a Neuron Abandoning Attention Flow (NAFlow) method to address the open problem of visually explaining the attention evolution dynamics inside CNNs when making their classification decisions. A novel cascading neuron abandoning back-propagation algorithm is designed to trace neurons in all layers of a CNN that are involved in making its prediction, addressing the problem of significant interference from abandoned neurons. First, a Neuron Abandoning Back-Propagation (NA-BP) module is proposed to generate Back-Propagated Feature Maps (BPFMs) by using the inverse function of the intermediate layers of CNN models, on which the neurons not used for decision-making are abandoned. Meanwhile, the cascading NA-BP modules calculate the tensors of importance coefficients, which are linearly combined with the tensors of BPFMs to form the NAFlow. Second, to visualize attention flow for similarity metric-based CNN models, a new channel contribution weights module is proposed to calculate the importance coefficients via the Jacobian matrix. The effectiveness of the proposed NAFlow is validated on nine widely-used CNN models for various tasks of general image classification, contrastive learning classification, few-shot image classification, and image retrieval.

SATA: Spatial Autocorrelation Token Analysis for Enhancing the Robustness of Vision Transformers

Sep 30, 2024

Abstract:Over the past few years, vision transformers (ViTs) have consistently demonstrated remarkable performance across various visual recognition tasks. However, attempts to enhance their robustness have yielded limited success, mainly focusing on different training strategies, input patch augmentation, or network structural enhancements. These approaches often involve extensive training and fine-tuning, which are time-consuming and resource-intensive. To tackle these obstacles, we introduce a novel approach named Spatial Autocorrelation Token Analysis (SATA). By harnessing spatial relationships between token features, SATA enhances both the representational capacity and robustness of ViT models. This is achieved through the analysis and grouping of tokens according to their spatial autocorrelation scores prior to their input into the Feed-Forward Network (FFN) block of the self-attention mechanism. Importantly, SATA seamlessly integrates into existing pre-trained ViT baselines without requiring retraining or additional fine-tuning, while concurrently improving efficiency by reducing the computational load of the FFN units. Experimental results show that the baseline ViTs enhanced with SATA not only achieve a new state-of-the-art top-1 accuracy on ImageNet-1K image classification (94.9%) but also establish new state-of-the-art performance across multiple robustness benchmarks, including ImageNet-A (top-1=63.6%), ImageNet-R (top-1=79.2%), and ImageNet-C (mCE=13.6%), all without requiring additional training or fine-tuning of baseline models.

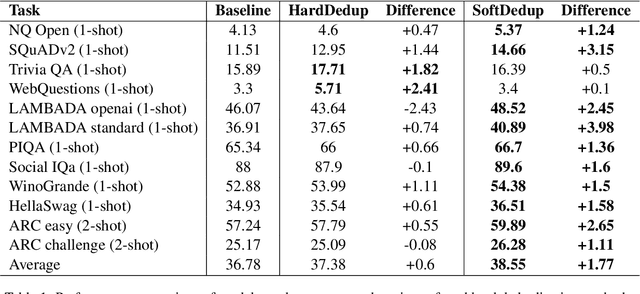

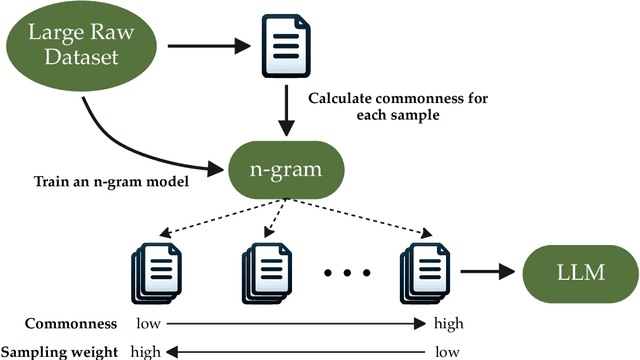

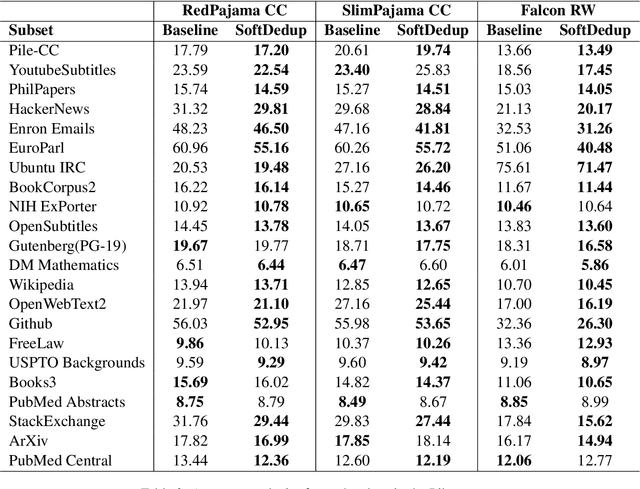

SoftDedup: an Efficient Data Reweighting Method for Speeding Up Language Model Pre-training

Jul 09, 2024

Abstract:The effectiveness of large language models (LLMs) is often hindered by duplicated data in their extensive pre-training datasets. Current approaches primarily focus on detecting and removing duplicates, which risks the loss of valuable information and neglects the varying degrees of duplication. To address this, we propose a soft deduplication method that maintains dataset integrity while selectively reducing the sampling weight of data with high commonness. Central to our approach is the concept of "data commonness", a metric we introduce to quantify the degree of duplication by measuring the occurrence probabilities of samples using an n-gram model. Empirical analysis shows that this method significantly improves training efficiency, achieving comparable perplexity scores with at least a 26% reduction in required training steps. Additionally, it enhances average few-shot downstream accuracy by 1.77% when trained for an equivalent duration. Importantly, this approach consistently improves performance, even on rigorously deduplicated datasets, indicating its potential to complement existing methods and become a standard pre-training process for LLMs.
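
As a rough illustration of the idea in this abstract, the sketch below scores each document by its mean per-token log-probability under an n-gram model and then lowers the sampling weight of high-commonness documents instead of removing them. The bigram order, add-one smoothing, whitespace tokenization, the softmax-style weighting, and the `temperature` parameter are all assumptions chosen for brevity, not details taken from the paper.

```python
import math
from collections import Counter


def train_bigram(docs):
    """Count bigram contexts, bigrams, and the vocabulary over all documents."""
    contexts, bigrams, vocab = Counter(), Counter(), set()
    for doc in docs:
        tokens = ["<s>"] + doc.split()
        vocab.update(tokens)
        contexts.update(tokens[:-1])
        bigrams.update(zip(tokens, tokens[1:]))
    return contexts, bigrams, len(vocab)


def log_commonness(doc, contexts, bigrams, vocab_size):
    """Mean per-token log-probability under an add-one-smoothed bigram model."""
    tokens = ["<s>"] + doc.split()
    total = 0.0
    for prev, cur in zip(tokens, tokens[1:]):
        total += math.log((bigrams[(prev, cur)] + 1) / (contexts[prev] + vocab_size))
    return total / max(len(tokens) - 1, 1)


def sampling_weights(docs, temperature=1.0):
    """Softmax over negative log-commonness: common documents get smaller weights.

    Every document keeps a non-zero weight, so nothing is deleted.
    """
    contexts, bigrams, vocab_size = train_bigram(docs)
    scores = [-log_commonness(d, contexts, bigrams, vocab_size) / temperature
              for d in docs]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    norm = sum(exps)
    return [e / norm for e in exps]
```

For a toy corpus with three copies of one sentence and one unrelated sentence, the unrelated sentence receives the largest weight while the three copies share equal, smaller weights.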

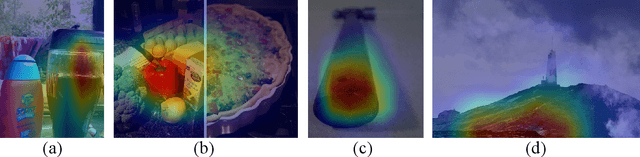

Feature Activation Map: Visual Explanation of Deep Learning Models for Image Classification

Jul 11, 2023

Abstract:Decisions made by convolutional neural networks (CNNs) can be understood and explained by visualizing discriminative regions on images. To this end, Class Activation Map (CAM) based methods were proposed as powerful interpretation tools, making the prediction of deep learning models more explainable, transparent, and trustworthy. However, all the CAM-based methods (e.g., CAM, Grad-CAM, and Relevance-CAM) can only be used for interpreting CNN models with fully-connected (FC) layers as a classifier. It is worth noting that many deep learning models classify images without FC layers, e.g., few-shot learning image classification, contrastive learning image classification, and image retrieval tasks. In this work, a post-hoc interpretation tool named feature activation map (FAM) is proposed, which can interpret deep learning models without FC layers as a classifier. In the proposed FAM algorithm, the channel-wise contribution weights are derived from the similarity scores between two image embeddings. The activation maps are linearly combined with the corresponding normalized contribution weights, forming the explanation map for visualization. The quantitative and qualitative experiments conducted on ten deep learning models for few-shot image classification, contrastive learning image classification and image retrieval tasks demonstrate the effectiveness of the proposed FAM algorithm.
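
A minimal sketch of how channel-wise weights might be derived from a similarity score follows. It assumes that the query embedding is the global average of each activation map, that similarity is a dot product (so channel k contributes `query[k] * support[k]`), and that weights are min-max normalized. These choices, and the function name `feature_activation_map`, are illustrative assumptions rather than the paper's definitions.

```python
def feature_activation_map(activations, support_embedding):
    """Combine C activation maps (each H x W) into one explanation map.

    Assumptions: the query embedding is the global average of each map, and
    the similarity is a dot product that decomposes into per-channel terms.
    """
    height, width = len(activations[0]), len(activations[0][0])
    # Global average pooling gives one embedding value per channel.
    query_embedding = [sum(sum(row) for row in amap) / (height * width)
                       for amap in activations]
    # Per-channel share of the dot-product similarity score.
    contributions = [q * s for q, s in zip(query_embedding, support_embedding)]
    low, high = min(contributions), max(contributions)
    span = (high - low) or 1.0  # avoid division by zero when all are equal
    weights = [(c - low) / span for c in contributions]
    # Weighted sum of activation maps.
    return [
        [sum(w * amap[r][c] for w, amap in zip(weights, activations))
         for c in range(width)]
        for r in range(height)
    ]
```

Because the weights come from a similarity score rather than from classifier parameters, a sketch like this needs no FC layer, which is the property the abstract emphasizes.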

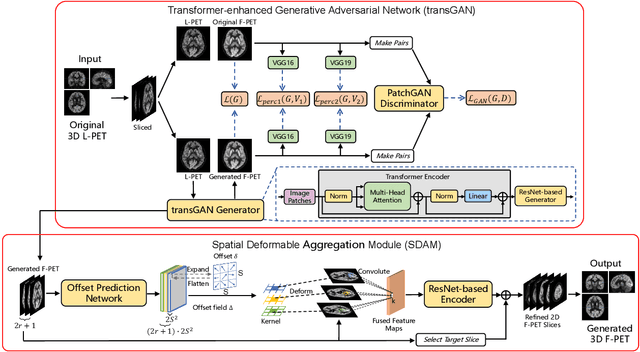

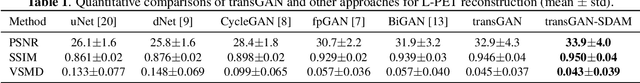

A resource-efficient deep learning framework for low-dose brain PET image reconstruction and analysis

Feb 14, 2022

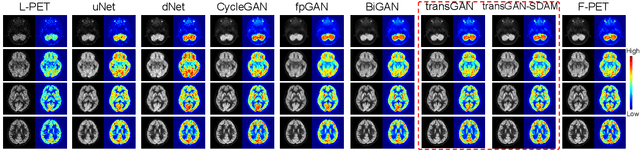

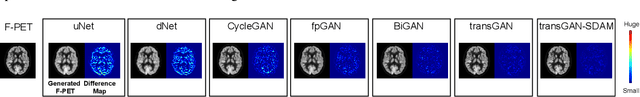

Abstract:18F-fluorodeoxyglucose (18F-FDG) Positron Emission Tomography (PET) imaging usually needs a full-dose radioactive tracer to obtain satisfactory diagnostic results, which raises concerns about the potential health risks of radiation exposure, especially for pediatric patients. Reconstructing high-quality full-dose PET (F-PET) images from low-dose PET (L-PET) images is an effective way to both reduce radiation exposure and maintain diagnostic accuracy. In this paper, we propose a resource-efficient deep learning framework for L-PET reconstruction and analysis, referred to as transGAN-SDAM, to generate F-PET from corresponding L-PET, and quantify the standard uptake value ratios (SUVRs) of the generated F-PET across the whole brain. The transGAN-SDAM consists of two modules: a transformer-encoded Generative Adversarial Network (transGAN) and a Spatial Deformable Aggregation Module (SDAM). The transGAN generates higher-quality F-PET images, and then the SDAM integrates the spatial information of a sequence of generated F-PET slices to synthesize whole-brain F-PET images. Experimental results demonstrate the superiority and rationality of our approach.

A Compositional Feature Embedding and Similarity Metric for Ultra-Fine-Grained Visual Categorization

Oct 06, 2021

Abstract:Fine-grained visual categorization (FGVC), which aims at classifying objects with small inter-class variances, has been significantly advanced in recent years. However, ultra-fine-grained visual categorization (ultra-FGVC), which aims at identifying subclasses with extremely similar patterns, has not received much attention. In ultra-FGVC datasets, the samples per category are always scarce as the granularity moves down, which leads to overfitting problems. Moreover, the differences among categories are too subtle to distinguish even for professional experts. Motivated by these issues, this paper proposes a novel compositional feature embedding and similarity metric (CECS). Specifically, in the compositional feature embedding module, we randomly select patches in the original input image, and these patches are then replaced by patches from the images of different categories or masked out. The replaced and masked images are then used to augment the original input images, which provides more diverse samples and thus largely alleviates the overfitting problem resulting from limited training samples. Besides, learning with diverse samples forces the model to learn not only the most discriminative features but also other informative features in the remaining regions, enhancing the generalization and robustness of the model. In the compositional similarity metric module, a new similarity metric is developed to improve the classification performance by narrowing the intra-category distance and enlarging the inter-category distance. Experimental results on two ultra-FGVC datasets and one FGVC dataset with recent benchmark methods consistently demonstrate that the proposed CECS method achieves state-of-the-art performance.
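
The patch replacement and masking step described in this abstract can be sketched as below. The sketch assumes a single-channel image stored as nested lists, a fixed non-overlapping patch grid, a donor patch taken from the same location in an image of a different category, and zero as the mask value. The function name and all parameters are hypothetical.

```python
import random


def compositional_augment(image, donor, patch=2, num_patches=2,
                          mask_prob=0.5, seed=0):
    """Return a copy of `image` with random patches replaced or masked.

    Assumptions: `donor` has the same shape as `image` and comes from a
    different category; each chosen patch is either zeroed (masked) or
    overwritten with the donor patch at the same location.
    """
    rng = random.Random(seed)
    height, width = len(image), len(image[0])
    augmented = [row[:] for row in image]  # leave the input untouched
    # Top-left corners of a non-overlapping patch grid.
    cells = [(r, c)
             for r in range(0, height - patch + 1, patch)
             for c in range(0, width - patch + 1, patch)]
    for top, left in rng.sample(cells, num_patches):
        masked = rng.random() < mask_prob
        for r in range(top, top + patch):
            for c in range(left, left + patch):
                augmented[r][c] = 0 if masked else donor[r][c]
    return augmented
```

Varying `seed` yields different composite images from the same pair, which is how a sketch like this would produce the more diverse training samples the abstract refers to.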
