Yaran Chen

Robotic Sim-to-Real Transfer for Long-Horizon Pick-and-Place Tasks in the Robotic Sim2Real Competition

Mar 14, 2025

Abstract:This paper presents a fully autonomous robotic system that performs sim-to-real transfer in complex long-horizon tasks involving navigation, recognition, grasping, and stacking in an environment with multiple obstacles. The key feature of the system is the ability to overcome typical sensing and actuation discrepancies during sim-to-real transfer and to achieve consistent performance without any algorithmic modifications. To accomplish this, a lightweight noise-resistant visual perception system and a nonlinearity-robust servo system are adopted. We conduct a series of tests in both simulated and real-world environments. The visual perception system achieves the speed of 11 ms per frame due to its lightweight nature, and the servo system achieves sub-centimeter accuracy with the proposed controller. Both exhibit high consistency during sim-to-real transfer. Benefiting from these, our robotic system took first place in the mineral searching task of the Robotic Sim2Real Challenge hosted at ICRA 2024.

Sample-efficient Unsupervised Policy Cloning from Ensemble Self-supervised Labeled Videos

Dec 14, 2024

Abstract:Current advanced policy learning methodologies have demonstrated the ability to develop expert-level strategies when provided enough information. However, their requirements, including task-specific rewards, expert-labeled trajectories, and huge environmental interactions, can be expensive or even unavailable in many scenarios. In contrast, humans can efficiently acquire skills within a few trials and errors by imitating easily accessible internet video, in the absence of any other supervision. In this paper, we try to let machines replicate this efficient watching-and-learning process through Unsupervised Policy from Ensemble Self-supervised labeled Videos (UPESV), a novel framework to efficiently learn policies from videos without any other expert supervision. UPESV trains a video labeling model to infer the expert actions in expert videos, through several organically combined self-supervised tasks. Each task performs its own duties, and they together enable the model to make full use of both expert videos and reward-free interactions for advanced dynamics understanding and robust prediction. Simultaneously, UPESV clones a policy from the labeled expert videos, in turn collecting environmental interactions for self-supervised tasks. After a sample-efficient and unsupervised (i.e., reward-free) training process, an advanced video-imitated policy is obtained. Extensive experiments in sixteen challenging procedurally-generated environments demonstrate that the proposed UPESV achieves state-of-the-art few-shot policy learning (outperforming five current advanced baselines on 12/16 tasks) without exposure to any other supervision except videos. Detailed analysis is also provided, verifying the necessity of each self-supervised task employed in UPESV.

GAPartManip: A Large-scale Part-centric Dataset for Material-Agnostic Articulated Object Manipulation

Nov 27, 2024

Abstract:Effectively manipulating articulated objects in household scenarios is a crucial step toward achieving general embodied artificial intelligence. Mainstream research in 3D vision has primarily focused on manipulation through depth perception and pose detection. However, in real-world environments, these methods often face challenges due to imperfect depth perception, such as with transparent lids and reflective handles. Moreover, they generally lack the diversity in part-based interactions required for flexible and adaptable manipulation. To address these challenges, we introduced a large-scale part-centric dataset for articulated object manipulation that features both photo-realistic material randomizations and detailed annotations of part-oriented, scene-level actionable interaction poses. We evaluated the effectiveness of our dataset by integrating it with several state-of-the-art methods for depth estimation and interaction pose prediction. Additionally, we proposed a novel modular framework that delivers superior and robust performance for generalizable articulated object manipulation. Our extensive experiments demonstrate that our dataset significantly improves the performance of depth perception and actionable interaction pose prediction in both simulation and real-world scenarios.

PlanAgent: A Multi-modal Large Language Agent for Closed-loop Vehicle Motion Planning

Jun 04, 2024

Abstract:Vehicle motion planning is an essential component of autonomous driving technology. Current rule-based vehicle motion planning methods perform satisfactorily in common scenarios but struggle to generalize to long-tailed situations. Meanwhile, learning-based methods have yet to achieve superior performance over rule-based approaches in large-scale closed-loop scenarios. To address these issues, we propose PlanAgent, the first mid-to-mid planning system based on a Multi-modal Large Language Model (MLLM). MLLM is used as a cognitive agent to introduce human-like knowledge, interpretability, and common-sense reasoning into the closed-loop planning. Specifically, PlanAgent leverages the power of MLLM through three core modules. First, an Environment Transformation module constructs a Bird's Eye View (BEV) map and a lane-graph-based textual description from the environment as inputs. Second, a Reasoning Engine module introduces a hierarchical chain-of-thought from scene understanding to lateral and longitudinal motion instructions, culminating in planner code generation. Last, a Reflection module is integrated to simulate and evaluate the generated planner for reducing MLLM's uncertainty. PlanAgent is endowed with the common-sense reasoning and generalization capability of MLLM, which empowers it to effectively tackle both common and complex long-tailed scenarios. Our proposed PlanAgent is evaluated on the large-scale and challenging nuPlan benchmarks. A comprehensive set of experiments convincingly demonstrates that PlanAgent outperforms the existing state-of-the-art in the closed-loop motion planning task. Codes will be soon released.

Learning Future Representation with Synthetic Observations for Sample-efficient Reinforcement Learning

May 20, 2024

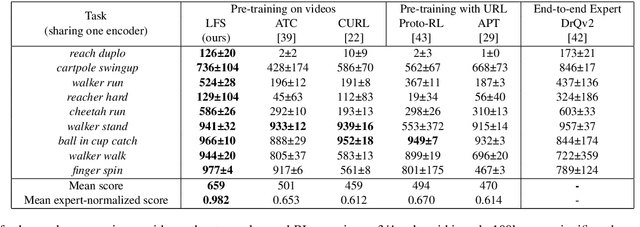

Abstract:In visual Reinforcement Learning (RL), upstream representation learning largely determines the effect of downstream policy learning. Employing auxiliary tasks allows the agent to enhance visual representation in a targeted manner, thereby improving the sample efficiency and performance of downstream RL. Prior advanced auxiliary tasks all focus on how to extract as much information as possible from limited experience (including observations, actions, and rewards) through their different auxiliary objectives, whereas in this article, we first start from another perspective: auxiliary training data. We try to improve auxiliary representation learning for RL by enriching auxiliary training data, proposing Learning Future representation with Synthetic observations (LFS), a novel self-supervised RL approach. Specifically, we propose a training-free method to synthesize observations that may contain future information, as well as a data selection approach to eliminate unqualified synthetic noise. The remaining synthetic observations and real observations then serve as the auxiliary data to achieve a clustering-based temporal association task for representation learning. LFS allows the agent to access and learn observations that have not yet appeared in advance, so as to quickly understand and exploit them when they occur later. In addition, LFS does not rely on rewards or actions, which means it has a wider scope of application (e.g., learning from video) than recent advanced auxiliary tasks. Extensive experiments demonstrate that our LFS exhibits state-of-the-art RL sample efficiency on challenging continuous control and enables advanced visual pre-training based on action-free video demonstrations.

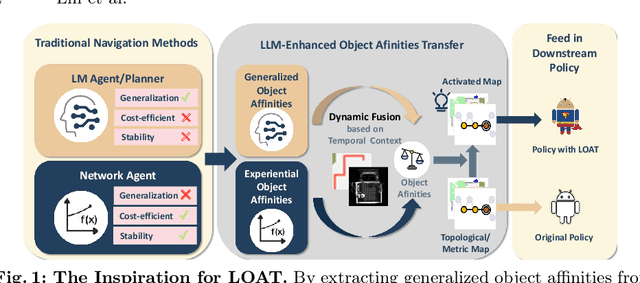

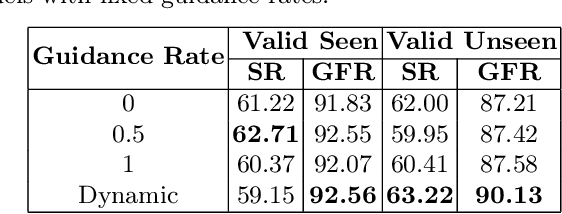

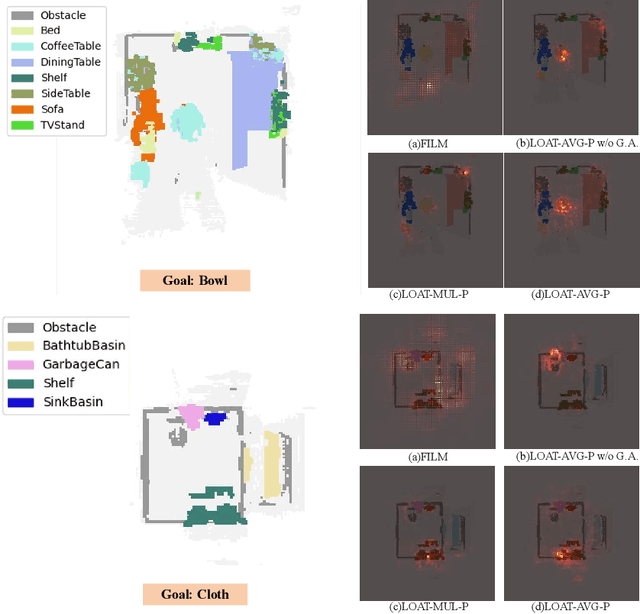

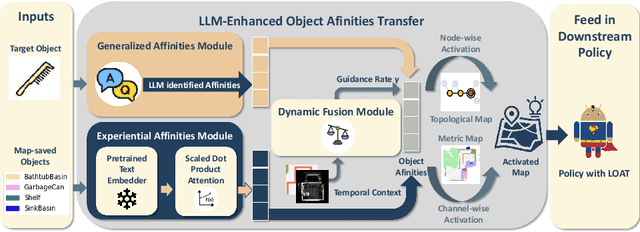

Advancing Object Goal Navigation Through LLM-enhanced Object Affinities Transfer

Mar 15, 2024

Abstract:In object goal navigation, agents navigate towards objects identified by category labels using visual and spatial information. Previously, solely network-based methods typically rely on historical data for object affinities estimation, lacking adaptability to new environments and unseen targets. Simultaneously, employing Large Language Models (LLMs) for navigation as either planners or agents, though offering a broad knowledge base, is cost-inefficient and lacks targeted historical experience. Addressing these challenges, we present the LLM-enhanced Object Affinities Transfer (LOAT) framework, integrating LLM-derived object semantics with network-based approaches to leverage experiential object affinities, thus improving adaptability in unfamiliar settings. LOAT employs a dual-module strategy: a generalized affinities module for accessing LLMs' vast knowledge and an experiential affinities module for applying learned object semantic relationships, complemented by a dynamic fusion module harmonizing these information sources based on temporal context. The resulting scores activate semantic maps before feeding into downstream policies, enhancing navigation systems with context-aware inputs. Our evaluations in AI2-THOR and Habitat simulators demonstrate improvements in both navigation success rates and efficiency, validating the LOAT's efficacy in integrating LLM insights for improved object goal navigation.

RoboGPT: an intelligent agent of making embodied long-term decisions for daily instruction tasks

Nov 27, 2023

Abstract:Robotic agents must master common sense and long-term sequential decisions to solve daily tasks through natural language instruction. The developments in Large Language Models (LLMs) in natural language processing have inspired efforts to use LLMs in complex robot planning. Despite LLMs' great generalization and comprehension of instruction tasks, LLM-generated task plans sometimes lack feasibility and correctness. To address the problem, we propose a RoboGPT agent (our code and dataset will be released soon) for making embodied long-term decisions for daily tasks, with two modules: 1) LLM-based planning with re-plan to break the task into multiple sub-goals; 2) RoboSkill individually designed for sub-goals to learn better navigation and manipulation skills. The LLM-based planning is enhanced with a new robotic dataset and re-plan, called RoboGPT. The new robotic dataset of 67k daily instruction tasks is gathered for fine-tuning the Llama model and obtaining RoboGPT. RoboGPT planner with strong generalization can plan hundreds of daily instruction tasks. Additionally, a low-computational Re-Plan module is designed to allow plans to flexibly adapt to the environment, thereby addressing the nomenclature diversity challenge. The proposed RoboGPT agent outperforms SOTA methods on the ALFRED daily tasks. Moreover, RoboGPT planner exceeds SOTA LLM-based planners like ChatGPT in task-planning rationality for hundreds of unseen daily tasks, and even other domain tasks, while keeping the large model's original broad application and generality.

ComSD: Balancing Behavioral Quality and Diversity in Unsupervised Skill Discovery

Sep 29, 2023

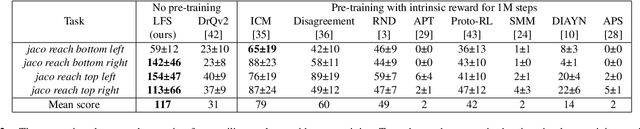

Abstract:Learning diverse and qualified behaviors for utilization and adaptation without supervision is a key ability of intelligent creatures. Ideal unsupervised skill discovery methods are able to produce diverse and qualified skills in the absence of extrinsic reward, while the discovered skill set can efficiently adapt to downstream tasks in various ways. Maximizing the Mutual Information (MI) between skills and visited states can achieve ideal skill-conditioned behavior distillation in theory. However, it's difficult for recent advanced methods to well balance behavioral quality (exploration) and diversity (exploitation) in practice, which may be attributed to the unreasonable MI estimation by their rigid intrinsic reward design. In this paper, we propose Contrastive multi-objectives Skill Discovery (ComSD) which tries to mitigate the quality-versus-diversity conflict of discovered behaviors through a more reasonable MI estimation and a dynamically weighted intrinsic reward. ComSD proposes to employ contrastive learning for a more reasonable estimation of skill-conditioned entropy in MI decomposition. In addition, a novel weighting mechanism is proposed to dynamically balance different entropy (in MI decomposition) estimations into a novel multi-objective intrinsic reward, to improve both skill diversity and quality. For challenging robot behavior discovery, ComSD can produce a qualified skill set consisting of diverse behaviors at different activity levels, which recent advanced methods cannot. On numerical evaluations, ComSD exhibits state-of-the-art adaptation performance, significantly outperforming recent advanced skill discovery methods across all skill combination tasks and most skill finetuning tasks. Codes will be released at https://github.com/liuxin0824/ComSD.
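The MI decomposition mentioned in the abstract can be illustrated with the standard identity I(S; Z) = H(S) - H(S | Z), where S is the visited state and Z the skill. The following is a minimal sketch on a toy discrete joint distribution, not the ComSD estimator itself (which, per the abstract, relies on contrastive learning and a dynamic weighting). The function names and the toy tables are hypothetical and chosen only for illustration.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a discrete distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def mi_decomposition(joint):
    """Return (H(S), H(S|Z), I(S;Z)) for a table joint[z][s] = P(Z=z, S=s).

    Hypothetical helper: it only demonstrates the identity
    I(S;Z) = H(S) - H(S|Z) on an exactly known distribution.
    """
    p_z = [sum(row) for row in joint]        # marginal over skills
    p_s = [sum(col) for col in zip(*joint)]  # marginal over states
    h_s = entropy(p_s)                       # state entropy
    # Skill-conditioned entropy: sum over z of P(z) * H(S | Z=z)
    h_s_given_z = sum(
        pz * entropy([p / pz for p in row])
        for pz, row in zip(p_z, joint)
        if pz > 0
    )
    return h_s, h_s_given_z, h_s - h_s_given_z


# Toy case 1: each skill visits its own state, so skills are fully distinguishable.
distinct = [[0.5, 0.0], [0.0, 0.5]]
# Toy case 2: both skills visit both states equally, so the skill is not identifiable.
overlapping = [[0.25, 0.25], [0.25, 0.25]]

mi_distinct = mi_decomposition(distinct)[2]
mi_overlapping = mi_decomposition(overlapping)[2]
```

In this sketch the first toy distribution yields the maximum MI for two equiprobable skills, while the second yields none even though its state entropy H(S) is the same, which shows why the two entropy terms are worth tracking separately.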

Multi-modal Learning based Prediction for Disease

Jul 19, 2023

Abstract:Non-alcoholic fatty liver disease (NAFLD) is the most common cause of chronic liver disease, and predicting it accurately can help prevent advanced fibrosis and cirrhosis. However, liver biopsy, the gold standard for NAFLD diagnosis, is invasive, expensive, and prone to sampling errors. Therefore, non-invasive studies are extremely promising, yet they are still in their infancy due to the lack of comprehensive research data and intelligent methods for multi-modal data. This paper proposes a NAFLD diagnosis system (DeepFLDDiag) combining a comprehensive clinical dataset (FLDData) and a multi-modal learning based NAFLD prediction method (DeepFLD). The dataset includes over 6000 participants' physical examinations, laboratory and imaging studies, extensive questionnaires, and facial images of some participants, which is comprehensive and valuable for clinical studies. From the dataset, we quantitatively analyze and select clinical metadata that most contribute to NAFLD prediction. Furthermore, the proposed DeepFLD, a deep neural network model designed to predict NAFLD using multi-modal input, including metadata and facial images, outperforms the approach that only uses metadata. Satisfactory performance is also verified on other unseen datasets. Encouragingly, DeepFLD can achieve competitive results using only facial images as input rather than metadata, paving the way for a more robust and simpler non-invasive NAFLD diagnosis.

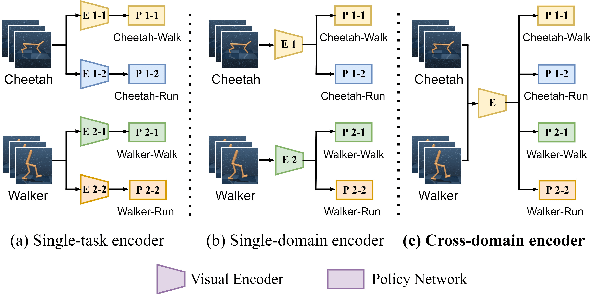

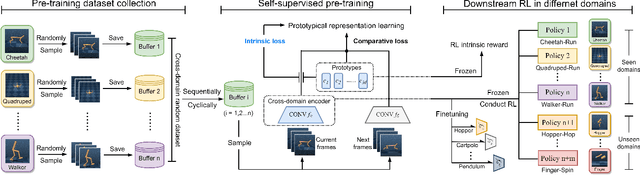

Cross-domain Random Pre-training with Prototypes for Reinforcement Learning

Feb 11, 2023

Abstract:Task-agnostic cross-domain pre-training shows great potential in image-based Reinforcement Learning (RL) but poses a big challenge. In this paper, we propose CRPTpro, a Cross-domain self-supervised Random Pre-Training framework with prototypes for image-based RL. CRPTpro employs cross-domain random policy to easily and quickly sample diverse data from multiple domains, to improve pre-training efficiency. Moreover, prototypical representation learning with a novel intrinsic loss is proposed to pre-train an effective and generic encoder across different domains. Without finetuning, the cross-domain encoder can be implemented for challenging downstream visual-control RL tasks defined in different domains efficiently. Compared with prior arts like APT and Proto-RL, CRPTpro achieves better performance on cross-domain downstream RL tasks without extra training on exploration agents for expert data collection, greatly reducing the burden of pre-training. Experiments on DeepMind Control suite (DMControl) demonstrate that CRPTpro outperforms APT significantly on 11/12 cross-domain RL tasks with only 39% pre-training hours, becoming a state-of-the-art cross-domain pre-training method in both policy learning performance and pre-training efficiency. The complete code will be released at https://github.com/liuxin0824/CRPTpro.
