Xudong Zhao

Layer-adaptive Expert Pruning for Pre-Training of Mixture-of-Experts Large Language Models

Jan 20, 2026

Abstract: Although Mixture-of-Experts (MoE) Large Language Models (LLMs) deliver superior accuracy with a reduced number of active parameters, their pre-training represents a significant computational bottleneck due to underutilized experts and limited training efficiency. This work introduces a Layer-Adaptive Expert Pruning (LAEP) algorithm designed for the pre-training stage of MoE LLMs. In contrast to previous expert pruning approaches that operate primarily in the post-training phase, the proposed algorithm enhances training efficiency by selectively pruning underutilized experts and reorganizing experts across computing devices according to token distribution statistics. Comprehensive experiments demonstrate that LAEP effectively reduces model size and substantially improves pre-training efficiency. In particular, when pre-training the 1010B Base model from scratch, LAEP achieves a 48.3% improvement in training efficiency alongside a 33.3% parameter reduction, while still delivering excellent performance across multiple domains.

Yuan3.0 Flash: An Open Multimodal Large Language Model for Enterprise Applications

Jan 05, 2026

Abstract: We introduce Yuan3.0 Flash, an open-source Mixture-of-Experts (MoE) MultiModal Large Language Model featuring 3.7B activated parameters and 40B total parameters, specifically designed to enhance performance on enterprise-oriented tasks while maintaining competitive capabilities on general-purpose tasks. To address the overthinking phenomenon commonly observed in Large Reasoning Models (LRMs), we propose Reflection-aware Adaptive Policy Optimization (RAPO), a novel RL training algorithm that effectively regulates overthinking behaviors. In enterprise-oriented tasks such as retrieval-augmented generation (RAG), complex table understanding, and summarization, Yuan3.0 Flash consistently achieves superior performance. It also demonstrates strong reasoning capabilities in domains such as mathematics and science, attaining accuracy comparable to frontier models while requiring only approximately 1/4 to 1/2 of the average tokens. Yuan3.0 Flash has been fully open-sourced to facilitate further research and real-world deployment: https://github.com/Yuan-lab-LLM/Yuan3.0.

Yuan-TecSwin: A text conditioned Diffusion model with Swin-transformer blocks

Dec 18, 2025

Abstract: Diffusion models have shown remarkable capacity in image synthesis based on their U-shaped architecture and convolutional neural networks (CNNs) as basic blocks. The locality of the convolution operation in CNNs may limit the model's ability to understand long-range semantic information. To address this issue, we propose Yuan-TecSwin, a text-conditioned diffusion model with Swin-transformer blocks. The Swin-transformer blocks take the place of CNN blocks in the encoder and decoder to improve the non-local modeling ability in feature extraction and image restoration. The text-image alignment is improved with a well-chosen text encoder, effective utilization of text embedding, and careful design in the incorporation of the text condition. Using an adapted time step to search in different diffusion stages, inference performance is further improved by 10%. Yuan-TecSwin achieves a state-of-the-art FID score of 1.37 on the ImageNet generation benchmark, without any additional models at different denoising stages. In a side-by-side comparison, we find it difficult for human interviewees to tell the model-generated images from the human-painted ones.

Robust 4D Radar-aided Inertial Navigation for Aerial Vehicles

Feb 21, 2025

Abstract: While LiDAR and cameras are becoming ubiquitous for unmanned aerial vehicles (UAVs), they can be ineffective in challenging environments, and 4D millimeter-wave (MMW) radars, which can provide robust 3D ranging and Doppler velocity measurements, are less exploited for aerial navigation. In this paper, we develop an efficient and robust error-state Kalman filter (ESKF)-based radar-inertial navigation method for UAVs. The key idea of the proposed approach is point-to-distribution radar scan matching, which provides motion constraints with proper uncertainty quantification; these constraints are used to update the navigation states in a tightly coupled manner, along with the Doppler velocity measurements. Moreover, we propose a robust keyframe-based matching scheme against the prior map (if available) to bound the accumulated navigation errors and thus provide a radar-based global localization solution with high accuracy. Extensive real-world experimental validations have demonstrated that the proposed radar-aided inertial navigation outperforms state-of-the-art methods in both accuracy and robustness.

SQLfuse: Enhancing Text-to-SQL Performance through Comprehensive LLM Synergy

Jul 19, 2024

Abstract: Text-to-SQL conversion is a critical innovation, simplifying the transition from complex SQL to intuitive natural language queries, especially significant given SQL's prevalence in the job market across various roles. The rise of Large Language Models (LLMs) like GPT-3.5 and GPT-4 has greatly advanced this field, offering improved natural language understanding and the ability to generate nuanced SQL statements. However, the potential of open-source LLMs in Text-to-SQL applications remains underexplored, with many frameworks failing to leverage their full capabilities, particularly in handling complex database queries and incorporating feedback for iterative refinement. Addressing these limitations, this paper introduces SQLfuse, a robust system integrating open-source LLMs with a suite of tools to enhance Text-to-SQL translation's accuracy and usability. SQLfuse features four modules: schema mining, schema linking, SQL generation, and a SQL critic module, to not only generate but also continuously enhance SQL query quality. Demonstrated by its leading performance on the Spider Leaderboard and deployment by Ant Group, SQLfuse showcases the practical merits of open-source LLMs in diverse business contexts.

Yuan 2.0-M32: Mixture of Experts with Attention Router

May 29, 2024

Abstract: Yuan 2.0-M32, with a base architecture similar to Yuan-2.0 2B, uses a mixture-of-experts architecture with 32 experts, of which 2 are active. A new router network, Attention Router, is proposed and adopted for a more efficient selection of experts, which improves the accuracy compared to the model with a classical router network. Yuan 2.0-M32 is trained with 2000B tokens from scratch, and the training computation consumption is only 9.25% of a dense model at the same parameter scale. Yuan 2.0-M32 demonstrates competitive capability on coding, math, and various domains of expertise, with only 3.7B active parameters of 40B in total, and 7.4 GFlops forward computation per token, both of which are only 1/19 of Llama3-70B. Yuan 2.0-M32 surpasses Llama3-70B on the MATH and ARC-Challenge benchmarks, with accuracy of 55.89 and 95.8 respectively. The models and source code of Yuan 2.0-M32 are released on GitHub.

YUAN 2.0: A Large Language Model with Localized Filtering-based Attention

Dec 04, 2023

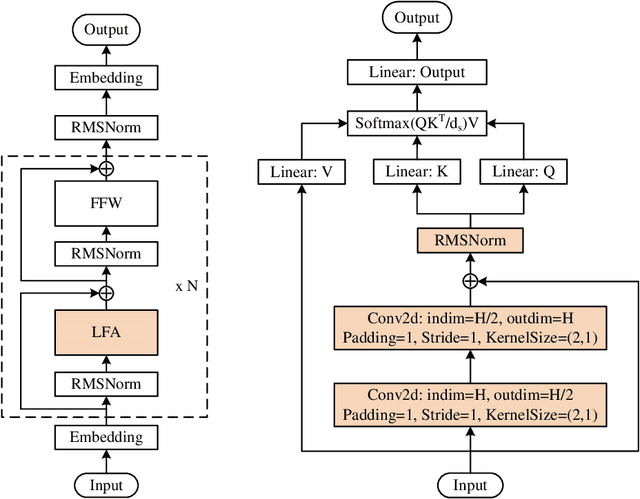

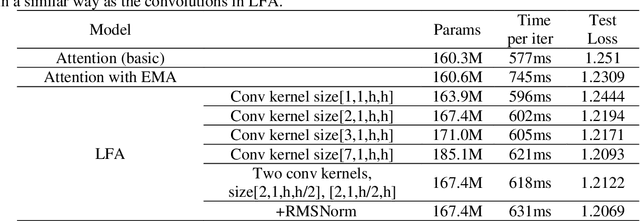

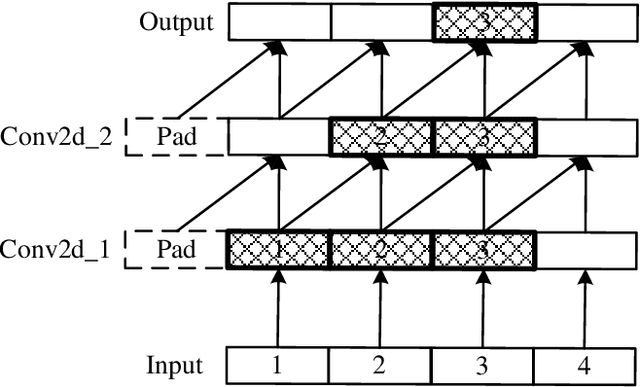

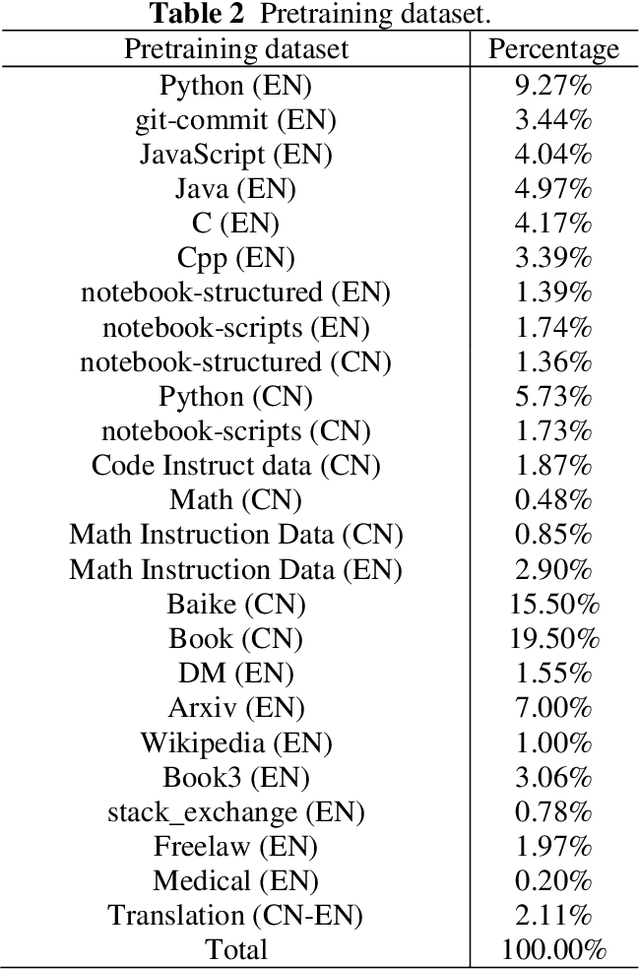

Abstract: In this work, we develop and release Yuan 2.0, a series of large language models with parameters ranging from 2.1 billion to 102.6 billion. Localized Filtering-based Attention (LFA) is introduced to incorporate prior knowledge of local dependencies of natural language into Attention. A data filtering and generating system is presented to build high-quality pre-training and fine-tuning datasets. A distributed training method with non-uniform pipeline parallelism, data parallelism, and optimizer parallelism is proposed, which greatly reduces the bandwidth requirements of intra-node communication and achieves good performance in large-scale distributed training. Yuan 2.0 models display impressive ability in code generation, math problem-solving, and chatting compared with existing models. The latest version of Yuan 2.0, including model weights and source code, is accessible on GitHub.

Yuan 1.0: Large-Scale Pre-trained Language Model in Zero-Shot and Few-Shot Learning

Oct 12, 2021

Abstract: Recent work like GPT-3 has demonstrated excellent performance of Zero-Shot and Few-Shot learning on many natural language processing (NLP) tasks by scaling up model size, dataset size, and the amount of computation. However, training a model like GPT-3 requires a huge amount of computational resources, which makes it challenging for researchers. In this work, we propose a method that incorporates large-scale distributed training performance into model architecture design. With this method, Yuan 1.0, the current largest singleton language model with 245B parameters, achieves excellent performance on thousands of GPUs during training, and state-of-the-art results on NLP tasks. A data processing method is designed to efficiently filter massive amounts of raw data. The current largest high-quality Chinese corpus, with 5TB of high-quality text, is built based on this method. In addition, a calibration and label expansion method is proposed to improve the Zero-Shot and Few-Shot performance, and steady improvement is observed in the accuracy of various tasks. Yuan 1.0 presents strong capacity for natural language generation, and the generated articles are difficult to distinguish from human-written ones.

MLRSNet: A Multi-label High Spatial Resolution Remote Sensing Dataset for Semantic Scene Understanding

Oct 01, 2020

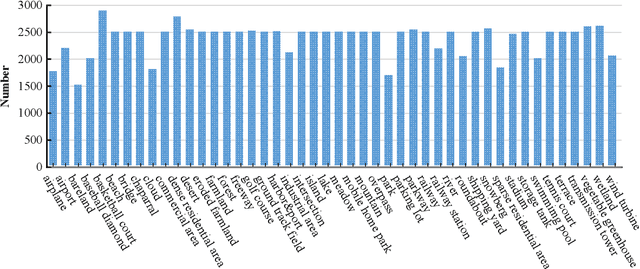

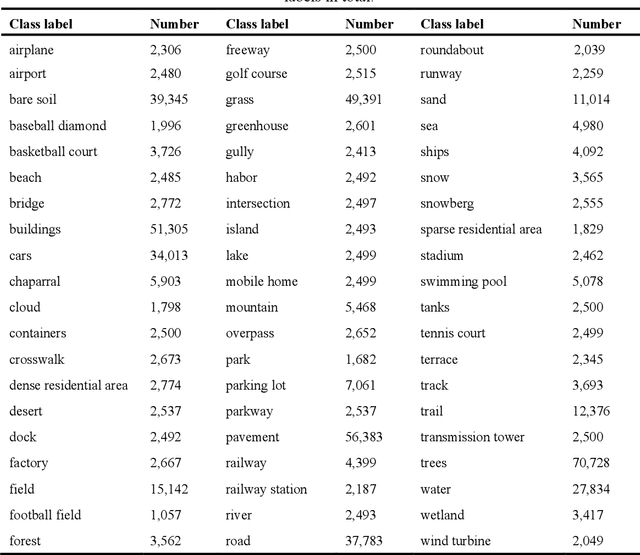

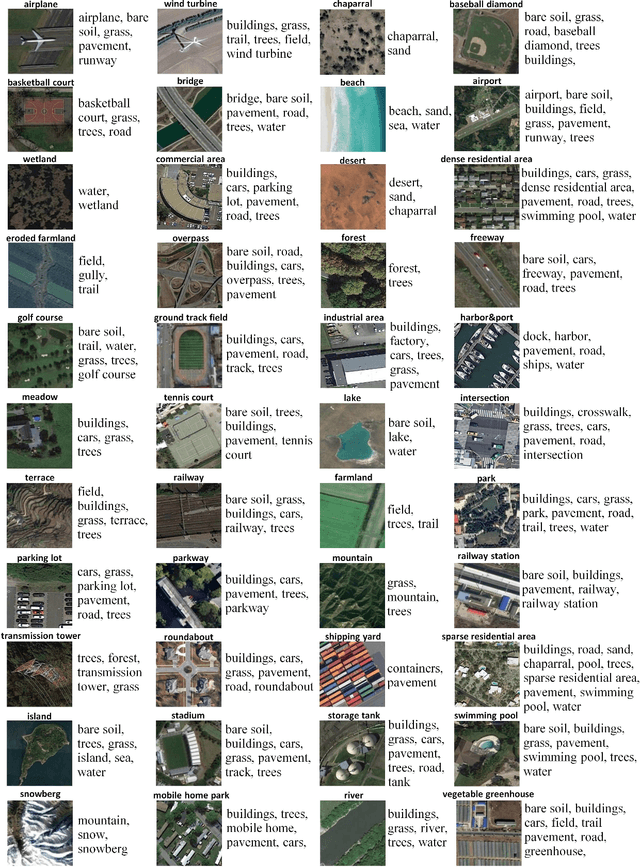

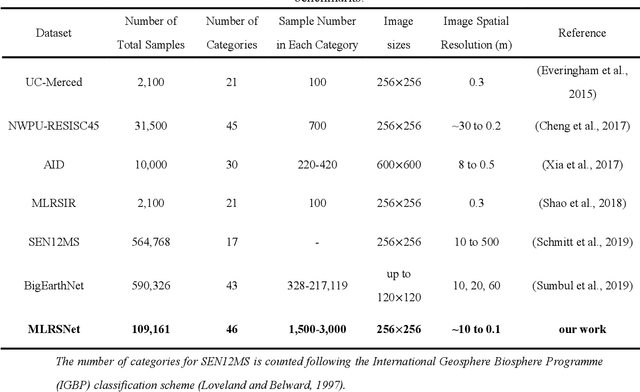

Abstract: To better understand scene images in the field of remote sensing, multi-label annotation of scene images is necessary. Moreover, to enhance the performance of deep learning models for dealing with semantic scene understanding tasks, it is vital to train them on large-scale annotated data. However, most existing datasets are annotated with a single label, which cannot describe complex remote sensing images well because scene images might have multiple land cover classes. Few multi-label high spatial resolution remote sensing datasets have been developed to train deep learning models for multi-label based tasks, such as scene classification and image retrieval. To address this issue, in this paper, we construct a multi-label high spatial resolution remote sensing dataset named MLRSNet for semantic scene understanding with deep learning from the overhead perspective. It is composed of high-resolution optical satellite or aerial images. MLRSNet contains a total of 109,161 samples within 46 scene categories, and each image has at least one of 60 predefined labels. We have designed visual recognition tasks, including multi-label based image classification and image retrieval, in which a wide variety of deep learning approaches are evaluated with MLRSNet. The experimental results demonstrate that MLRSNet is a significant benchmark for future research, and that it complements current widely used datasets such as ImageNet by filling gaps in multi-label image research. Furthermore, we will continue to expand MLRSNet. MLRSNet and all related materials have been made publicly available at https://data.mendeley.com/datasets/7j9bv9vwsx/2 and https://github.com/cugbrs/MLRSNet.git.
