Hongli Liu

Domain Generalization for Cross-Receiver Radio Frequency Fingerprint Identification

Nov 06, 2024

Abstract: Radio Frequency Fingerprint Identification (RFFI) technology uniquely identifies emitters by analyzing the distinctive distortions in the transmitted signal caused by non-ideal hardware. Recently, RFFI based on deep learning methods has gained popularity and is seen as a promising way to address the device authentication problem for Internet of Things (IoT) systems. However, in cross-receiver scenarios, where the RFFI model is trained on RF signals from some receivers but deployed at a new receiver, the change in receiver characteristics leads to a data distribution shift and causes significant performance degradation at the new receiver. To address this problem, we first perform a theoretical analysis of the cross-receiver generalization error bound and propose a sufficient condition, named the Separable Condition (SC), to minimize the classification error probability on the new receiver. Guided by the SC, a Receiver-Independent Emitter Identification (RIEI) model is devised to decouple the received signals into emitter-related features and receiver-related features, and only the emitter-related features are used for identification. Furthermore, by leveraging federated learning, we also develop a FedRIEI model to eliminate the need for centralized collection of raw data from multiple receivers. Experiments on two real-world datasets demonstrate the superiority of our proposed methods over some baseline methods.

CAT: LoCalization and IdentificAtion Cascade Detection Transformer for Open-World Object Detection

Jan 05, 2023

Abstract: Open-world object detection (OWOD), as a more general and challenging goal, requires a model trained on data of known objects to detect both known and unknown objects and to incrementally learn to identify these unknown objects. Existing works, which employ a standard detection framework and a fixed pseudo-labelling mechanism (PLM), have the following problems: (i) The inclusion of detecting unknown objects substantially reduces the model's ability to detect known ones. (ii) The PLM does not adequately utilize the prior knowledge of inputs. (iii) The fixed selection manner of the PLM cannot guarantee that the model is trained in the right direction. We observe that humans subconsciously prefer to focus on all foreground objects and then identify each one in detail, rather than localize and identify a single object simultaneously, to alleviate confusion. This motivates us to propose a novel solution called CAT: LoCalization and IdentificAtion Cascade Detection Transformer, which decouples the detection process via a shared decoder in a cascade decoding manner. Meanwhile, we propose a self-adaptive pseudo-labelling mechanism that combines model-driven and input-driven PLM and self-adaptively generates robust pseudo-labels for unknown objects, significantly improving the ability of CAT to retrieve unknown objects. Comprehensive experiments on two benchmark datasets, i.e., MS-COCO and PASCAL VOC, show that our model outperforms the state-of-the-art in terms of all metrics in the tasks of OWOD, incremental object detection (IOD) and open-set detection.

Yuan 1.0: Large-Scale Pre-trained Language Model in Zero-Shot and Few-Shot Learning

Oct 12, 2021

Abstract: Recent work like GPT-3 has demonstrated excellent performance of Zero-Shot and Few-Shot learning on many natural language processing (NLP) tasks by scaling up model size, dataset size and the amount of computation. However, training a model like GPT-3 requires a huge amount of computational resources, which makes it challenging for researchers. In this work, we propose a method that incorporates large-scale distributed training performance into model architecture design. With this method, Yuan 1.0, the current largest singleton language model with 245B parameters, achieves excellent performance on thousands of GPUs during training, and state-of-the-art results on NLP tasks. A data processing method is designed to efficiently filter massive amounts of raw data. The current largest high-quality Chinese corpus, with 5TB of high-quality text, is built based on this method. In addition, a calibration and label expansion method is proposed to improve the Zero-Shot and Few-Shot performance, and steady improvement is observed in the accuracy of various tasks. Yuan 1.0 presents strong capacity for natural language generation, and the generated articles are difficult to distinguish from human-written ones.

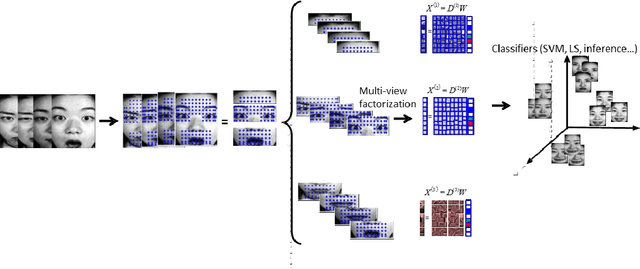

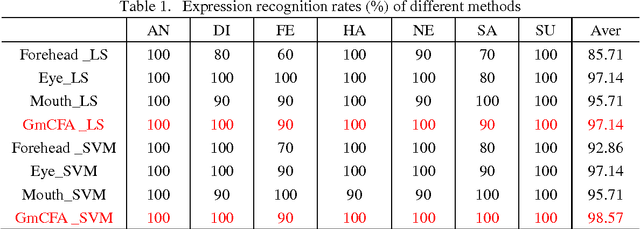

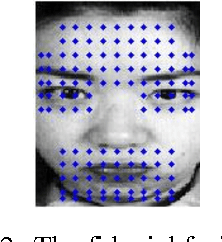

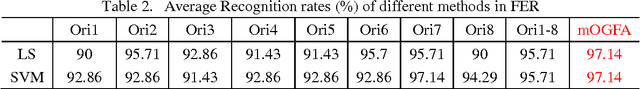

Multi-view Face Analysis Based on Gabor Features

Mar 06, 2014

Abstract: Facial analysis has attracted much attention in the technology for human-machine interfaces. Different classification methods based on sparse representation and Gabor kernels have been widely applied in the field of facial analysis. However, most of these methods treat the face as a whole. Considering the importance of different facial views, in this paper we present multi-view face analysis based on sparse representation and Gabor wavelet coefficients. To evaluate the performance, we conduct face analysis experiments, including face recognition (FR) and face expression recognition (FER), on the JAFFE database. The experiments are conducted in two parts: (1) Face images are divided into three facial parts, which are forehead, eye and mouth. (2) Face images are divided into 8 parts by the orientation of the Gabor kernels. Experimental results demonstrate that the proposed methods can significantly boost the performance and perform better than the other methods.

* 8 pages, 3 figures, Journal of Information and Computational Science
