Xinpeng Li

When Domains Interact: Asymmetric and Order-Sensitive Cross-Domain Effects in Reinforcement Learning for Reasoning

Feb 01, 2026

Abstract:Group Relative Policy Optimization (GRPO) has become a key technique for improving reasoning abilities in large language models, yet its behavior under different domain sequencing strategies is poorly understood. In particular, the impact of sequential (one domain at a time) versus mixed-domain (multiple domains at a time) training in GRPO has not been systematically studied. We provide the first systematic analysis of training-order effects across math, science, logic, and puzzle reasoning tasks. We find that (1) single-domain generalization is highly asymmetric: training on other domains improves math reasoning accuracy by approximately 25%, while yielding negligible transfer to logic and puzzle; (2) cross-domain interactions are highly order-dependent: training in the order math→science achieves 83% / 41% accuracy on math / science, while reversing the order to science→math degrades performance to 77% / 25%; (3) no single strategy is universally optimal in multi-domain training: sequential training favors math (up to 84%), mixed training favors science and logic, and poor ordering can incur large performance gaps (from 70% to 56%). Overall, our findings demonstrate that GRPO under multi-domain settings exhibits pronounced asymmetry, order sensitivity, and strategy dependence, highlighting the necessity of domain-aware and order-aware training design.
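The two sequencing strategies compared in this abstract can be illustrated with a small data-scheduling sketch. This is not the authors' training code: the function names `sequential_schedule` and `mixed_schedule`, the batch layout, and the uniform shuffling used for the mixed case are all assumptions made for illustration, and the GRPO update itself is omitted.

```python
import random
from typing import Dict, Iterator, List, Sequence, Tuple

Example = Tuple[str, str]  # (domain name, prompt text)


def sequential_schedule(
    data: Dict[str, List[str]], order: Sequence[str], batch_size: int
) -> Iterator[List[Example]]:
    """Yield batches one domain at a time, following the given domain order."""
    for domain in order:
        prompts = data[domain]
        for start in range(0, len(prompts), batch_size):
            yield [(domain, p) for p in prompts[start:start + batch_size]]


def mixed_schedule(
    data: Dict[str, List[str]], batch_size: int, seed: int = 0
) -> Iterator[List[Example]]:
    """Yield batches from a single shuffled pool covering all domains.

    Uniform shuffling is an assumption; the abstract does not say how
    domains are mixed within a batch.
    """
    pool = [(domain, p) for domain, prompts in data.items() for p in prompts]
    random.Random(seed).shuffle(pool)
    for start in range(0, len(pool), batch_size):
        yield pool[start:start + batch_size]


if __name__ == "__main__":
    # Hypothetical toy prompts, two domains only.
    toy = {"math": ["m1", "m2", "m3", "m4"], "science": ["s1", "s2", "s3", "s4"]}
    for batch in sequential_schedule(toy, ["math", "science"], batch_size=2):
        print("sequential:", batch)
    for batch in mixed_schedule(toy, batch_size=2):
        print("mixed:", batch)
```

Swapping the `order` argument (for example `["science", "math"]`) gives the reversed-order condition that the abstract reports as order-sensitive.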

Mid-Think: Training-Free Intermediate-Budget Reasoning via Token-Level Triggers

Jan 11, 2026

Abstract:Hybrid reasoning language models are commonly controlled through high-level Think/No-think instructions to regulate reasoning behavior, yet we find that such mode switching is largely driven by a small set of trigger tokens rather than the instructions themselves. Through attention analysis and controlled prompting experiments, we show that a leading "Okay" token induces reasoning behavior, while the newline pattern following "</think>" suppresses it. Based on this observation, we propose Mid-Think, a simple training-free prompting format that combines these triggers to achieve intermediate-budget reasoning, consistently outperforming fixed-token and prompt-based baselines in terms of the accuracy-length trade-off. Furthermore, applying Mid-Think to RL training after SFT reduces training time by approximately 15% while improving final performance of Qwen3-8B on AIME from 69.8% to 72.4% and on GPQA from 58.5% to 61.1%, demonstrating its effectiveness for both inference-time control and RL-based reasoning training.

A New Framework for Explainable Rare Cell Identification in Single-Cell Transcriptomics Data

Jan 04, 2026

Abstract:The detection of rare cell types in single-cell transcriptomics data is crucial for elucidating disease pathogenesis and tissue development dynamics. However, a critical gap persists in current methods: they cannot provide a gene-based explanation for each cell they detect as rare. We identify three primary sources of this deficiency. First, anomaly detectors often function as "black boxes", designed to detect anomalies but unable to explain why a cell is anomalous. Second, the standard analytical framework hinders interpretability by relying on dimensionality reduction techniques, such as Principal Component Analysis (PCA), which transform meaningful gene expression data into abstract, uninterpretable features. Finally, existing explanation algorithms cannot be readily applied to this domain, as single-cell data is characterized by high dimensionality, noise, and substantial sparsity. To overcome these limitations, we introduce a framework for explainable anomaly detection in single-cell transcriptomics data which not only identifies individual anomalies, but also provides a visual, gene-based explanation of what makes an instance anomalous. This framework has two key ingredients that are absent from current methods applied in this domain. First, it eliminates the PCA step, which previous studies deemed an essential component. Second, it employs a state-of-the-art anomaly detector and explainer as an efficient and effective means to find each rare cell and its relevant gene subspace, providing explanations for each rare cell as well as for the typical normal cell associated with the rare cell's closest normal cells.

Distribution-Based Feature Attribution for Explaining the Predictions of Any Classifier

Nov 12, 2025

Abstract:The proliferation of complex, black-box AI models has intensified the need for techniques that can explain their decisions. Feature attribution methods have become a popular solution for providing post-hoc explanations, yet the field has historically lacked a formal problem definition. This paper addresses this gap by introducing a formal definition for the problem of feature attribution, which stipulates that explanations be supported by an underlying probability distribution represented by the given dataset. Our analysis reveals that many existing model-agnostic methods fail to meet this criterion, while even those that do often possess other limitations. To overcome these challenges, we propose Distributional Feature Attribution eXplanations (DFAX), a novel, model-agnostic method for feature attribution. DFAX is the first feature attribution method to explain classifier predictions directly based on the data distribution. We show through extensive experiments that DFAX is more effective and efficient than state-of-the-art baselines.

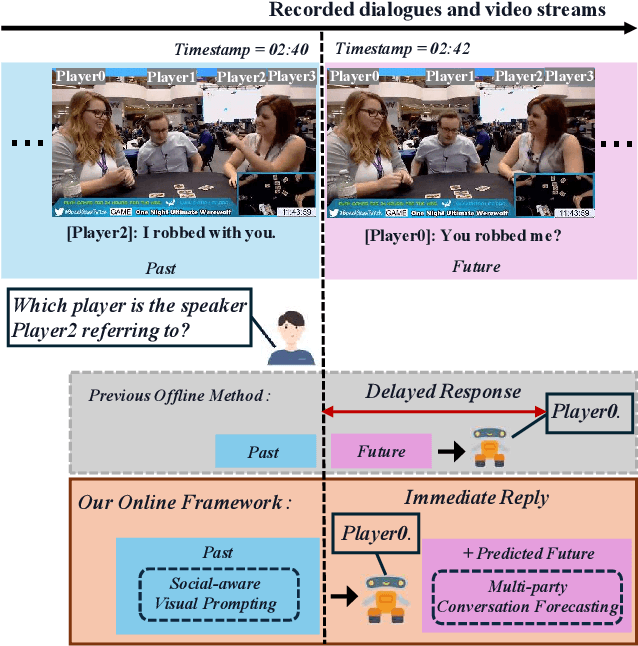

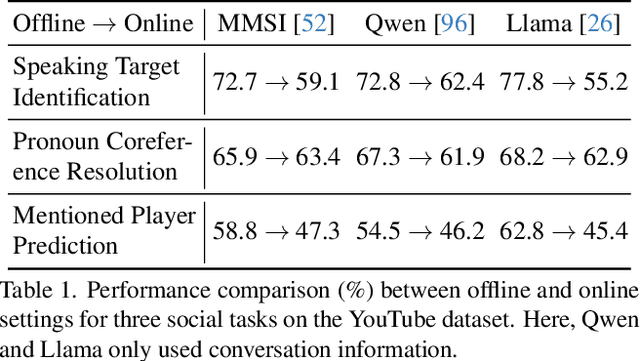

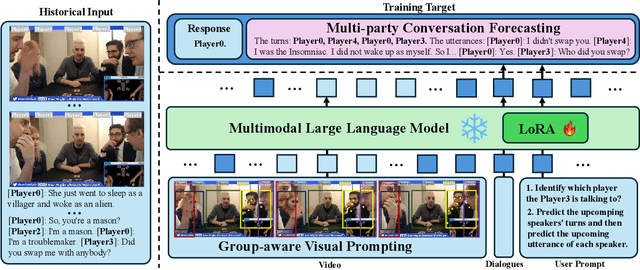

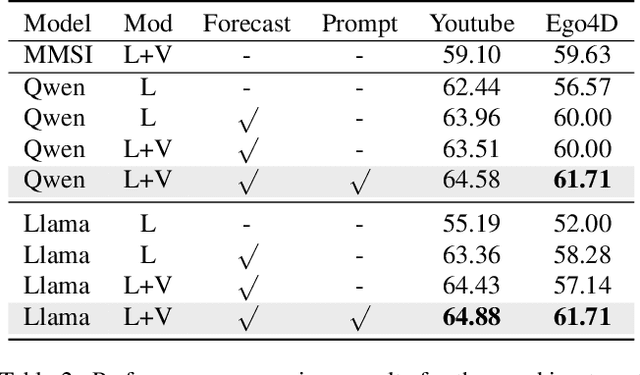

Towards Online Multi-Modal Social Interaction Understanding

Mar 25, 2025

Abstract:Multimodal social interaction understanding (MMSI) is critical in human-robot interaction systems. In real-world scenarios, AI agents are required to provide real-time feedback. However, existing models often depend on both past and future contexts, which prevents their application to real-world problems. To bridge this gap, we propose an online MMSI setting, where the model must resolve MMSI tasks using only historical information, such as recorded dialogues and video streams. To address the challenge of missing future context, we develop a novel framework, named Online-MMSI-VLM, that leverages two complementary strategies: multi-party conversation forecasting and social-aware visual prompting with multi-modal large language models. First, to enrich linguistic context, multi-party conversation forecasting simulates potential future utterances in a coarse-to-fine manner, anticipating upcoming speaker turns and then generating fine-grained conversational details. Second, to effectively incorporate visual social cues like gaze and gesture, social-aware visual prompting highlights the social dynamics in video with bounding boxes and body keypoints for each person and frame. Extensive experiments on three tasks and two datasets demonstrate that our method achieves state-of-the-art performance and significantly outperforms baseline models, indicating its effectiveness on Online-MMSI. The code and pre-trained models will be publicly released at: https://github.com/Sampson-Lee/OnlineMMSI.

An Online Automatic Modulation Classification Scheme Based on Isolation Distributional Kernel

Oct 03, 2024

Abstract:Automatic Modulation Classification (AMC), as a crucial technique in modern non-cooperative communication networks, plays a key role in various civil and military applications. However, existing AMC methods are usually complicated and can work only in batch mode due to their high computational complexity. This paper introduces a new online AMC scheme based on Isolation Distributional Kernel. Our method stands out in two aspects. Firstly, it is the first proposal to represent baseband signals using a distributional kernel. Secondly, it introduces a pioneering AMC technique that works well in online settings under realistic time-varying channel conditions. Through extensive experiments in online settings, we demonstrate the effectiveness of the proposed classifier. Our results indicate that the proposed approach outperforms existing baseline models, including two state-of-the-art deep learning classifiers. Moreover, it distinguishes itself as the first online classifier for AMC with linear time complexity, which marks a significant efficiency boost for real-time applications.

Two in One Go: Single-stage Emotion Recognition with Decoupled Subject-context Transformer

Apr 29, 2024

Abstract:Emotion recognition aims to discern the emotional state of subjects within an image, relying on subject-centric and contextual visual cues. Current approaches typically follow a two-stage pipeline: first localize subjects with off-the-shelf detectors, then perform emotion classification through the late fusion of subject and context features. However, this complicated paradigm suffers from disjoint training stages and limited interaction between fine-grained subject-context elements. To address this challenge, we present a single-stage emotion recognition approach, employing a Decoupled Subject-Context Transformer (DSCT), for simultaneous subject localization and emotion classification. Rather than compartmentalizing training stages, we jointly leverage box and emotion signals as supervision to enrich subject-centric feature learning. Furthermore, we introduce DSCT to facilitate interactions between fine-grained subject-context cues in a decouple-then-fuse manner. The decoupled query tokens (subject queries and context queries) gradually intertwine across layers within DSCT, during which spatial and semantic relations are exploited and aggregated. We evaluate our single-stage framework on two widely used context-aware emotion recognition datasets, CAER-S and EMOTIC. Our approach surpasses two-stage alternatives with fewer parameters, achieving a 3.39% accuracy improvement and a 6.46% average precision gain on the CAER-S and EMOTIC datasets, respectively.

Real3D-AD: A Dataset of Point Cloud Anomaly Detection

Sep 26, 2023

Abstract:High-precision point cloud anomaly detection is the gold standard for identifying defects in advanced machining and precision manufacturing. Despite some methodological advances in this area, the scarcity of datasets and the lack of a systematic benchmark hinder its development. We introduce Real3D-AD, a challenging high-precision point cloud anomaly detection dataset, addressing these limitations in the field. With 1,254 high-resolution 3D items, each containing from forty thousand to millions of points, Real3D-AD is the largest dataset for high-precision 3D industrial anomaly detection to date. Real3D-AD surpasses existing 3D anomaly detection datasets in point cloud resolution (0.0010 mm to 0.0015 mm), 360-degree coverage, and perfect prototypes. Additionally, we present a comprehensive benchmark for Real3D-AD, revealing the absence of baseline methods for high-precision point cloud anomaly detection. To address this, we propose Reg3D-AD, a registration-based 3D anomaly detection method incorporating a novel feature memory bank that preserves local and global representations. Extensive experiments on the Real3D-AD dataset highlight the effectiveness of Reg3D-AD. For reproducibility and accessibility, we provide the Real3D-AD dataset, benchmark source code, and Reg3D-AD on our website: https://github.com/M-3LAB/Real3D-AD.

MEFLUT: Unsupervised 1D Lookup Tables for Multi-exposure Image Fusion

Sep 21, 2023

Abstract:In this paper, we introduce a new approach for high-quality multi-exposure image fusion (MEF). We show that the fusion weights of an exposure can be encoded into a 1D lookup table (LUT), which takes a pixel intensity value as input and produces a fusion weight as output. We learn one 1D LUT for each exposure, so that every pixel of an exposure can independently query that exposure's 1D LUT for high-quality and efficient fusion. Specifically, to learn these 1D LUTs, we introduce attention mechanisms along the frame, channel, and spatial dimensions into the MEF task, which brings significant quality improvement over the state-of-the-art (SOTA). In addition, we collect a new MEF dataset consisting of 960 samples, 155 of which are manually tuned by professionals as ground truth for evaluation. Our network is trained on this dataset in an unsupervised manner. Extensive experiments are conducted to demonstrate the effectiveness of all the newly proposed components, and results show that our approach outperforms the SOTA on our dataset and on another representative dataset, SICE, both qualitatively and quantitatively. Moreover, our 1D LUT approach takes less than 4 ms to process a 4K image on a PC GPU. Given its high quality, efficiency, and robustness, our method has been shipped on millions of Android mobile devices across multiple brands worldwide. Code is available at: https://github.com/Hedlen/MEFLUT.
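The lookup step described in this abstract, where each pixel's intensity indexes its exposure's 1D LUT to obtain a fusion weight, can be sketched as follows. This is only an illustration under stated assumptions: the function name `fuse_with_luts` is hypothetical, the inputs are taken to be single-channel 8-bit images, the per-pixel normalization of weights across exposures is assumed rather than taken from the paper, and the Gaussian-shaped LUTs in the demo are placeholders for the learned ones.

```python
import numpy as np


def fuse_with_luts(exposures: np.ndarray, luts: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Fuse K exposures using one 1D LUT per exposure.

    exposures: integer array of shape (K, H, W) with values in [0, 255].
    luts:      float array of shape (K, 256); luts[k][v] is the fusion
               weight for intensity v in exposure k.
    Returns a float array of shape (H, W).
    """
    exposures = np.asarray(exposures)
    luts = np.asarray(luts, dtype=np.float64)
    # Index of each exposure, shaped to broadcast against (K, H, W).
    k_idx = np.arange(exposures.shape[0])[:, None, None]
    # Each pixel queries the LUT of its own exposure: weights[k, h, w] = luts[k, exposures[k, h, w]].
    weights = luts[k_idx, exposures]
    # Assumed step: normalize weights across exposures at every pixel.
    weights = weights / (weights.sum(axis=0, keepdims=True) + eps)
    return (weights * exposures).sum(axis=0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    stack = rng.integers(0, 256, size=(3, 4, 4), dtype=np.uint8)
    # Placeholder LUTs favoring mid-gray intensities; real LUTs would be learned.
    levels = np.arange(256)
    toy_luts = np.tile(np.exp(-((levels - 128.0) ** 2) / (2 * 50.0 ** 2)), (3, 1))
    print(fuse_with_luts(stack, toy_luts).shape)
```

Because the weight computation is a pure table lookup per pixel, the cost is independent of how the LUTs were learned, which is consistent with the efficiency claim in the abstract.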

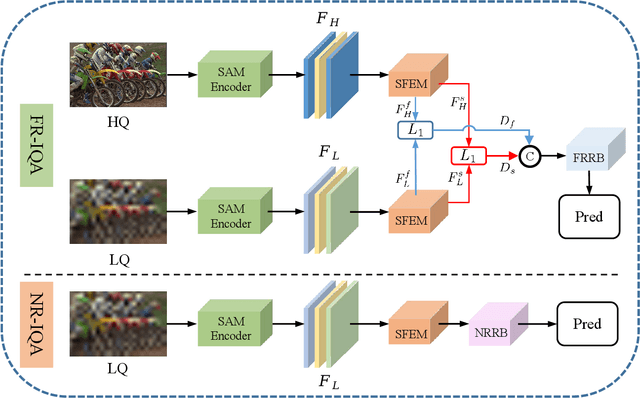

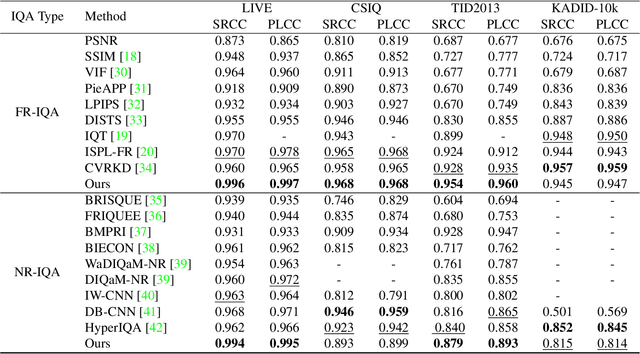

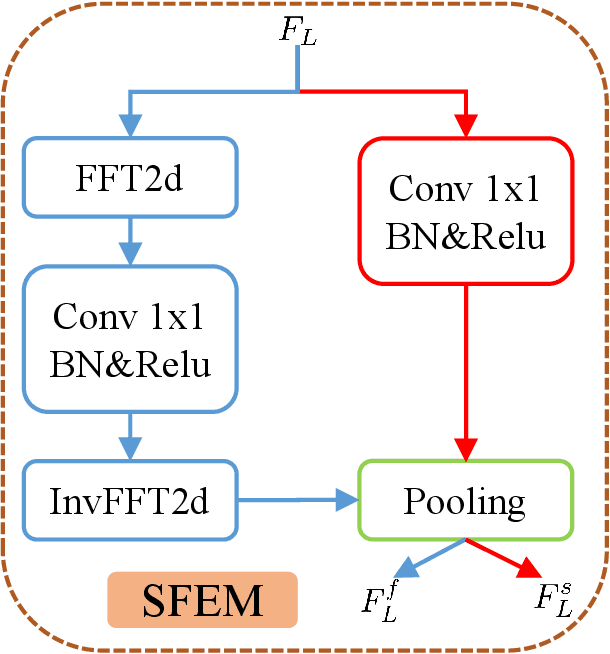

SAM-IQA: Can Segment Anything Boost Image Quality Assessment?

Jul 10, 2023

Abstract:Image Quality Assessment (IQA) is a challenging task that requires training on massive datasets to achieve accurate predictions. However, due to the lack of IQA data, deep learning-based IQA methods typically rely on networks pre-trained on massive datasets, such as ResNet trained on ImageNet, as feature extractors to enhance their generalization ability. In this paper, we utilize the encoder of Segment Anything, a recently proposed segmentation model trained on a massive dataset, for high-level semantic feature extraction. Most IQA methods are limited to extracting spatial-domain features, while frequency-domain features have been shown to better represent noise and blur. Therefore, we leverage both spatial-domain and frequency-domain features by applying standard and Fourier convolutions on the extracted features, respectively. Extensive experiments are conducted to demonstrate the effectiveness of all the proposed components, and results show that our approach outperforms the state-of-the-art (SOTA) on four representative datasets, both qualitatively and quantitatively. Our experiments confirm the powerful feature extraction capabilities of Segment Anything and highlight the value of combining spatial-domain and frequency-domain features in IQA tasks. Code: https://github.com/Hedlen/SAM-IQA
