Xian Zhong

on behalf of EUCanImage working group

AGMA: Adaptive Gaussian Mixture Anchors for Prior-Guided Multimodal Human Trajectory Forecasting

Feb 04, 2026

Abstract: Human trajectory forecasting requires capturing the multimodal nature of pedestrian behavior. However, existing approaches suffer from prior misalignment. Their learned or fixed priors often fail to capture the full distribution of plausible futures, limiting both prediction accuracy and diversity. We theoretically establish that prediction error is lower-bounded by prior quality, making prior modeling a key performance bottleneck. Guided by this insight, we propose AGMA (Adaptive Gaussian Mixture Anchors), which constructs expressive priors through two stages: extracting diverse behavioral patterns from training data and distilling them into a scene-adaptive global prior for inference. Extensive experiments on ETH-UCY, Stanford Drone, and JRDB datasets demonstrate that AGMA achieves state-of-the-art performance, confirming the critical role of high-quality priors in trajectory forecasting.

Robust Multicentre Detection and Classification of Colorectal Liver Metastases on CT: Application of Foundation Models

Jan 12, 2026

Abstract: Colorectal liver metastases (CRLM) are a major cause of cancer-related mortality, and reliable detection on CT remains challenging in multi-centre settings. We developed a foundation model-based AI pipeline for patient-level classification and lesion-level detection of CRLM on contrast-enhanced CT, integrating uncertainty quantification and explainability. CT data from the EuCanImage consortium (n=2437) and an external TCIA cohort (n=197) were used. Among several pretrained models, UMedPT achieved the best performance and was fine-tuned with an MLP head for classification and an FCOS-based head for lesion detection. The classification model achieved an AUC of 0.90 and a sensitivity of 0.82 on the combined test set, with a sensitivity of 0.85 on the external cohort. Excluding the most uncertain 20 percent of cases improved AUC to 0.91 and balanced accuracy to 0.86. Decision curve analysis showed clinical benefit for threshold probabilities between 0.30 and 0.40. The detection model identified 69.1 percent of lesions overall, increasing from 30 percent to 98 percent across lesion size quartiles. Grad-CAM highlighted lesion-corresponding regions in high-confidence cases. These results demonstrate that foundation model-based pipelines can support robust and interpretable CRLM detection and classification across heterogeneous CT data.
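The abstract reports that excluding the most uncertain 20 percent of cases improved the metrics, but it does not say how uncertainty was scored. The sketch below illustrates the general idea of uncertainty-based exclusion only. It assumes binary predictive entropy as the uncertainty score, and the function names `binary_entropy` and `keep_most_certain` are hypothetical, not taken from the paper.

```python
import numpy as np


def binary_entropy(probs):
    """Entropy of a Bernoulli prediction; highest at p = 0.5.

    Assumption: entropy stands in for whatever uncertainty measure
    the paper actually uses.
    """
    p = np.clip(np.asarray(probs, dtype=float), 1e-12, 1 - 1e-12)
    return -(p * np.log(p) + (1 - p) * np.log(1 - p))


def keep_most_certain(probs, exclude_fraction=0.2):
    """Return a boolean mask that drops the most uncertain cases."""
    uncertainty = binary_entropy(probs)
    n_drop = int(round(exclude_fraction * len(uncertainty)))
    order = np.argsort(uncertainty, kind="stable")  # ascending uncertainty
    keep = np.ones(len(uncertainty), dtype=bool)
    if n_drop > 0:
        keep[order[-n_drop:]] = False  # drop the highest-entropy cases
    return keep


# Five hypothetical patient-level probabilities; one case (20%) is excluded.
mask = keep_most_certain([0.05, 0.5, 0.95, 0.45, 0.9])
```

Metrics such as AUC or balanced accuracy would then be recomputed on the cases where `mask` is true, which is how an exclusion analysis of this kind is typically reported.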

Expanding Zero-Shot Object Counting with Rich Prompts

May 21, 2025

Abstract: Expanding pre-trained zero-shot counting models to handle unseen categories requires more than simply adding new prompts, as this approach does not achieve the necessary alignment between text and visual features for accurate counting. We introduce RichCount, the first framework to address these limitations, employing a two-stage training strategy that enhances text encoding and strengthens the model's association with objects in images. RichCount improves zero-shot counting for unseen categories through two key objectives: (1) enriching text features with a feed-forward network and adapter trained on text-image similarity, thereby creating robust, aligned representations; and (2) applying this refined encoder to counting tasks, enabling effective generalization across diverse prompts and complex images. In this manner, RichCount goes beyond simple prompt expansion to establish meaningful feature alignment that supports accurate counting across novel categories. Extensive experiments on three benchmark datasets demonstrate the effectiveness of RichCount, achieving state-of-the-art performance in zero-shot counting and significantly enhancing generalization to unseen categories in open-world scenarios.

SOTA: Spike-Navigated Optimal TrAnsport Saliency Region Detection in Composite-bias Videos

May 01, 2025

Abstract: Existing saliency detection methods struggle in real-world scenarios due to motion blur and occlusions. In contrast, spike cameras, with their high temporal resolution, significantly enhance visual saliency maps. However, the composite noise inherent to spike camera imaging introduces discontinuities in saliency detection. Low-quality samples further distort model predictions, leading to saliency bias. To address these challenges, we propose Spike-navigated Optimal TrAnsport Saliency Region Detection (SOTA), a framework that leverages the strengths of spike cameras while mitigating biases in both spatial and temporal dimensions. Our method introduces Spike-based Micro-debias (SM) to capture subtle frame-to-frame variations and preserve critical details, even under minimal scene or lighting changes. Additionally, Spike-based Global-debias (SG) refines predictions by reducing inconsistencies across diverse conditions. Extensive experiments on real and synthetic datasets demonstrate that SOTA outperforms existing methods by eliminating composite noise bias. Our code and dataset will be released at https://github.com/lwxfight/sota.

Beyond the Horizon: Decoupling UAVs Multi-View Action Recognition via Partial Order Transfer

Apr 29, 2025

Abstract: Action recognition in unmanned aerial vehicles (UAVs) poses unique challenges due to significant view variations along the vertical spatial axis. Unlike traditional ground-based settings, UAVs capture actions from a wide range of altitudes, resulting in considerable appearance discrepancies. We introduce a multi-view formulation tailored to varying UAV altitudes and empirically observe a partial order among views, where recognition accuracy consistently decreases as the altitude increases. This motivates a novel approach that explicitly models the hierarchical structure of UAV views to improve recognition performance across altitudes. To this end, we propose the Partial Order Guided Multi-View Network (POG-MVNet), designed to address drastic view variations by effectively leveraging view-dependent information across different altitude levels. The framework comprises three key components: a View Partition (VP) module, which uses the head-to-body ratio to group views by altitude; an Order-aware Feature Decoupling (OFD) module, which disentangles action-relevant and view-specific features under partial order guidance; and an Action Partial Order Guide (APOG), which leverages the partial order to transfer informative knowledge from easier views to support learning in more challenging ones. We conduct experiments on the Drone-Action, MOD20, and UAV datasets, demonstrating that POG-MVNet significantly outperforms competing methods. For example, POG-MVNet achieves a 4.7% improvement on the Drone-Action dataset and a 3.5% improvement on the UAV dataset compared to the state-of-the-art methods ASAT and FAR. The code for POG-MVNet will be made available soon.

PAD: Phase-Amplitude Decoupling Fusion for Multi-Modal Land Cover Classification

Apr 27, 2025

Abstract: The fusion of Synthetic Aperture Radar (SAR) and RGB imagery for land cover classification remains challenging due to modality heterogeneity and the underutilization of spectral complementarity. Existing methods often fail to decouple shared structural features from modality-specific radiometric attributes, leading to feature conflicts and information loss. To address this issue, we propose Phase-Amplitude Decoupling (PAD), a frequency-aware framework that separates phase (modality-shared) and amplitude (modality-specific) components in the Fourier domain. Specifically, PAD consists of two key components: 1) Phase Spectrum Correction (PSC), which aligns cross-modal phase features through convolution-guided scaling to enhance geometric consistency, and 2) Amplitude Spectrum Fusion (ASF), which dynamically integrates high-frequency details and low-frequency structures using frequency-adaptive multilayer perceptrons. This approach leverages SAR's sensitivity to morphological features and RGB's spectral richness. Extensive experiments on WHU-OPT-SAR and DDHR-SK datasets demonstrate state-of-the-art performance. Our work establishes a new paradigm for physics-aware multi-modal fusion in remote sensing. The code will be available at https://github.com/RanFeng2/PAD.
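The decomposition that PAD builds on, splitting a signal into phase and amplitude in the Fourier domain, can be illustrated with a plain 2D FFT. This is a minimal sketch of that decomposition only, not of the PSC or ASF modules. The function names and the random stand-in images are assumptions made for illustration.

```python
import numpy as np


def split_phase_amplitude(image):
    """Return (amplitude, phase) of the 2D Fourier spectrum of an image."""
    spectrum = np.fft.fft2(image)
    return np.abs(spectrum), np.angle(spectrum)


def recombine(amplitude, phase):
    """Rebuild a spatial-domain image from an amplitude and a phase spectrum."""
    spectrum = amplitude * np.exp(1j * phase)
    return np.real(np.fft.ifft2(spectrum))


rng = np.random.default_rng(0)
sar = rng.random((32, 32))  # stand-in for a single-channel SAR patch
rgb = rng.random((32, 32))  # stand-in for one channel of an RGB patch

sar_amp, sar_phase = split_phase_amplitude(sar)
rgb_amp, rgb_phase = split_phase_amplitude(rgb)

# Recombining a modality's own amplitude and phase recovers the input.
sar_rebuilt = recombine(sar_amp, sar_phase)

# Mixing one modality's phase with the other's amplitude gives a hybrid image,
# which is the kind of cross-modal manipulation the decomposition allows.
hybrid = recombine(rgb_amp, sar_phase)
```

The round trip through `split_phase_amplitude` and `recombine` is lossless up to floating-point error, so any learned correction or fusion can operate on the two components separately before the inverse transform.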

QIRL: Boosting Visual Question Answering via Optimized Question-Image Relation Learning

Apr 04, 2025

Abstract: Existing debiasing approaches in Visual Question Answering (VQA) primarily focus on enhancing visual learning, integrating auxiliary models, or employing data augmentation strategies. However, these methods exhibit two major drawbacks. First, current debiasing techniques fail to capture the superior relation between images and texts because prevalent learning frameworks do not enable models to extract deeper correlations from highly contrasting samples. Second, they do not assess the relevance between the input question and image during inference, as no prior work has examined the degree of input relevance in debiasing studies. Motivated by these limitations, we propose a novel framework, Optimized Question-Image Relation Learning (QIRL), which employs a generation-based self-supervised learning strategy. Specifically, two modules are introduced to address the aforementioned issues. The Negative Image Generation (NIG) module automatically produces highly irrelevant question-image pairs during training to enhance correlation learning, while the Irrelevant Sample Identification (ISI) module improves model robustness by detecting and filtering irrelevant inputs, thereby reducing prediction errors. Furthermore, to validate our concept of reducing output errors through filtering unrelated question-image inputs, we propose a specialized metric to evaluate the performance of the ISI module. Notably, our approach is model-agnostic and can be integrated with various VQA models. Extensive experiments on VQA-CPv2 and VQA-v2 demonstrate the effectiveness and generalization ability of our method. Among data augmentation strategies, our approach achieves state-of-the-art results.

SU-YOLO: Spiking Neural Network for Efficient Underwater Object Detection

Mar 31, 2025

Abstract: Underwater object detection is critical for oceanic research and industrial safety inspections. However, the complex optical environment and the limited resources of underwater equipment pose significant challenges to achieving high accuracy and low power consumption. To address these issues, we propose Spiking Underwater YOLO (SU-YOLO), a Spiking Neural Network (SNN) model. Leveraging the lightweight and energy-efficient properties of SNNs, SU-YOLO incorporates a novel spike-based underwater image denoising method based solely on integer addition, which enhances the quality of feature maps with minimal computational overhead. In addition, we introduce Separated Batch Normalization (SeBN), a technique that normalizes feature maps independently across multiple time steps and is optimized for integration with residual structures to capture the temporal dynamics of SNNs more effectively. The redesigned spiking residual blocks integrate the Cross Stage Partial Network (CSPNet) with the YOLO architecture to mitigate spike degradation and enhance the model's feature extraction capabilities. Experimental results on the URPC2019 underwater dataset demonstrate that SU-YOLO achieves an mAP of 78.8% with 6.97M parameters and an energy consumption of 2.98 mJ, surpassing mainstream SNN models in both detection accuracy and computational efficiency. These results underscore the potential of SNNs for engineering applications. The code is available at https://github.com/lwxfight/snn-underwater.
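The abstract describes SeBN only as normalizing feature maps independently across time steps. One possible reading is sketched below: separate per-channel batch statistics for each time step, rather than statistics pooled over all steps. The tensor layout `(T, N, C, H, W)`, the function name, and the absence of learnable scale and shift parameters are all assumptions; the actual SeBN layer and its residual integration may differ.

```python
import numpy as np


def separated_batch_norm(x, eps=1e-5):
    """Normalize each time step of an SNN feature tensor on its own.

    x has the assumed shape (T, N, C, H, W): time steps, batch, channels,
    height, width. Statistics are computed per time step and per channel
    over the batch and spatial axes, so no statistics are shared across
    time steps.
    """
    mean = x.mean(axis=(1, 3, 4), keepdims=True)  # shape (T, 1, C, 1, 1)
    var = x.var(axis=(1, 3, 4), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


rng = np.random.default_rng(0)
# Hypothetical features whose scale grows with the time step index.
features = rng.normal(size=(4, 8, 3, 5, 5)) * np.arange(1, 5).reshape(4, 1, 1, 1, 1)
normalized = separated_batch_norm(features)
```

With pooled statistics, the later and larger-scale time steps would dominate the mean and variance; computing them per step keeps every time step on a comparable scale.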

SpikeDerain: Unveiling Clear Videos from Rainy Sequences Using Color Spike Streams

Mar 26, 2025

Abstract: Restoring clear frames from rainy videos presents a significant challenge due to the rapid motion of rain streaks. Traditional frame-based visual sensors, which capture scene content synchronously, struggle to capture the fast-moving details of rain accurately. In recent years, neuromorphic sensors have introduced a new paradigm for dynamic scene perception, offering microsecond temporal resolution and high dynamic range. However, existing multimodal methods that fuse event streams with RGB images face difficulties in handling the complex spatiotemporal interference of raindrops in real scenes, primarily due to hardware synchronization errors and computational redundancy. In this paper, we propose a Color Spike Stream Deraining Network (SpikeDerain), capable of reconstructing spike streams of dynamic scenes and accurately removing rain streaks. To address the challenges of data scarcity in real continuous rainfall scenes, we design a physically interpretable rain streak synthesis model that generates parameterized continuous rain patterns based on arbitrary background images. Experimental results demonstrate that the network, trained with this synthetic data, remains highly robust even under extreme rainfall conditions. These findings highlight the effectiveness and robustness of our method across varying rainfall levels and datasets, setting new standards for video deraining tasks. The code will be released soon.

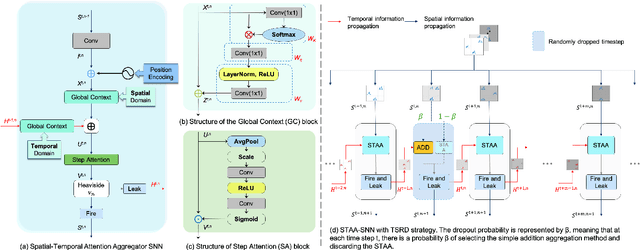

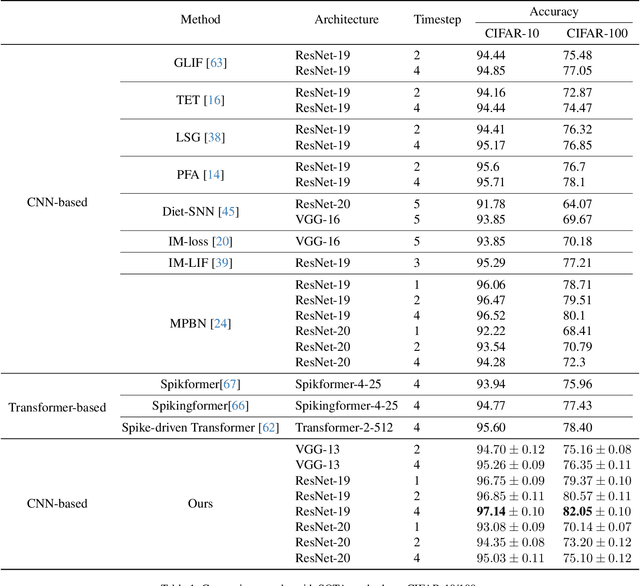

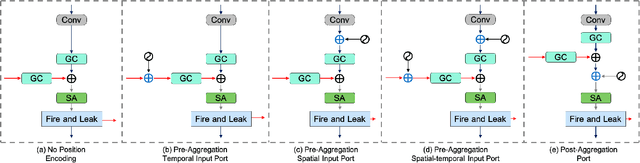

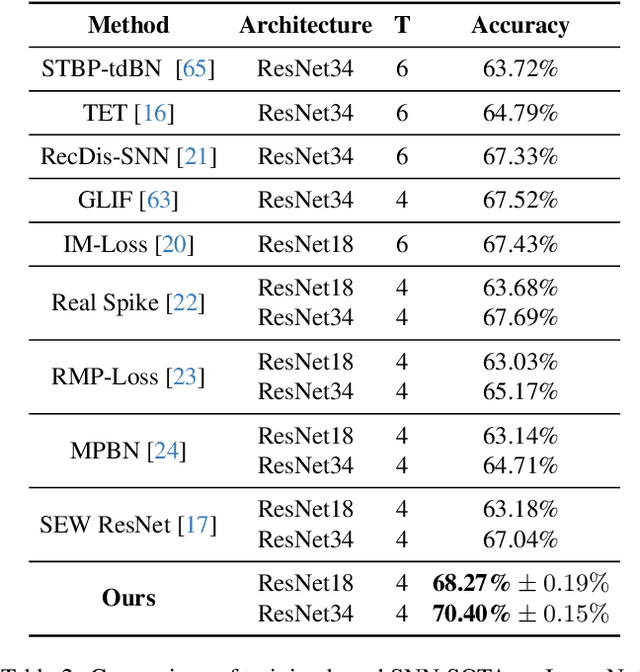

STAA-SNN: Spatial-Temporal Attention Aggregator for Spiking Neural Networks

Mar 05, 2025

Abstract: Spiking Neural Networks (SNNs) have gained significant attention due to their biological plausibility and energy efficiency, making them promising alternatives to Artificial Neural Networks (ANNs). However, the performance gap between SNNs and ANNs remains a substantial challenge hindering the widespread adoption of SNNs. In this paper, we propose a Spatial-Temporal Attention Aggregator SNN (STAA-SNN) framework, which dynamically focuses on and captures both spatial and temporal dependencies. First, we introduce a spike-driven self-attention mechanism specifically designed for SNNs. In addition, we pioneer the use of position encoding to integrate latent temporal relationships into the incoming features. For spatial-temporal information aggregation, we employ step attention to selectively amplify relevant features at different steps. Finally, we implement a time-step random dropout strategy to avoid local optima. As a result, STAA-SNN effectively captures both spatial and temporal dependencies, enabling the model to analyze complex patterns and make accurate predictions. The framework demonstrates exceptional performance across diverse datasets and exhibits strong generalization capabilities. Notably, STAA-SNN achieves state-of-the-art results on the neuromorphic dataset CIFAR10-DVS, with remarkable performance of 97.14%, 82.05% and 70.40% on the static datasets CIFAR-10, CIFAR-100 and ImageNet, respectively. Furthermore, our model exhibits improved performance ranging from 0.33% to 2.80% with fewer time steps. The code for the model is available on GitHub.
