Xi Peng

Rutgers University

Reliable Thinking with Images

Feb 16, 2026

Abstract: As a multimodal extension of Chain-of-Thought (CoT), Thinking with Images (TWI) has recently emerged as a promising way to enhance the reasoning capability of Multi-modal Large Language Models (MLLMs) by generating interleaved CoT that incorporates visual cues into the textual reasoning process. However, the success of existing TWI methods heavily relies on the assumption that interleaved image-text CoTs are faultless, which is easily violated in real-world scenarios due to the complexity of multimodal understanding. In this paper, we reveal and study a highly practical yet under-explored problem in TWI, termed Noisy Thinking (NT). Specifically, NT refers to imperfect visual cue mining and answer reasoning. As the saying goes, "One mistake leads to another": an erroneous interleaved CoT causes error accumulation, significantly degrading the performance of MLLMs. To solve the NT problem, we propose a novel method dubbed Reliable Thinking with Images (RTWI). In brief, RTWI estimates the reliability of visual cues and textual CoT in a unified text-centric manner and accordingly employs robust filtering and voting modules to prevent NT from contaminating the final answer. Extensive experiments on seven benchmarks verify the effectiveness of RTWI against NT.
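To make the "filtering and voting" idea concrete, here is a minimal sketch of reliability-weighted voting over several reasoning chains. It is an illustration only, not the RTWI implementation: the function name `reliable_vote`, the 0.5 threshold, and the reliability scores are all assumptions, and the abstract does not say how reliability is actually estimated.

```python
from collections import defaultdict


def reliable_vote(candidates, threshold=0.5):
    """Pick an answer from (answer, reliability) pairs.

    Hypothetical sketch: chains below `threshold` are filtered out, then
    the remaining answers are voted on with reliability as the weight.
    """
    kept = [(answer, score) for answer, score in candidates if score >= threshold]
    if not kept:
        # Nothing passed the filter, so fall back to voting over everything.
        kept = candidates
    totals = defaultdict(float)
    for answer, score in kept:
        totals[answer] += score
    return max(totals, key=totals.get)


# Toy reasoning chains with made-up reliability scores.
chains = [("B", 0.9), ("A", 0.6), ("A", 0.55), ("C", 0.2)]
answer = reliable_vote(chains)
```

In this toy input the low-reliability chain for "C" is dropped, and the two moderately reliable chains for "A" together outweigh the single chain for "B".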

ARK: A Dual-Axis Multimodal Retrieval Benchmark along Reasoning and Knowledge

Feb 10, 2026

Abstract: Existing multimodal retrieval benchmarks largely emphasize semantic matching on daily-life images and offer limited diagnostics of professional knowledge and complex reasoning. To address this gap, we introduce ARK, a benchmark designed to analyze multimodal retrieval from two complementary perspectives: (i) knowledge domains (five domains with 17 subtypes), which characterize the content and expertise retrieval relies on, and (ii) reasoning skills (six categories), which characterize the type of inference over multimodal evidence required to identify the correct candidate. Specifically, ARK evaluates retrieval with both unimodal and multimodal queries and candidates, covering 16 heterogeneous visual data types. To avoid shortcut matching during evaluation, most queries are paired with targeted hard negatives that require multi-step reasoning. We evaluate 23 representative text-based and multimodal retrievers on ARK and observe a pronounced gap between knowledge-intensive and reasoning-intensive retrieval, with fine-grained visual and spatial reasoning emerging as persistent bottlenecks. We further show that simple enhancements such as re-ranking and rewriting yield consistent improvements, but substantial headroom remains.

Multiview Self-Representation Learning across Heterogeneous Views

Feb 04, 2026

Abstract: Features of the same sample generated by different pretrained models often exhibit inherently distinct feature distributions because of discrepancies in the model pretraining objectives or architectures. Learning invariant representations from large-scale unlabeled visual data with various pretrained models in a fully unsupervised transfer manner remains a significant challenge. In this paper, we propose a multiview self-representation learning (MSRL) method in which invariant representations are learned by exploiting the self-representation property of features across heterogeneous views. The features are derived from large-scale unlabeled visual data through transfer learning with various pretrained models and are referred to as heterogeneous multiview data. An individual linear model is stacked on top of its corresponding frozen pretrained backbone. We introduce an information-passing mechanism that relies on self-representation learning to support feature aggregation over the outputs of the linear model. Moreover, an assignment probability distribution consistency scheme is presented to guide multiview self-representation learning by exploiting complementary information across different views. Consequently, representation invariance across different linear models is enforced through this scheme. In addition, we provide a theoretical analysis of the information-passing mechanism, the assignment probability distribution consistency and the incremental views. Extensive experiments with multiple benchmark visual datasets demonstrate that the proposed MSRL method consistently outperforms several state-of-the-art approaches.

Accelerated MR Elastography Using Learned Neural Network Representation

Jan 17, 2026

Abstract: This work aims to develop a deep-learning method for fast, high-resolution MR elastography (MRE) from highly undersampled data without the need for a high-quality training dataset. We first framed the deep neural network representation as a nonlinear extension of the linear subspace model, then used it to represent and reconstruct MRE image repetitions from undersampled k-space data. The network weights were learned using a multi-level k-space consistent loss in a self-supervised manner. To further enhance reconstruction quality, phase-contrast specific magnitude and phase priors were incorporated, including the similarity of anatomical structures and smoothness of wave-induced harmonic displacement. Experiments were conducted using both 3D gradient-echo spiral and multi-slice spin-echo spiral MRE datasets. Compared to the conventional linear subspace-based approaches, the nonlinear network representation method was able to produce superior image reconstruction with suppressed noise and artifacts from a single in-plane spiral arm per MRE repetition (e.g., total R=10), yielding comparable stiffness estimation to the fully sampled data. This work demonstrated the feasibility of using deep network representations, a nonlinear extension of the subspace-based approaches, to model and reconstruct MRE images from highly undersampled data.

Next-Scale Prediction: A Self-Supervised Approach for Real-World Image Denoising

Dec 24, 2025

Abstract: Self-supervised real-world image denoising remains a fundamental challenge, arising from the antagonistic trade-off between decorrelating spatially structured noise and preserving high-frequency details. Existing blind-spot network (BSN) methods rely on pixel-shuffle downsampling (PD) to decorrelate noise, but aggressive downsampling fragments fine structures, while milder downsampling fails to remove correlated noise. To address this, we introduce Next-Scale Prediction (NSP), a novel self-supervised paradigm that decouples noise decorrelation from detail preservation. NSP constructs cross-scale training pairs, where BSN takes low-resolution, fully decorrelated sub-images as input to predict high-resolution targets that retain fine details. As a by-product, NSP naturally supports super-resolution of noisy images without retraining or modification. Extensive experiments demonstrate that NSP achieves state-of-the-art self-supervised denoising performance on real-world benchmarks, significantly alleviating the long-standing conflict between noise decorrelation and detail preservation.
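For readers unfamiliar with pixel-shuffle downsampling (PD), the sketch below shows the general operation on a tiny image: with stride s, the image is split into s×s sub-images by taking every s-th pixel, so pixels that were adjacent in the input land in different sub-images. This illustrates PD only, not the NSP training pipeline; the function name `pd_downsample` is hypothetical.

```python
def pd_downsample(image, stride):
    """Split a 2D image (list of rows) into stride*stride sub-images.

    Sub-image (i, j) keeps the pixels at rows i, i+stride, ... and
    columns j, j+stride, ..., which separates neighboring pixels.
    """
    height, width = len(image), len(image[0])
    if height % stride or width % stride:
        raise ValueError("image size must be divisible by stride")
    return [
        [row[j::stride] for row in image[i::stride]]
        for i in range(stride)
        for j in range(stride)
    ]


# A 4x4 toy image whose pixel values are just their raster index.
image = [
    [0, 1, 2, 3],
    [4, 5, 6, 7],
    [8, 9, 10, 11],
    [12, 13, 14, 15],
]
sub_images = pd_downsample(image, stride=2)
```

A larger stride pushes originally adjacent pixels further apart, which is what weakens spatially correlated noise, but each sub-image also becomes a coarser sampling of the scene. That is the trade-off the abstract describes.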

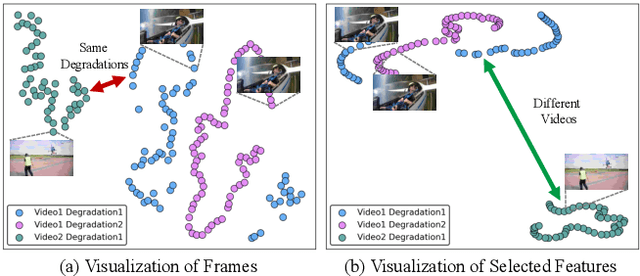

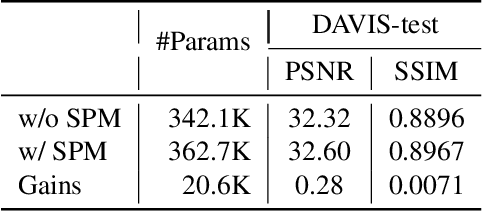

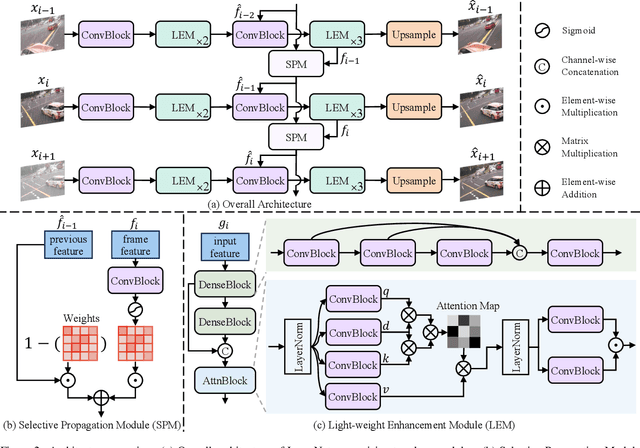

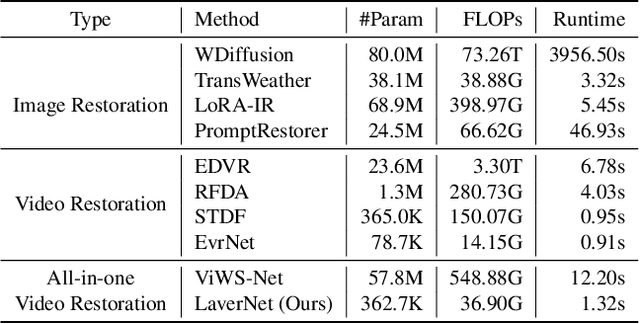

LaverNet: Lightweight All-in-one Video Restoration via Selective Propagation

Dec 18, 2025

Abstract: Recent studies have explored all-in-one video restoration, which handles multiple degradations with a unified model. However, these approaches still face two challenges when dealing with time-varying degradations. First, the degradation can dominate temporal modeling, leading the model to focus on artifacts rather than the video content. Second, current methods typically rely on large models to handle all-in-one restoration, concealing those underlying difficulties. To address these challenges, we propose a lightweight all-in-one video restoration network, LaverNet, with only 362K parameters. To mitigate the impact of degradations on temporal modeling, we introduce a novel propagation mechanism that selectively transmits only degradation-agnostic features across frames. Through LaverNet, we demonstrate that strong all-in-one restoration can be achieved with a compact network. Despite its small size, less than 1% of the parameters of existing models, LaverNet achieves comparable or even superior performance across benchmarks.

Toward Robust and Harmonious Adaptation for Cross-modal Retrieval

Nov 18, 2025

Abstract: Recently, the general-to-customized paradigm has emerged as the dominant approach for Cross-Modal Retrieval (CMR), which reconciles the distribution shift problem between the source domain and the target domain. However, existing general-to-customized CMR methods typically assume that the entire target-domain data is available, an assumption that is easily violated in real-world scenarios, and they thus inevitably suffer from the query shift (QS) problem. Specifically, query shift has the following two characteristics and thus poses new challenges to CMR. i) Online Shift: real-world queries always arrive in an online manner, rendering it impractical to access the entire query set beforehand for customization approaches; ii) Diverse Shift: even with domain customization, the CMR models struggle to satisfy queries from diverse users or scenarios, leaving an urgent need to accommodate diverse queries. In this paper, we observe that QS would not only undermine the well-structured common space inherited from the source model, but also steer the model toward forgetting the indispensable general knowledge for CMR. Inspired by the observations, we propose a novel method for achieving online and harmonious adaptation against QS, dubbed Robust adaptation with quEry ShifT (REST). To deal with online shift, REST first refines the retrieval results to formulate the query predictions and accordingly designs a QS-robust objective function on these predictions to preserve the well-established common space in an online manner. As for tackling the more challenging diverse shift, REST employs a gradient decoupling module to dexterously manipulate the gradients during the adaptation process, thus preventing the CMR model from forgetting the general knowledge. Extensive experiments on 20 benchmarks across three CMR tasks verify the effectiveness of our method against QS.

Semantic-Consistent Bidirectional Contrastive Hashing for Noisy Multi-Label Cross-Modal Retrieval

Nov 11, 2025

Abstract: Cross-modal hashing (CMH) facilitates efficient retrieval across different modalities (e.g., image and text) by encoding data into compact binary representations. While recent methods have achieved remarkable performance, they often rely heavily on fully annotated datasets, which are costly and labor-intensive to obtain. In real-world scenarios, particularly in multi-label datasets, label noise is prevalent and severely degrades retrieval performance. Moreover, existing CMH approaches typically overlook the partial semantic overlaps inherent in multi-label data, limiting their robustness and generalization. To tackle these challenges, we propose a novel framework named Semantic-Consistent Bidirectional Contrastive Hashing (SCBCH). The framework comprises two complementary modules: (1) Cross-modal Semantic-Consistent Classification (CSCC), which leverages cross-modal semantic consistency to estimate sample reliability and reduce the impact of noisy labels; (2) Bidirectional Soft Contrastive Hashing (BSCH), which dynamically generates soft contrastive sample pairs based on multi-label semantic overlap, enabling adaptive contrastive learning between semantically similar and dissimilar samples across modalities. Extensive experiments on four widely-used cross-modal retrieval benchmarks validate the effectiveness and robustness of our method, consistently outperforming state-of-the-art approaches under noisy multi-label conditions.
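As a rough illustration of what a "soft" pair based on multi-label overlap could look like, the sketch below scores an image-text pair by the Jaccard overlap of their multi-hot label vectors instead of a hard similar/dissimilar flag. The abstract does not state which overlap measure SCBCH uses, so the choice of Jaccard and the name `label_overlap` are assumptions.

```python
def label_overlap(labels_a, labels_b):
    """Jaccard overlap between two multi-hot label vectors, in [0, 1].

    Assumed measure for illustration: shared labels divided by the
    labels present in either sample.
    """
    shared = sum(1 for a, b in zip(labels_a, labels_b) if a and b)
    present = sum(1 for a, b in zip(labels_a, labels_b) if a or b)
    return shared / present if present else 0.0


# Toy multi-hot labels over four categories.
image_labels = [1, 1, 0, 0]
text_labels = [1, 0, 1, 0]
soft_target = label_overlap(image_labels, text_labels)
```

Here the two samples share one of three active labels, so the pair gets a soft target of one third. A binary scheme would have to force it to either 0 or 1.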

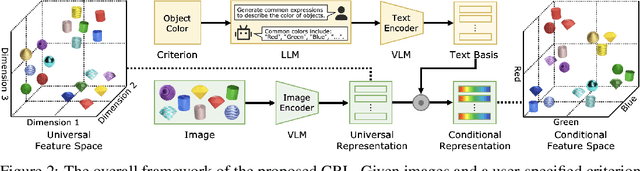

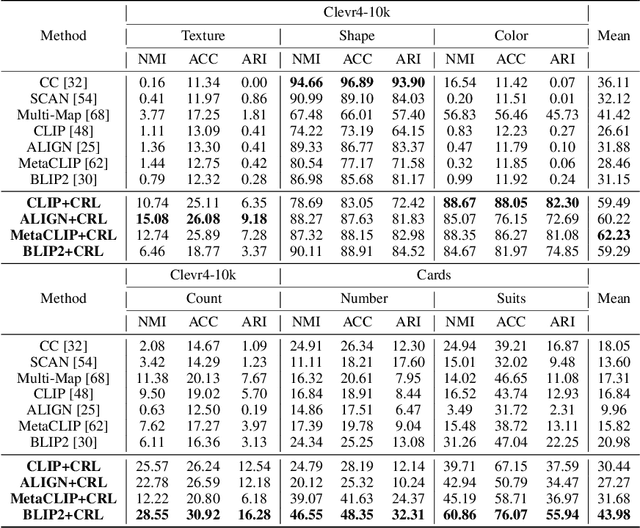

Conditional Representation Learning for Customized Tasks

Oct 06, 2025

Abstract: Conventional representation learning methods learn a universal representation that primarily captures dominant semantics, which may not always align with customized downstream tasks. For instance, in animal habitat analysis, researchers prioritize scene-related features, whereas universal embeddings emphasize categorical semantics, leading to suboptimal results. As a solution, existing approaches resort to supervised fine-tuning, which, however, incurs high computational and annotation costs. In this paper, we propose Conditional Representation Learning (CRL), aiming to extract representations tailored to arbitrary user-specified criteria. Specifically, we reveal that the semantics of a space are determined by its basis, thereby enabling a set of descriptive words to approximate the basis for a customized feature space. Building upon this insight, given a user-specified criterion, CRL first employs a large language model (LLM) to generate descriptive texts to construct the semantic basis, then projects the image representation into this conditional feature space leveraging a vision-language model (VLM). The conditional representation better captures semantics for the specific criterion and can be utilized for multiple customized tasks. Extensive experiments on classification and retrieval tasks demonstrate the superiority and generality of the proposed CRL. The code is available at https://github.com/XLearning-SCU/2025-NeurIPS-CRL.
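The projection step can be pictured with a small sketch: given an image embedding and the embeddings of a few descriptive texts (the "basis"), one plausible conditional representation is the vector of cosine similarities between the image and each text. This is an assumption made for illustration; the abstract does not specify the exact projection, and the toy vectors below stand in for real VLM embeddings. The released repository linked above is the place to check the actual method.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def conditional_representation(image_embedding, text_basis):
    """Describe the image by its similarity to each basis text (hypothetical)."""
    return [cosine(image_embedding, text) for text in text_basis]


# Toy stand-ins for embeddings of two descriptive texts and one image.
text_basis = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
image_embedding = [3.0, 0.0, 4.0]
representation = conditional_representation(image_embedding, text_basis)
```

Each output coordinate corresponds to one descriptive text, so changing the criterion (and therefore the texts) changes what the representation measures without retraining the encoder.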

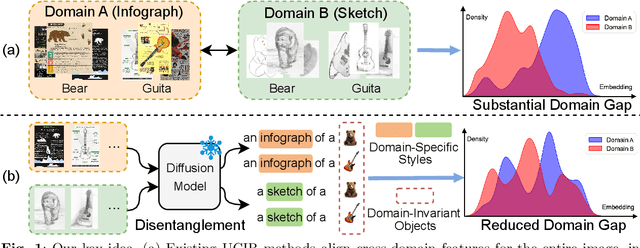

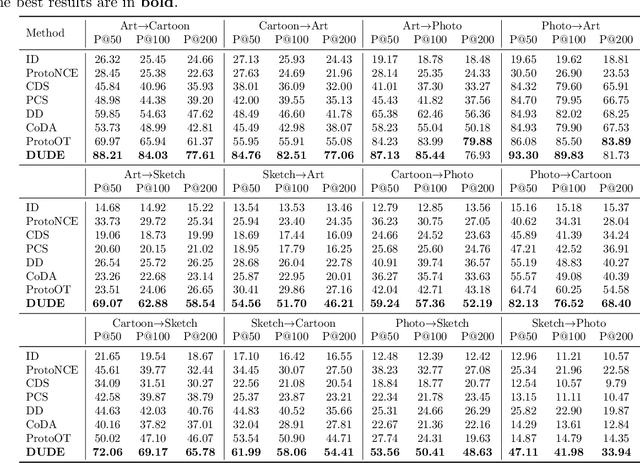

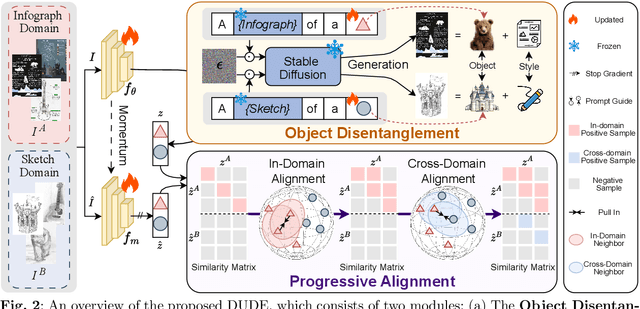

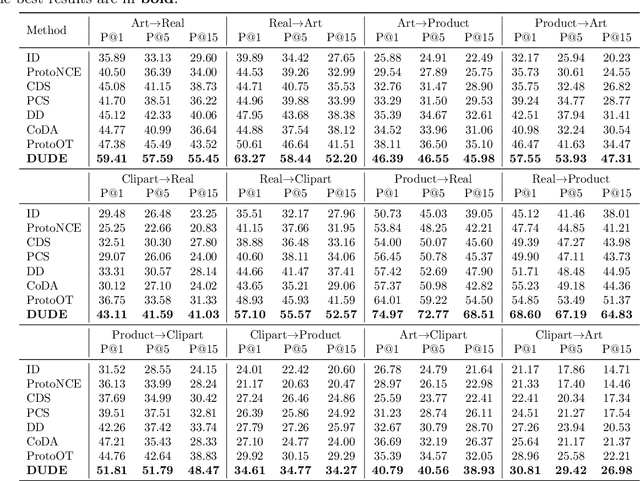

DUDE: Diffusion-Based Unsupervised Cross-Domain Image Retrieval

Sep 04, 2025

Abstract: Unsupervised cross-domain image retrieval (UCIR) aims to retrieve images of the same category across diverse domains without relying on annotations. Existing UCIR methods, which align cross-domain features for the entire image, often struggle with the domain gap, as the object features critical for retrieval are frequently entangled with domain-specific styles. To address this challenge, we propose DUDE, a novel UCIR method building upon feature disentanglement. In brief, DUDE leverages a text-to-image generative model to disentangle object features from domain-specific styles, thus facilitating semantic image retrieval. To further achieve reliable alignment of the disentangled object features, DUDE aligns mutual neighbors from within domains to across domains in a progressive manner. Extensive experiments demonstrate that DUDE achieves state-of-the-art performance across three benchmark datasets over 13 domains. The code will be released.
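The notion of "mutual neighbors" can be sketched as follows: two samples form a mutual pair when each is the other's nearest neighbor. The code below finds such pairs between two small feature sets using squared Euclidean distance. It illustrates the general mutual-nearest-neighbor idea only; DUDE's distance measure, neighborhood size, and progressive within-to-across-domain schedule are not given in the abstract, and all names here are hypothetical.

```python
def nearest(query, candidates):
    """Index of the candidate closest to `query` (squared Euclidean distance)."""
    return min(
        range(len(candidates)),
        key=lambda k: sum((q - c) ** 2 for q, c in zip(query, candidates[k])),
    )


def mutual_neighbors(features_a, features_b):
    """Pairs (i, j) where a[i] and b[j] are each other's nearest neighbor."""
    a_to_b = [nearest(f, features_b) for f in features_a]
    b_to_a = [nearest(f, features_a) for f in features_b]
    return [(i, j) for i, j in enumerate(a_to_b) if b_to_a[j] == i]


# Toy 2D features from two domains.
features_a = [[0.0, 0.0], [5.0, 5.0], [0.4, 0.0]]
features_b = [[0.1, 0.0], [5.0, 4.8]]
pairs = mutual_neighbors(features_a, features_b)
```

The third sample in `features_a` picks the first sample in `features_b` as its neighbor, but that choice is not reciprocated, so it is left out. Requiring agreement in both directions is what makes mutual pairs a more conservative alignment signal than one-way nearest neighbors.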
