Wentao Zhao

Graph-Loc: Robust Graph-Based LiDAR Pose Tracking with Compact Structural Map Priors under Low Observability and Occlusion

Feb 09, 2026

Abstract:Map-based LiDAR pose tracking is essential for long-term autonomous operation, where onboard map priors need to be compact for scalable storage and fast retrieval, while online observations are often partial, repetitive, and heavily occluded. We propose Graph-Loc, a graph-based localization framework that tracks the platform pose against compact structural map priors represented as a lightweight point-line graph. Such priors can be constructed from heterogeneous sources commonly available in practice, including polygon outlines vectorized from occupancy/grid maps and CAD/model/floor-plan layouts. For each incoming LiDAR scan, Graph-Loc extracts sparse point and line primitives to form an observation graph, retrieves a pose-conditioned visible subgraph via LiDAR ray simulation, and performs scan-to-map association through unbalanced optimal transport with a local graph-context regularizer. The unbalanced formulation relaxes mass conservation, improving robustness to missing, spurious, and fragmented structures under occlusion. To enhance stability in low-observability segments, we estimate information anisotropy from the refinement normal matrix and defer updates along weakly constrained directions until sufficient constraints reappear. Experiments on public benchmarks, controlled stress tests, and real-world deployments demonstrate accurate and stable tracking with KB-level priors from heterogeneous map sources, including under geometric degeneracy, sustained occlusion, and gradual scene changes.
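The idea of deferring updates along weakly constrained directions can be illustrated with a minimal sketch. It is not the Graph-Loc implementation: it assumes a planar translation-only update, a 2x2 symmetric normal matrix `H`, and a hard eigenvalue threshold `min_eig`. The function name `gate_update` and all parameters are hypothetical.

```python
import math

def gate_update(H, delta, min_eig):
    """Keep only the part of `delta` that lies along well-constrained
    eigen-directions of the 2x2 symmetric normal matrix H.

    Assumption (not from the abstract): a direction counts as weakly
    constrained when its eigenvalue is below `min_eig`.
    """
    (a, b), (_, c) = H
    # Closed-form eigen-decomposition of [[a, b], [b, c]].
    mean = 0.5 * (a + c)
    radius = math.hypot(0.5 * (a - c), b)
    theta = 0.5 * math.atan2(2.0 * b, a - c)
    strong = (math.cos(theta), math.sin(theta))   # eigenvalue mean + radius
    weak = (-math.sin(theta), math.cos(theta))    # eigenvalue mean - radius

    gated = [0.0, 0.0]
    for lam, v in ((mean + radius, strong), (mean - radius, weak)):
        if lam >= min_eig:
            # Project the update onto this direction and keep it.
            coeff = delta[0] * v[0] + delta[1] * v[1]
            gated[0] += coeff * v[0]
            gated[1] += coeff * v[1]
    return gated

# Corridor-like case: constraints almost only along y, so x is poorly observed.
H_corridor = [[0.01, 0.0], [0.0, 50.0]]
step = gate_update(H_corridor, [0.3, -0.1], min_eig=1.0)
```

In the corridor-like case the x component of the update is suppressed while the y component passes through. With a well-conditioned `H` the update is returned unchanged.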

Learning Query-Specific Rubrics from Human Preferences for DeepResearch Report Generation

Feb 03, 2026

Abstract:Nowadays, training and evaluating DeepResearch-generated reports remain challenging due to the lack of verifiable reward signals. Accordingly, rubric-based evaluation has become a common practice. However, existing approaches either rely on coarse, pre-defined rubrics that lack sufficient granularity, or depend on manually constructed query-specific rubrics that are costly and difficult to scale. In this paper, we propose a pipeline to train human-preference-aligned query-specific rubric generators tailored for DeepResearch report generation. We first construct a dataset of DeepResearch-style queries annotated with human preferences over paired reports, and train rubric generators via reinforcement learning with a hybrid reward combining human preference supervision and LLM-based rubric evaluation. To better handle long-horizon reasoning, we further introduce a Multi-agent Markov-state (MaMs) workflow for report generation. We empirically show that our proposed rubric generators deliver more discriminative and better human-aligned supervision than existing rubric design strategies. Moreover, when integrated into the MaMs training framework, DeepResearch systems equipped with our rubric generators consistently outperform all open-source baselines on the DeepResearch Bench and achieve performance comparable to that of leading closed-source models.

Guided Diffusion-based Generation of Adversarial Objects for Real-World Monocular Depth Estimation Attacks

Dec 30, 2025

Abstract:Monocular Depth Estimation (MDE) serves as a core perception module in autonomous driving systems, but it remains highly susceptible to adversarial attacks. Errors in depth estimation may propagate through downstream decision making and influence overall traffic safety. Existing physical attacks primarily rely on texture-based patches, which impose strict placement constraints and exhibit limited realism, thereby reducing their effectiveness in complex driving environments. To overcome these limitations, this work introduces a training-free generative adversarial attack framework that generates naturalistic, scene-consistent adversarial objects via a diffusion-based conditional generation process. The framework incorporates a Salient Region Selection module that identifies regions most influential to MDE and a Jacobian Vector Product Guidance mechanism that steers adversarial gradients toward update directions supported by the pre-trained diffusion model. This formulation enables the generation of physically plausible adversarial objects capable of inducing substantial adversarial depth shifts. Extensive digital and physical experiments demonstrate that our method significantly outperforms existing attacks in effectiveness, stealthiness, and physical deployability, underscoring its strong practical implications for autonomous driving safety assessment.

RaCalNet: Radar Calibration Network for Sparse-Supervised Metric Depth Estimation

Jun 18, 2025

Abstract:Dense metric depth estimation using millimeter-wave radar typically requires dense LiDAR supervision, generated via multi-frame projection and interpolation, to guide the learning of accurate depth from sparse radar measurements and RGB images. However, this paradigm is both costly and data-intensive. To address this, we propose RaCalNet, a novel framework that eliminates the need for dense supervision by using sparse LiDAR to supervise the learning of refined radar measurements, resulting in a supervision density of merely around 1% compared to dense-supervised methods. Unlike previous approaches that associate radar points with broad image regions and rely heavily on dense labels, RaCalNet first recalibrates and refines sparse radar points to construct accurate depth priors. These priors then serve as reliable anchors to guide monocular depth prediction, enabling metric-scale estimation without resorting to dense supervision. This design improves structural consistency and preserves fine details. Despite relying solely on sparse supervision, RaCalNet surpasses state-of-the-art dense-supervised methods, producing depth maps with clear object contours and fine-grained textures. Extensive experiments on the ZJU-4DRadarCam dataset and real-world deployment scenarios demonstrate its effectiveness, reducing RMSE by 35.30% and 34.89%, respectively.

UNO: Unified Self-Supervised Monocular Odometry for Platform-Agnostic Deployment

Jun 08, 2025

Abstract:This work presents UNO, a unified monocular visual odometry framework that enables robust and adaptable pose estimation across diverse environments, platforms, and motion patterns. Unlike traditional methods that rely on deployment-specific tuning or predefined motion priors, our approach generalizes effectively across a wide range of real-world scenarios, including autonomous vehicles, aerial drones, mobile robots, and handheld devices. To this end, we introduce a Mixture-of-Experts strategy for local state estimation, with several specialized decoders that each handle a distinct class of ego-motion patterns. Moreover, we introduce a fully differentiable Gumbel-Softmax module that constructs a robust inter-frame correlation graph, selects the optimal expert decoder, and prunes erroneous estimates. These cues are then fed into a unified back-end that combines pre-trained, scale-independent depth priors with a lightweight bundle adjustment to enforce geometric consistency. We extensively evaluate our method on three major benchmark datasets: KITTI (outdoor/autonomous driving), EuRoC-MAV (indoor/aerial drones), and TUM-RGBD (indoor/handheld), demonstrating state-of-the-art performance.
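As a rough illustration of how a Gumbel-Softmax relaxation can select among experts, the following sketch draws a relaxed one-hot weight vector from a list of expert scores. It uses plain Python rather than an autodiff framework, so it shows only the sampling step and not the gradient flow. The function name, the `temperature` default, and the example logits are assumptions, not details from UNO.

```python
import math
import random

def gumbel_softmax(logits, temperature=1.0, rng=random):
    """Return a relaxed one-hot sample (weights summing to 1) over the
    categories scored by `logits`. Lower temperatures give sharper samples."""
    noisy = []
    for logit in logits:
        # Clamp the uniform draw away from 0 and 1 so both logs are finite.
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
        gumbel = -math.log(-math.log(u))  # Gumbel(0, 1) noise
        noisy.append((logit + gumbel) / temperature)
    # Numerically stable softmax over the perturbed scores.
    peak = max(noisy)
    exps = [math.exp(x - peak) for x in noisy]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three expert decoders.
weights = gumbel_softmax([1.2, 0.3, -0.5], temperature=0.5, rng=random.Random(0))
chosen_expert = max(range(len(weights)), key=lambda i: weights[i])
```

In a differentiable setting the soft `weights` would typically blend or gate the expert outputs during training, with the hard `chosen_expert` index used at inference time.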

SN-LiDAR: Semantic Neural Fields for Novel Space-time View LiDAR Synthesis

Apr 11, 2025

Abstract:Recent research has begun exploring novel view synthesis (NVS) for LiDAR point clouds, aiming to generate realistic LiDAR scans from unseen viewpoints. However, most existing approaches do not reconstruct semantic labels, which are crucial for many downstream applications such as autonomous driving and robotic perception. Unlike images, which benefit from powerful segmentation models, LiDAR point clouds lack such large-scale pre-trained models, making semantic annotation time-consuming and labor-intensive. To address this challenge, we propose SN-LiDAR, a method that jointly performs accurate semantic segmentation, high-quality geometric reconstruction, and realistic LiDAR synthesis. Specifically, we employ a coarse-to-fine planar-grid feature representation to extract global features from multi-frame point clouds and leverage a CNN-based encoder to extract local semantic features from the current frame point cloud. Extensive experiments on SemanticKITTI and KITTI-360 demonstrate the superiority of SN-LiDAR in both semantic and geometric reconstruction, effectively handling dynamic objects and large-scale scenes. Code will be available at https://github.com/dtc111111/SN-Lidar.

Vision-Language Model Predictive Control for Manipulation Planning and Trajectory Generation

Apr 07, 2025

Abstract:Model Predictive Control (MPC) is a widely adopted control paradigm that leverages predictive models to estimate future system states and optimize control inputs accordingly. However, while MPC excels in planning and control, it lacks the capability for environmental perception, leading to failures in complex and unstructured scenarios. To address this limitation, we introduce Vision-Language Model Predictive Control (VLMPC), a robotic manipulation planning framework that integrates the perception power of vision-language models (VLMs) with MPC. VLMPC utilizes a conditional action sampling module that takes a goal image or language instruction as input and leverages VLM to generate candidate action sequences. These candidates are fed into a video prediction model that simulates future frames based on the actions. In addition, we propose an enhanced variant, Traj-VLMPC, which replaces video prediction with motion trajectory generation to reduce computational complexity while maintaining accuracy. Traj-VLMPC estimates motion dynamics conditioned on the candidate actions, offering a more efficient alternative for long-horizon tasks and real-time applications. Both VLMPC and Traj-VLMPC select the optimal action sequence using a VLM-based hierarchical cost function that captures both pixel-level and knowledge-level consistency between the current observation and the task input. We demonstrate that both approaches outperform existing state-of-the-art methods on public benchmarks and achieve excellent performance in various real-world robotic manipulation tasks. Code is available at https://github.com/PPjmchen/VLMPC.
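The select-by-cost step shared by sampling-based MPC schemes can be sketched as follows. This is a toy stand-in, not the VLMPC pipeline: `predict` replaces the video or trajectory predictor with a 1-D integrator, `cost` replaces the VLM-based hierarchical cost with a distance to the goal, and uniform random sampling replaces the VLM-conditioned action sampler. All names are hypothetical.

```python
import random

def select_action_sequence(state, goal, candidates, predict, cost):
    """Return the candidate action sequence whose predicted outcome
    has the lowest cost with respect to the goal."""
    return min(candidates, key=lambda actions: cost(predict(state, actions), goal))

def predict(state, actions):
    # Toy predictor (assumption): sum 1-D displacements onto the state.
    return state + sum(actions)

def cost(predicted_state, goal):
    # Toy cost (assumption): absolute distance between prediction and goal.
    return abs(predicted_state - goal)

# Sample 64 candidate sequences of 5 actions each, then keep the best one.
rng = random.Random(0)
candidates = [[rng.uniform(-1.0, 1.0) for _ in range(5)] for _ in range(64)]
best = select_action_sequence(0.0, 2.0, candidates, predict, cost)
```

In a receding-horizon loop, only the first action of `best` would usually be executed before sampling and scoring are repeated from the new observation.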

SALT: A Flexible Semi-Automatic Labeling Tool for General LiDAR Point Clouds with Cross-Scene Adaptability and 4D Consistency

Mar 31, 2025

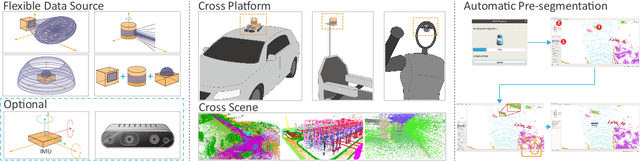

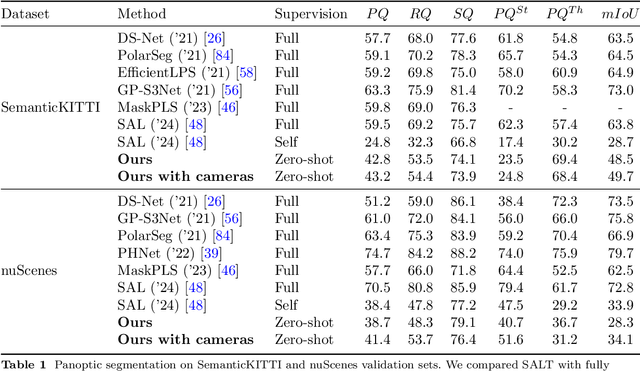

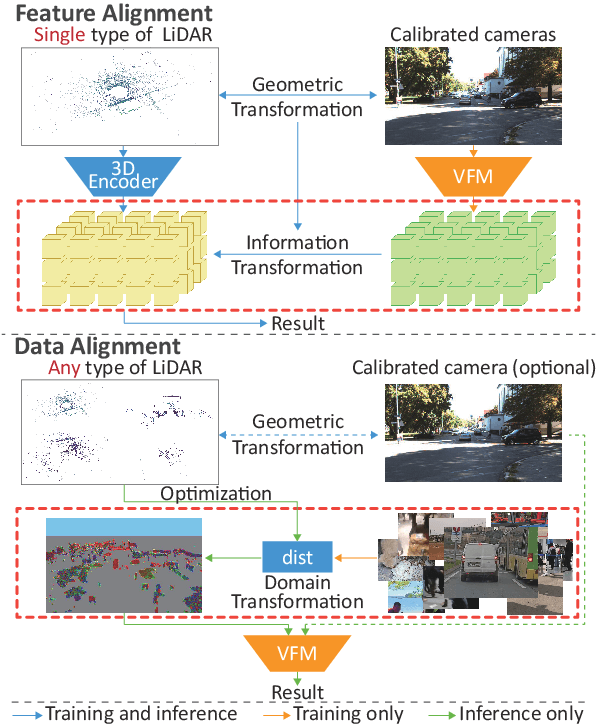

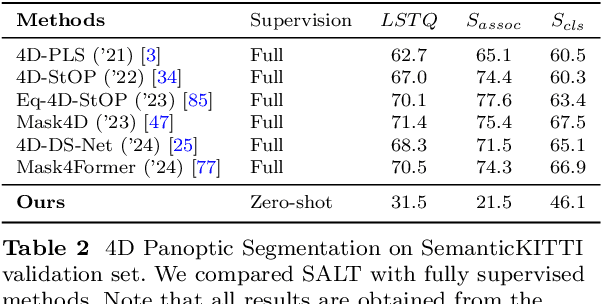

Abstract:We propose a flexible Semi-Automatic Labeling Tool (SALT) for general LiDAR point clouds with cross-scene adaptability and 4D consistency. Unlike recent approaches that rely on camera distillation, SALT operates directly on raw LiDAR data, automatically generating pre-segmentation results. To achieve this, we propose a novel zero-shot learning paradigm, termed data alignment, which transforms LiDAR data into pseudo-images by aligning with the training distribution of vision foundation models. Additionally, we design a 4D-consistent prompting strategy and a 4D non-maximum suppression module to enhance SAM2, ensuring high-quality, temporally consistent pre-segmentation. SALT surpasses the latest zero-shot methods by 18.4% PQ on SemanticKITTI and achieves nearly 40-50% of human annotator performance on our newly collected low-resolution LiDAR data and on combined data from three LiDAR types, significantly boosting annotation efficiency. We anticipate that SALT's open-sourcing will catalyze substantial expansion of current LiDAR datasets and lay the groundwork for the future development of LiDAR foundation models. Code is available at https://github.com/Cavendish518/SALT.

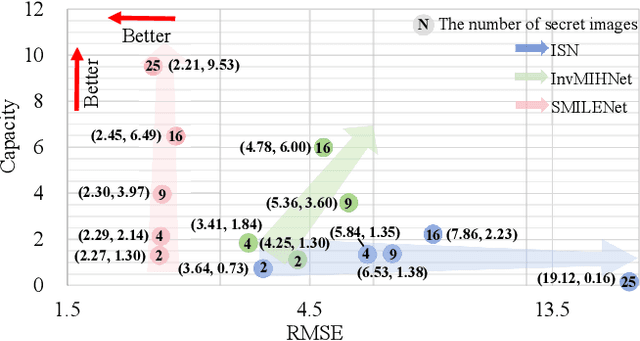

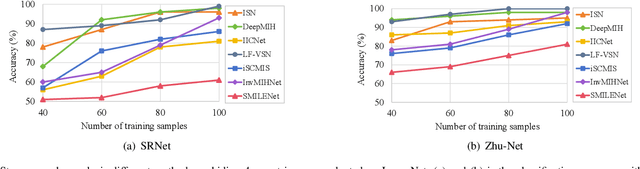

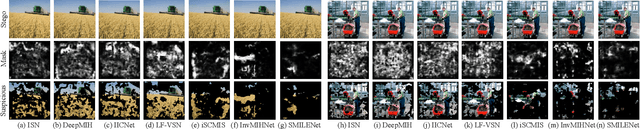

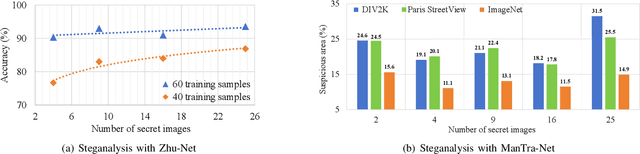

SMILENet: Unleashing Extra-Large Capacity Image Steganography via a Synergistic Mosaic InvertibLE Hiding Network

Mar 07, 2025

Abstract:Existing image steganography methods face fundamental limitations in hiding capacity (typically $1\sim7$ images) due to severe information interference and an uncoordinated capacity-distortion trade-off. We propose SMILENet, a novel synergistic framework that achieves 25-image hiding through three key innovations: (i) A synergistic network architecture that coordinates reversible and non-reversible operations to efficiently exploit information redundancy in both secret and cover images. The reversible Invertible Cover-Driven Mosaic (ICDM) module and Invertible Mosaic Secret Embedding (IMSE) module establish cover-guided mosaic transformations and representation embedding with mathematically guaranteed invertibility for distortion-free embedding. The non-reversible Secret Information Selection (SIS) module and Secret Detail Enhancement (SDE) module implement learnable feature modulation for critical information selection and enhancement. (ii) A unified training strategy that coordinates complementary modules to achieve 3.0x higher capacity than existing methods with superior visual quality. (iii) A new Capacity-Distortion Trade-off metric for evaluating image steganography algorithms that jointly considers hiding capacity and distortion and provides a unified approach for assessing results with different numbers of secret images. Extensive experiments on DIV2K, Paris StreetView and ImageNet1K show that SMILENet outperforms state-of-the-art methods in terms of hiding capacity, recovery quality, and security against steganalysis methods.

SFPNet: Sparse Focal Point Network for Semantic Segmentation on General LiDAR Point Clouds

Jul 16, 2024

Abstract:Although LiDAR semantic segmentation is advancing rapidly, state-of-the-art methods often incorporate specifically designed inductive biases derived from benchmarks originating from mechanical spinning LiDAR. This can limit model generalizability to other kinds of LiDAR technologies and make hyperparameter tuning more complex. To tackle these issues, we propose a generalized framework to accommodate various types of LiDAR prevalent in the market by replacing window-attention with our sparse focal point modulation. Our SFPNet is capable of extracting multi-level contexts and dynamically aggregating them using a gate mechanism. By implementing a channel-wise information query, features that incorporate both local and global contexts are encoded. We also introduce a novel large-scale hybrid-solid LiDAR semantic segmentation dataset for robotic applications. SFPNet demonstrates competitive performance on conventional benchmarks derived from mechanical spinning LiDAR, while achieving state-of-the-art results on a benchmark derived from solid-state LiDAR. Additionally, it outperforms existing methods on our novel dataset sourced from hybrid-solid LiDAR. Code and dataset are available at https://github.com/Cavendish518/SFPNet and https://www.semanticindustry.top.
