Xiaoning Wang

SN-LiDAR: Semantic Neural Fields for Novel Space-time View LiDAR Synthesis

Apr 11, 2025

Abstract:Recent research has begun exploring novel view synthesis (NVS) for LiDAR point clouds, aiming to generate realistic LiDAR scans from unseen viewpoints. However, most existing approaches do not reconstruct semantic labels, which are crucial for many downstream applications such as autonomous driving and robotic perception. Unlike images, which benefit from powerful segmentation models, LiDAR point clouds lack such large-scale pre-trained models, making semantic annotation time-consuming and labor-intensive. To address this challenge, we propose SN-LiDAR, a method that jointly performs accurate semantic segmentation, high-quality geometric reconstruction, and realistic LiDAR synthesis. Specifically, we employ a coarse-to-fine planar-grid feature representation to extract global features from multi-frame point clouds and leverage a CNN-based encoder to extract local semantic features from the current frame point cloud. Extensive experiments on SemanticKITTI and KITTI-360 demonstrate the superiority of SN-LiDAR in both semantic and geometric reconstruction, effectively handling dynamic objects and large-scale scenes. Code will be available at https://github.com/dtc111111/SN-Lidar.

FOKE: A Personalized and Explainable Education Framework Integrating Foundation Models, Knowledge Graphs, and Prompt Engineering

May 06, 2024

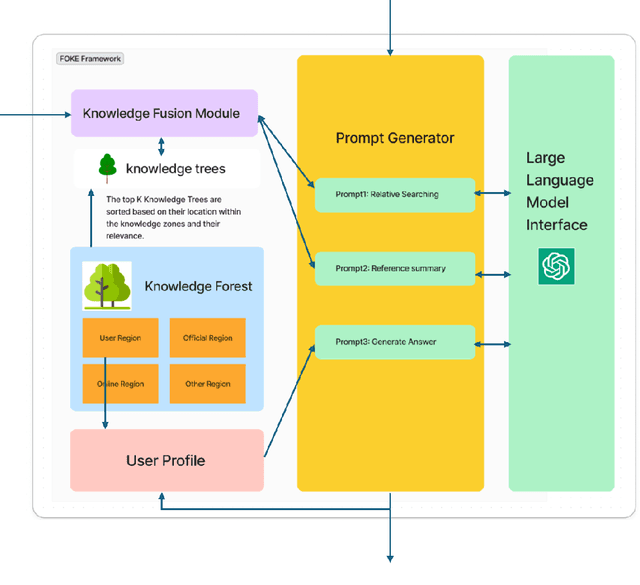

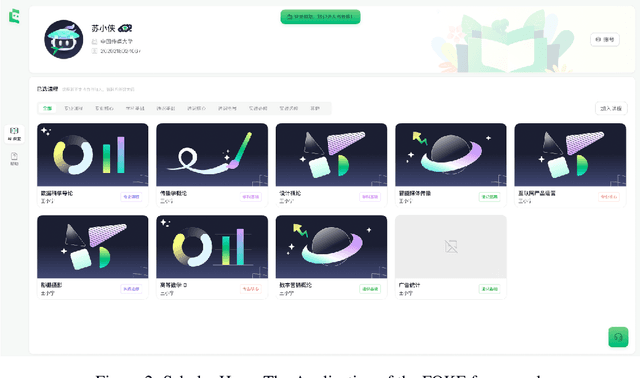

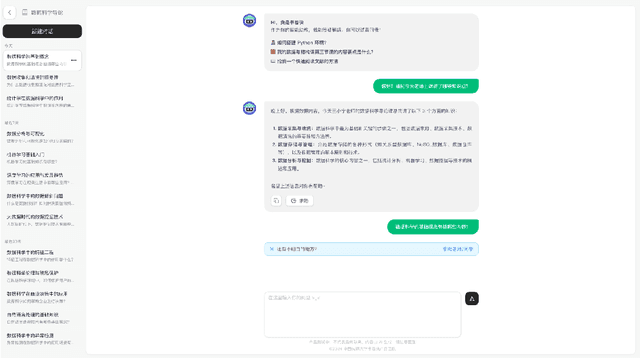

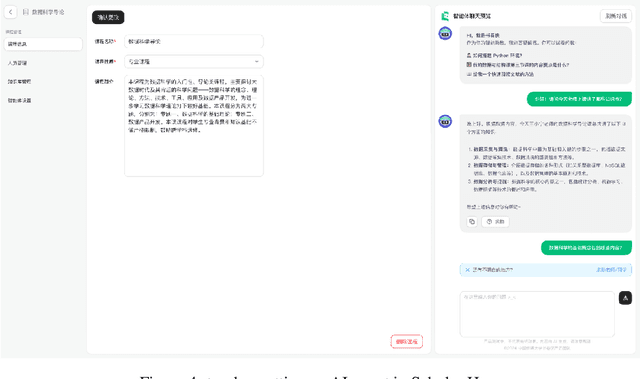

Abstract:Integrating large language models (LLMs) and knowledge graphs (KGs) holds great promise for revolutionizing intelligent education, but challenges remain in achieving personalization, interactivity, and explainability. We propose FOKE, a Forest Of Knowledge and Education framework that synergizes foundation models, knowledge graphs, and prompt engineering to address these challenges. FOKE introduces three key innovations: (1) a hierarchical knowledge forest for structured domain knowledge representation; (2) a multi-dimensional user profiling mechanism for comprehensive learner modeling; and (3) an interactive prompt engineering scheme for generating precise and tailored learning guidance. We showcase FOKE's application in programming education, homework assessment, and learning path planning, demonstrating its effectiveness and practicality. Additionally, we implement Scholar Hero, a real-world instantiation of FOKE. Our research highlights the potential of integrating foundation models, knowledge graphs, and prompt engineering to revolutionize intelligent education practices, ultimately benefiting learners worldwide. FOKE provides a principled and unified approach to harnessing cutting-edge AI technologies for personalized, interactive, and explainable educational services, paving the way for further research and development in this critical direction.

CodeAid: Evaluating a Classroom Deployment of an LLM-based Programming Assistant that Balances Student and Educator Needs

Jan 20, 2024

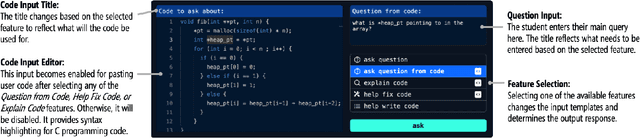

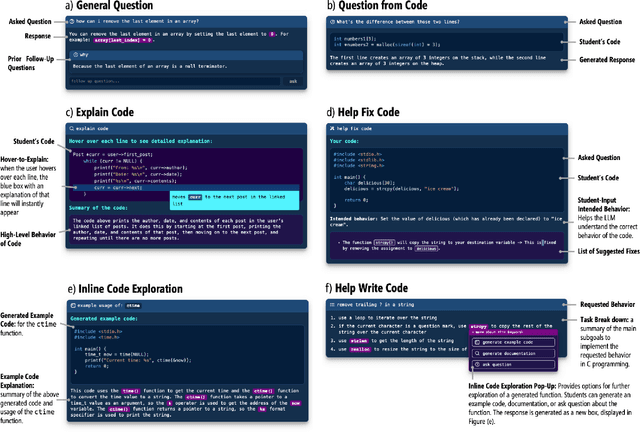

Abstract:Timely, personalized feedback is essential for students learning programming, especially as class sizes expand. LLM-based tools like ChatGPT offer instant support, but reveal direct answers with code, which may hinder deep conceptual engagement. We developed CodeAid, an LLM-based programming assistant delivering helpful, technically correct responses, without revealing code solutions. For example, CodeAid can answer conceptual questions, generate pseudo-code with line-by-line explanations, and annotate students' incorrect code with fix suggestions. We deployed CodeAid in a programming class of 700 students for a 12-week semester. A thematic analysis of 8,000 usages of CodeAid was performed, further enriched by weekly surveys and 22 student interviews. We then interviewed eight programming educators to gain further insights on CodeAid. Findings revealed students primarily used CodeAid for conceptual understanding and debugging, although a minority tried to obtain direct code. Educators appreciated CodeAid's educational approach, and expressed concerns about occasional incorrect feedback and students defaulting to ChatGPT.