Wen Fan

Engineering Mathematics, University of Bristol, affiliated with the Bristol Robotics Lab, United Kingdom

WHU-STree: A Multi-modal Benchmark Dataset for Street Tree Inventory

Sep 16, 2025

Abstract:Street trees are vital to urban livability, providing ecological and social benefits. Establishing a detailed, accurate, and dynamically updated street tree inventory has become essential for optimizing these multifunctional assets within space-constrained urban environments. Given that traditional field surveys are time-consuming and labor-intensive, automated surveys utilizing Mobile Mapping Systems (MMS) offer a more efficient solution. However, existing MMS-acquired tree datasets are limited by small-scale scenes, limited annotations, or a single modality, restricting their utility for comprehensive analysis. To address these limitations, we introduce WHU-STree, a cross-city, richly annotated, and multi-modal urban street tree dataset. Collected across two distinct cities, WHU-STree integrates synchronized point clouds and high-resolution images, encompassing 21,007 annotated tree instances across 50 species and 2 morphological parameters. Leveraging these unique characteristics, WHU-STree concurrently supports over 10 tasks related to street tree inventory. We benchmark representative baselines for two key tasks--tree species classification and individual tree segmentation. Extensive experiments and in-depth analysis demonstrate the significant potential of multi-modal data fusion and underscore cross-domain applicability as a critical prerequisite for practical algorithm deployment. In particular, we identify key challenges and outline potential future work for fully exploiting WHU-STree, encompassing multi-modal fusion, multi-task collaboration, cross-domain generalization, spatial pattern learning, and Multi-modal Large Language Models for street tree asset management. The WHU-STree dataset is accessible at: https://github.com/WHU-USI3DV/WHU-STree.

MagicGripper: A Multimodal Sensor-Integrated Gripper for Contact-Rich Robotic Manipulation

May 30, 2025

Abstract:Contact-rich manipulation in unstructured environments demands precise, multimodal perception to enable robust and adaptive control. Vision-based tactile sensors (VBTSs) have emerged as an effective solution; however, conventional VBTSs often face challenges in achieving compact, multi-modal functionality due to hardware constraints and algorithmic complexity. In this work, we present MagicGripper, a multimodal sensor-integrated gripper designed for contact-rich robotic manipulation. Building on our prior design, MagicTac, we develop a compact variant, mini-MagicTac, which features a three-dimensional, multi-layered grid embedded in a soft elastomer. MagicGripper integrates mini-MagicTac, enabling high-resolution tactile feedback alongside proximity and visual sensing within a compact, gripper-compatible form factor. We conduct a thorough evaluation of mini-MagicTac's performance, demonstrating its capabilities in spatial resolution, contact localization, and force regression. We also assess its robustness across manufacturing variability, mechanical deformation, and sensing performance under real-world conditions. Furthermore, we validate the effectiveness of MagicGripper through three representative robotic tasks: a teleoperated assembly task, a contact-based alignment task, and an autonomous robotic grasping task. Across these experiments, MagicGripper exhibits reliable multimodal perception, accurate force estimation, and high adaptability to challenging manipulation scenarios. Our results highlight the potential of MagicGripper as a practical and versatile tool for embodied intelligence in complex, contact-rich environments.

MM-Retinal V2: Transfer an Elite Knowledge Spark into Fundus Vision-Language Pretraining

Jan 27, 2025

Abstract:Vision-language pretraining (VLP) has been investigated to generalize across diverse downstream tasks for fundus image analysis. Although recent methods showcase promising achievements, they significantly rely on large-scale private image-text data but pay less attention to the pretraining manner, which limits their further advancements. In this work, we introduce MM-Retinal V2, a high-quality image-text paired dataset comprising CFP, FFA, and OCT image modalities. Then, we propose a novel fundus vision-language pretraining model, namely KeepFIT V2, which is pretrained by integrating knowledge from the elite data spark into categorical public datasets. Specifically, a preliminary textual pretraining is adopted to equip the text encoder with primarily ophthalmic textual knowledge. Moreover, a hybrid image-text knowledge injection module is designed for knowledge transfer, which is essentially based on a combination of global semantic concepts from contrastive learning and local appearance details from generative learning. Extensive experiments across zero-shot, few-shot, and linear probing settings highlight the generalization and transferability of KeepFIT V2, delivering performance competitive to state-of-the-art fundus VLP models trained on large-scale private image-text datasets. Our dataset and model are publicly available via https://github.com/lxirich/MM-Retinal.

Design and Benchmarking of A Multi-Modality Sensor for Robotic Manipulation with GAN-Based Cross-Modality Interpretation

Jan 04, 2025

Abstract:In this paper, we present the design and benchmark of an innovative sensor, ViTacTip, which fulfills the demand for advanced multi-modal sensing in a compact design. A notable feature of ViTacTip is its transparent skin, which incorporates a 'see-through-skin' mechanism. This mechanism aims at capturing detailed object features upon contact, significantly improving both vision-based and proximity perception capabilities. In parallel, the biomimetic tips embedded in the sensor's skin are designed to amplify contact details, thus substantially augmenting tactile and derived force perception abilities. To demonstrate the multi-modal capabilities of ViTacTip, we developed a multi-task learning model that enables simultaneous recognition of hardness, material, and textures. To assess the functionality and validate the versatility of ViTacTip, we conducted extensive benchmarking experiments, including object recognition, contact point detection, pose regression, and grating identification. To facilitate seamless switching between various sensing modalities, we employed a Generative Adversarial Network (GAN)-based approach. This method enhances the applicability of the ViTacTip sensor across diverse environments by enabling cross-modality interpretation.

CrystalTac: 3D-Printed Vision-Based Tactile Sensor Family through Rapid Monolithic Manufacturing Technique

Aug 01, 2024

Abstract:Recently, vision-based tactile sensors (VBTSs) have gained popularity in robotics systems. The sensing mechanisms of most VBTSs can be categorised based on the type of tactile features they capture. Each category requires specific structural designs to convert physical contact into optical information. The complex architectures of VBTSs pose challenges for traditional manufacturing techniques in terms of design flexibility, cost-effectiveness, and quality stability. Previous research has shown that monolithic manufacturing using multi-material 3D printing technology can partially address these challenges. This study introduces the CrystalTac family, a series of VBTSs designed with a unique sensing mechanism and fabricated through rapid monolithic manufacturing. Case studies on CrystalTac-type sensors demonstrate their effective performance in tasks involving tactile perception, along with impressive cost-effectiveness and design flexibility. The CrystalTac family aims to highlight the potential of monolithic manufacturing in VBTS development and inspire further research in tactile sensing and manipulation.

Automated Quantification of Hyperreflective Foci in SD-OCT With Diabetic Retinopathy

Jul 31, 2024

Abstract:The presence of hyperreflective foci (HFs) is related to retinal disease progression, and their quantity has proven to be a prognostic factor of visual and anatomical outcome in various retinal diseases. However, the lack of efficient quantitative tools for evaluating HFs has prevented ophthalmologists from assessing the volume of HFs. For this reason, we propose an automated quantification algorithm to segment and quantify HFs in spectral domain optical coherence tomography (SD-OCT). The proposed algorithm consists of two parallel processes, namely region of interest (ROI) generation and HFs estimation. To generate the ROI, we use morphological reconstruction to obtain the reconstructed image, and a histogram is constructed for data distributions and clustering. In parallel, we estimate the HFs by extracting the extremal regions from the connected regions obtained from a component tree. Finally, the outputs of the ROI generation and HFs estimation processes are merged to obtain the segmented HFs. The proposed algorithm was tested on 40 3D SD-OCT volumes from 40 patients diagnosed with non-proliferative diabetic retinopathy (NPDR), proliferative diabetic retinopathy (PDR), and diabetic macular edema (DME). The average Dice similarity coefficient (DSC) and correlation coefficient (r) are 69.70% and 0.99 for NPDR, 70.31% and 0.99 for PDR, and 71.30% and 0.99 for DME, respectively. The proposed algorithm can provide ophthalmologists with good quantitative information on HFs, such as their volume, size, and location.

* IEEE Journal of Biomedical and Health Informatics, Volume: 24, Issue: 4, pp. 1125 - 1136, 2020
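The Dice similarity coefficient reported in the abstract above is the standard overlap measure DSC = 2|A ∩ B| / (|A| + |B|) between a predicted mask A and a reference mask B. The following is a minimal sketch of that formula only, not the authors' evaluation code; the function name `dice_coefficient`, the flat-list mask representation, and the empty-mask convention are assumptions made for illustration.

```python
def dice_coefficient(pred, truth):
    """Dice similarity coefficient between two equal-length binary masks.

    `pred` and `truth` are flat sequences of 0/1 (or False/True) values,
    one entry per voxel. This layout is an illustrative assumption.
    """
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of voxels")
    pred_count = sum(1 for p in pred if p)
    truth_count = sum(1 for t in truth if t)
    overlap = sum(1 for p, t in zip(pred, truth) if p and t)
    total = pred_count + truth_count
    # Assumed convention: two empty masks count as a perfect match.
    if total == 0:
        return 1.0
    return 2.0 * overlap / total


# Toy example: 3 predicted voxels, 3 reference voxels, 2 in common.
predicted = [1, 1, 0, 0, 1, 0]
reference = [1, 0, 0, 1, 1, 0]
score = dice_coefficient(predicted, reference)
print(f"DSC = {score:.4f}")
```

A score of 1 means the two masks coincide exactly and 0 means they share no voxels, so the reported values near 70% indicate substantial but imperfect voxel-level overlap.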

A UAV-Enabled Time-Sensitive Data Collection Scheme for Grassland Monitoring Edge Networks

Jul 30, 2024

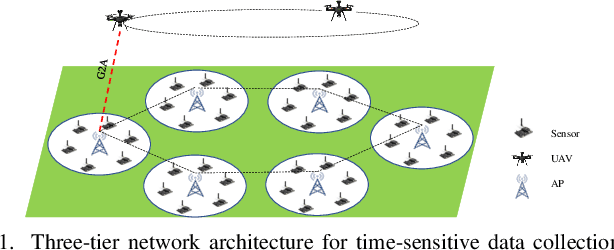

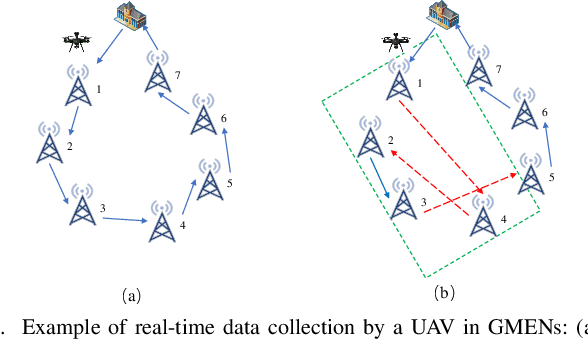

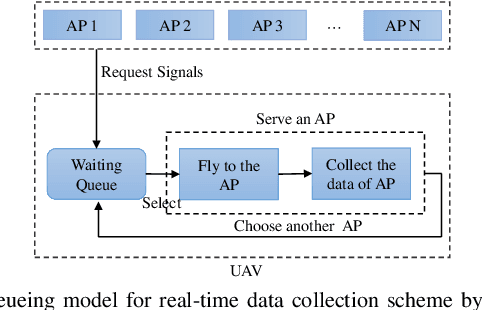

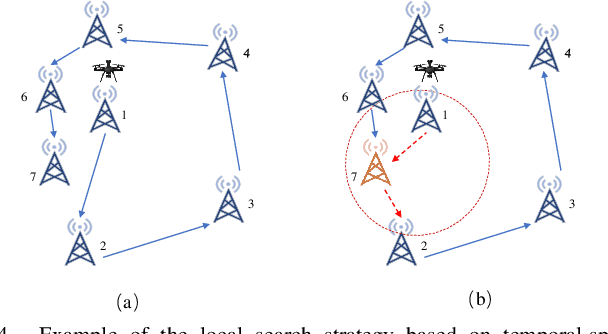

Abstract:Grassland monitoring is essential for the sustainable development of grassland resources. Traditional Internet of Things (IoT) devices generate critical ecological data, making data loss unacceptable, but the harsh environment complicates data collection. Unmanned Aerial Vehicles (UAVs) and mobile edge computing (MEC) offer efficient data collection solutions, enhancing performance on resource-limited mobile devices. In this context, this paper is the first to investigate a UAV-enabled time-sensitive data collection problem (TSDCMP) within grassland monitoring edge networks (GMENs). Unlike many existing data collection scenarios, this problem has three key challenges. First, the total amount of data collected depends significantly on the data collection duration and the arrival time of the UAV at each access point (AP). Second, the volume of data at different APs varies among regions due to differences in monitoring objects and vegetation coverage. Third, the times and locations of service requests from APs are often not adjacent topologically. To address these issues, we formulate the TSDCMP for UAV-enabled GMENs as a mixed-integer programming model in a single trip. This model considers constraints such as the limited energy of the UAV, the coupled routing and time scheduling, and the state of APs and the UAV arrival time. Subsequently, we propose a novel cooperative heuristic algorithm based on temporal-spatial correlations (CHTSC) that integrates a modified dynamic programming (MDP) into an iterated local search to solve the TSDCMP for UAV-enabled GMENs. This approach fully takes into account the temporal and spatial relationships between consecutive service requests from APs. Systematic simulation studies demonstrate that the mixed-integer programming model effectively represents the TSDCMP within UAV-enabled GMENs.

MagicTac: A Novel High-Resolution 3D Multi-layer Grid-Based Tactile Sensor

Feb 02, 2024

Abstract:Accurate robotic control over interactions with the environment is fundamentally grounded in understanding tactile contacts. In this paper, we introduce MagicTac, a novel high-resolution grid-based tactile sensor. This sensor employs a 3D multi-layer grid-based design, inspired by the Magic Cube structure. This structure can help increase the spatial resolution of MagicTac to perceive external interaction contacts. Moreover, the sensor is produced using the multi-material additive manufacturing technique, which simplifies the manufacturing process while ensuring repeatability of production. Compared to traditional vision-based tactile sensors, it offers the advantages of i) high spatial resolution, ii) significant affordability, and iii) fabrication-friendly construction that requires minimal assembly skills. We evaluated the proposed MagicTac in the tactile reconstruction task using the deformation field and optical flow. Results indicated that MagicTac could capture fine textures and is sensitive to dynamic contact information. Through the grid-based multi-material additive manufacturing technique, the affordability and productivity of MagicTac can be enhanced with a minimum manufacturing cost of 4.76 GBP and a minimum manufacturing time of 24.6 minutes.

ViTacTip: Design and Verification of a Novel Biomimetic Physical Vision-Tactile Fusion Sensor

Jan 31, 2024

Abstract:Tactile sensing is significant for robotics since it can obtain physical contact information during manipulation. To capture multimodal contact information within a compact framework, we designed a novel sensor called ViTacTip, which seamlessly integrates both tactile and visual perception capabilities into a single sensor unit. ViTacTip features a transparent skin to capture fine features of objects during contact, known as the see-through-skin mechanism. Meanwhile, the biomimetic tips embedded in ViTacTip can amplify touch motions during tactile perception. For comparative analysis, we also fabricated a ViTac sensor devoid of biomimetic tips, as well as a TacTip sensor with opaque skin. Furthermore, we develop a Generative Adversarial Network (GAN)-based approach for modality switching between different perception modes, effectively alternating the emphasis between vision and tactile perception modes. We conducted a performance evaluation of the proposed sensor across three distinct tasks: i) grating identification, ii) pose regression, and iii) contact localization and force estimation. In the grating identification task, ViTacTip demonstrated an accuracy of 99.72%, surpassing TacTip, which achieved 94.60%. It also exhibited superior performance in both pose and force estimation tasks, with minimum errors of 0.08 mm and 0.03 N, respectively, in contrast to ViTac's 0.12 mm and 0.15 N. Results indicate that ViTacTip outperforms single-modality sensors.

TacFR-Gripper: A Reconfigurable Fin Ray-Based Compliant Robotic Gripper with Tactile Skin for In-Hand Manipulation

Nov 17, 2023

Abstract:This paper introduces the TacFR-Gripper, a reconfigurable Fin Ray-based soft and compliant robotic gripper equipped with tactile skin, which can be used for dexterous in-hand manipulation tasks. This gripper can adaptively grasp objects of diverse shapes and stiffness levels. An array of Force Sensitive Resistor (FSR) sensors is embedded within the robotic finger to serve as the tactile skin, enabling the robot to perceive contact information during manipulation. We provide theoretical analysis for gripper design, including kinematic analysis, workspace analysis, and finite element analysis to identify the relationship between the gripper's load and its deformation. Moreover, we implemented a Graph Neural Network (GNN)-based tactile perception approach to enable reliable grasping without accidental slip or excessive force. Three physical experiments were conducted to quantify the performance of the TacFR-Gripper. These experiments aimed to i) assess the grasp success rate across various everyday objects through different configurations, ii) verify the effectiveness of tactile skin with the GNN algorithm in grasping, iii) evaluate the gripper's in-hand manipulation capabilities for object pose control. The experimental results indicate that the TacFR-Gripper can grasp a wide range of complex-shaped objects with a high success rate and deliver dexterous in-hand manipulation. Additionally, the integration of tactile skin with the GNN algorithm enhances grasp stability by incorporating tactile feedback during manipulations. For more details of this project, please view our website: https://sites.google.com/view/tacfr-gripper/homepage.
