Jialin Lin

Engineering Mathematics, University of Bristol, affiliated with the Bristol Robotics Lab, United Kingdom

Design and Benchmarking of A Multi-Modality Sensor for Robotic Manipulation with GAN-Based Cross-Modality Interpretation

Jan 04, 2025

Abstract: In this paper, we present the design and benchmark of an innovative sensor, ViTacTip, which fulfills the demand for advanced multi-modal sensing in a compact design. A notable feature of ViTacTip is its transparent skin, which incorporates a 'see-through-skin' mechanism. This mechanism aims at capturing detailed object features upon contact, significantly improving both vision-based and proximity perception capabilities. In parallel, the biomimetic tips embedded in the sensor's skin are designed to amplify contact details, thus substantially augmenting tactile and derived force perception abilities. To demonstrate the multi-modal capabilities of ViTacTip, we developed a multi-task learning model that enables simultaneous recognition of hardness, material, and textures. To assess the functionality and validate the versatility of ViTacTip, we conducted extensive benchmarking experiments, including object recognition, contact point detection, pose regression, and grating identification. To facilitate seamless switching between various sensing modalities, we employed a Generative Adversarial Network (GAN)-based approach. This method enhances the applicability of the ViTacTip sensor across diverse environments by enabling cross-modality interpretation.

Hierarchical Semi-Supervised Learning Framework for Surgical Gesture Segmentation and Recognition Based on Multi-Modality Data

Jul 31, 2023

Abstract: Segmenting and recognizing surgical operation trajectories into distinct, meaningful gestures is a critical preliminary step in surgical workflow analysis for robot-assisted surgery. This step is necessary for facilitating learning from demonstrations for autonomous robotic surgery, evaluating surgical skills, and so on. In this work, we develop a hierarchical semi-supervised learning framework for surgical gesture segmentation using multi-modality data (i.e., kinematics and vision data). More specifically, surgical tasks are initially segmented based on distance characteristics-based profiles and variance characteristics-based profiles constructed using kinematics data. Subsequently, a Transformer-based network with a pre-trained 'ResNet-18' backbone is used to extract visual features from the surgical operation videos. By combining the potential segmentation points obtained from both modalities, we can determine the final segmentation points. Furthermore, gesture recognition can be implemented based on supervised learning. The proposed approach has been evaluated using data from the publicly available JIGSAWS database, including Suturing, Needle Passing, and Knot Tying tasks. The results reveal an average F1 score of 0.623 for segmentation and an accuracy of 0.856 for recognition.
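
The abstract does not say how the candidate segmentation points from the two modalities are combined. As a purely illustrative sketch, one simple rule would be to keep a kinematics-based candidate only when a vision-based candidate falls within a few frames of it. The function name `fuse_candidates`, the tolerance `tol`, and the midpoint rule are all assumptions made for this example, not the paper's method.

```python
def fuse_candidates(kin_points, vis_points, tol=10):
    """Hypothetical fusion rule (not taken from the paper).

    Keep a kinematic candidate frame only if its nearest visual candidate
    lies within `tol` frames, and report the integer midpoint of the pair.
    """
    fused = []
    for k in sorted(kin_points):
        # Nearest visual candidate to this kinematic candidate, if any exist.
        nearest = min(vis_points, key=lambda v: abs(v - k), default=None)
        if nearest is not None and abs(nearest - k) <= tol:
            fused.append((k + nearest) // 2)
    # Drop duplicates that arise when two candidates map to the same midpoint.
    return sorted(set(fused))


if __name__ == "__main__":
    # Frame indices below are made-up illustration data.
    print(fuse_candidates([100, 250, 400], [104, 390, 600], tol=10))
```

With the made-up frame indices above, the candidates at 100 and 400 are confirmed by nearby visual candidates, while 250 is discarded because no visual candidate lies within the tolerance.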

IoHRT: An Open-Source Unified Framework Towards the Internet of Humans and Robotic Things with Cloud Computing for Home-Care Applications

Mar 07, 2023

Abstract: The accelerating aging population has led to an increasing demand for domestic robotics to ease caregivers' burden. The integration of Internet of Things (IoT), robotics, and human-robot interaction (HRI) technologies is essential for home-care applications. Although the concept of the Internet of Robotic Things (IoRT) has been utilized in various fields, most existing IoRT frameworks lack ergonomic HRI interfaces and are limited to specific tasks. This paper presents an open-source unified Internet of Humans and Robotic Things (IoHRT) framework with cloud computing, which combines personalized HRI interfaces with intelligent robotics and IoT techniques. This proposed open-source framework demonstrates characteristics of high security, compatibility, and modularity, allowing unlimited user access. Two case studies were conducted to evaluate the proposed framework's functionalities and its effectiveness in home-care scenarios. User feedback collected via questionnaires indicates the IoHRT framework's high potential for home-care applications.

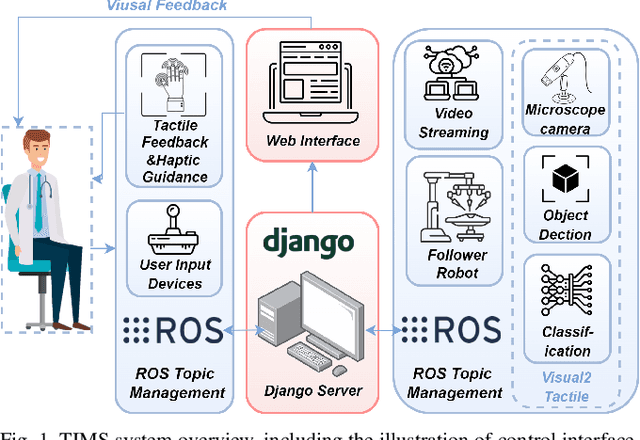

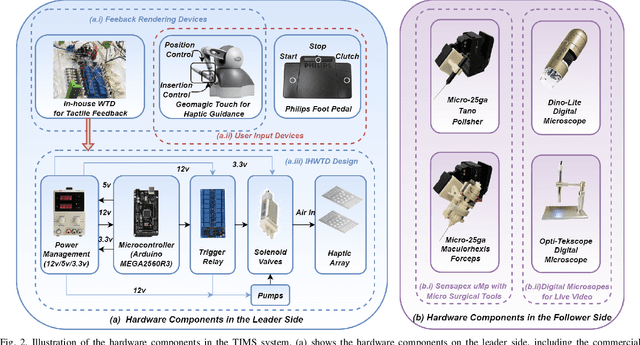

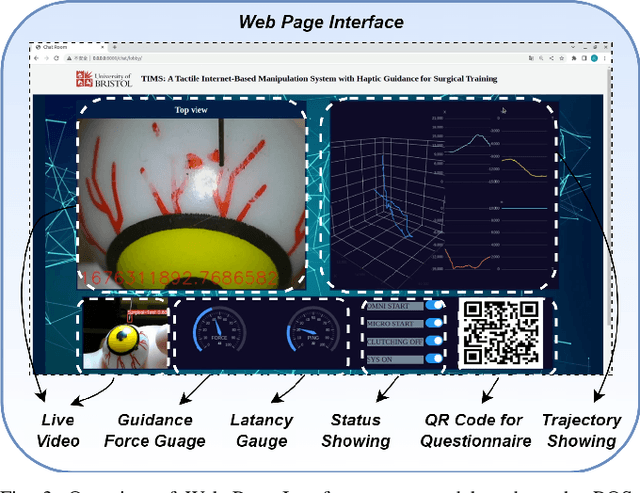

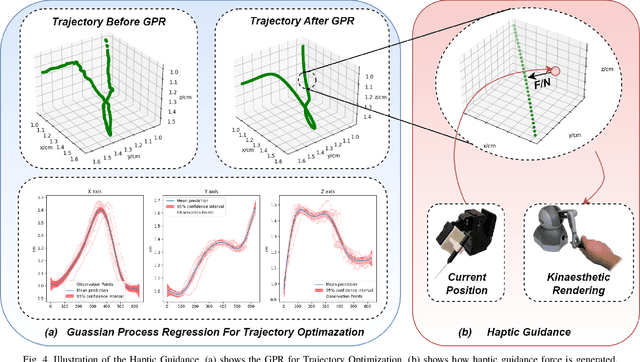

TIMS: A Tactile Internet-Based Micromanipulation System with Haptic Guidance for Surgical Training

Mar 07, 2023

Abstract: Microsurgery involves the dexterous manipulation of delicate tissue or fragile structures such as small blood vessels, nerves, etc., under a microscope. To address the limitation of imprecise manipulation of human hands, robotic systems have been developed to assist surgeons in performing complex microsurgical tasks with greater precision and safety. However, the steep learning curve for robot-assisted microsurgery (RAMS) and the shortage of well-trained surgeons pose significant challenges to the widespread adoption of RAMS. Therefore, the development of a versatile training system for RAMS is necessary, which can bring tangible benefits to both surgeons and patients. In this paper, we present a Tactile Internet-Based Micromanipulation System (TIMS) based on a ROS-Django web-based architecture for microsurgical training. This system can provide tactile feedback to operators via a wearable tactile display (WTD), while real-time data is transmitted through the internet via a ROS-Django framework. In addition, TIMS integrates haptic guidance to 'guide' the trainees to follow a desired trajectory provided by expert surgeons. Learning from demonstration based on Gaussian Process Regression (GPR) was used to generate the desired trajectory. User studies were also conducted to verify the effectiveness of our proposed TIMS, comparing users' performance with and without tactile feedback and/or haptic guidance.
