Tao Cheng

Vision-Language Reasoning for Geolocalization: A Reinforcement Learning Approach

Jan 05, 2026

Abstract:Recent advances in vision-language models have opened up new possibilities for reasoning-driven image geolocalization. However, existing approaches often rely on synthetic reasoning annotations or external image retrieval, which can limit interpretability and generalizability. In this paper, we present Geo-R, a retrieval-free framework that uncovers structured reasoning paths from existing ground-truth coordinates and optimizes geolocation accuracy via reinforcement learning. We propose the Chain of Region, a rule-based hierarchical reasoning paradigm that generates precise, interpretable supervision by mapping GPS coordinates to geographic entities (e.g., country, province, city) without relying on model-generated or synthetic labels. Building on this, we introduce a lightweight reinforcement learning strategy with coordinate-aligned rewards based on Haversine distance, enabling the model to refine predictions through spatially meaningful feedback. Our approach bridges structured geographic reasoning with direct spatial supervision, yielding improved localization accuracy, stronger generalization, and more transparent inference. Experimental results across multiple benchmarks confirm the effectiveness of Geo-R, establishing a new retrieval-free paradigm for scalable and interpretable image geolocalization. To facilitate further research and ensure reproducibility, both the model and code will be made publicly available.
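As a rough illustration of what a coordinate-aligned reward based on Haversine distance could look like, the sketch below computes the great-circle distance between a predicted and a true coordinate and maps it to a value in (0, 1]. The Haversine formula itself is standard; the exponential shaping, the `distance_reward` name, and the `scale_km` parameter are assumptions made for this example and are not taken from Geo-R.

```python
import math

EARTH_RADIUS_KM = 6371.0088  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    # min() guards against rounding pushing sqrt(a) slightly above 1.
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def distance_reward(pred, truth, scale_km=1000.0):
    """Hypothetical reward shaping (an assumption): 1.0 at zero error, decaying with distance."""
    d = haversine_km(pred[0], pred[1], truth[0], truth[1])
    return math.exp(-d / scale_km)
```

A reward of this shape is dense: a prediction in the right country but the wrong city still scores higher than one on the wrong continent, which is the kind of spatially meaningful feedback the abstract describes.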

CLIP the Landscape: Automated Tagging of Crowdsourced Landscape Images

Jun 13, 2025

Abstract:We present a CLIP-based, multi-modal, multi-label classifier for predicting geographical context tags from landscape photos in the Geograph dataset, a crowdsourced image archive spanning the British Isles, including remote regions lacking POIs and street-level imagery. Our approach addresses a Kaggle competition task (https://www.kaggle.com/competitions/predict-geographic-context-from-landscape-photos) based on a subset of Geograph's 8M images, with strict evaluation: exact match accuracy is required across 49 possible tags. We show that combining location and title embeddings with image features improves accuracy over using image embeddings alone. We release a lightweight pipeline (https://github.com/SpaceTimeLab/ClipTheLandscape) that trains on a modest laptop, using pre-trained CLIP image and text embeddings and a simple classification head. Predicted tags can support downstream tasks such as building location embedders for GeoAI applications, enriching spatial understanding in data-sparse regions.
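To make the strict evaluation concrete, here is a minimal sketch of exact match accuracy for multi-label predictions: an image counts as correct only if its predicted tag set equals the true tag set. The function name and the example tags are hypothetical, and the competition's own scoring code may differ in detail.

```python
def exact_match_accuracy(true_tags, pred_tags):
    """Fraction of samples whose predicted tag set equals the true tag set exactly."""
    if len(true_tags) != len(pred_tags):
        raise ValueError("true_tags and pred_tags must have the same length")
    if not true_tags:
        return 0.0
    hits = sum(1 for t, p in zip(true_tags, pred_tags) if set(t) == set(p))
    return hits / len(true_tags)


# Hypothetical tags: the second image gets one extra tag, so it counts as wrong.
truth = [{"Woodland", "Rivers"}, {"Coastal"}]
preds = [{"Rivers", "Woodland"}, {"Coastal", "Uplands"}]
score = exact_match_accuracy(truth, preds)
```

Under this metric a prediction that gets most tags right still scores zero for that image, which is why the task is harder than per-tag accuracy would suggest.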

MSD-LLM: Predicting Ship Detention in Port State Control Inspections with Large Language Model

May 26, 2025

Abstract:Maritime transportation is the backbone of global trade, making ship inspection essential for ensuring maritime safety and environmental protection. Port State Control (PSC), conducted by national ports, enforces compliance with safety regulations, with ship detention being the most severe consequence, impacting both ship schedules and company reputations. Traditional machine learning methods for ship detention prediction are limited by the capacity of representation learning and thus suffer from low accuracy. Meanwhile, autoencoder-based deep learning approaches face challenges due to the severe data imbalance in learning historical PSC detention records. To address these limitations, we propose Maritime Ship Detention with Large Language Models (MSD-LLM), integrating a dual robust subspace recovery (DSR) layer-based autoencoder with a progressive learning pipeline to handle imbalanced data and extract meaningful PSC representations. Then, a large language model groups and ranks features to identify likely detention cases, enabling dynamic thresholding for flexible detention predictions. Extensive evaluations on 31,707 PSC inspection records from the Asia-Pacific region show that MSD-LLM outperforms state-of-the-art methods by more than 12% in Area Under the Curve (AUC) for Singapore ports. Additionally, it demonstrates robustness to real-world challenges, making it adaptable to diverse maritime risk assessment scenarios.

Patho-R1: A Multimodal Reinforcement Learning-Based Pathology Expert Reasoner

May 16, 2025

Abstract:Recent advances in vision language models (VLMs) have enabled broad progress in the general medical field. However, pathology remains a more challenging subdomain, with current pathology-specific VLMs exhibiting limitations in both diagnostic accuracy and reasoning plausibility. Such shortcomings are largely attributable to the nature of current pathology datasets, which are primarily composed of image-description pairs that lack the depth and structured diagnostic paradigms employed by real-world pathologists. In this study, we leverage pathology textbooks and real-world pathology experts to construct high-quality, reasoning-oriented datasets. Building on this, we introduce Patho-R1, a multimodal RL-based pathology Reasoner, trained through a three-stage pipeline: (1) continued pretraining on 3.5 million image-text pairs for knowledge infusion; (2) supervised fine-tuning on 500k high-quality Chain-of-Thought samples to incentivize reasoning; (3) reinforcement learning using Group Relative Policy Optimization and Decoupled Clip and Dynamic sAmpling Policy Optimization strategies for multimodal reasoning quality refinement. To further assess the alignment quality of our dataset, we propose PathoCLIP, trained on the same figure-caption corpus used for continued pretraining. Comprehensive experimental results demonstrate that both PathoCLIP and Patho-R1 achieve robust performance across a wide range of pathology-related tasks, including zero-shot classification, cross-modal retrieval, Visual Question Answering, and Multiple Choice Question. Our project is available at the Patho-R1 repository: https://github.com/Wenchuan-Zhang/Patho-R1.
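The core idea behind Group Relative Policy Optimization, used in stage (3), is that each sampled response is scored relative to the other responses for the same prompt rather than by a learned value function. The sketch below shows only that advantage computation, under the assumption that rewards are normalised by the group mean and population standard deviation; the function name and the `eps` term are illustrative, and this is not Patho-R1's training code.

```python
import statistics


def group_relative_advantages(rewards, eps=1e-8):
    """Normalise each reward against the mean and std of its own sampling group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; some implementations differ
    # eps avoids division by zero when every response receives the same reward.
    return [(r - mean) / (std + eps) for r in rewards]


# Four hypothetical responses to one prompt, scored by a reward function.
advantages = group_relative_advantages([0.0, 1.0, 1.0, 0.0])
```

Responses that beat their group average get a positive advantage and are reinforced; when all responses in a group score the same, every advantage is zero and the group contributes no gradient signal.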

Geolocation with Real Human Gameplay Data: A Large-Scale Dataset and Human-Like Reasoning Framework

Feb 19, 2025

Abstract:Geolocation, the task of identifying an image's location, requires complex reasoning and is crucial for navigation, monitoring, and cultural preservation. However, current methods often produce coarse, imprecise, and non-interpretable localization. A major challenge lies in the quality and scale of existing geolocation datasets. These datasets are typically small-scale and automatically constructed, leading to noisy data and inconsistent task difficulty, with images that either reveal answers too easily or lack sufficient clues for reliable inference. To address these challenges, we introduce a comprehensive geolocation framework with three key components: GeoComp, a large-scale dataset; GeoCoT, a novel reasoning method; and GeoEval, an evaluation metric, collectively designed to address critical challenges and drive advancements in geolocation research. At the core of this framework is GeoComp (Geolocation Competition Dataset), a large-scale dataset collected from a geolocation game platform involving 740K users over two years. It comprises 25 million entries of metadata and 3 million geo-tagged locations spanning much of the globe, with each location annotated thousands to tens of thousands of times by human users. The dataset offers diverse difficulty levels for detailed analysis and highlights key gaps in current models. Building on this dataset, we propose Geographical Chain-of-Thought (GeoCoT), a novel multi-step reasoning framework designed to enhance the reasoning capabilities of Large Vision Models (LVMs) in geolocation tasks. GeoCoT improves performance by integrating contextual and spatial cues through a multi-step process that mimics human geolocation reasoning. Finally, using the GeoEval metric, we demonstrate that GeoCoT significantly boosts geolocation accuracy by up to 25% while enhancing interpretability.

Multimodal Contrastive Learning of Urban Space Representations from POI Data

Nov 09, 2024

Abstract:Existing methods for learning urban space representations from Point-of-Interest (POI) data face several limitations, including issues with geographical delineation, inadequate spatial information modelling, underutilisation of POI semantic attributes, and computational inefficiencies. To address these issues, we propose CaLLiPer (Contrastive Language-Location Pre-training), a novel representation learning model that directly embeds continuous urban spaces into vector representations that can capture the spatial and semantic distribution of the urban environment. This model leverages a multimodal contrastive learning objective, aligning location embeddings with textual POI descriptions, thereby bypassing the need for complex training corpus construction and negative sampling. We validate CaLLiPer's effectiveness by applying it to learning urban space representations in London, UK, where it demonstrates a 5-15% improvement in predictive performance for land use classification and socioeconomic mapping tasks compared to state-of-the-art methods. Visualisations of the learned representations further illustrate our model's advantages in capturing spatial variations in urban semantics with high accuracy and fine resolution. Additionally, CaLLiPer achieves reduced training time, showcasing its efficiency and scalability. This work provides a promising pathway for scalable, semantically rich urban space representation learning that can support the development of geospatial foundation models. The implementation code is available at https://github.com/xlwang233/CaLLiPer.
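A contrastive objective that aligns two sets of embeddings is often written as a symmetric cross-entropy over a similarity matrix, where the i-th location should match the i-th text description and the rest of the batch serves as negatives. The sketch below is a dependency-free illustration of that general form. It assumes a CLIP-style loss with a temperature of 0.07; both are assumptions for this example, and the CaLLiPer repository linked above is the authority on the actual objective.

```python
import math


def _normalize(v):
    """Scale a vector to unit length (left unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def _diagonal_cross_entropy(logits):
    """Mean of -log softmax(row)[i] over rows, i.e. the target for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def symmetric_contrastive_loss(loc_emb, txt_emb, temperature=0.07):
    """Average of location-to-text and text-to-location cross-entropy losses."""
    loc = [_normalize(v) for v in loc_emb]
    txt = [_normalize(v) for v in txt_emb]
    logits = [[sum(a * b for a, b in zip(l, t)) / temperature for t in txt] for l in loc]
    logits_t = [list(col) for col in zip(*logits)]
    return 0.5 * (_diagonal_cross_entropy(logits) + _diagonal_cross_entropy(logits_t))
```

Because the other pairs in the batch act as negatives, no separate negative sampling step is needed in this formulation.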

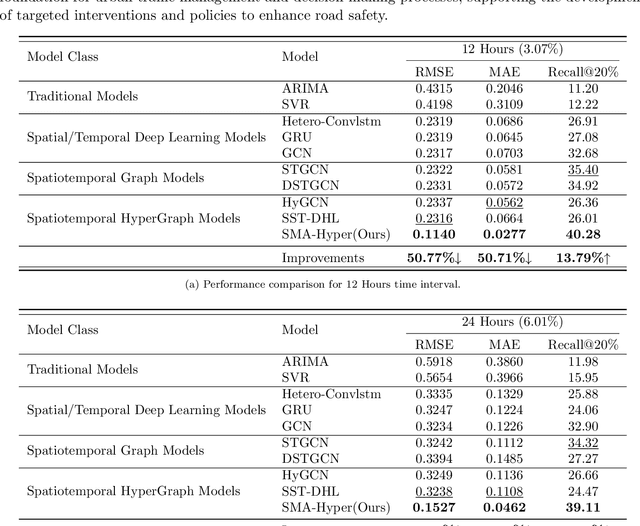

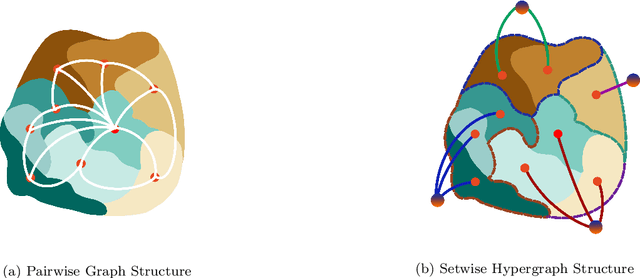

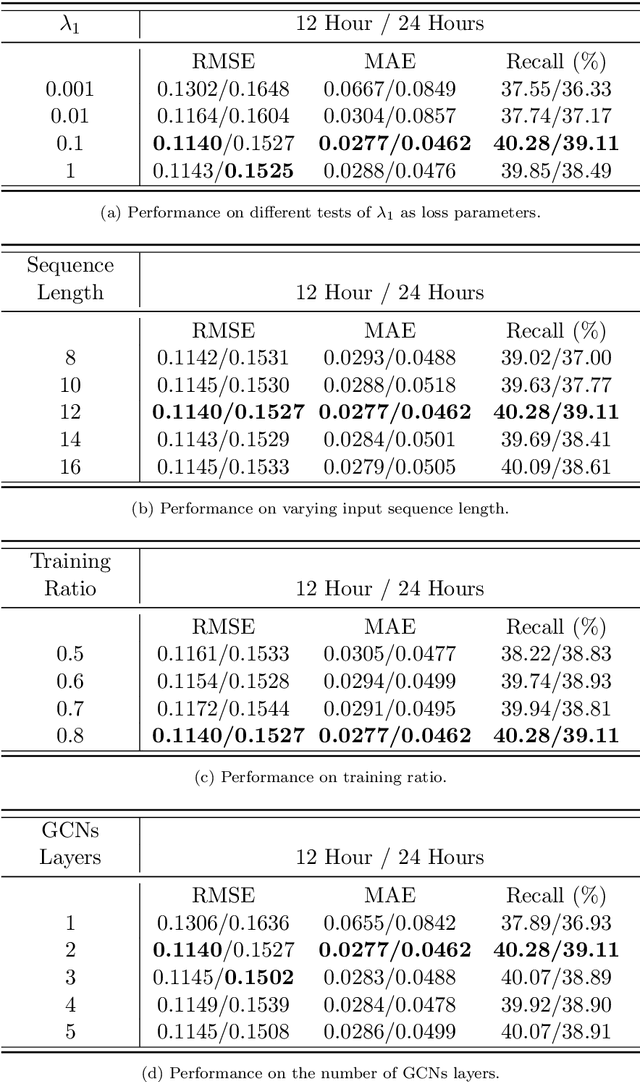

SMA-Hyper: Spatiotemporal Multi-View Fusion Hypergraph Learning for Traffic Accident Prediction

Jul 24, 2024

Abstract:Predicting traffic accidents is key to sustainable city management, which requires effectively addressing the dynamic and complex spatiotemporal characteristics of cities. Current data-driven models often struggle with data sparsity and typically overlook the integration of diverse urban data sources and the high-order dependencies within them. Additionally, they frequently rely on predefined topologies or weights, limiting their adaptability in spatiotemporal predictions. To address these issues, we introduce the Spatiotemporal Multiview Adaptive HyperGraph Learning (SMA-Hyper) model, a dynamic deep learning framework designed for traffic accident prediction. Building on previous research, this innovative model incorporates dual adaptive spatiotemporal graph learning mechanisms that enable high-order cross-regional learning through hypergraphs and dynamic adaptation to evolving urban data. It also utilises contrastive learning to enhance global and local data representations in sparse datasets and employs an advanced attention mechanism to fuse multiple views of accident data and urban functional features, thereby enriching the contextual understanding of risk factors. Extensive testing on the London traffic accident dataset demonstrates that the SMA-Hyper model significantly outperforms baseline models across various temporal horizons and multistep outputs, affirming the effectiveness of its multiview fusion and adaptive learning strategies. The interpretability of the results further underscores its potential to improve urban traffic management and safety by leveraging complex spatiotemporal urban data, offering a scalable framework adaptable to diverse urban environments.

GelSplitter: Tactile Reconstruction from Near Infrared and Visible Images

Sep 15, 2023

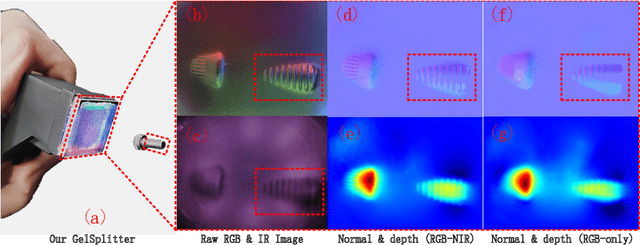

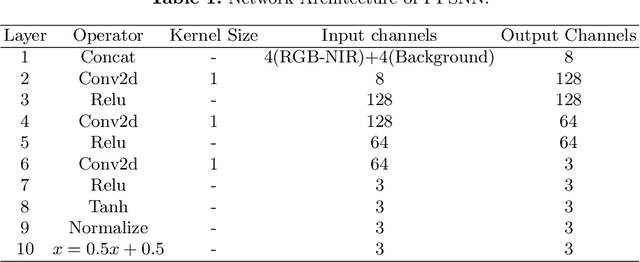

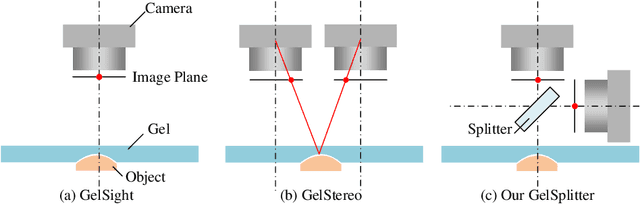

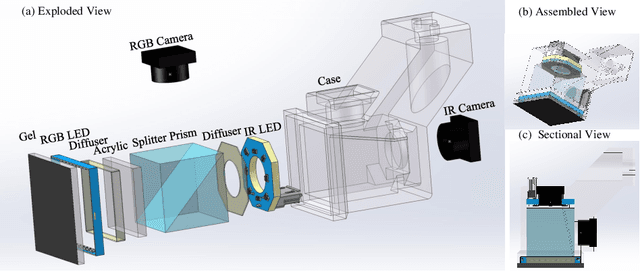

Abstract:The GelSight-like visual tactile (VT) sensor has gained popularity as a high-resolution tactile sensing technology for robots, capable of measuring touch geometry using a single RGB camera. However, the development of multi-modal perception for VT sensors remains a challenge, limited by the mono camera. In this paper, we propose the GelSplitter, a new framework that approaches the multi-modal VT sensor with synchronized multi-modal cameras and resembles a more human-like tactile receptor. Furthermore, we focus on 3D tactile reconstruction and implement a compact sensor structure that maintains a comparable size to state-of-the-art VT sensors, even with the addition of a prism and a near infrared (NIR) camera. We also design a photometric fusion stereo neural network (PFSNN), which estimates surface normals of objects and reconstructs touch geometry from both infrared and visible images. Our results demonstrate that the accuracy of RGB and NIR fusion is higher than that of RGB images alone. Additionally, our GelSplitter framework allows for a flexible configuration of different camera sensor combinations, such as RGB and thermal imaging.

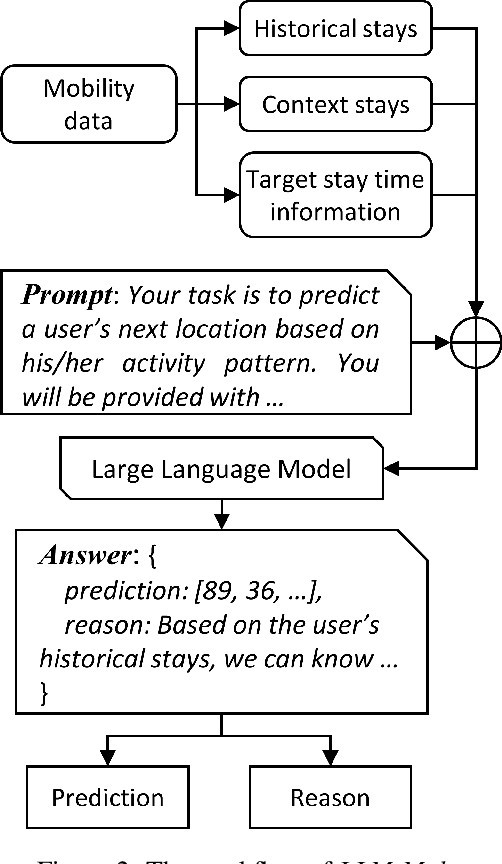

Where Would I Go Next? Large Language Models as Human Mobility Predictors

Aug 29, 2023

Abstract:Accurate human mobility prediction underpins many important applications across a variety of domains, including epidemic modelling, transport planning, and emergency responses. Due to the sparsity of mobility data and the stochastic nature of people's daily activities, achieving precise predictions of people's locations remains a challenge. While recently developed large language models (LLMs) have demonstrated superior performance across numerous language-related tasks, their applicability to human mobility studies remains unexplored. Addressing this gap, this article delves into the potential of LLMs for human mobility prediction tasks. We introduce a novel method, LLM-Mob, which leverages the language understanding and reasoning capabilities of LLMs for analysing human mobility data. We present concepts of historical stays and context stays to capture both long-term and short-term dependencies in human movement and enable time-aware prediction by using time information of the prediction target. Additionally, we design context-inclusive prompts that enable LLMs to generate more accurate predictions. Comprehensive evaluations of our method reveal that LLM-Mob excels in providing accurate and interpretable predictions, highlighting the untapped potential of LLMs in advancing human mobility prediction techniques. We posit that our research marks a significant paradigm shift in human mobility modelling, transitioning from building complex domain-specific models to harnessing general-purpose LLMs that yield accurate predictions through language instructions. The code for this work is available at https://github.com/xlwang233/LLM-Mob.
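To give a feel for how historical stays, context stays, and the target time might be combined into a context-inclusive prompt, here is a small sketch. The prompt wording, the `(time, place_id)` tuple layout, and the `build_mobility_prompt` name are all assumptions for illustration; the prompts actually used by LLM-Mob are in the repository linked above.

```python
def build_mobility_prompt(historical_stays, context_stays, target_time, top_k=3):
    """Assemble a next-place prediction prompt from (time, place_id) stay tuples."""

    def fmt(stays):
        lines = [f"- {time}: place {place_id}" for time, place_id in stays]
        return "\n".join(lines) or "- (none)"

    return (
        "Predict the next place this user will visit.\n"
        f"Historical stays (long-term pattern):\n{fmt(historical_stays)}\n"
        f"Context stays (most recent movements):\n{fmt(context_stays)}\n"
        f"Target time: {target_time}\n"
        f"Return the {top_k} most likely place IDs with a brief explanation."
    )


# Hypothetical stays for one user.
prompt = build_mobility_prompt(
    historical_stays=[("Mon 09:00", 12), ("Tue 09:05", 12), ("Sat 14:00", 40)],
    context_stays=[("Wed 18:30", 7)],
    target_time="Thu 09:00",
)
```

Separating the two kinds of stays lets the model weigh routine behaviour against the most recent movements, and stating the target time is what makes the prediction time-aware.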

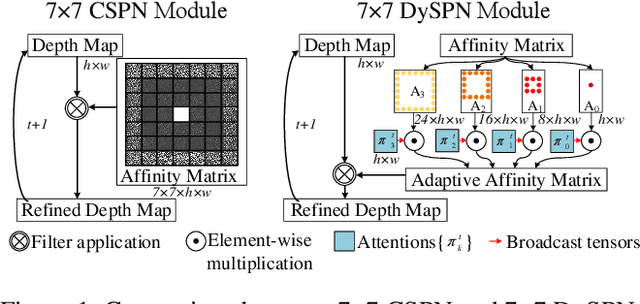

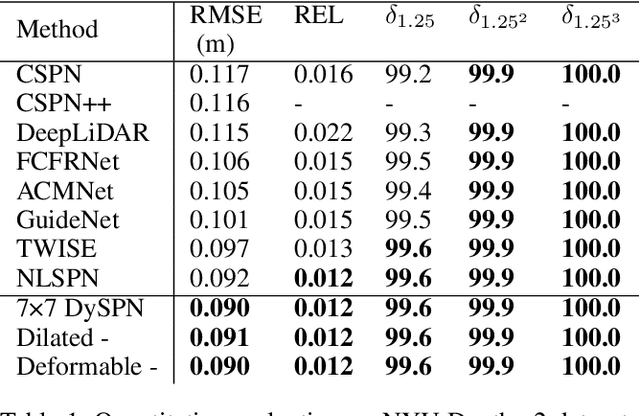

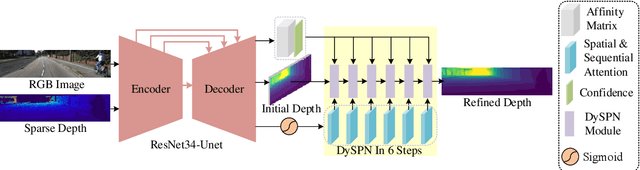

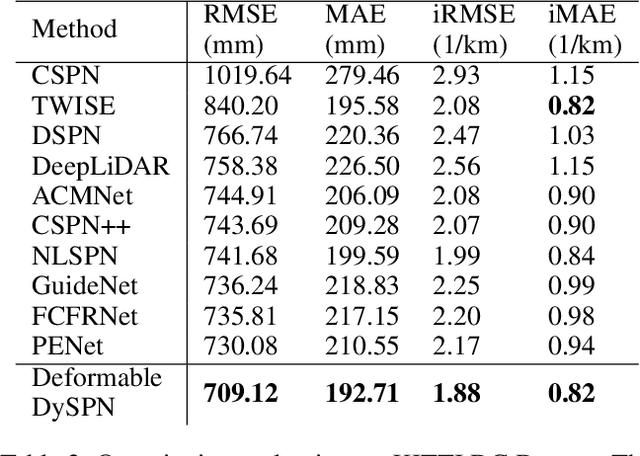

Dynamic Spatial Propagation Network for Depth Completion

Feb 20, 2022

Abstract:Image-guided depth completion aims to generate dense depth maps from sparse depth measurements and corresponding RGB images. Currently, spatial propagation networks (SPNs) are the most popular affinity-based methods in depth completion, but they still suffer from the representation limitation of the fixed affinity and from over-smoothing during iterations. Our solution is to estimate independent affinity matrices in each SPN iteration, but this is over-parameterized and computationally heavy. This paper introduces an efficient model that learns the affinity among neighboring pixels with an attention-based, dynamic approach. Specifically, the Dynamic Spatial Propagation Network (DySPN) we propose makes use of a non-linear propagation model (NLPM). It decouples the neighborhood into parts according to different distances and recursively generates independent attention maps to refine these parts into adaptive affinity matrices. Furthermore, we adopt a diffusion suppression (DS) operation so that the model converges at an early stage to prevent over-smoothing of dense depth. Finally, in order to decrease the computational cost required, we also introduce three variations that reduce the number of neighbors and attention maps needed while still retaining similar accuracy. In practice, our method requires fewer iterations to match the performance of other SPNs and yields better results overall. DySPN outperforms other state-of-the-art (SoTA) methods on the KITTI Depth Completion (DC) evaluation at the time of submission and is able to yield SoTA performance on the NYU Depth v2 dataset as well.
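For readers unfamiliar with spatial propagation, the sketch below shows a single propagation step with a fixed, uniform affinity on a 4-neighbourhood. This is the simple fixed-affinity baseline the abstract contrasts against, not DySPN itself: in a real SPN the affinities are predicted per pixel by a network, and DySPN additionally varies them per iteration via attention. The function name, the uniform `affinity` value, and the use of `None` for missing measurements are assumptions for this example.

```python
def propagate_once(depth, sparse, affinity=0.2):
    """One fixed-affinity propagation step on a 2D grid using the 4-neighbourhood.

    depth:  current dense estimate (list of rows of floats)
    sparse: same shape; a float where a measurement exists, None elsewhere
    """
    h, w = len(depth), len(depth[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if sparse[y][x] is not None:
                out[y][x] = sparse[y][x]  # re-inject valid measurements unchanged
                continue
            candidates = ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
            nbrs = [depth[ny][nx] for ny, nx in candidates if 0 <= ny < h and 0 <= nx < w]
            # Weights sum to one: the centre keeps whatever the neighbours do not take.
            out[y][x] = (1 - affinity * len(nbrs)) * depth[y][x] + affinity * sum(nbrs)
    return out


# A single measurement in the corner spreads into its unmeasured neighbours.
dense = propagate_once([[5.0, 0.0], [0.0, 0.0]], [[5.0, None], [None, None]])
```

Repeating this step with the same weights keeps diffusing values across the map, which is the over-smoothing behaviour that motivates dynamic affinities and the diffusion suppression operation.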
