CyclingNet: Detecting cycling near misses from video streams in complex urban scenes with deep learning


Jan 31, 2021

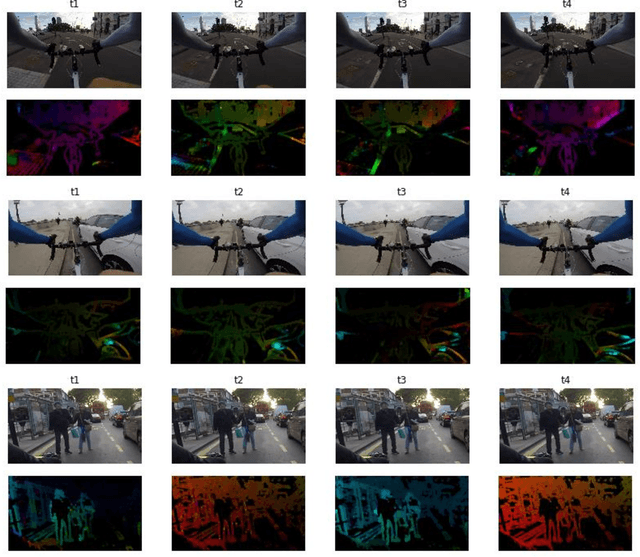

Cycling is a promising sustainable mode for commuting and leisure in cities; however, the fear of being hit or falling limits its wider adoption as a commuting mode. In this paper, we introduce a novel method called CyclingNet for detecting cycling near misses from video streams generated by a front-facing camera mounted on a bike, regardless of the camera position, the conditions of the built environment, or the visual conditions, and without any restrictions on riding behaviour. CyclingNet is a deep computer vision model based on a convolutional structure embedded with self-attention bidirectional long short-term memory (LSTM) blocks that aim to understand near misses from both sequential images of scenes and their optical flows. The model is trained on scenes of both safe rides and near misses. After 42 hours of training on a single GPU, the model shows high accuracy on the training, testing and validation sets. The model is intended to generate information from which significant conclusions can be drawn regarding cycling behaviour in cities and elsewhere, which could help planners and policy-makers better understand the safety measures required when designing infrastructure or drafting policies. As for future work, the model can be pipelined with other state-of-the-art classifiers and object detectors to understand the causality of near misses based on factors related to the interactions of road users and the built and natural environments.
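The architecture described in the abstract can be illustrated with a minimal PyTorch sketch. It is not the authors' implementation: the class name `NearMissSketch`, the layer sizes, the number of attention heads, the fusion by concatenation, and the assumption that each frame has an aligned two-channel optical-flow field are all hypothetical choices made here for illustration. It shows only the general pattern of per-frame convolutional encoding of images and optical flows, followed by a bidirectional LSTM with self-attention and a binary near-miss output.

```python
import torch
import torch.nn as nn


class NearMissSketch(nn.Module):
    """Hypothetical two-stream CNN + self-attention BiLSTM classifier (illustrative only)."""

    def __init__(self, feat_dim=32, hidden=64, heads=4):
        super().__init__()
        # One small CNN encoder per stream: RGB frames (3 channels), optical flow (2 channels).
        self.rgb_enc = self._encoder(3, feat_dim)
        self.flow_enc = self._encoder(2, feat_dim)
        # Bidirectional LSTM over the fused per-frame features.
        self.lstm = nn.LSTM(2 * feat_dim, hidden, batch_first=True, bidirectional=True)
        # Self-attention over the LSTM outputs (query = key = value).
        self.attn = nn.MultiheadAttention(2 * hidden, heads, batch_first=True)
        self.head = nn.Linear(2 * hidden, 1)

    @staticmethod
    def _encoder(in_ch, out_dim):
        return nn.Sequential(
            nn.Conv2d(in_ch, 16, 3, stride=2, padding=1), nn.ReLU(),
            nn.Conv2d(16, out_dim, 3, stride=2, padding=1), nn.ReLU(),
            nn.AdaptiveAvgPool2d(1), nn.Flatten(),
        )

    def forward(self, frames, flows):
        # frames: (B, T, 3, H, W); flows: (B, T, 2, H, W), assumed aligned per frame.
        b, t = frames.shape[:2]
        rgb = self.rgb_enc(frames.flatten(0, 1)).view(b, t, -1)
        flo = self.flow_enc(flows.flatten(0, 1)).view(b, t, -1)
        seq, _ = self.lstm(torch.cat([rgb, flo], dim=-1))
        ctx, _ = self.attn(seq, seq, seq)
        # Average over time and map to one logit per clip (near miss vs. safe ride).
        return self.head(ctx.mean(dim=1)).squeeze(-1)


if __name__ == "__main__":
    model = NearMissSketch()
    frames = torch.randn(2, 5, 3, 32, 32)  # two clips of five random frames
    flows = torch.randn(2, 5, 2, 32, 32)
    print(torch.sigmoid(model(frames, flows)))  # untrained near-miss probabilities
```

Concatenating the two streams before the recurrent block is only one possible fusion strategy; the abstract does not say how the image and optical-flow branches are combined.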
