Shreyas Vasanawala

Scale-Agnostic Super-Resolution in MRI using Feature-Based Coordinate Networks

Oct 18, 2022

Abstract: We propose using a coordinate network decoder for the task of super-resolution in MRI. The continuous signal representation of coordinate networks enables this approach to be scale-agnostic, i.e., one can train over a continuous range of scales and subsequently query at arbitrary resolutions. Due to the difficulty of performing super-resolution on inherently noisy data, we analyze network behavior under multiple denoising strategies. Lastly, we compare this method to a standard convolutional decoder using both quantitative metrics and a radiologist study implemented in Voxel, our newly developed tool for web-based evaluation of medical images.
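The scale-agnostic querying described in this abstract can be illustrated with a minimal NumPy sketch. It is not the authors' architecture: the bilinear feature sampling, the two-layer decoder, the random weights, and every name (`make_coords`, `sample_features`, `decode`) are assumptions chosen only to show how one set of low-resolution features can be decoded on output grids of different sizes.

```python
import numpy as np

def make_coords(height, width):
    """Pixel-centre coordinates in [-1, 1] for an arbitrary output grid."""
    ys = (np.arange(height) + 0.5) / height * 2.0 - 1.0
    xs = (np.arange(width) + 0.5) / width * 2.0 - 1.0
    yy, xx = np.meshgrid(ys, xs, indexing="ij")
    return np.stack([yy, xx], axis=-1).reshape(-1, 2)  # (H*W, 2)

def sample_features(features, coords):
    """Bilinearly interpolate a (C, h, w) feature map at continuous coords."""
    _, h, w = features.shape
    # Map [-1, 1] back to fractional pixel indices of the low-resolution grid.
    y = np.clip((coords[:, 0] + 1.0) * h / 2.0 - 0.5, 0, h - 1)
    x = np.clip((coords[:, 1] + 1.0) * w / 2.0 - 0.5, 0, w - 1)
    y0 = np.floor(y).astype(int)
    x0 = np.floor(x).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    wy, wx = y - y0, x - x0
    top = features[:, y0, x0] * (1 - wx) + features[:, y0, x1] * wx
    bottom = features[:, y1, x0] * (1 - wx) + features[:, y1, x1] * wx
    return (top * (1 - wy) + bottom * wy).T  # (N, C)

def decode(features, coords, w1, b1, w2, b2):
    """Hypothetical decoder: MLP on [interpolated features, coordinate]."""
    z = np.concatenate([sample_features(features, coords), coords], axis=1)
    hidden = np.maximum(z @ w1 + b1, 0.0)  # ReLU
    return hidden @ w2 + b2

rng = np.random.default_rng(0)
features = rng.standard_normal((8, 16, 16))  # stand-in for encoder output
w1, b1 = rng.standard_normal((10, 32)) * 0.1, np.zeros(32)  # untrained weights
w2, b2 = rng.standard_normal((32, 1)) * 0.1, np.zeros(1)

# The same decoder is queried at an integer and a non-integer scale factor.
image_2x = decode(features, make_coords(32, 32), w1, b1, w2, b2).reshape(32, 32)
image_3p5x = decode(features, make_coords(56, 56), w1, b1, w2, b2).reshape(56, 56)
```

Because the output grid enters only through `make_coords`, changing the target resolution needs no change to the decoder weights.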

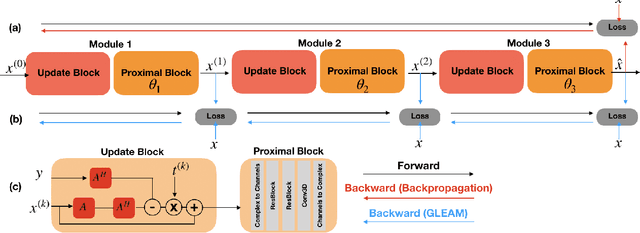

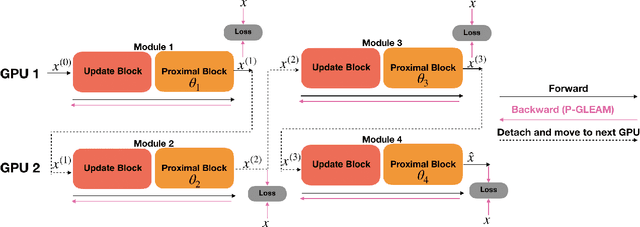

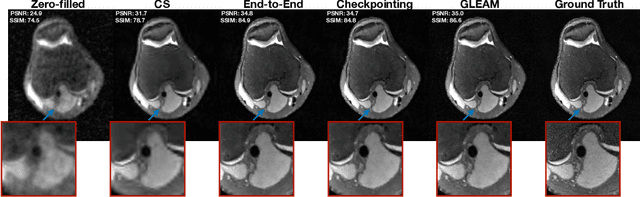

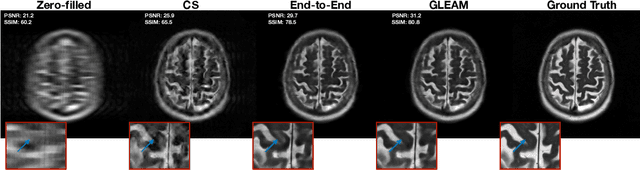

GLEAM: Greedy Learning for Large-Scale Accelerated MRI Reconstruction

Jul 18, 2022

Abstract: Unrolled neural networks have recently achieved state-of-the-art accelerated MRI reconstruction. These networks unroll iterative optimization algorithms by alternating between physics-based consistency and neural-network-based regularization. However, they require several iterations of a large neural network to handle high-dimensional imaging tasks such as 3D MRI. This limits traditional training algorithms based on backpropagation due to prohibitively large memory and compute requirements for calculating gradients and storing intermediate activations. To address this challenge, we propose Greedy LEarning for Accelerated MRI (GLEAM) reconstruction, an efficient training strategy for high-dimensional imaging settings. GLEAM splits the end-to-end network into decoupled network modules. Each module is optimized in a greedy manner with decoupled gradient updates, reducing the memory footprint during training. We show that the decoupled gradient updates can be performed in parallel on multiple graphics processing units (GPUs) to further reduce training time. We present experiments with 2D and 3D datasets including multi-coil knee, brain, and dynamic cardiac cine MRI. We observe that: i) GLEAM generalizes as well as state-of-the-art memory-efficient baselines such as gradient checkpointing and invertible networks with the same memory footprint, but with 1.3x faster training; ii) for the same memory footprint, GLEAM yields 1.1 dB PSNR gain in 2D and 1.8 dB in 3D over end-to-end baselines.
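The alternation between physics-based consistency and regularization mentioned in this abstract can be sketched as follows. This shows only the general unrolled structure, not GLEAM's greedy training. The single-coil Cartesian model, the function names, and the soft-threshold regularizer (a stand-in for a learned network) are all assumptions made for illustration.

```python
import numpy as np

def forward(image, mask):
    """Assumed acquisition model: undersampled orthonormal 2D FFT."""
    return mask * np.fft.fft2(image, norm="ortho")

def adjoint(kspace, mask):
    """Adjoint of `forward`: mask the k-space, then inverse FFT."""
    return np.fft.ifft2(mask * kspace, norm="ortho")

def soft_threshold(x, lam=0.01):
    """Placeholder regularizer; a trained network would take its place."""
    mag = np.abs(x)
    return x * np.maximum(1.0 - lam / np.maximum(mag, 1e-12), 0.0)

def unrolled_recon(kspace, mask, regularizer, num_iters=5, step=1.0):
    """Alternate a data-consistency gradient step with a regularization step."""
    x = adjoint(kspace, mask)  # zero-filled initial estimate
    for _ in range(num_iters):
        grad = adjoint(forward(x, mask) - kspace, mask)  # data-consistency gradient
        x = regularizer(x - step * grad)                 # regularization module
    return x

rng = np.random.default_rng(0)
image = rng.standard_normal((32, 32))
mask = (rng.random((32, 32)) < 0.4).astype(float)  # hypothetical random sampling mask
kspace = forward(image, mask)
recon = unrolled_recon(kspace, mask, soft_threshold)
```

In an end-to-end setting, the activations of every iteration of the loop are kept for backpropagation, which is the memory cost the abstract refers to.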

Scale-Equivariant Unrolled Neural Networks for Data-Efficient Accelerated MRI Reconstruction

Apr 21, 2022

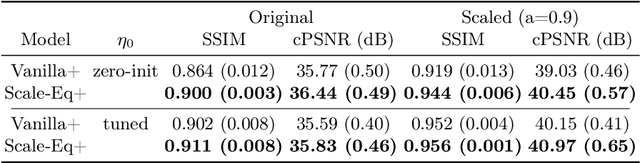

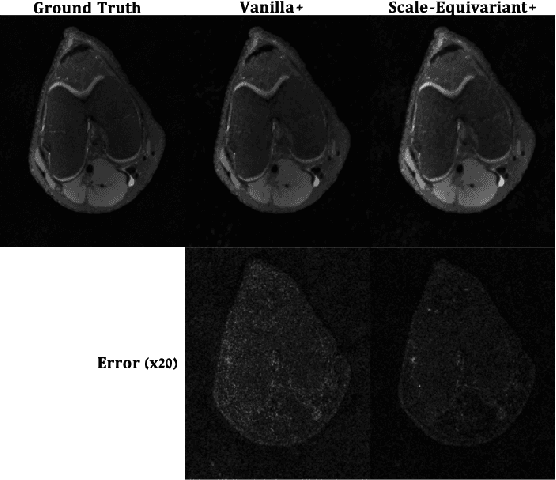

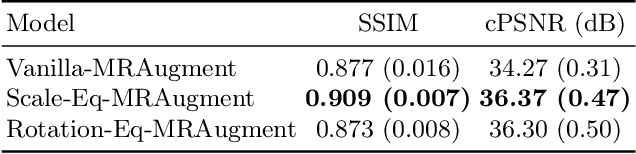

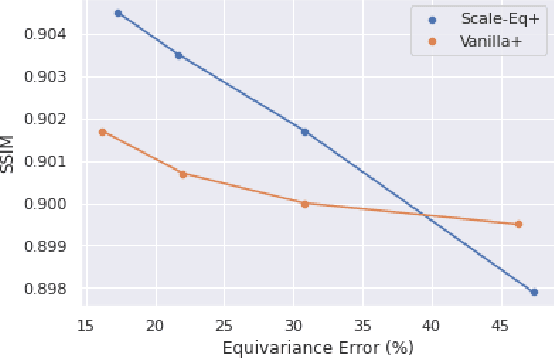

Abstract: Unrolled neural networks have enabled state-of-the-art reconstruction performance and fast inference times for the accelerated magnetic resonance imaging (MRI) reconstruction task. However, these approaches depend on fully-sampled scans as ground-truth data, which is either costly or impossible to acquire in many clinical medical imaging applications; hence, reducing dependence on data is desirable. In this work, we propose modeling the proximal operators of unrolled neural networks with scale-equivariant convolutional neural networks in order to improve the data-efficiency and robustness to drifts in scale of the images that might stem from the variability of patient anatomies or change in field-of-view across different MRI scanners. Our approach demonstrates strong improvements over the state-of-the-art unrolled neural networks under the same memory constraints, both with and without data augmentations, on both in-distribution and out-of-distribution scaled images, without significantly increasing the training or inference time.

VORTEX: Physics-Driven Data Augmentations for Consistency Training for Robust Accelerated MRI Reconstruction

Nov 03, 2021

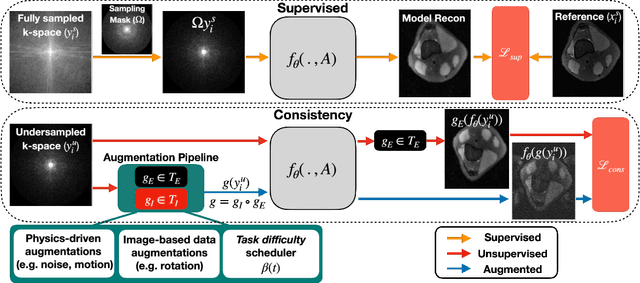

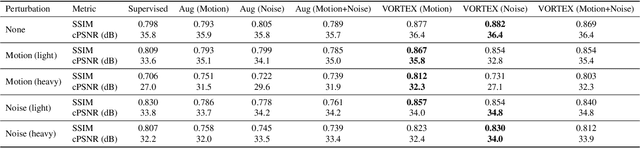

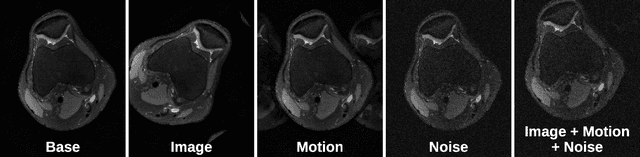

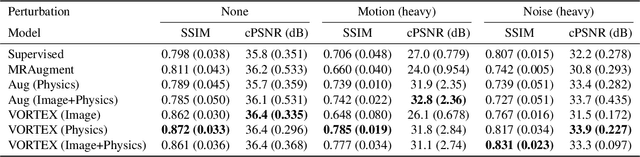

Abstract: Deep neural networks have enabled improved image quality and fast inference times for various inverse problems, including accelerated magnetic resonance imaging (MRI) reconstruction. However, such models require large amounts of fully-sampled ground-truth data, which are difficult to curate, and are sensitive to distribution drifts. In this work, we propose applying physics-driven data augmentations for consistency training that leverage our domain knowledge of the forward MRI data acquisition process and MRI physics for improved data efficiency and robustness to clinically-relevant distribution drifts. Our approach, termed VORTEX, (1) demonstrates strong improvements over supervised baselines with and without augmentation in robustness to signal-to-noise ratio change and motion corruption in data-limited regimes; (2) considerably outperforms state-of-the-art data augmentation techniques that are purely image-based on both in-distribution and out-of-distribution data; and (3) enables composing heterogeneous image-based and physics-driven augmentations.

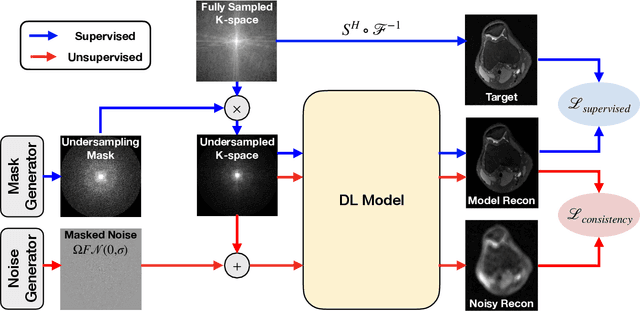

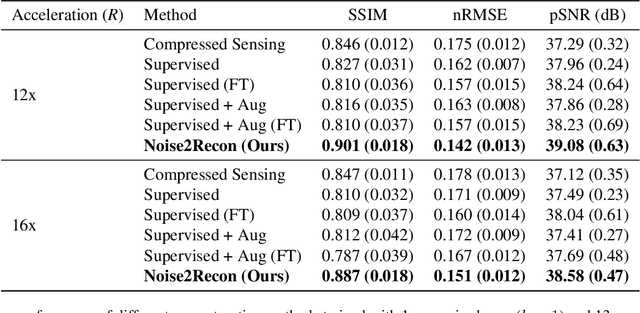

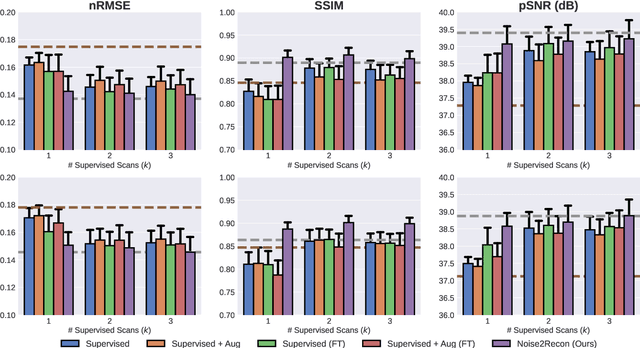

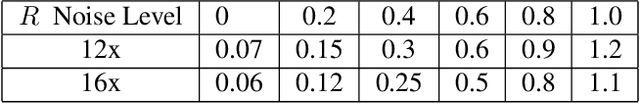

Noise2Recon: A Semi-Supervised Framework for Joint MRI Reconstruction and Denoising

Sep 30, 2021

Abstract: Deep learning (DL) has shown promise for faster, high-quality accelerated MRI reconstruction. However, standard supervised DL methods depend on extensive amounts of fully-sampled ground-truth data and are sensitive to out-of-distribution (OOD) shifts, in particular for low signal-to-noise ratio (SNR) acquisitions. To alleviate this challenge, we propose a semi-supervised, consistency-based framework (termed Noise2Recon) for joint MR reconstruction and denoising. Our method enables the usage of a limited number of fully-sampled and a large number of undersampled-only scans. We compare our method to augmentation-based supervised techniques and fine-tuned denoisers. Results demonstrate that even with minimal ground-truth data, Noise2Recon (1) achieves high performance on in-distribution (low-noise) scans and (2) improves generalizability to OOD, noisy scans.

Spectral Decomposition in Deep Networks for Segmentation of Dynamic Medical Images

Sep 30, 2020

Abstract: Dynamic contrast-enhanced magnetic resonance imaging (DCE-MRI) is a multi-phase technique routinely used in clinical practice. DCE and similar datasets of dynamic medical data tend to contain redundant information in the spatial and temporal components that may not be relevant for detection of the object of interest. This redundancy results in unnecessarily complex computer models with long training times that may also under-perform at test time due to the abundance of noisy heterogeneous data. This work attempts to increase the training efficacy and performance of deep networks by determining redundant information in the spatial and spectral components, and shows that segmentation accuracy can be maintained and potentially improved. Reported experiments include the evaluation of training/testing efficacy on a heterogeneous dataset composed of abdominal images of pediatric DCE patients, showing that drastic data reduction (higher than 80%) can preserve the dynamic information and performance of the segmentation model, while effectively suppressing noise and unwanted portions of the images.

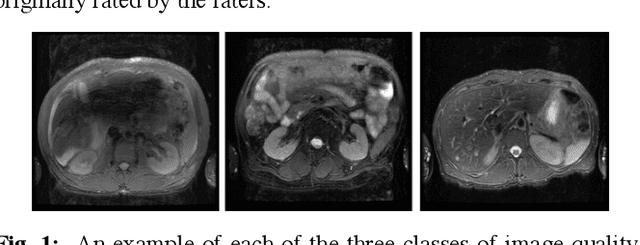

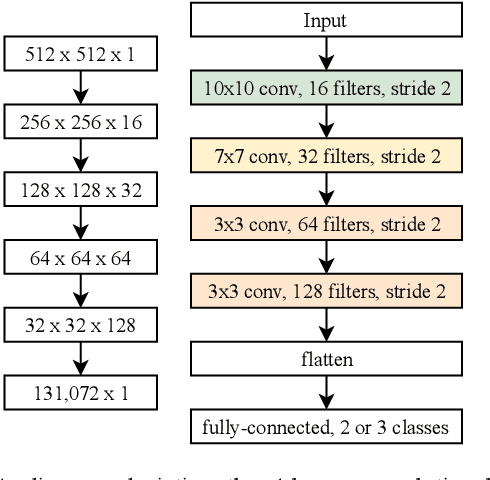

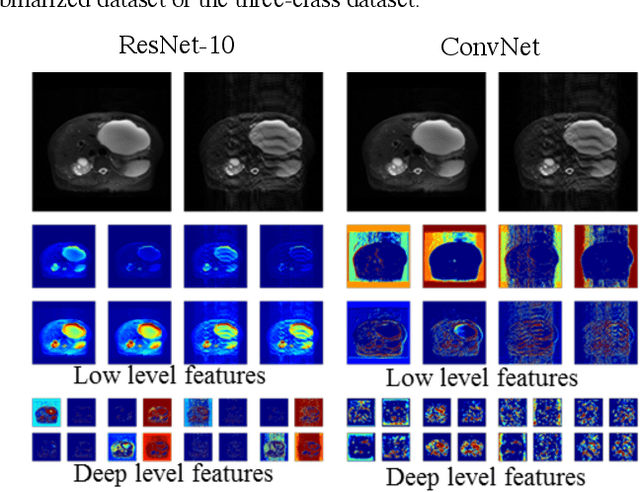

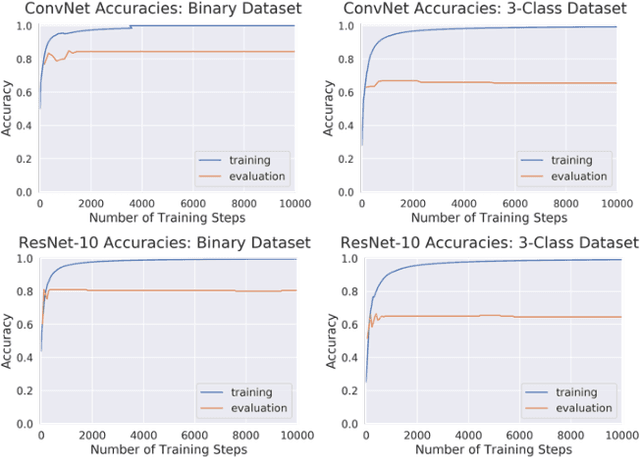

Diagnostic Image Quality Assessment and Classification in Medical Imaging: Opportunities and Challenges

Dec 05, 2019

Abstract: Magnetic Resonance Imaging (MRI) suffers from several artifacts, the most common of which are motion artifacts. These artifacts often yield images that are of non-diagnostic quality. To detect such artifacts, images are prospectively evaluated by experts for their diagnostic quality, which necessitates patient revisits and rescans whenever non-diagnostic quality scans are encountered. This motivates the need to develop an automated framework capable of assessing medical image quality and detecting diagnostic and non-diagnostic images. In this paper, we explore several convolutional neural network-based frameworks for medical image quality assessment and investigate several challenges therein.

Degrees of Freedom Analysis of Unrolled Neural Networks

Jun 10, 2019

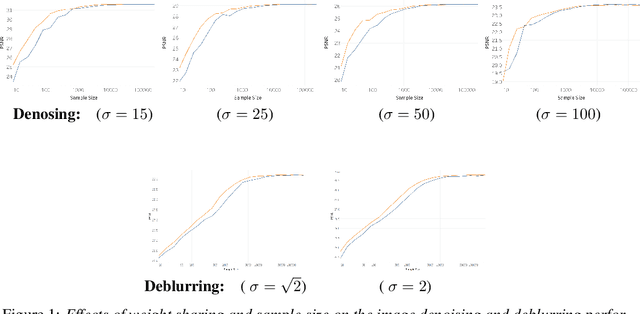

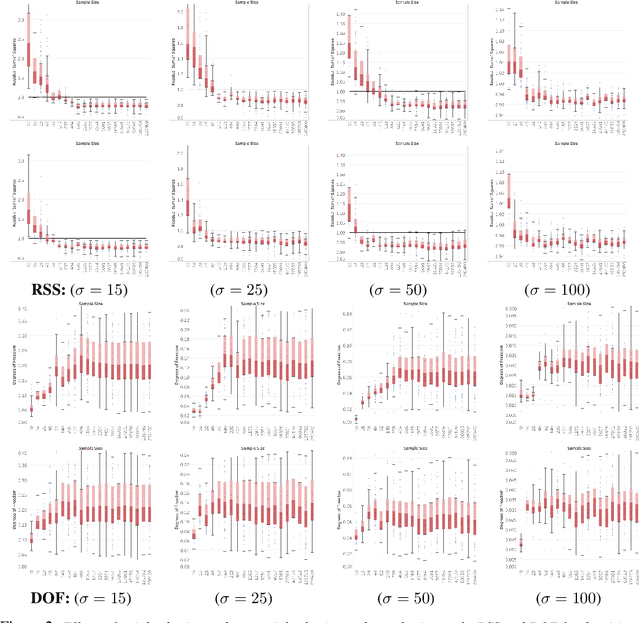

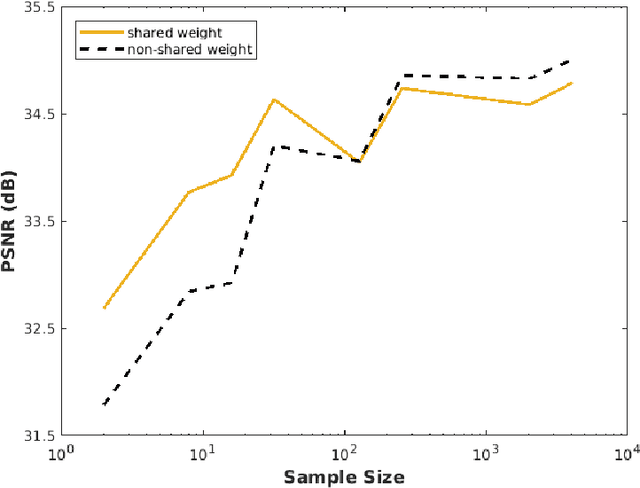

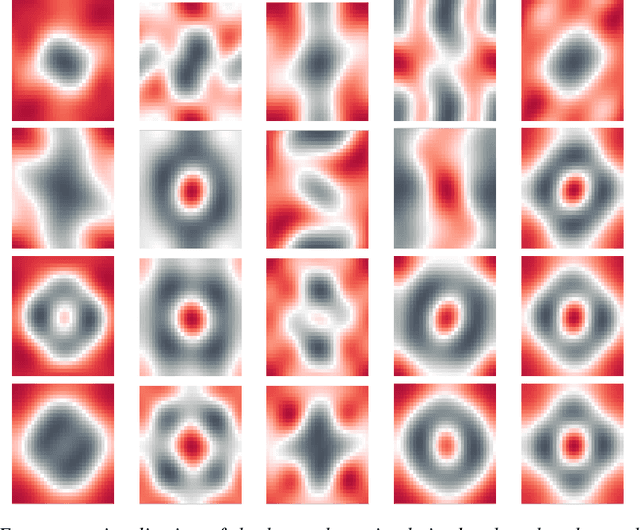

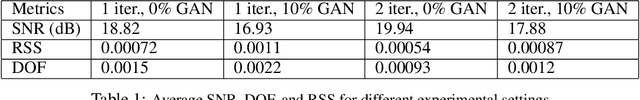

Abstract: Unrolled neural networks emerged recently as an effective model for learning inverse maps appearing in image restoration tasks. However, their generalization risk (i.e., test mean-squared-error) and its link to network design and training sample size remain mysterious. Leveraging Stein's Unbiased Risk Estimator (SURE), this paper analyzes the generalization risk with its bias and variance components for recurrent unrolled networks. We particularly investigate the degrees-of-freedom (DOF) component of SURE, the trace of the end-to-end network Jacobian, to quantify the prediction variance. We prove that DOF is well-approximated by the weighted path sparsity of the network under incoherence conditions on the trained weights. Empirically, we examine the SURE components as a function of training sample size for both recurrent and non-recurrent (with many more parameters) unrolled networks. Our key observations indicate that: 1) DOF increases with training sample size and converges to the generalization risk for both recurrent and non-recurrent schemes; 2) the recurrent network converges significantly faster (with fewer training samples) than the non-recurrent scheme; hence, recurrence serves as a regularization for low sample size regimes.
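The DOF term in this abstract is the trace of the Jacobian of the estimator with respect to its input. One common way to approximate such a trace without forming the Jacobian is a randomized finite-difference probe. The sketch below is an illustration under stated assumptions, not the paper's analysis: the estimator is a simple soft-threshold rather than an unrolled network, and `estimate_dof` and its parameters are hypothetical names.

```python
import numpy as np

def soft_threshold(y, lam):
    """Toy estimator standing in for a trained network."""
    return np.sign(y) * np.maximum(np.abs(y) - lam, 0.0)

def estimate_dof(f, y, num_probes=8, eps=1e-4, seed=0):
    """Approximate trace(df/dy) with Rademacher probes and finite differences."""
    rng = np.random.default_rng(seed)
    base = f(y)
    estimates = []
    for _ in range(num_probes):
        b = rng.choice([-1.0, 1.0], size=y.shape)  # random +/-1 probe vector
        # b^T J b, with J b approximated by a forward difference along b.
        estimates.append(np.sum(b * (f(y + eps * b) - base)) / eps)
    return float(np.mean(estimates))

y = np.array([3.0, -2.5, 0.2, -0.1, 1.7, 0.05])
dof = estimate_dof(lambda v: soft_threshold(v, 1.0), y)
# Three entries exceed the threshold in magnitude, so dof is close to 3.0.
```

For soft-thresholding the Jacobian is diagonal, so each probe recovers the count of surviving entries almost exactly. For a real network the probes are noisy and more of them need to be averaged.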

VAE-GANs for Probabilistic Compressive Image Recovery: Uncertainty Analysis

Jan 31, 2019

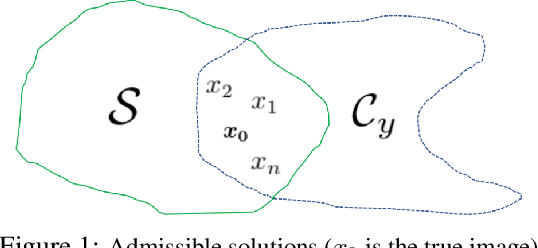

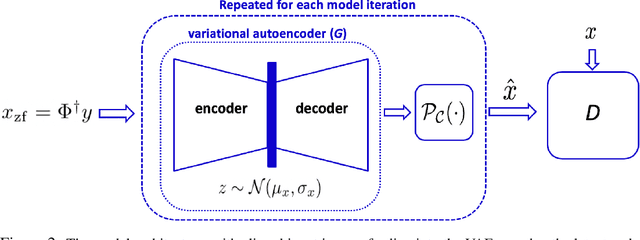

Abstract: Recovering high-quality images from limited sensory data is a challenging computer vision problem that has received significant attention in recent years. In particular, solutions based on deep learning, ranging from autoencoders to generative models, have been especially effective. However, comparatively little work has centered on the robustness of such reconstructions in terms of the generation of realistic image artifacts (known as hallucinations) and quantifying uncertainty. In this work, we develop experimental methods to address these concerns, utilizing a variational autoencoder-based generative adversarial network (VAE-GAN) as a probabilistic image recovery algorithm. We evaluate the model's output distribution statistically by exploring the variance, bias, and error associated with generated reconstructions. Furthermore, we perform eigen analysis by examining the Jacobians of outputs with respect to the aliased inputs to more accurately determine which input components can be responsible for deteriorated output quality. Experiments were carried out using a dataset of knee MRI images, and our results indicate that factors such as sampling rate, acquisition model, and loss function impact the model's robustness. We also conclude that a wise choice of hyperparameters can lead to the robust recovery of MRI images.

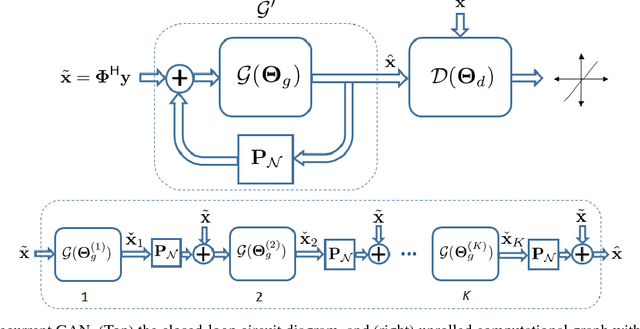

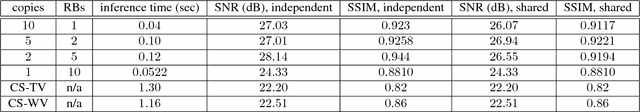

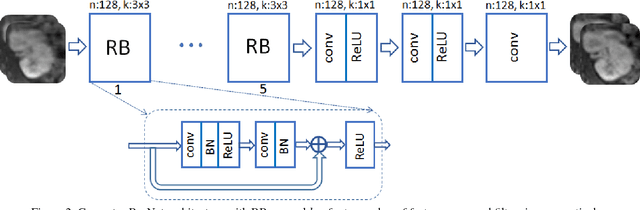

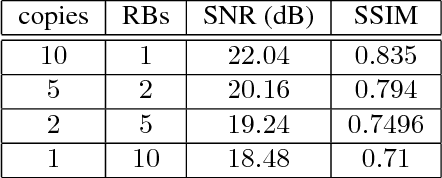

Recurrent Generative Adversarial Networks for Proximal Learning and Automated Compressive Image Recovery

Nov 27, 2017

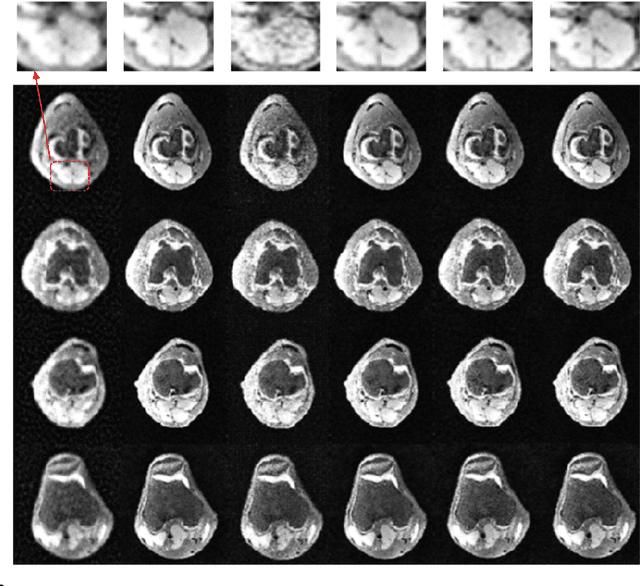

Abstract: Recovering images from undersampled linear measurements typically leads to an ill-posed linear inverse problem that calls for proper statistical priors. Building effective priors is, however, challenged by the low train and test overhead dictated by real-time tasks, and by the need for retrieving visually "plausible" and physically "feasible" images with minimal hallucination. To cope with these challenges, we design a cascaded network architecture that unrolls the proximal gradient iterations by permeating benefits from generative residual networks (ResNet) to modeling the proximal operator. A mixture of pixel-wise and perceptual costs is then deployed to train proximals. The overall architecture resembles back-and-forth projection onto the intersection of feasible and plausible images. Extensive computational experiments are conducted for a global task of reconstructing MR images of pediatric patients, and a more local task of superresolving CelebA faces, which offer insight into designing efficient architectures. Our observations indicate that for MRI reconstruction, a recurrent ResNet with a single residual block effectively learns the proximal. This simple architecture appears to significantly outperform the alternative deep ResNet architecture by 2 dB SNR, and the conventional compressed-sensing MRI by 4 dB SNR with 100x faster inference. For image superresolution, our preliminary results indicate that modeling the denoising proximal demands deep ResNets.
