Morteza Mardani

Demystifying Data-Driven Probabilistic Medium-Range Weather Forecasting

Jan 26, 2026Abstract:The recent revolution in data-driven methods for weather forecasting has lead to a fragmented landscape of complex, bespoke architectures and training strategies, obscuring the fundamental drivers of forecast accuracy. Here, we demonstrate that state-of-the-art probabilistic skill requires neither intricate architectural constraints nor specialized training heuristics. We introduce a scalable framework for learning multi-scale atmospheric dynamics by combining a directly downsampled latent space with a history-conditioned local projector that resolves high-resolution physics. We find that our framework design is robust to the choice of probabilistic estimator, seamlessly supporting stochastic interpolants, diffusion models, and CRPS-based ensemble training. Validated against the Integrated Forecasting System and the deep learning probabilistic model GenCast, our framework achieves statistically significant improvements on most of the variables. These results suggest scaling a general-purpose model is sufficient for state-of-the-art medium-range prediction, eliminating the need for tailored training recipes and proving effective across the full spectrum of probabilistic frameworks.

Warped Diffusion: Solving Video Inverse Problems with Image Diffusion Models

Oct 21, 2024

Abstract:Using image models naively for solving inverse video problems often suffers from flickering, texture-sticking, and temporal inconsistency in generated videos. To tackle these problems, in this paper, we view frames as continuous functions in the 2D space, and videos as a sequence of continuous warping transformations between different frames. This perspective allows us to train function space diffusion models only on images and utilize them to solve temporally correlated inverse problems. The function space diffusion models need to be equivariant with respect to the underlying spatial transformations. To ensure temporal consistency, we introduce a simple post-hoc test-time guidance towards (self)-equivariant solutions. Our method allows us to deploy state-of-the-art latent diffusion models such as Stable Diffusion XL to solve video inverse problems. We demonstrate the effectiveness of our method for video inpainting and $8\times$ video super-resolution, outperforming existing techniques based on noise transformations. We provide generated video results: https://giannisdaras.github.io/warped\_diffusion.github.io/.

Heavy-Tailed Diffusion Models

Oct 18, 2024Abstract:Diffusion models achieve state-of-the-art generation quality across many applications, but their ability to capture rare or extreme events in heavy-tailed distributions remains unclear. In this work, we show that traditional diffusion and flow-matching models with standard Gaussian priors fail to capture heavy-tailed behavior. We address this by repurposing the diffusion framework for heavy-tail estimation using multivariate Student-t distributions. We develop a tailored perturbation kernel and derive the denoising posterior based on the conditional Student-t distribution for the backward process. Inspired by $\gamma$-divergence for heavy-tailed distributions, we derive a training objective for heavy-tailed denoisers. The resulting framework introduces controllable tail generation using only a single scalar hyperparameter, making it easily tunable for diverse real-world distributions. As specific instantiations of our framework, we introduce t-EDM and t-Flow, extensions of existing diffusion and flow models that employ a Student-t prior. Remarkably, our approach is readily compatible with standard Gaussian diffusion models and requires only minimal code changes. Empirically, we show that our t-EDM and t-Flow outperform standard diffusion models in heavy-tail estimation on high-resolution weather datasets in which generating rare and extreme events is crucial.

Kilometer-Scale Convection Allowing Model Emulation using Generative Diffusion Modeling

Aug 20, 2024Abstract:Storm-scale convection-allowing models (CAMs) are an important tool for predicting the evolution of thunderstorms and mesoscale convective systems that result in damaging extreme weather. By explicitly resolving convective dynamics within the atmosphere they afford meteorologists the nuance needed to provide outlook on hazard. Deep learning models have thus far not proven skilful at km-scale atmospheric simulation, despite being competitive at coarser resolution with state-of-the-art global, medium-range weather forecasting. We present a generative diffusion model called StormCast, which emulates the high-resolution rapid refresh (HRRR) model-NOAA's state-of-the-art 3km operational CAM. StormCast autoregressively predicts 99 state variables at km scale using a 1-hour time step, with dense vertical resolution in the atmospheric boundary layer, conditioned on 26 synoptic variables. We present evidence of successfully learnt km-scale dynamics including competitive 1-6 hour forecast skill for composite radar reflectivity alongside physically realistic convective cluster evolution, moist updrafts, and cold pool morphology. StormCast predictions maintain realistic power spectra for multiple predicted variables across multi-hour forecasts. Together, these results establish the potential for autoregressive ML to emulate CAMs -- opening up new km-scale frontiers for regional ML weather prediction and future climate hazard dynamical downscaling.

Repulsive Score Distillation for Diverse Sampling of Diffusion Models

Jun 24, 2024

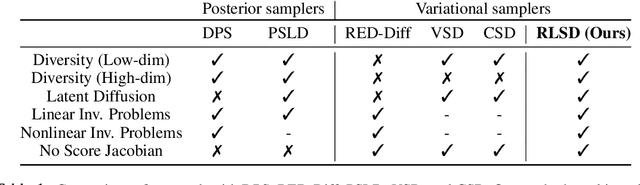

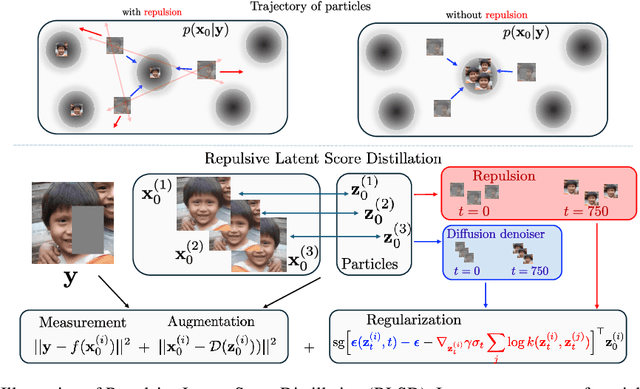

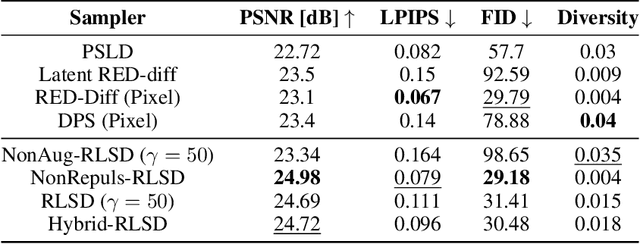

Abstract:Score distillation sampling has been pivotal for integrating diffusion models into generation of complex visuals. Despite impressive results it suffers from mode collapse and lack of diversity. To cope with this challenge, we leverage the gradient flow interpretation of score distillation to propose Repulsive Score Distillation (RSD). In particular, we propose a variational framework based on repulsion of an ensemble of particles that promotes diversity. Using a variational approximation that incorporates a coupling among particles, the repulsion appears as a simple regularization that allows interaction of particles based on their relative pairwise similarity, measured e.g., via radial basis kernels. We design RSD for both unconstrained and constrained sampling scenarios. For constrained sampling we focus on inverse problems in the latent space that leads to an augmented variational formulation, that strikes a good balance between compute, quality and diversity. Our extensive experiments for text-to-image generation, and inverse problems demonstrate that RSD achieves a superior trade-off between diversity and quality compared with state-of-the-art alternatives.

Generative Data Assimilation of Sparse Weather Station Observations at Kilometer Scales

Jun 19, 2024

Abstract:Data assimilation of observational data into full atmospheric states is essential for weather forecast model initialization. Recently, methods for deep generative data assimilation have been proposed which allow for using new input data without retraining the model. They could also dramatically accelerate the costly data assimilation process used in operational regional weather models. Here, in a central US testbed, we demonstrate the viability of score-based data assimilation in the context of realistically complex km-scale weather. We train an unconditional diffusion model to generate snapshots of a state-of-the-art km-scale analysis product, the High Resolution Rapid Refresh. Then, using score-based data assimilation to incorporate sparse weather station data, the model produces maps of precipitation and surface winds. The generated fields display physically plausible structures, such as gust fronts, and sensitivity tests confirm learnt physics through multivariate relationships. Preliminary skill analysis shows the approach already outperforms a naive baseline of the High-Resolution Rapid Refresh system itself. By incorporating observations from 40 weather stations, 10\% lower RMSEs on left-out stations are attained. Despite some lingering imperfections such as insufficiently disperse ensemble DA estimates, we find the results overall an encouraging proof of concept, and the first at km-scale. It is a ripe time to explore extensions that combine increasingly ambitious regional state generators with an increasing set of in situ, ground-based, and satellite remote sensing data streams.

Compositional Text-to-Image Generation with Dense Blob Representations

May 14, 2024

Abstract:Existing text-to-image models struggle to follow complex text prompts, raising the need for extra grounding inputs for better controllability. In this work, we propose to decompose a scene into visual primitives - denoted as dense blob representations - that contain fine-grained details of the scene while being modular, human-interpretable, and easy-to-construct. Based on blob representations, we develop a blob-grounded text-to-image diffusion model, termed BlobGEN, for compositional generation. Particularly, we introduce a new masked cross-attention module to disentangle the fusion between blob representations and visual features. To leverage the compositionality of large language models (LLMs), we introduce a new in-context learning approach to generate blob representations from text prompts. Our extensive experiments show that BlobGEN achieves superior zero-shot generation quality and better layout-guided controllability on MS-COCO. When augmented by LLMs, our method exhibits superior numerical and spatial correctness on compositional image generation benchmarks. Project page: https://blobgen-2d.github.io.

DiffObs: Generative Diffusion for Global Forecasting of Satellite Observations

Apr 04, 2024

Abstract:This work presents an autoregressive generative diffusion model (DiffObs) to predict the global evolution of daily precipitation, trained on a satellite observational product, and assessed with domain-specific diagnostics. The model is trained to probabilistically forecast day-ahead precipitation. Nonetheless, it is stable for multi-month rollouts, which reveal a qualitatively realistic superposition of convectively coupled wave modes in the tropics. Cross-spectral analysis confirms successful generation of low frequency variations associated with the Madden--Julian oscillation, which regulates most subseasonal to seasonal predictability in the observed atmosphere, and convectively coupled moist Kelvin waves with approximately correct dispersion relationships. Despite secondary issues and biases, the results affirm the potential for a next generation of global diffusion models trained on increasingly sparse, and increasingly direct and differentiated observations of the world, for practical applications in subseasonal and climate prediction.

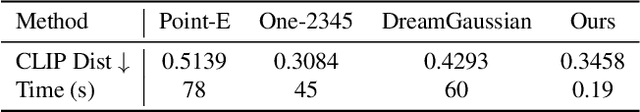

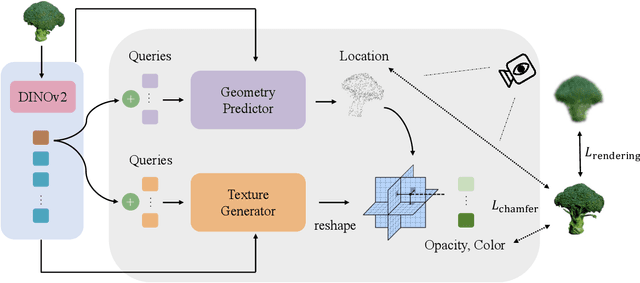

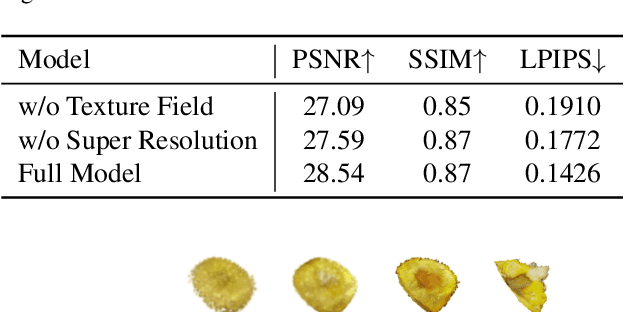

AGG: Amortized Generative 3D Gaussians for Single Image to 3D

Jan 08, 2024

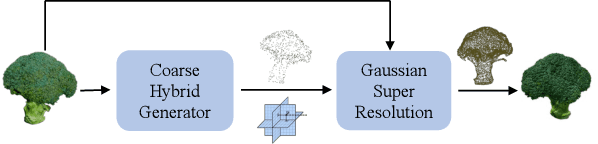

Abstract:Given the growing need for automatic 3D content creation pipelines, various 3D representations have been studied to generate 3D objects from a single image. Due to its superior rendering efficiency, 3D Gaussian splatting-based models have recently excelled in both 3D reconstruction and generation. 3D Gaussian splatting approaches for image to 3D generation are often optimization-based, requiring many computationally expensive score-distillation steps. To overcome these challenges, we introduce an Amortized Generative 3D Gaussian framework (AGG) that instantly produces 3D Gaussians from a single image, eliminating the need for per-instance optimization. Utilizing an intermediate hybrid representation, AGG decomposes the generation of 3D Gaussian locations and other appearance attributes for joint optimization. Moreover, we propose a cascaded pipeline that first generates a coarse representation of the 3D data and later upsamples it with a 3D Gaussian super-resolution module. Our method is evaluated against existing optimization-based 3D Gaussian frameworks and sampling-based pipelines utilizing other 3D representations, where AGG showcases competitive generation abilities both qualitatively and quantitatively while being several orders of magnitude faster. Project page: https://ir1d.github.io/AGG/

SMRD: SURE-based Robust MRI Reconstruction with Diffusion Models

Oct 18, 2023Abstract:Diffusion models have recently gained popularity for accelerated MRI reconstruction due to their high sample quality. They can effectively serve as rich data priors while incorporating the forward model flexibly at inference time, and they have been shown to be more robust than unrolled methods under distribution shifts. However, diffusion models require careful tuning of inference hyperparameters on a validation set and are still sensitive to distribution shifts during testing. To address these challenges, we introduce SURE-based MRI Reconstruction with Diffusion models (SMRD), a method that performs test-time hyperparameter tuning to enhance robustness during testing. SMRD uses Stein's Unbiased Risk Estimator (SURE) to estimate the mean squared error of the reconstruction during testing. SURE is then used to automatically tune the inference hyperparameters and to set an early stopping criterion without the need for validation tuning. To the best of our knowledge, SMRD is the first to incorporate SURE into the sampling stage of diffusion models for automatic hyperparameter selection. SMRD outperforms diffusion model baselines on various measurement noise levels, acceleration factors, and anatomies, achieving a PSNR improvement of up to 6 dB under measurement noise. The code is publicly available at https://github.com/NVlabs/SMRD .

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge