Sachin Chitta

Fabrica: Dual-Arm Assembly of General Multi-Part Objects via Integrated Planning and Learning

Jun 05, 2025

Abstract:Multi-part assembly poses significant challenges for robots to execute long-horizon, contact-rich manipulation with generalization across complex geometries. We present Fabrica, a dual-arm robotic system capable of end-to-end planning and control for autonomous assembly of general multi-part objects. For planning over long horizons, we develop hierarchies of precedence, sequence, grasp, and motion planning with automated fixture generation, enabling general multi-step assembly on any dual-arm robots. The planner is made efficient through a parallelizable design and is optimized for downstream control stability. For contact-rich assembly steps, we propose a lightweight reinforcement learning framework that trains generalist policies across object geometries, assembly directions, and grasp poses, guided by equivariance and residual actions obtained from the plan. These policies transfer zero-shot to the real world and achieve 80% successful steps. For systematic evaluation, we propose a benchmark suite of multi-part assemblies resembling industrial and daily objects across diverse categories and geometries. By integrating efficient global planning and robust local control, we showcase the first system to achieve complete and generalizable real-world multi-part assembly without domain knowledge or human demonstrations. Project website: http://fabrica.csail.mit.edu/

Flow-based Domain Randomization for Learning and Sequencing Robotic Skills

Feb 03, 2025

Abstract:Domain randomization in reinforcement learning is an established technique for increasing the robustness of control policies trained in simulation. By randomizing environment properties during training, the learned policy can become robust to uncertainties along the randomized dimensions. While the environment distribution is typically specified by hand, in this paper we investigate automatically discovering a sampling distribution via entropy-regularized reward maximization of a normalizing-flow-based neural sampling distribution. We show that this architecture is more flexible and provides greater robustness than existing approaches that learn simpler, parameterized sampling distributions, as demonstrated in six simulated and one real-world robotics domain. Lastly, we explore how these learned sampling distributions, combined with a privileged value function, can be used for out-of-distribution detection in an uncertainty-aware multi-step manipulation planner.

Hierarchical Hybrid Learning for Long-Horizon Contact-Rich Robotic Assembly

Sep 24, 2024

Abstract:Generalizable long-horizon robotic assembly requires reasoning at multiple levels of abstraction. End-to-end imitation learning (IL) has been proven a promising approach, but it requires a large amount of demonstration data for training and often fails to meet the high-precision requirement of assembly tasks. Reinforcement Learning (RL) approaches have succeeded in high-precision assembly tasks, but suffer from sample inefficiency and hence, are less competent at long-horizon tasks. To address these challenges, we propose a hierarchical modular approach, named ARCH (Adaptive Robotic Composition Hierarchy), which enables long-horizon high-precision assembly in contact-rich settings. ARCH employs a hierarchical planning framework, including a low-level primitive library of continuously parameterized skills and a high-level policy. The low-level primitive library includes essential skills for assembly tasks, such as grasping and inserting. These primitives consist of both RL and model-based controllers. The high-level policy, learned via imitation learning from a handful of demonstrations, selects the appropriate primitive skills and instantiates them with continuous input parameters. We extensively evaluate our approach on a real robot manipulation platform. We show that while trained on a single task, ARCH generalizes well to unseen tasks and outperforms baseline methods in terms of success rate and data efficiency. Videos can be found at https://long-horizon-assembly.github.io.

Toward Automated Programming for Robotic Assembly Using ChatGPT

May 13, 2024

Abstract:Despite significant technological advancements, the process of programming robots for adaptive assembly remains labor-intensive, demanding expertise in multiple domains and often resulting in task-specific, inflexible code. This work explores the potential of Large Language Models (LLMs), like ChatGPT, to automate this process, leveraging their ability to understand natural language instructions, generalize examples to new tasks, and write code. In this paper, we suggest how these abilities can be harnessed and applied to real-world challenges in the manufacturing industry. We present a novel system that uses ChatGPT to automate the process of programming robots for adaptive assembly by decomposing complex tasks into simpler subtasks, generating robot control code, executing the code in a simulated workcell, and debugging syntax and control errors, such as collisions. We outline the architecture of this system and strategies for task decomposition and code generation. Finally, we demonstrate how our system can autonomously program robots for various assembly tasks in a real-world project.

ASAP: Automated Sequence Planning for Complex Robotic Assembly with Physical Feasibility

Sep 29, 2023

Abstract:The automated assembly of complex products requires a system that can automatically plan a physically feasible sequence of actions for assembling many parts together. In this paper, we present ASAP, a physics-based planning approach for automatically generating such a sequence for general-shaped assemblies. ASAP accounts for gravity to design a sequence where each sub-assembly is physically stable with a limited number of parts being held and a support surface. We apply efficient tree search algorithms to reduce the combinatorial complexity of determining such an assembly sequence. The search can be guided by either geometric heuristics or graph neural networks trained on data with simulation labels. Finally, we show the superior performance of ASAP at generating physically realistic assembly sequence plans on a large dataset of hundreds of complex product assemblies. We further demonstrate the applicability of ASAP on both simulation and real-world robotic setups. Project website: asap.csail.mit.edu

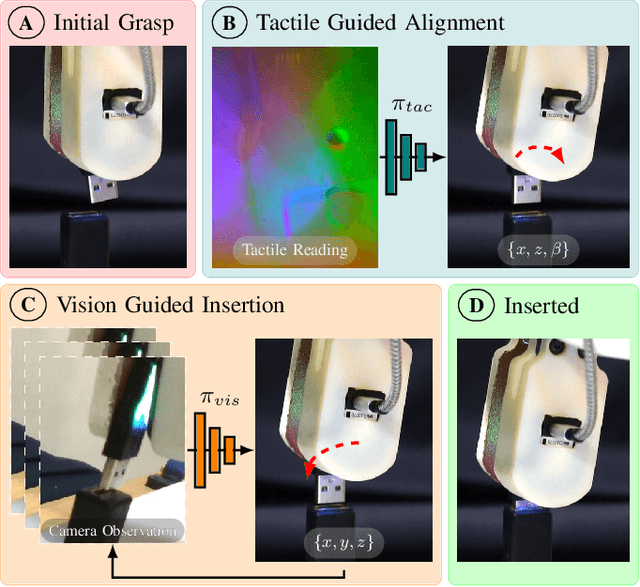

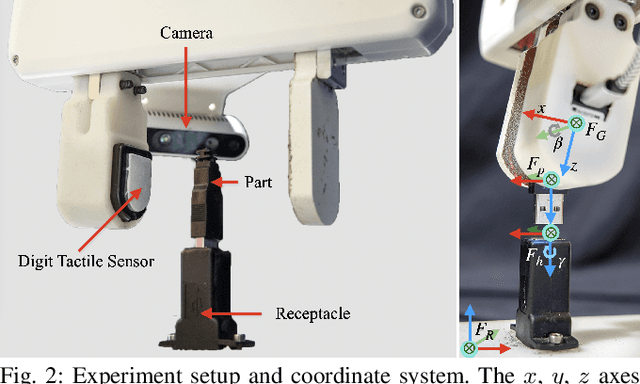

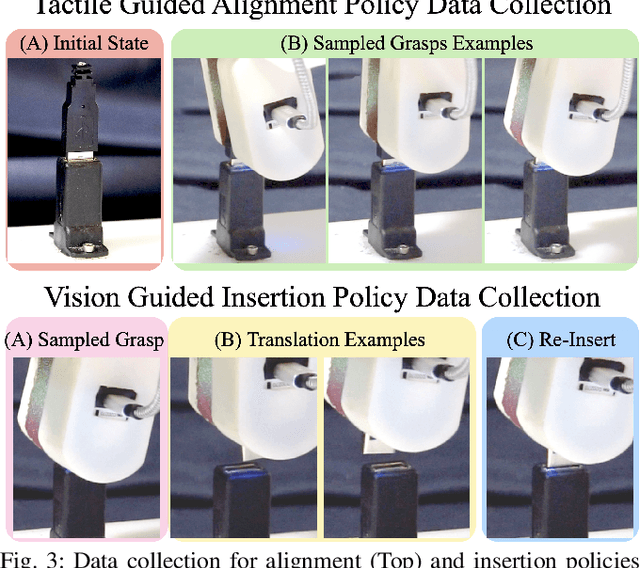

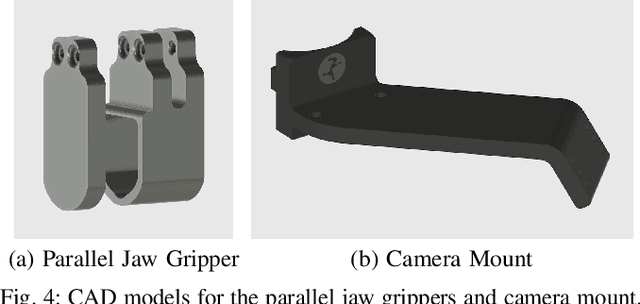

Safely Learning Visuo-Tactile Feedback Policies in Real For Industrial Insertion

Oct 04, 2022

Abstract:Industrial insertion tasks are often performed repetitively with parts that are subject to tight tolerances and prone to breakage. In this paper, we present a safe method to learn a visuo-tactile insertion policy that is robust against grasp pose variations while minimizing human inputs and collision between the robot and the environment. We achieve this by dividing the insertion task into two phases. In the first align phase, we learn a tactile-based grasp pose estimation model to align the insertion part with the receptacle. In the second insert phase, we learn a vision-based policy to guide the part into the receptacle. Using force-torque sensing, we also develop a safe self-supervised data collection pipeline that limits collision between the part and the surrounding environment. Physical experiments on the USB insertion task from the NIST Assembly Taskboard suggest that our approach can achieve 45/45 insertion successes on 45 different initial grasp poses, improving on two baselines: (1) a behavior cloning agent trained on 50 human insertion demonstrations (1/45) and (2) an online RL policy (TD3) trained in real (0/45).

On CAD Informed Adaptive Robotic Assembly

Aug 02, 2022

Abstract:We introduce a robotic assembly system that streamlines the design-to-make workflow for going from a CAD model of a product assembly to a fully programmed and adaptive assembly process. Our system captures (in the CAD tool) the intent of the assembly process for a specific robotic workcell and generates a recipe of task-level instructions. By integrating visual sensing with deep-learned perception models, the robots infer the necessary actions to assemble the design from the generated recipe. The perception models are trained directly from simulation, allowing the system to identify various parts based on CAD information. We demonstrate the system with a workcell of two robots to assemble interlocking 3D part designs. We first build and tune the assembly process in simulation, verifying the generated recipe. Finally, the real robotic workcell assembles the design using the same behavior.

Reducing the Barrier to Entry of Complex Robotic Software: a MoveIt! Case Study

Apr 15, 2014

Abstract:Developing robot-agnostic software frameworks involves synthesizing the disparate fields of robotic theory and software engineering while simultaneously accounting for a large variability in hardware designs and control paradigms. As the capabilities of robotic software frameworks increase, the setup difficulty and learning curve for new users also increase. If the entry barriers for configuring and using the software on robots are too high, even the most powerful of frameworks are useless. A growing need exists in robotic software engineering to aid users in getting started with, and customizing, the software framework as necessary for particular robotic applications. In this paper a case study is presented for the best practices found for lowering the barrier of entry in the MoveIt! framework, an open-source tool for mobile manipulation in ROS, that allows users to 1) quickly get basic motion planning functionality with minimal initial setup, 2) automate its configuration and optimization, and 3) easily customize its components. A graphical interface that assists the user in configuring MoveIt! is the cornerstone of our approach, coupled with the use of an existing standardized robot model for input, automatically generated robot-specific configuration files, and a plugin-based architecture for extensibility. These best practices are summarized into a set of barrier-to-entry design principles applicable to other robotic software. The approaches for lowering the entry barrier are evaluated through usage statistics and a user survey, and compared against our design objectives for their effectiveness to users.
