Jieliang Luo

A-Scan2BIM: Assistive Scan to Building Information Modeling

Nov 30, 2023

Abstract: This paper proposes an assistive system for architects that converts a large-scale point cloud into a standardized digital representation of a building for Building Information Modeling (BIM) applications. This process, known as Scan-to-BIM, requires many hours of manual work by a professional architect even for a single building floor. Given its challenging nature, the paper focuses on assisting architects in the Scan-to-BIM process instead of replacing them. Concretely, we propose an assistive Scan-to-BIM system that takes the raw sensor data and edit history (including the current BIM model), then auto-regressively predicts a sequence of model editing operations as APIs of professional BIM software (i.e., Autodesk Revit). The paper also presents the first building-scale Scan2BIM dataset that contains a sequence of model editing operations as the APIs of Autodesk Revit. The dataset contains 89 hours of Scan2BIM modeling processes by professional architects over 16 scenes, spanning over 35,000 m^2. We report our system's reconstruction quality with standard metrics, and we introduce a novel metric that measures how natural the order of reconstructed operations is. A simple modification to the reconstruction module helps improve performance, and our method is far superior to two other baselines in the order metric. We will release data, code, and models at a-scan2bim.github.io.

Hybrid Reinforcement Learning for Optimizing Pump Sustainability in Real-World Water Distribution Networks

Oct 13, 2023

Abstract: This article addresses the pump-scheduling optimization problem to enhance real-time control of real-world water distribution networks (WDNs). Our primary objectives are to adhere to physical operational constraints while reducing energy consumption and operational costs. Traditional optimization techniques, such as evolution-based and genetic algorithms, often fall short due to their lack of convergence guarantees. Conversely, reinforcement learning (RL) stands out for its adaptability to uncertainties and reduced inference time, enabling real-time responsiveness. However, the effective implementation of RL is contingent on building accurate simulation models for WDNs, and prior applications have been limited by errors in simulation training data. These errors can cause the RL agent to learn misleading patterns and actions and recommend suboptimal operational strategies. To overcome these challenges, we present an improved "hybrid RL" methodology. This method integrates the benefits of RL while anchoring it in historical data, which serves as a baseline to incrementally introduce optimal control recommendations. By leveraging operational data as a foundation for the agent's actions, we enhance the explainability of the agent's actions, foster more robust recommendations, and minimize error. Our findings demonstrate that the hybrid RL agent can significantly improve sustainability and operational efficiency, and can dynamically adapt to emerging scenarios in real-world WDNs.

ASAP: Automated Sequence Planning for Complex Robotic Assembly with Physical Feasibility

Sep 29, 2023

Abstract: The automated assembly of complex products requires a system that can automatically plan a physically feasible sequence of actions for assembling many parts together. In this paper, we present ASAP, a physics-based planning approach for automatically generating such a sequence for general-shaped assemblies. ASAP accounts for gravity to design a sequence where each sub-assembly is physically stable with a limited number of parts being held and a support surface. We apply efficient tree search algorithms to reduce the combinatorial complexity of determining such an assembly sequence. The search can be guided by either geometric heuristics or graph neural networks trained on data with simulation labels. Finally, we show the superior performance of ASAP at generating physically realistic assembly sequence plans on a large dataset of hundreds of complex product assemblies. We further demonstrate the applicability of ASAP on both simulation and real-world robotic setups. Project website: asap.csail.mit.edu

PlotMap: Automated Layout Design for Building Game Worlds

Sep 26, 2023

Abstract: World-building, the process of developing both the narrative and physical world of a game, plays a vital role in the game's experience. Critically acclaimed independent and AAA video games are praised for strong world-building, with game maps that masterfully intertwine with and elevate the narrative, captivating players and leaving a lasting impression. However, designing game maps that support a desired narrative is challenging, as it requires satisfying complex constraints from various considerations. Most existing map generation methods focus on considerations about gameplay mechanics or map topography, while the need to support the story is typically neglected. As a result, extensive manual adjustment is still required to design a game world that facilitates particular stories. In this work, we approach this problem by introducing an extra layer of plot facility layout design that is independent of the underlying map generation method in a world-building pipeline. Concretely, we present a system that leverages Reinforcement Learning (RL) to automatically assign concrete locations on a game map to abstract locations mentioned in a given story (plot facilities), following spatial constraints derived from the story. A decision-making agent moves the plot facilities around, considering their relationship to the map and each other, to locations on the map that best satisfy the constraints of the story. Our system considers input from multiple modalities: map images as pixels, facility locations as real values, and story constraints expressed in natural language. We develop a method of generating datasets of facility layout tasks, create an RL environment to train and evaluate RL models, and further analyze the behaviors of the agents through a group of comprehensive experiments and ablation studies, aiming to provide insights for RL-based plot facility layout design.

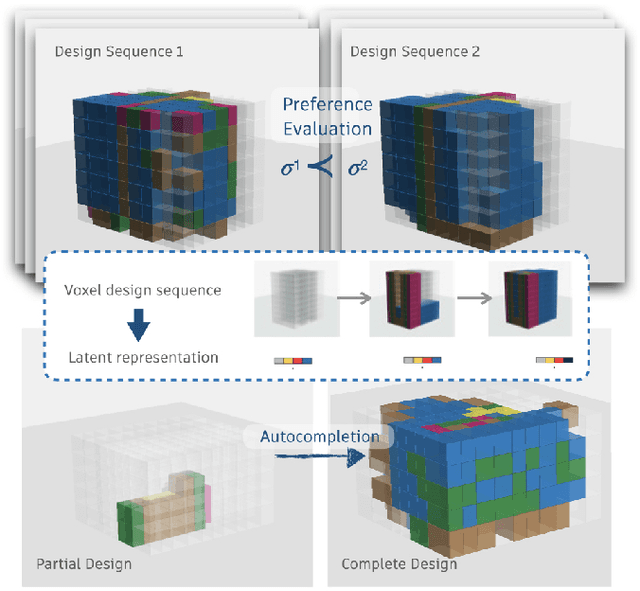

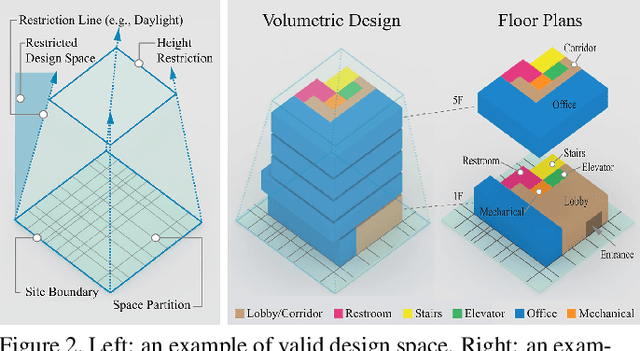

Representation Learning for Sequential Volumetric Design Tasks

Sep 05, 2023

Abstract: Volumetric design, also called massing design, is the first and critical step in professional building design, which is sequential in nature. As the volumetric design process is complex, the underlying sequential design process encodes valuable information for designers. Many efforts have been made to automatically generate reasonable volumetric designs, but the quality of the generated design solutions varies, and evaluating a design solution requires either a prohibitively comprehensive set of metrics or expensive human expertise. While previous approaches focused on learning only the final design instead of sequential design tasks, we propose to encode the design knowledge from a collection of expert or high-performing design sequences and extract useful representations using transformer-based models. We then propose to use the learned representations for crucial downstream applications such as design preference evaluation and procedural design generation. We develop the preference model by estimating the density of the learned representations, and we train an autoregressive transformer model for sequential design generation. We demonstrate our ideas by leveraging a novel dataset of thousands of sequential volumetric designs. Our preference model can compare two arbitrarily given design sequences and is almost 90% accurate in evaluation against random design sequences. Our autoregressive model is also capable of autocompleting a volumetric design sequence from a partial design sequence.

Assemble Them All: Physics-Based Planning for Generalizable Assembly by Disassembly

Nov 08, 2022

Abstract: Assembly planning is the core of automating product assembly, maintenance, and recycling for modern industrial manufacturing. Despite its importance and long history of research, planning for mechanical assemblies when given the final assembled state remains a challenging problem. This is due to the complexity of dealing with arbitrary 3D shapes and the highly constrained motion required for real-world assemblies. In this work, we propose a novel method to efficiently plan physically plausible assembly motion and sequences for real-world assemblies. Our method leverages the assembly-by-disassembly principle and physics-based simulation to efficiently explore a reduced search space. To evaluate the generality of our method, we define a large-scale dataset consisting of thousands of physically valid industrial assemblies with a variety of assembly motions required. Our experiments on this new benchmark demonstrate we achieve a state-of-the-art success rate and the highest computational efficiency compared to other baseline algorithms. Our method also generalizes to rotational assemblies (e.g., screws and puzzles) and solves 80-part assemblies within several minutes.

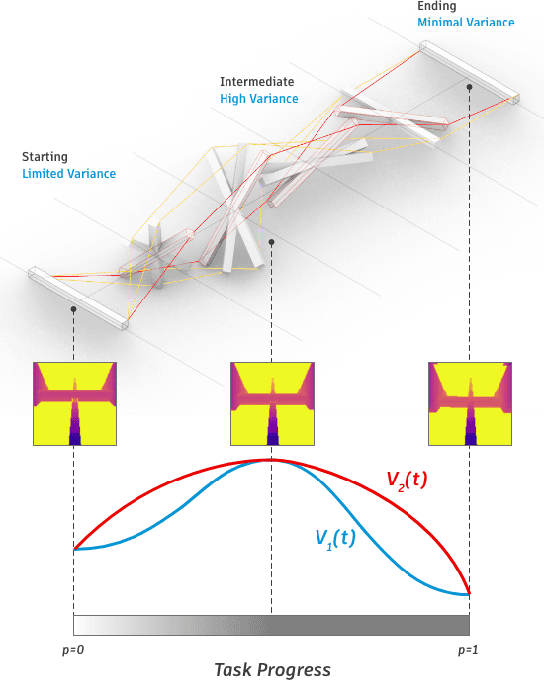

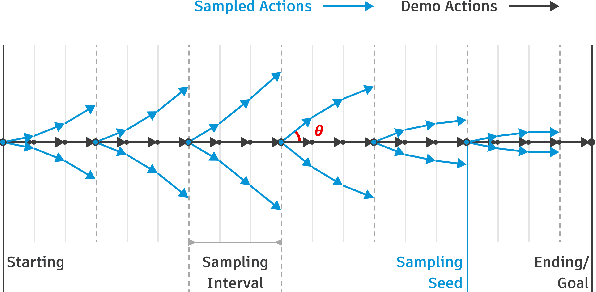

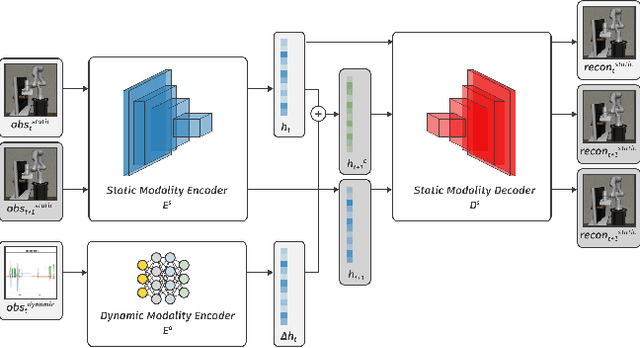

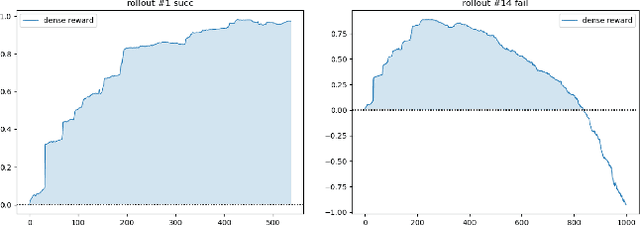

Learning Dense Reward with Temporal Variant Self-Supervision

May 26, 2022

Abstract: Rewards play an essential role in reinforcement learning. In contrast to rule-based game environments with well-defined reward functions, complex real-world robotic applications, such as contact-rich manipulation, lack explicit and informative descriptions that can directly be used as a reward. Previous work has shown that it is possible to algorithmically extract dense rewards directly from multimodal observations. In this paper, we aim to extend this work by proposing a more efficient and robust way of sampling and learning. In particular, our sampling approach utilizes temporal variance to simulate the fluctuating state and action distribution of a manipulation task. We then propose a network architecture for self-supervised learning to better incorporate temporal information in latent representations. We test our approach in two experimental setups, namely joint-assembly and door-opening. Preliminary results show that our approach is effective and efficient in learning dense rewards, and the learned rewards lead to faster convergence than baselines.

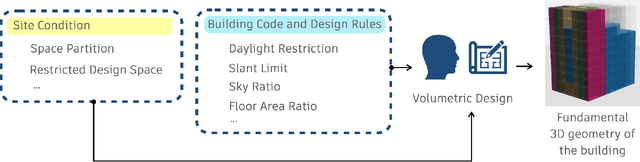

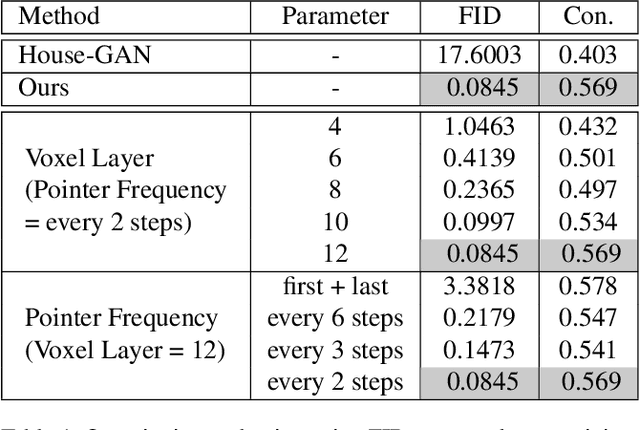

Building-GAN: Graph-Conditioned Architectural Volumetric Design Generation

Apr 27, 2021

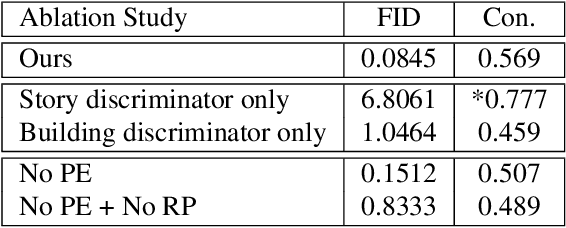

Abstract: Volumetric design is the first and critical step for professional building design, where architects not only depict the rough 3D geometry of the building but also specify the programs to form a 2D layout on each floor. Though 2D layout generation for a single story has been widely studied, there is no developed method for multi-story buildings. This paper focuses on volumetric design generation conditioned on an input program graph. Instead of outputting dense 3D voxels, we propose a new 3D representation named voxel graph that is both compact and expressive for building geometries. Our generator is a cross-modal graph neural network that uses a pointer mechanism to connect the input program graph and the output voxel graph, and the whole pipeline is trained using the adversarial framework. The generated designs are evaluated qualitatively by a user study and quantitatively using three metrics: quality, diversity, and connectivity accuracy. We show that our model generates realistic 3D volumetric designs and outperforms previous methods and baselines.

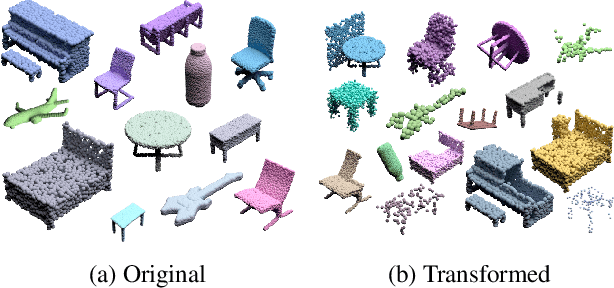

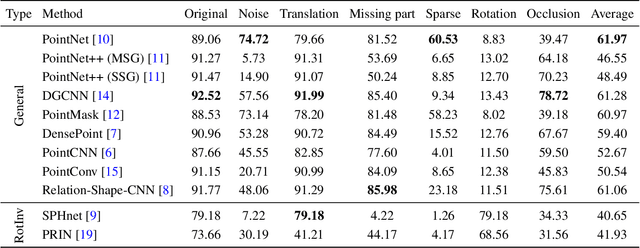

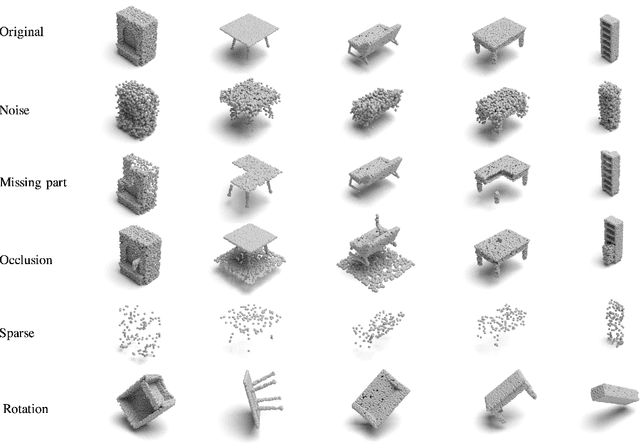

RobustPointSet: A Dataset for Benchmarking Robustness of Point Cloud Classifiers

Nov 25, 2020

Abstract: The 3D deep learning community has seen significant strides in point cloud processing over the last few years. However, the datasets on which deep models have been trained have largely remained the same. Most datasets comprise clean, clutter-free point clouds canonicalized for pose. Models trained on these datasets fail in uninterpretable and unintuitive ways when presented with data that contains transformations "unseen" at train time. While data augmentation enables models to be robust to "previously seen" input transformations, 1) we show that this does not work for unseen transformations during inference, and 2) data augmentation makes it difficult to analyze a model's inherent robustness to transformations. To this end, we create a publicly available dataset for robustness analysis of point cloud classification models (independent of data augmentation) to input transformations, called RobustPointSet. Our experiments indicate that despite all the progress in point cloud classification, there is no single architecture that consistently performs better (several fail drastically) when evaluated on transformed test sets. We also find that robustness to unseen transformations cannot be brought about merely by extensive data augmentation. RobustPointSet can be accessed through https://github.com/AutodeskAILab/RobustPointSet.

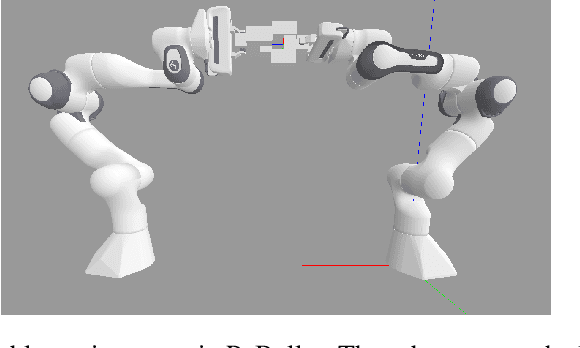

Recurrent Distributed Reinforcement Learning for Partially Observable Robotic Assembly

Oct 15, 2020

Abstract: In this work, we address partially observable reinforcement learning (RL) environments by adding recurrence. We focus on partially observable robotic assembly tasks in the continuous action domain, with force/torque sensing being the only observation. We have developed a new distributed RL agent, named Recurrent Distributed DDPG (RD2), which adds a recurrent neural network layer to Ape-X DDPG and makes two important improvements on prioritized experience replay to stabilize training. We demonstrate the effectiveness of RD2 on a variety of joint assembly tasks and a partially observable version of the pendulum task from OpenAI Gym. Our results show that RD2 is able to achieve better performance than Ape-X DDPG and PPO with LSTM on partially observable tasks with varying complexity. We also show that the trained models adapt well to different initial states and different types of noise injected in the simulated environment. The video presenting our experiments is available at https://sites.google.com/view/rd2-rl
