Learning Dense Reward with Temporal Variant Self-Supervision


May 26, 2022

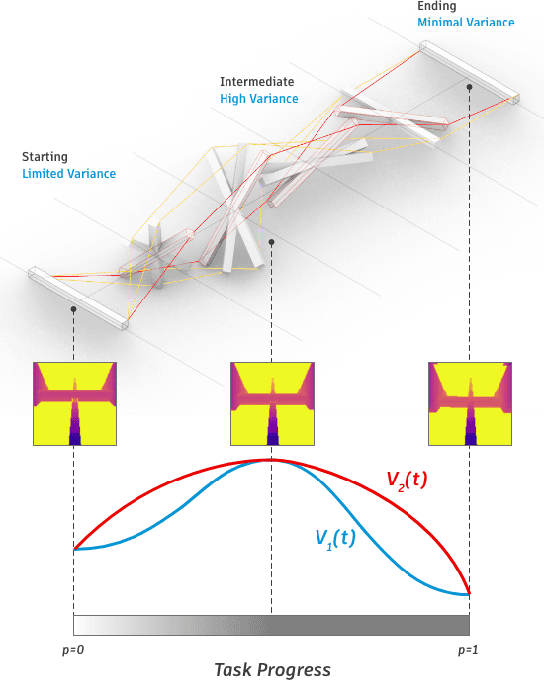

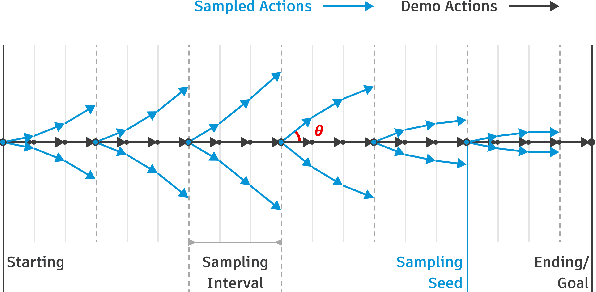

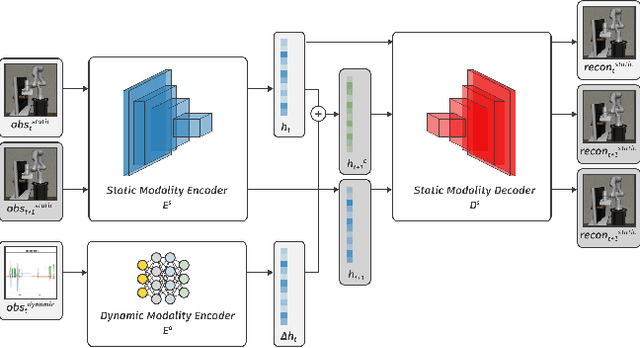

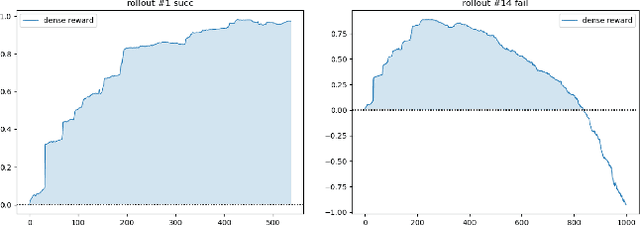

Rewards play an essential role in reinforcement learning. In contrast to rule-based game environments with well-defined reward functions, complex real-world robotic applications, such as contact-rich manipulation, lack explicit and informative descriptions that can be used directly as a reward. Previous work has shown that it is possible to algorithmically extract dense rewards directly from multimodal observations. In this paper, we extend this work by proposing a more efficient and robust way of sampling and learning. In particular, our sampling approach utilizes temporal variance to simulate the fluctuating state and action distribution of a manipulation task. We then propose a network architecture for self-supervised learning to better incorporate temporal information in latent representations. We test our approach in two experimental setups, namely joint-assembly and door-opening. Preliminary results show that our approach is effective and efficient in learning dense rewards, and that the learned rewards lead to faster convergence than baselines.
