Weilian Song

A-Scan2BIM: Assistive Scan to Building Information Modeling

Nov 30, 2023

Abstract: This paper proposes an assistive system for architects that converts a large-scale point cloud into a standardized digital representation of a building for Building Information Modeling (BIM) applications. The process is known as Scan-to-BIM, which requires many hours of manual work by a professional architect even for a single building floor. Given its challenging nature, the paper focuses on helping architects with the Scan-to-BIM process rather than replacing them. Concretely, we propose an assistive Scan-to-BIM system that takes the raw sensor data and edit history (including the current BIM model), then auto-regressively predicts a sequence of model editing operations as APIs of professional BIM software (i.e., Autodesk Revit). The paper also presents the first building-scale Scan2BIM dataset that contains a sequence of model editing operations as the APIs of Autodesk Revit. The dataset contains 89 hours of Scan2BIM modeling processes by professional architects over 16 scenes, spanning over 35,000 m^2. We report our system's reconstruction quality with standard metrics, and we introduce a novel metric that measures how natural the order of reconstructed operations is. A simple modification to the reconstruction module helps improve performance, and our method is far superior to two other baselines in the order metric. We will release data, code, and models at a-scan2bim.github.io.

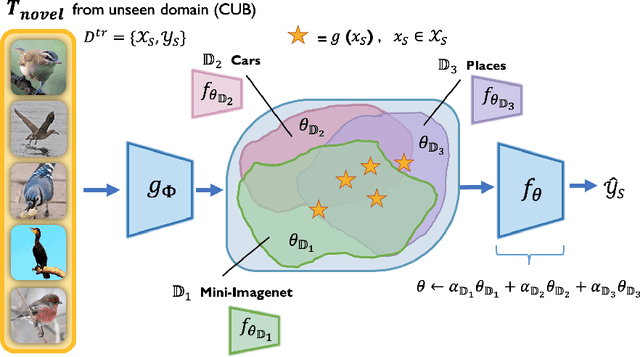

Combining Domain-Specific Meta-Learners in the Parameter Space for Cross-Domain Few-Shot Classification

Oct 31, 2020

Abstract: The goal of few-shot classification is to learn a model that can classify novel classes using only a few training examples. Despite the promising results shown by existing meta-learning algorithms in solving the few-shot classification problem, there still remains an important challenge: how to generalize to unseen domains while meta-learning on multiple seen domains? In this paper, we propose an optimization-based meta-learning method, called Combining Domain-Specific Meta-Learners (CosML), that addresses the cross-domain few-shot classification problem. CosML first trains a set of meta-learners, one for each training domain, to learn prior knowledge (i.e., meta-parameters) specific to each domain. The domain-specific meta-learners are then combined in the parameter space, by taking a weighted average of their meta-parameters, which is used as the initialization parameters of a task network that is quickly adapted to novel few-shot classification tasks in an unseen domain. Our experiments show that CosML outperforms a range of state-of-the-art methods and achieves strong cross-domain generalization ability.
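The parameter-space combination described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `combine_meta_parameters`, the dictionary layout of the parameters, and the choice to take the weights as given inputs are all assumptions made for illustration, since the abstract does not say how the weights are computed.

```python
from typing import Dict, List

# Hypothetical layout: each meta-learner's meta-parameters are stored as a
# mapping from a parameter name to a flat list of floats.
Params = Dict[str, List[float]]


def combine_meta_parameters(domain_params: List[Params],
                            weights: List[float]) -> Params:
    """Weighted average of per-domain meta-parameters (illustrative only)."""
    if not domain_params or len(domain_params) != len(weights):
        raise ValueError("need exactly one weight per domain-specific meta-learner")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    # Normalize so the result is a proper weighted average.
    normalized = [w / total for w in weights]

    combined: Params = {}
    for name, reference in domain_params[0].items():
        combined[name] = [
            sum(w * params[name][i] for w, params in zip(normalized, domain_params))
            for i in range(len(reference))
        ]
    return combined


# Two toy "domain-specific" meta-parameter sets with made-up values.
domain_a = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
domain_b = {"layer.weight": [3.0, 4.0], "layer.bias": [1.0]}

# Equal weights reduce to a plain average of the two parameter sets.
init_params = combine_meta_parameters([domain_a, domain_b], [1.0, 1.0])
```

In this sketch, `init_params` plays the role of the task network's initialization, which would then be adapted on the few labeled examples of a new task.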

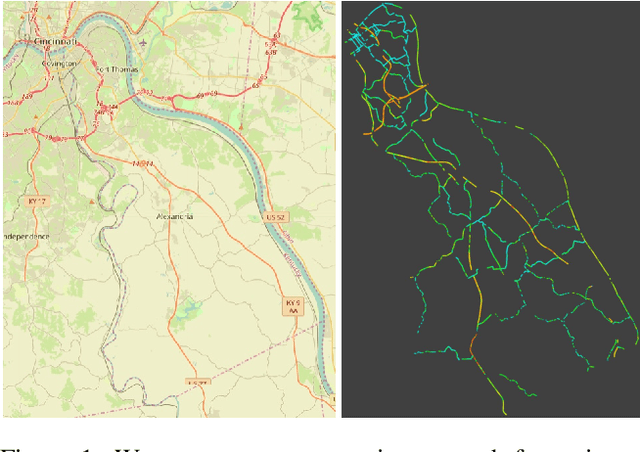

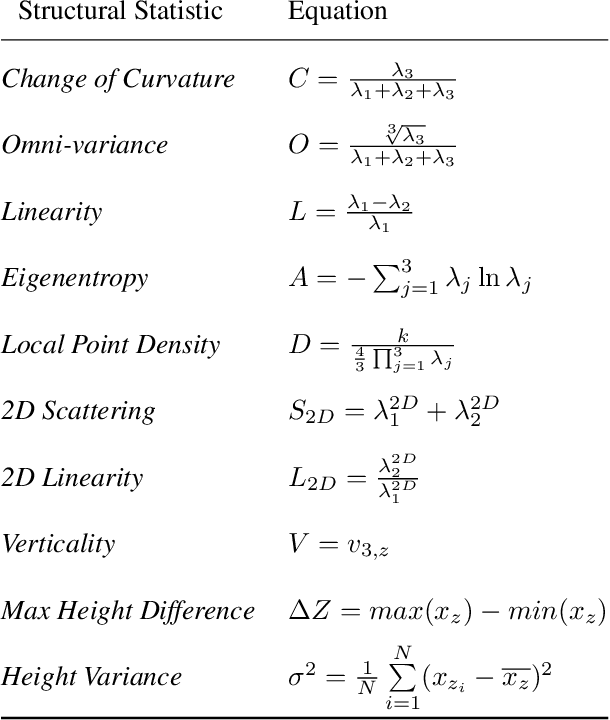

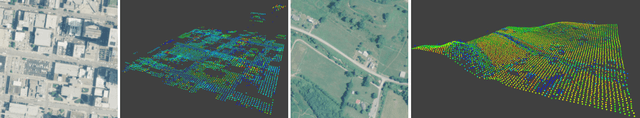

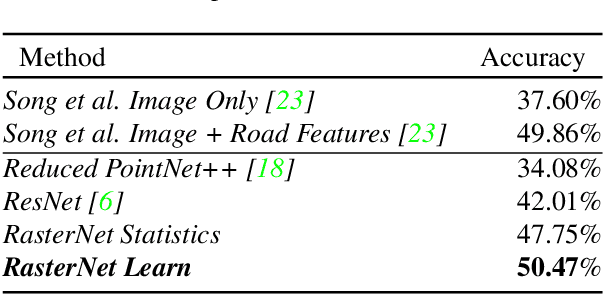

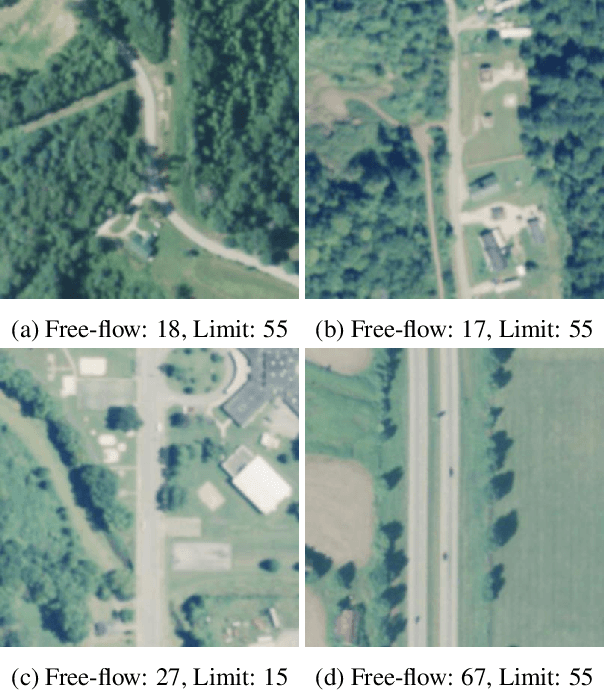

RasterNet: Modeling Free-Flow Speed using LiDAR and Overhead Imagery

Jun 14, 2020

Abstract: Roadway free-flow speed captures the typical vehicle speed in low traffic conditions. Modeling free-flow speed is an important problem in transportation engineering with applications to a variety of design, operation, planning, and policy decisions of highway systems. Unfortunately, collecting large-scale historical traffic speed data is expensive and time-consuming. Traditional approaches for estimating free-flow speed use geometric properties of the underlying road segment, such as grade, curvature, lane width, lateral clearance, and access point density, but for many roads such features are unavailable. We propose a fully automated approach, RasterNet, for estimating free-flow speed without the need for explicit geometric features. RasterNet is a neural network that fuses large-scale overhead imagery and aerial LiDAR point clouds using a geospatially consistent raster structure. To support training and evaluation, we introduce a novel dataset combining free-flow speeds of road segments, overhead imagery, and LiDAR point clouds across the state of Kentucky. Our method achieves state-of-the-art results on a benchmark dataset.

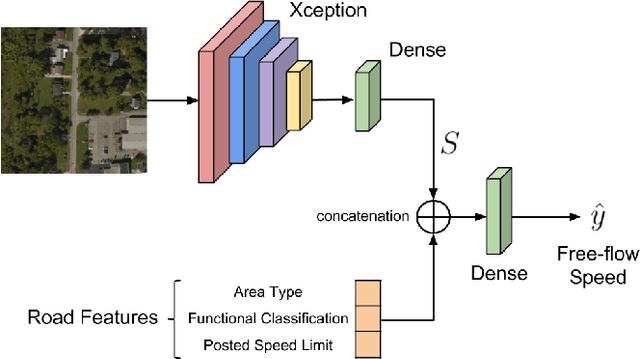

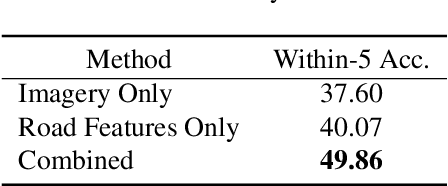

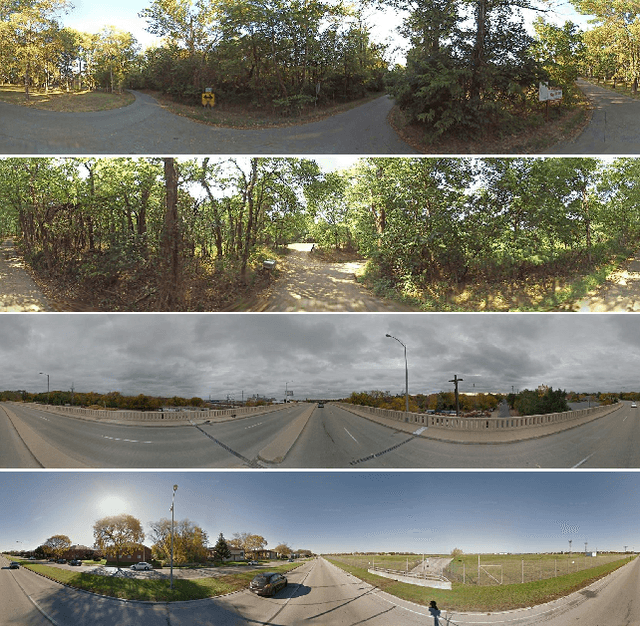

Remote Estimation of Free-Flow Speeds

Jun 24, 2019

Abstract: We propose an automated method to estimate a road segment's free-flow speed from overhead imagery and road metadata. The free-flow speed of a road segment is the average observed vehicle speed in ideal conditions, without congestion or adverse weather. Standard practice for estimating free-flow speeds depends on several road attributes, including grade, curve, and width of the right of way. Unfortunately, many of these fine-grained labels are not always readily available and are costly to manually annotate. To compensate, our model uses a small, easy-to-obtain subset of road features along with aerial imagery to directly estimate free-flow speed with a deep convolutional neural network (CNN). We evaluate our approach on a large dataset and demonstrate that using imagery alone performs nearly as well as the road features and that the combination of imagery with road features leads to the highest accuracy.
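The definition of free-flow speed given in the abstract (average observed speed under ideal conditions) can be made concrete with a short sketch. The record type `SpeedObservation`, its field names, and the sample values are hypothetical and are not drawn from the paper's dataset; the paper's method estimates this quantity from imagery rather than computing it from observations.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class SpeedObservation:
    """Hypothetical record of one observed vehicle speed on a road segment."""
    speed_mph: float
    congested: bool
    adverse_weather: bool


def free_flow_speed(observations: List[SpeedObservation]) -> float:
    """Average speed over observations taken in ideal conditions only."""
    ideal = [
        obs.speed_mph
        for obs in observations
        if not obs.congested and not obs.adverse_weather
    ]
    if not ideal:
        raise ValueError("no observations under ideal conditions")
    return mean(ideal)


# Made-up sample: only the first two observations count as ideal conditions.
sample = [
    SpeedObservation(60.0, congested=False, adverse_weather=False),
    SpeedObservation(64.0, congested=False, adverse_weather=False),
    SpeedObservation(30.0, congested=True, adverse_weather=False),
    SpeedObservation(45.0, congested=False, adverse_weather=True),
]
segment_ffs = free_flow_speed(sample)
```

A value computed this way is the kind of per-segment target that a learned model would be trained to predict when such observations are unavailable.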

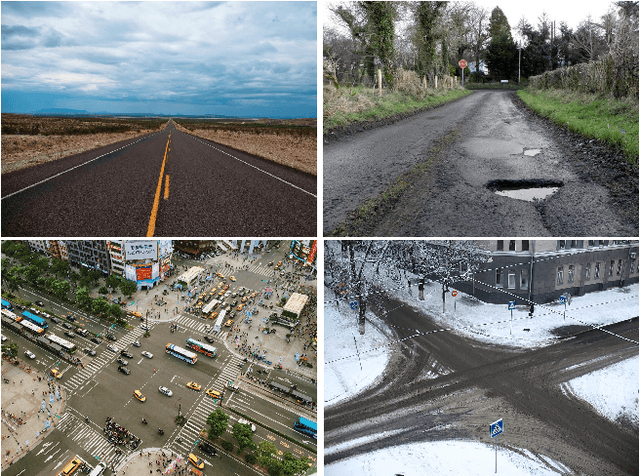

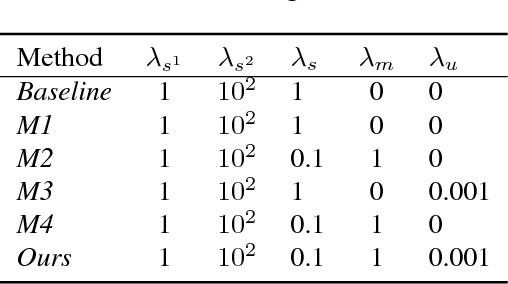

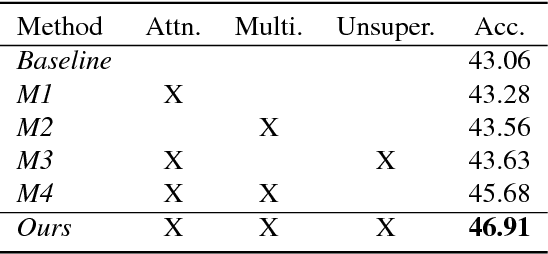

FARSA: Fully Automated Roadway Safety Assessment

Jan 17, 2019

Abstract: This paper addresses the task of road safety assessment. An emerging approach for conducting such assessments in the United States is through the US Road Assessment Program (usRAP), which rates roads from highest risk (1 star) to lowest (5 stars). Obtaining these ratings requires manual, fine-grained labeling of roadway features in street-level panoramas, a slow and costly process. We propose to automate this process using a deep convolutional neural network that directly estimates the star rating from a street-level panorama, requiring milliseconds per image at test time. Our network also estimates many other road-level attributes, including curvature, roadside hazards, and the type of median. To support this, we incorporate task-specific attention layers so the network can focus on the panorama regions that are most useful for a particular task. We evaluated our approach on a large dataset of real-world images from two US states. We found that incorporating additional tasks, and using a semi-supervised training approach, significantly reduced overfitting problems, allowed us to optimize more layers of the network, and resulted in higher accuracy.
