Ruoming Jin

Kent State University

NOIR: Privacy-Preserving Generation of Code with Open-Source LLMs

Jan 22, 2026

Abstract:Although it boosts software development performance, large language model (LLM)-powered code generation introduces intellectual property and data security risks, because a service provider (cloud) observes a client's prompts and generated code, which can be proprietary in commercial systems. To mitigate this problem, we propose NOIR, the first framework to protect the client's prompts and generated code from the cloud. NOIR uses an encoder and a decoder at the client to encode and send the prompts' embeddings to the cloud to get enriched embeddings from the LLM, which are then decoded to generate the code locally at the client. Since the cloud can use the embeddings to infer the prompt and the generated code, NOIR introduces a new mechanism to achieve indistinguishability, a local differential privacy protection at the token embedding level, in the vocabulary used in the prompts and code, and a data-independent and randomized tokenizer on the client side. These components effectively defend against reconstruction and frequency analysis attacks by an honest-but-curious cloud. Extensive analysis and results using open-source LLMs show that NOIR significantly outperforms existing baselines on benchmarks, including EvalPlus (MBPP and HumanEval, Pass@1 of 76.7 and 77.4) and BigCodeBench (Pass@1 of 38.7, only a 1.77% drop from the original LLM), under strong privacy against attacks.
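
The abstract reports Pass@1 scores but does not say how they were computed. As a rough illustration only, the sketch below uses the unbiased pass@k estimator that is commonly applied to HumanEval-style benchmarks; whether NOIR's evaluation uses this exact estimator is an assumption, and the function names `pass_at_k` and `benchmark_pass_at_k` are hypothetical.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k for one problem from n generated samples, c of which pass.

    Assumption: this is the widely used unbiased estimator
    1 - C(n - c, k) / C(n, k), not a formula taken from the abstract.
    """
    if n - c < k:
        # Fewer than k failing samples: every size-k draw contains a pass.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results, k=1):
    """Average the per-problem estimate over (n, c) pairs, one pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

With a single sample per problem (n = 1), Pass@1 reduces to the fraction of problems whose one generated solution passes all tests.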

PAC-Bayes Bounds for Multivariate Linear Regression and Linear Autoencoders

Dec 15, 2025

Abstract:Linear Autoencoders (LAEs) have shown strong performance in state-of-the-art recommender systems. However, this success remains largely empirical, with limited theoretical understanding. In this paper, we investigate the generalizability -- a theoretical measure of model performance in statistical learning -- of multivariate linear regression and LAEs. We first propose a PAC-Bayes bound for multivariate linear regression, extending the earlier bound for single-output linear regression by Shalaeva et al., and establish sufficient conditions for its convergence. We then show that LAEs, when evaluated under a relaxed mean squared error, can be interpreted as constrained multivariate linear regression models on bounded data, to which our bound adapts. Furthermore, we develop theoretical methods to improve the computational efficiency of optimizing the LAE bound, enabling its practical evaluation on large models and real-world datasets. Experimental results demonstrate that our bound is tight and correlates well with practical ranking metrics such as Recall@K and NDCG@K.
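
For readers unfamiliar with the ranking metrics named at the end of the abstract, here is a minimal sketch of Recall@K and NDCG@K with binary relevance. The exact variants used in the paper are not stated in the abstract (some recommender evaluations normalize recall by min(K, number of relevant items) instead), so the definitions and the function names below are assumptions.

```python
import math


def recall_at_k(ranked_items, relevant, k):
    """Fraction of the relevant items that appear in the top-k of the ranking."""
    if not relevant:
        return 0.0
    hits = sum(1 for item in ranked_items[:k] if item in relevant)
    return hits / len(relevant)


def ndcg_at_k(ranked_items, relevant, k):
    """Binary-relevance NDCG: discounted gain of the top-k over the ideal gain."""
    dcg = sum(
        1.0 / math.log2(rank + 2)  # rank is 0-based, so position 1 gets log2(2)
        for rank, item in enumerate(ranked_items[:k])
        if item in relevant
    )
    ideal_hits = min(len(relevant), k)
    idcg = sum(1.0 / math.log2(rank + 2) for rank in range(ideal_hits))
    return dcg / idcg if idcg > 0 else 0.0
```

Both functions take a ranked list of item identifiers and a set of held-out relevant items for one user; per-user values are typically averaged over all users.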

Evaluating LLM Alignment on Personality Inference from Real-World Interview Data

Sep 16, 2025

Abstract:Large Language Models (LLMs) are increasingly deployed in roles requiring nuanced psychological understanding, such as emotional support agents, counselors, and decision-making assistants. However, their ability to interpret human personality traits, a critical aspect of such applications, remains unexplored, particularly in ecologically valid conversational settings. While prior work has simulated LLM "personas" using discrete Big Five labels on social media data, the alignment of LLMs with continuous, ground-truth personality assessments derived from natural interactions is largely unexamined. To address this gap, we introduce a novel benchmark comprising semi-structured interview transcripts paired with validated continuous Big Five trait scores. Using this dataset, we systematically evaluate LLM performance across three paradigms: (1) zero-shot and chain-of-thought prompting with GPT-4.1 Mini, (2) LoRA-based fine-tuning applied to both RoBERTa and Meta-LLaMA architectures, and (3) regression using static embeddings from pretrained BERT and OpenAI's text-embedding-3-small. Our results reveal that all Pearson correlations between model predictions and ground-truth personality traits remain below 0.26, highlighting the limited alignment of current LLMs with validated psychological constructs. Chain-of-thought prompting offers minimal gains over zero-shot, suggesting that personality inference relies more on latent semantic representation than explicit reasoning. These findings underscore the challenges of aligning LLMs with complex human attributes and motivate future work on trait-specific prompting, context-aware modeling, and alignment-oriented fine-tuning.

SoK: Are Watermarks in LLMs Ready for Deployment?

Jun 05, 2025

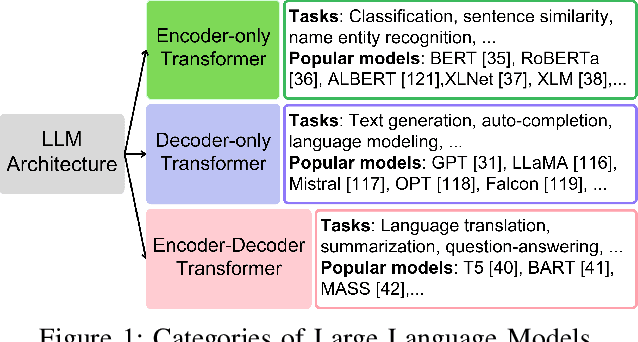

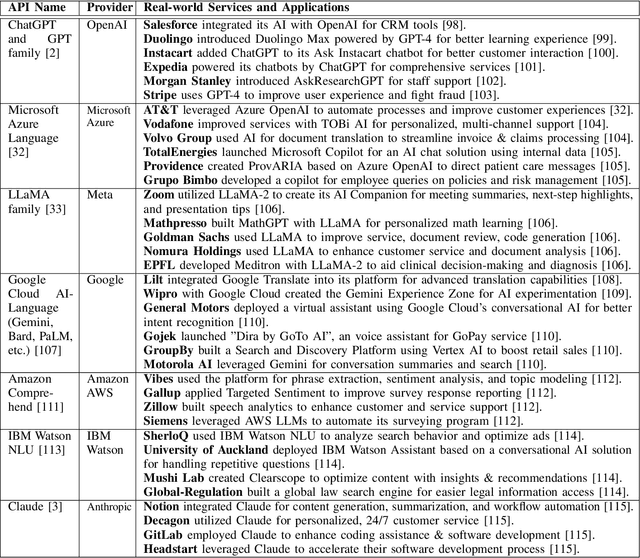

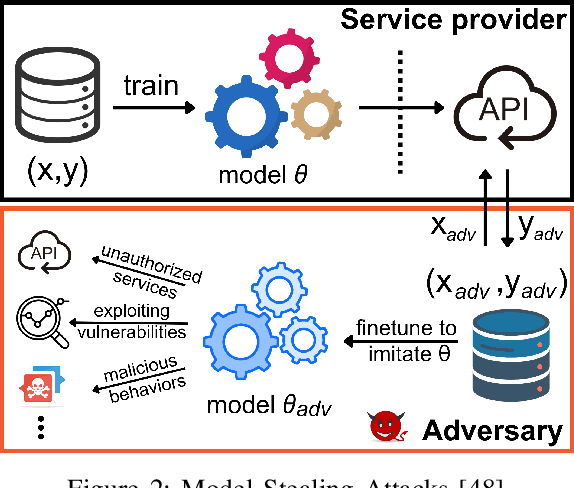

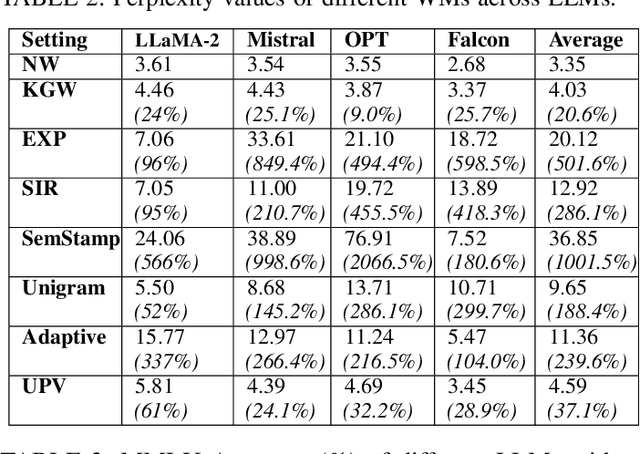

Abstract:Large Language Models (LLMs) have transformed natural language processing, demonstrating impressive capabilities across diverse tasks. However, deploying these models introduces critical risks related to intellectual property violations and potential misuse, particularly as adversaries can imitate these models to steal services or generate misleading outputs. We specifically focus on model stealing attacks, as they are highly relevant to proprietary LLMs and pose a serious threat to their security, revenue, and ethical deployment. While various watermarking techniques have emerged to mitigate these risks, it remains unclear how far the community and industry have progressed in developing and deploying watermarks in LLMs. To bridge this gap, we aim to develop a comprehensive systematization for watermarks in LLMs by 1) presenting a detailed taxonomy for watermarks in LLMs, 2) proposing a novel intellectual property classifier to explore the effectiveness and impacts of watermarks on LLMs under both attack and attack-free environments, 3) analyzing the limitations of existing watermarks in LLMs, and 4) discussing practical challenges and potential future directions for watermarks in LLMs. Through extensive experiments, we show that despite promising research outcomes and significant attention from leading companies and the community to deploying watermarks, these techniques have yet to reach their full potential in real-world applications due to their unfavorable impacts on the model utility of LLMs and on downstream tasks. Our findings provide an insightful understanding of watermarks in LLMs, highlighting the need for practical watermark solutions tailored to LLM deployment.

FedX: Adaptive Model Decomposition and Quantization for IoT Federated Learning

Apr 19, 2025

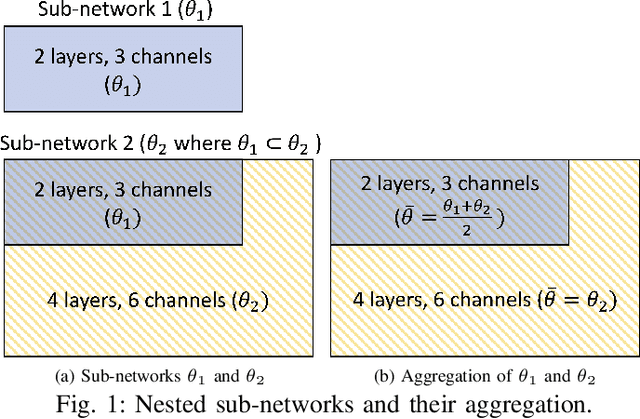

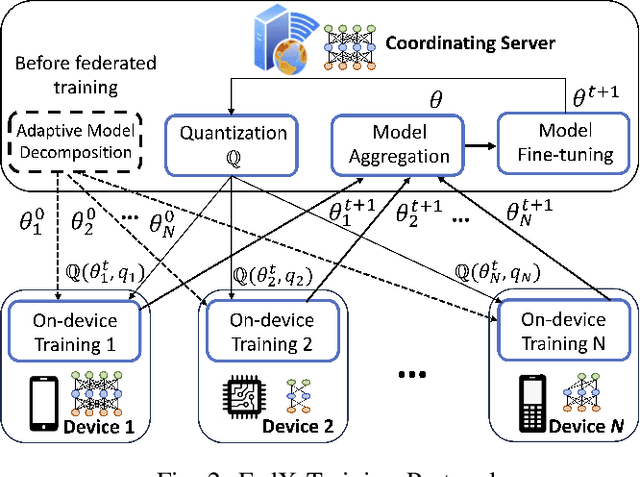

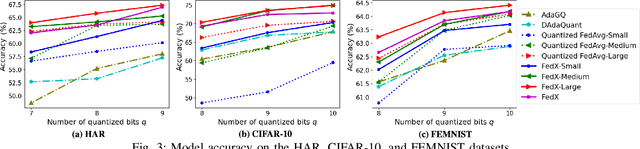

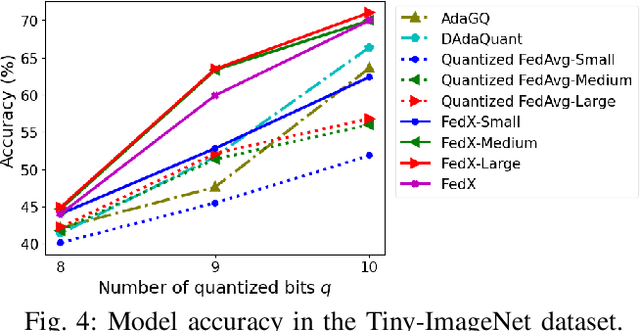

Abstract:Federated Learning (FL) allows collaborative training among multiple devices without data sharing, thus enabling privacy-sensitive applications on mobile or Internet of Things (IoT) devices, such as mobile health and asset tracking. However, designing an FL system with good model utility that works with low computation/communication overhead on heterogeneous, resource-constrained mobile/IoT devices is challenging. To address this problem, this paper proposes FedX, a novel adaptive model decomposition and quantization FL system for IoT. To balance utility with resource constraints on IoT devices, FedX decomposes a global FL model into different sub-networks with adaptive numbers of quantized bits for different devices. The key idea is that a device with fewer resources receives a smaller sub-network for lower overhead but utilizes a larger number of quantized bits for higher model utility, and vice versa. The quantization operations in FedX are done at the server to reduce the computational load on devices. FedX iteratively minimizes the losses in the devices' local data and in the server's public data using quantized sub-networks under a regularization term, and thus it maximizes the benefits of combining FL with model quantization through knowledge sharing among the server and devices in a cost-effective training process. Extensive experiments show that FedX significantly improves quantization times by up to 8.43X, on-device computation time by 1.5X, and total end-to-end training time by 1.36X, compared with baseline FL systems. We guarantee the global model convergence theoretically and validate local model convergence empirically, highlighting FedX's optimization efficiency.
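
To make the notion of "number of quantized bits" concrete, the following is a generic uniform quantizer, given purely as an illustration. It is not FedX's quantization scheme, which the abstract does not specify; the function name `quantize_uniform` and the min/max range choice are assumptions.

```python
def quantize_uniform(weights, num_bits):
    """Snap each weight to one of 2**num_bits evenly spaced levels in [min, max].

    Illustrative only: a plain uniform quantizer, not the method used by FedX.
    """
    levels = 2 ** num_bits - 1          # number of intervals between levels
    lo, hi = min(weights), max(weights)
    if hi == lo:
        # All weights are identical, so there is nothing to quantize.
        return list(weights)
    scale = (hi - lo) / levels          # width of one quantization step
    return [lo + round((w - lo) / scale) * scale for w in weights]


weights = [-1.0, -0.3, 0.2, 1.0]
coarse = quantize_uniform(weights, num_bits=2)   # at most 4 distinct values
fine = quantize_uniform(weights, num_bits=8)     # at most 256 distinct values
```

More bits give a smaller step size and therefore a smaller rounding error per weight, which is the trade-off the abstract refers to when it pairs smaller sub-networks with a larger number of quantized bits.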

Can Large Language Models Understand Intermediate Representations?

Feb 07, 2025

Abstract:Intermediate Representations (IRs) are essential in compiler design and program analysis, yet their comprehension by Large Language Models (LLMs) remains underexplored. This paper presents a pioneering empirical study to investigate the capabilities of LLMs, including GPT-4, GPT-3, Gemma 2, LLaMA 3.1, and Code Llama, in understanding IRs. We analyze their performance across four tasks: Control Flow Graph (CFG) reconstruction, decompilation, code summarization, and execution reasoning. Our results indicate that while LLMs demonstrate competence in parsing IR syntax and recognizing high-level structures, they struggle with control flow reasoning, execution semantics, and loop handling. Specifically, they often misinterpret branching instructions, omit critical IR operations, and rely on heuristic-based reasoning, leading to errors in CFG reconstruction, IR decompilation, and execution reasoning. The study underscores the necessity for IR-specific enhancements in LLMs, recommending fine-tuning on structured IR datasets and integration of explicit control flow models to augment their comprehension and handling of IR-related tasks.

Leveraging Large Language Models to Analyze Emotional and Contextual Drivers of Teen Substance Use in Online Discussions

Jan 23, 2025

Abstract:Adolescence is a critical stage often linked to risky behaviors, including substance use, with significant developmental and public health implications. Social media provides a lens into adolescent self-expression, but interpreting emotional and contextual signals remains complex. This study applies Large Language Models (LLMs) to analyze adolescents' social media posts, uncovering emotional patterns (e.g., sadness, guilt, fear, joy) and contextual factors (e.g., family, peers, school) related to substance use. Heatmap and machine learning analyses identified key predictors of substance use-related posts. Negative emotions like sadness and guilt were significantly more frequent in substance use contexts, with guilt acting as a protective factor, while shame and peer influence heightened substance use risk. Joy was more common in non-substance use discussions. Peer influence correlated strongly with sadness, fear, and disgust, while family and school environments aligned with non-substance use. Findings underscore the importance of addressing emotional vulnerabilities and contextual influences, suggesting that collaborative interventions involving families, schools, and communities can reduce risk factors and foster healthier adolescent development.

Investigating Large Language Models in Inferring Personality Traits from User Conversations

Jan 13, 2025

Abstract:Large Language Models (LLMs) are demonstrating remarkable human-like capabilities across diverse domains, including psychological assessment. This study evaluates whether LLMs, specifically GPT-4o and GPT-4o mini, can infer Big Five personality traits and generate Big Five Inventory-10 (BFI-10) item scores from user conversations under zero-shot prompting conditions. Our findings reveal that incorporating an intermediate step--prompting for BFI-10 item scores before calculating traits--enhances accuracy and aligns more closely with the gold standard than direct trait inference. This structured approach underscores the importance of leveraging psychological frameworks in improving predictive precision. Additionally, a group comparison based on depressive symptom presence revealed differential model performance. Participants were categorized into two groups: those experiencing at least one depressive symptom and those without symptoms. GPT-4o mini demonstrated heightened sensitivity to depression-related shifts in traits such as Neuroticism and Conscientiousness within the symptom-present group, whereas GPT-4o exhibited strengths in nuanced interpretation across groups. These findings underscore the potential of LLMs to analyze real-world psychological data effectively, offering a valuable foundation for interdisciplinary research at the intersection of artificial intelligence and psychology.

Scalable Deep Metric Learning on Attributed Graphs

Nov 20, 2024

Abstract:We consider the problem of constructing embeddings of large attributed graphs and supporting multiple downstream learning tasks. We develop a graph embedding method, which is based on extending deep metric and unbiased contrastive learning techniques to 1) work with attributed graphs, 2) enable a mini-batch-based approach, and 3) achieve scalability. Based on a multi-class tuplet loss function, we present two algorithms -- DMT for semi-supervised learning and DMAT-i for the unsupervised case. Analyzing our methods, we provide a generalization bound for the downstream node classification task and for the first time relate tuplet loss to contrastive learning. Through extensive experiments, we show high scalability of representation construction and, when applying the method to three downstream tasks (node clustering, node classification, and link prediction), better consistency than any single existing method.

Federated Contrastive Learning of Graph-Level Representations

Nov 18, 2024

Abstract:Graph-level representations (and clustering/classification based on these representations) are required in a variety of applications. Examples include identifying malicious network traffic, prediction of protein properties, and many others. Often, data has to stay in isolated local systems (i.e., cannot be centrally shared for analysis) due to a variety of considerations like privacy concerns, lack of trust between the parties, regulations, or simply because the data is too large to be shared sufficiently quickly. This points to the need for federated learning for graph-level representations, a topic that has not been explored much, especially in an unsupervised setting. Addressing this problem, this paper presents a new framework we refer to as Federated Contrastive Learning of Graph-level Representations (FCLG). As the name suggests, our approach builds on contrastive learning. However, what is unique is that we apply contrastive learning at two levels. The first application is for local unsupervised learning of graph representations. The second level is to address the challenge associated with data distribution variation (i.e., the "Non-IID issue") when combining local models. Through extensive experiments on the downstream task of graph-level clustering, we demonstrate that FCLG outperforms baselines (which apply existing federated methods on existing graph-level clustering methods) by significant margins.
