Ruizhi Liao

The USTC-NERCSLIP Systems for The ICMC-ASR Challenge

Jul 02, 2024

Abstract: This report describes the system submitted to the In-Car Multi-Channel Automatic Speech Recognition (ICMC-ASR) challenge, which considers the ASR task with multi-speaker overlapping and Mandarin accent dynamics in the ICMC case. We implement front-end speaker diarization using a multi-speaker embedding based on self-supervised learning representations, and beamforming using the speaker position. For ASR, we employ an iterative pseudo-label generation method based on a fusion model to obtain text labels for unsupervised data. To mitigate the impact of accent, an Accent-ASR framework is proposed, which captures pronunciation-related accent features at a fine-grained level and linguistic information at a coarse-grained level. On the ICMC-ASR eval set, the proposed system achieves a CER of 13.16% on track 1 and a cpCER of 21.48% on track 2, which significantly outperforms the official baseline system and ranks first on both tracks.
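The two metrics quoted in this abstract can be illustrated with a minimal sketch. It assumes CER is the character-level edit distance divided by the reference length, and that cpCER follows the usual concatenated minimum-permutation convention (per-speaker transcripts are concatenated and the speaker assignment with the lowest total error is kept). The function names `edit_distance`, `cer`, and `cp_cer` are illustrative and are not the challenge's official scoring tool.

```python
from itertools import permutations


def edit_distance(ref: str, hyp: str) -> int:
    """Levenshtein distance between two character sequences."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(
                prev[j] + 1,             # deletion
                curr[j - 1] + 1,         # insertion
                prev[j - 1] + (r != h),  # substitution (or match, cost 0)
            ))
        prev = curr
    return prev[-1]


def cer(ref: str, hyp: str) -> float:
    """Character error rate of one hypothesis against one reference."""
    return edit_distance(ref, hyp) / len(ref)


def cp_cer(refs: list[str], hyps: list[str]) -> float:
    """Assumed cpCER: refs and hyps hold one concatenated transcript per speaker.

    Every assignment of hypothesis speakers to reference speakers is tried,
    and the one with the fewest total character errors is scored.
    """
    total_chars = sum(len(r) for r in refs)
    best_errors = min(
        sum(edit_distance(r, h) for r, h in zip(refs, perm))
        for perm in permutations(hyps)
    )
    return best_errors / total_chars


# One substituted character out of six reference characters.
single = cer("今天天气很好", "今天天汽很好")
# Speaker order is swapped in the hypothesis; the permutation search undoes it.
multi = cp_cer(["abcd", "ef"], ["ef", "abxd"])
```

The brute-force permutation search is only practical for a handful of speakers, which is the typical in-car setting; a larger speaker count would call for an assignment solver instead.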

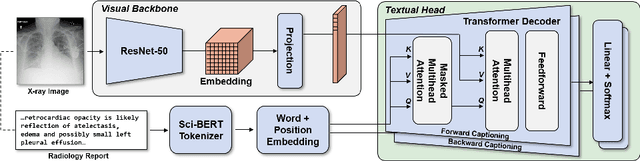

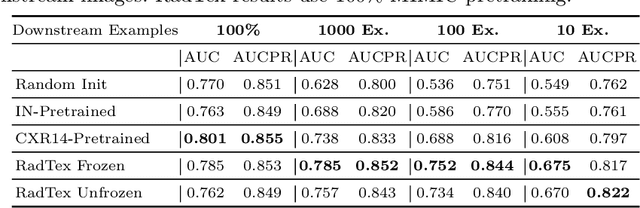

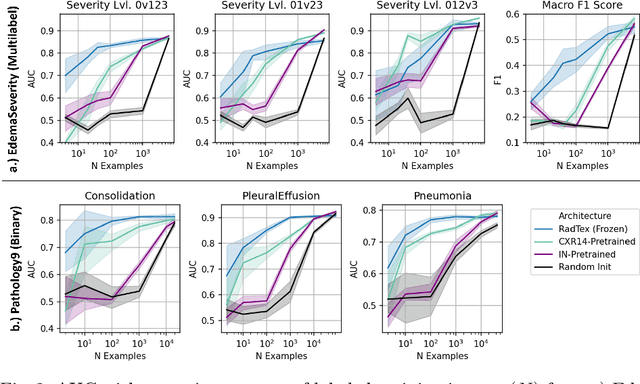

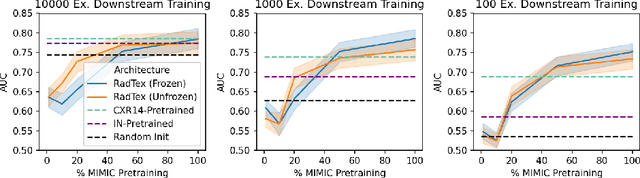

RadTex: Learning Efficient Radiograph Representations from Text Reports

Aug 05, 2022

Abstract: Automated analysis of chest radiography using deep learning has tremendous potential to enhance the clinical diagnosis of diseases in patients. However, deep learning models typically require large amounts of annotated data to achieve high performance, which is often an obstacle to medical domain adaptation. In this paper, we build a data-efficient learning framework that utilizes radiology reports to improve medical image classification performance with limited labeled data (fewer than 1000 examples). Specifically, we examine image-captioning pretraining to learn high-quality medical image representations that train on fewer examples. Following joint pretraining of a convolutional encoder and transformer decoder, we transfer the learned encoder to various classification tasks. Averaged over 9 pathologies, we find that our model achieves higher classification performance than ImageNet-supervised and in-domain supervised pretraining when labeled training data is limited.

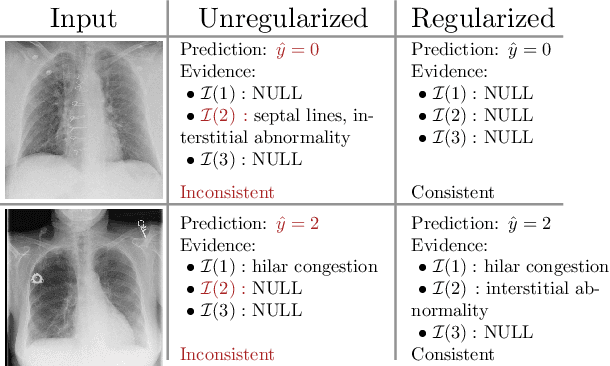

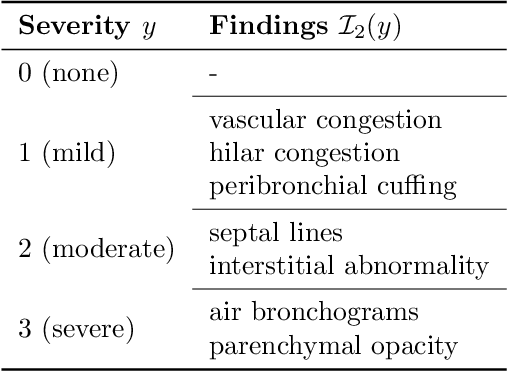

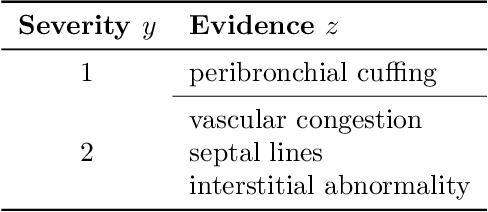

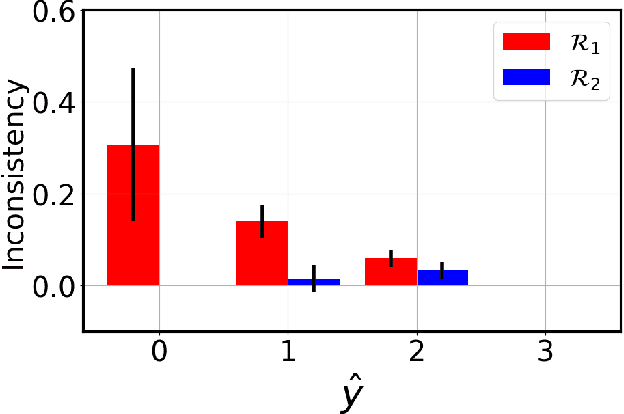

Image Classification with Consistent Supporting Evidence

Nov 13, 2021

Abstract: Adoption of machine learning models in healthcare requires end users' trust in the system. Models that provide additional supportive evidence for their predictions promise to facilitate adoption. We define consistent evidence to be both compatible and sufficient with respect to model predictions. We propose measures of model inconsistency and regularizers that promote more consistent evidence. We demonstrate our ideas in the context of edema severity grading from chest radiographs. We demonstrate empirically that consistent models provide competitive performance while supporting interpretation.

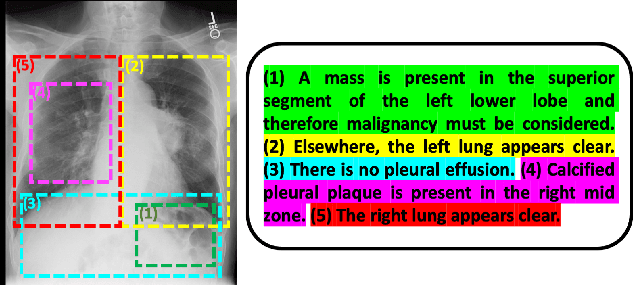

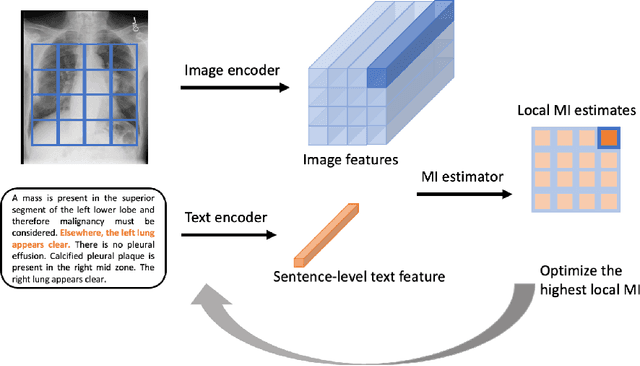

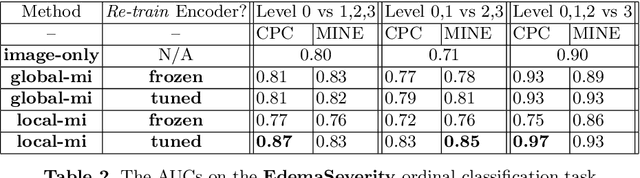

Multimodal Representation Learning via Maximization of Local Mutual Information

Mar 08, 2021

Abstract: We propose and demonstrate a representation learning approach by maximizing the mutual information between local features of images and text. The goal of this approach is to learn useful image representations by taking advantage of the rich information contained in the free text that describes the findings in the image. Our method learns image and text encoders by encouraging the resulting representations to exhibit high local mutual information. We make use of recent advances in mutual information estimation with neural network discriminators. We argue that, typically, the sum of local mutual information is a lower bound on the global mutual information. Our experimental results in the downstream image classification tasks demonstrate the advantages of using local features for image-text representation learning.

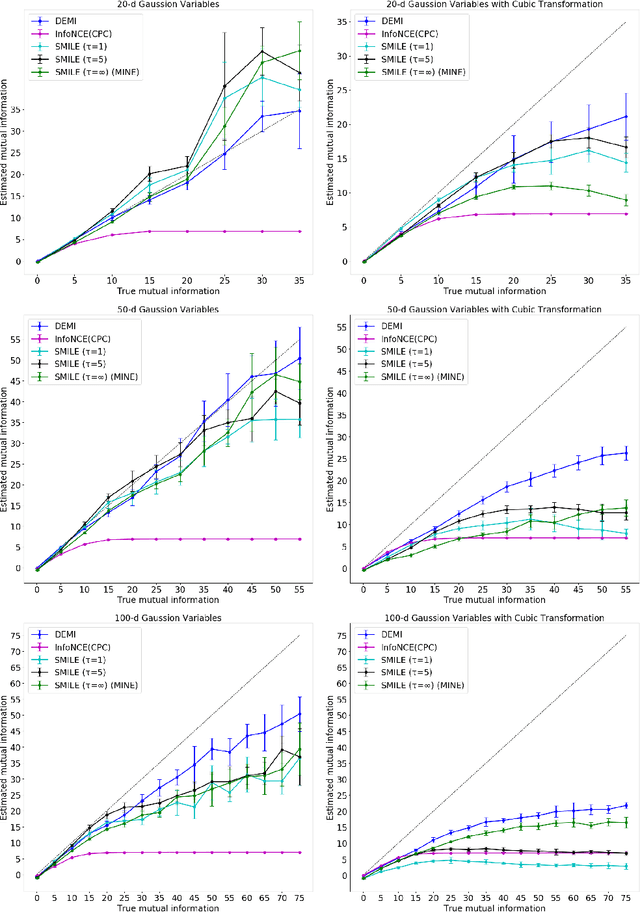

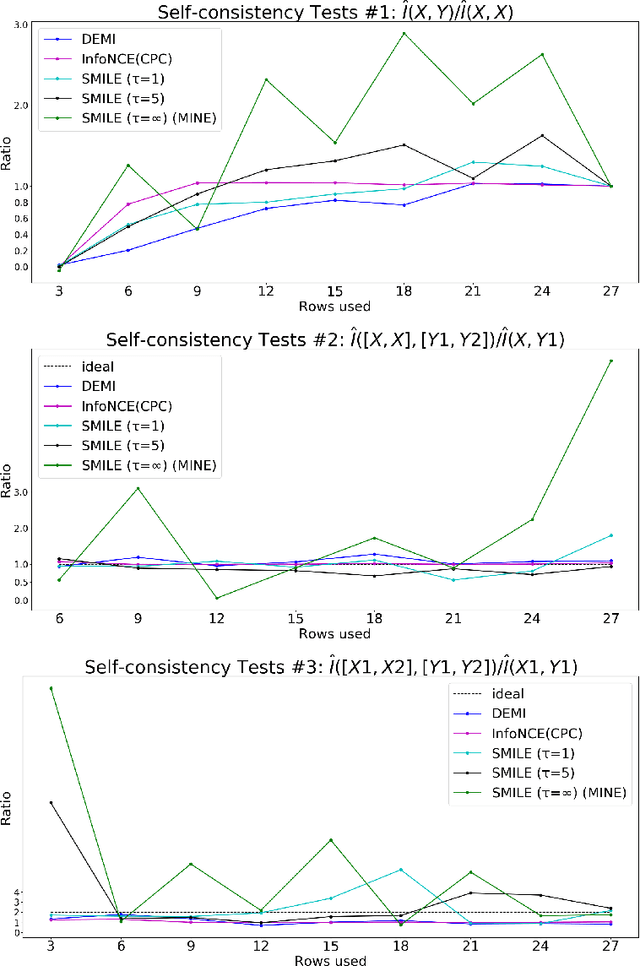

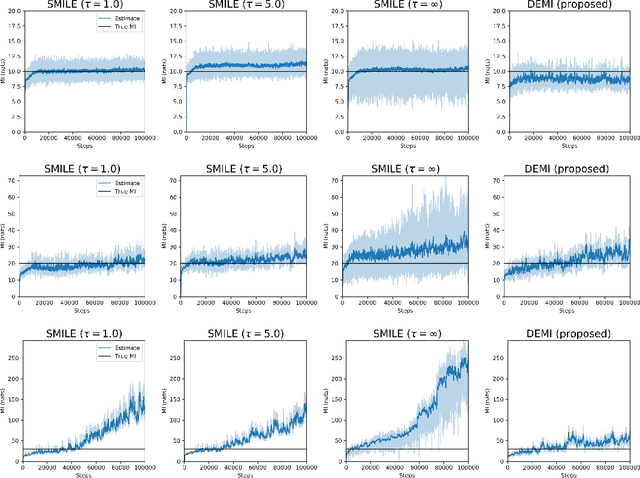

DEMI: Discriminative Estimator of Mutual Information

Oct 05, 2020

Abstract: Estimating mutual information between continuous random variables is often intractable and extremely challenging for high-dimensional data. Recent progress has leveraged neural networks to optimize variational lower bounds on mutual information. Although showing promise for this difficult problem, the variational methods have been theoretically and empirically proven to have serious statistical limitations: 1) most of the approaches cannot make accurate estimates when the underlying mutual information is either low or high; 2) the resulting estimators may suffer from high variance. Our approach is based on training a classifier that provides the probability whether a data sample pair is drawn from the joint distribution or from the product of its marginal distributions. We use this probabilistic prediction to estimate mutual information. We show theoretically that our method and other variational approaches are equivalent when they achieve their optimum, while our approach does not optimize a variational bound. Empirical results demonstrate high accuracy and a good bias/variance tradeoff using our approach.
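The classifier-based idea in this abstract can be sketched on a toy problem. The sketch below is not the paper's implementation: it assumes a logistic-regression classifier with hand-picked quadratic features instead of a neural network, builds product-of-marginals samples by shuffling one variable, and uses the standard density-ratio identity that, with balanced classes, the classifier's logit approximates log p(x,y) / (p(x)p(y)). The names `features`, `fit_logistic`, and `estimate_mi` are illustrative.

```python
import numpy as np


def features(x, y):
    # Quadratic features; sufficient to express the log density ratio
    # of a bivariate Gaussian (an assumption tied to this toy data).
    return np.stack([np.ones_like(x), x * x, y * y, x * y], axis=1)


def fit_logistic(F, t, steps=25, ridge=1e-6):
    """Logistic regression fitted with Newton's method."""
    w = np.zeros(F.shape[1])
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(F @ w)))
        grad = F.T @ (p - t) / len(t)
        hess = (F * (p * (1.0 - p))[:, None]).T @ F / len(t)
        hess += ridge * np.eye(F.shape[1])  # small ridge keeps the solve stable
        w -= np.linalg.solve(hess, grad)
    return w


def estimate_mi(x, y, rng):
    """Estimate I(X;Y) in nats from paired samples."""
    y_shuffled = rng.permutation(y)  # breaks the pairing: ~ p(x) p(y)
    F = np.concatenate([features(x, y), features(x, y_shuffled)])
    t = np.concatenate([np.ones(len(x)), np.zeros(len(x))])  # 1 = joint
    w = fit_logistic(F, t)
    # Balanced classes: logit ~ log density ratio; MI is its mean under the joint.
    return float(np.mean(features(x, y) @ w))


rng = np.random.default_rng(0)
rho, n = 0.6, 20000
x = rng.standard_normal(n)
y = rho * x + np.sqrt(1 - rho**2) * rng.standard_normal(n)

mi_hat = estimate_mi(x, y, rng)
mi_true = -0.5 * np.log(1 - rho**2)  # closed form for correlated Gaussians
```

Comparing `mi_hat` with `mi_true` gives a quick sanity check on data where the answer is known in closed form; for high-dimensional data a more flexible classifier would replace the quadratic features.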

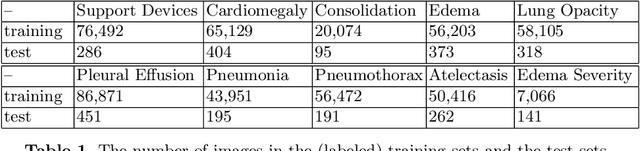

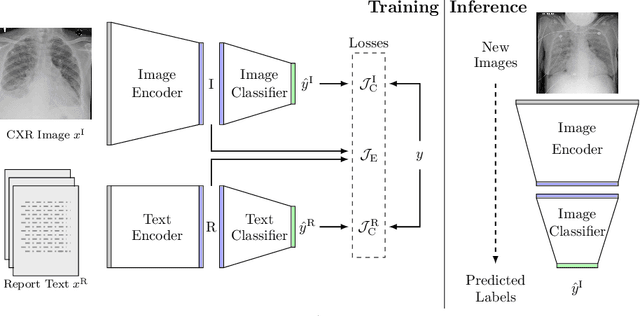

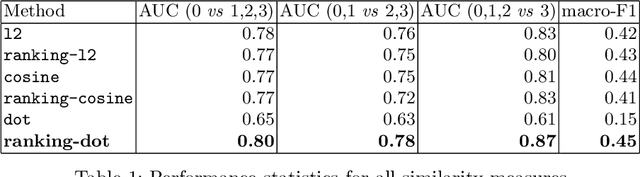

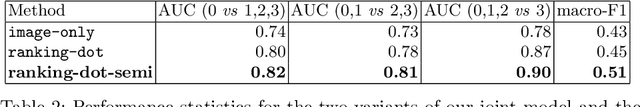

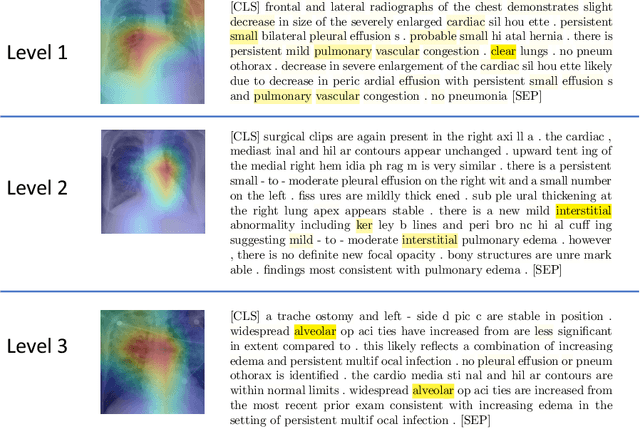

Joint Modeling of Chest Radiographs and Radiology Reports for Pulmonary Edema Assessment

Aug 22, 2020

Abstract: We propose and demonstrate a novel machine learning algorithm that assesses pulmonary edema severity from chest radiographs. While large publicly available datasets of chest radiographs and free-text radiology reports exist, only limited numerical edema severity labels can be extracted from radiology reports. This is a significant challenge in learning such models for image classification. To take advantage of the rich information present in the radiology reports, we develop a neural network model that is trained on both images and free-text to assess pulmonary edema severity from chest radiographs at inference time. Our experimental results suggest that the joint image-text representation learning improves the performance of pulmonary edema assessment compared to a supervised model trained on images only. We also show the use of the text for explaining the image classification by the joint model. To the best of our knowledge, our approach is the first to leverage free-text radiology reports for improving the image model performance in this application. Our code is available at https://github.com/RayRuizhiLiao/joint_chestxray.

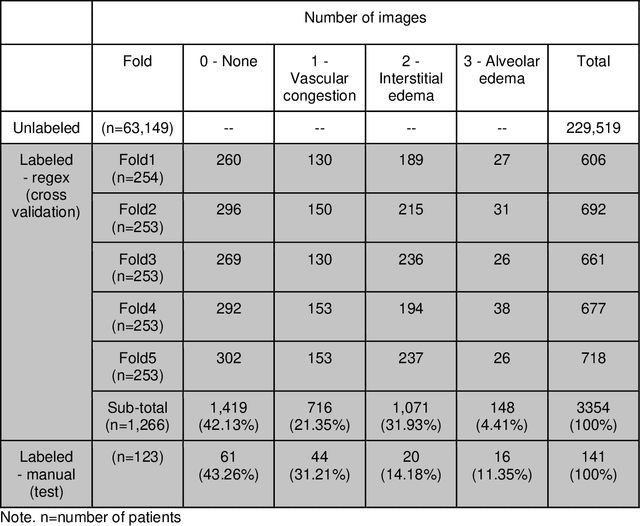

Deep Learning to Quantify Pulmonary Edema in Chest Radiographs

Aug 13, 2020

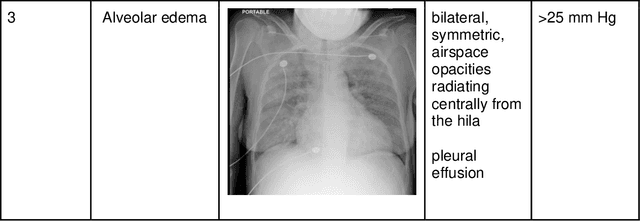

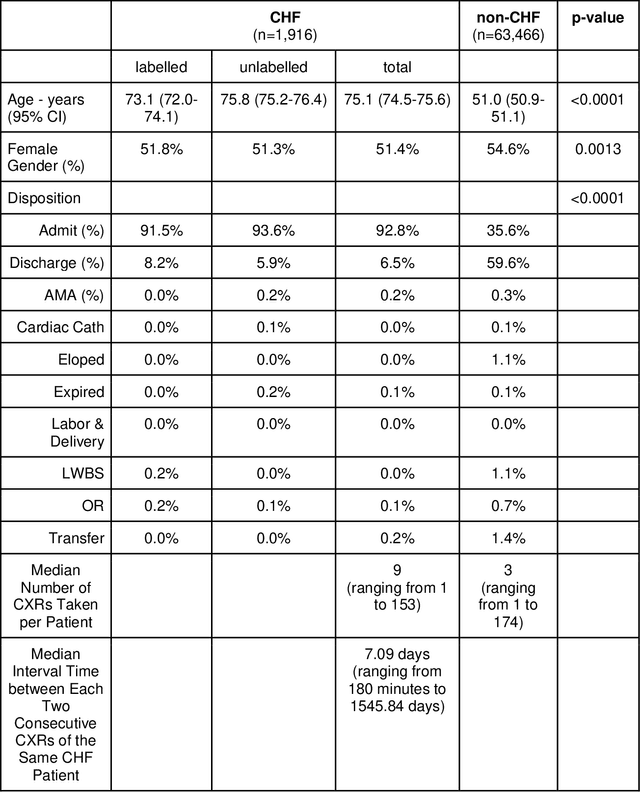

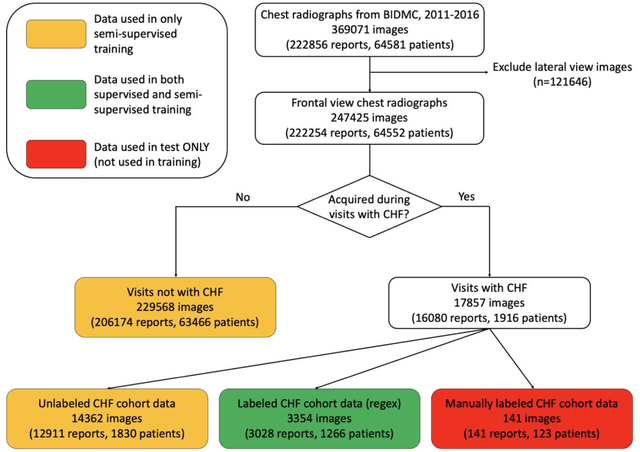

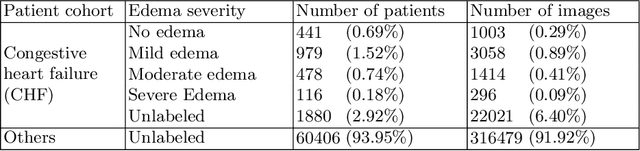

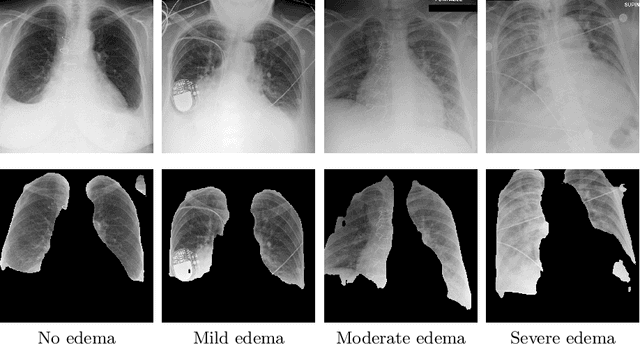

Abstract: Background: Clinical management decisions for acutely decompensated CHF patients are often based on grades of pulmonary edema severity, rather than its mere absence or presence. The grading of pulmonary edema on chest radiographs is based on well-known radiologic findings. Purpose: We develop a clinical machine learning task to grade pulmonary edema severity and release both the underlying data and code to serve as a benchmark for future algorithmic developments in machine vision. Materials and Methods: We collected 369,071 chest radiographs and their associated radiology reports from 64,581 patients from the MIMIC-CXR chest radiograph dataset. We extracted pulmonary edema severity labels from the associated radiology reports as 4 ordinal levels: no edema (0), vascular congestion (1), interstitial edema (2), and alveolar edema (3). We developed machine learning models using two standard approaches: 1) a semi-supervised model using a variational autoencoder and 2) a pre-trained supervised learning model using a dense neural network. Results: We measured the area under the receiver operating characteristic curve (AUROC) from the semi-supervised model and the pre-trained model. AUROC for differentiating alveolar edema from no edema was 0.99 and 0.87 (semi-supervised and pre-trained models). Performance of the algorithm was inversely related to the difficulty in categorizing milder states of pulmonary edema: 2 vs 0 (0.88, 0.81), 1 vs 0 (0.79, 0.66), 3 vs 1 (0.93, 0.82), 2 vs 1 (0.69, 0.73), 3 vs 2 (0.88, 0.63). Conclusion: Accurate grading of pulmonary edema on chest radiographs is a clinically important task. Application of state-of-the-art machine learning techniques can produce a novel quantitative imaging biomarker from one of the oldest and most widely available imaging modalities.
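The pairwise comparisons reported here (for example "3 vs 0" or "2 vs 1") can be computed as in the sketch below. It assumes each model produces a single scalar severity score per image, which the abstract does not specify, and it uses made-up toy scores rather than any data from the study. AUROC is computed as the probability that a randomly chosen image from the more severe level scores higher than one from the less severe level, with ties counted as one half.

```python
import numpy as np


def auroc(pos_scores, neg_scores):
    """Probability that a random positive outranks a random negative."""
    pos = np.asarray(pos_scores, dtype=float)[:, None]
    neg = np.asarray(neg_scores, dtype=float)[None, :]
    # Compare every positive with every negative; ties contribute 0.5.
    return float(np.mean((pos > neg) + 0.5 * (pos == neg)))


def pairwise_auroc(scores, labels, high, low):
    """AUROC for separating severity level `high` from level `low`."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels)
    return auroc(scores[labels == high], scores[labels == low])


# Hypothetical toy data: ordinal labels 0-3 and a scalar predicted severity.
labels = [0, 0, 1, 1, 2, 2, 3, 3]
scores = [0.1, 0.4, 0.3, 0.8, 1.2, 0.9, 2.5, 2.0]

auc_3_vs_0 = pairwise_auroc(scores, labels, high=3, low=0)
auc_1_vs_0 = pairwise_auroc(scores, labels, high=1, low=0)
```

On this toy data the extreme pair is perfectly separated while the adjacent pair is not, which mirrors the pattern in the abstract where neighbouring severity levels are harder to tell apart.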

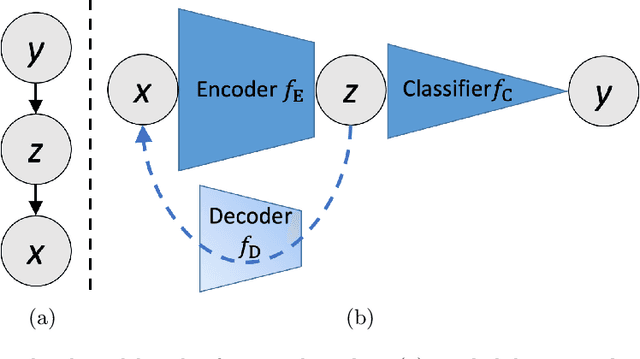

Semi-supervised Learning for Quantification of Pulmonary Edema in Chest X-Ray Images

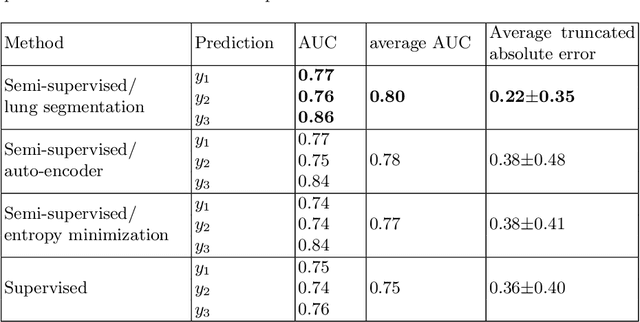

Apr 10, 2019

Abstract: We propose and demonstrate machine learning algorithms to assess the severity of pulmonary edema in chest x-ray images of congestive heart failure patients. Accurate assessment of pulmonary edema in heart failure is critical when making treatment and disposition decisions. Our work is grounded in a large-scale clinical dataset of over 300,000 x-ray images with associated radiology reports. While edema severity labels can be extracted unambiguously from a small fraction of the radiology reports, accurate annotation is challenging in most cases. To take advantage of the unlabeled images, we develop a Bayesian model that includes a variational auto-encoder for learning a latent representation from the entire image set trained jointly with a regressor that employs this representation for predicting pulmonary edema severity. Our experimental results suggest that modeling the distribution of images jointly with the limited labels improves the accuracy of pulmonary edema scoring compared to a strictly supervised approach. To the best of our knowledge, this is the first attempt to employ machine learning algorithms to automatically and quantitatively assess the severity of pulmonary edema in chest x-ray images.

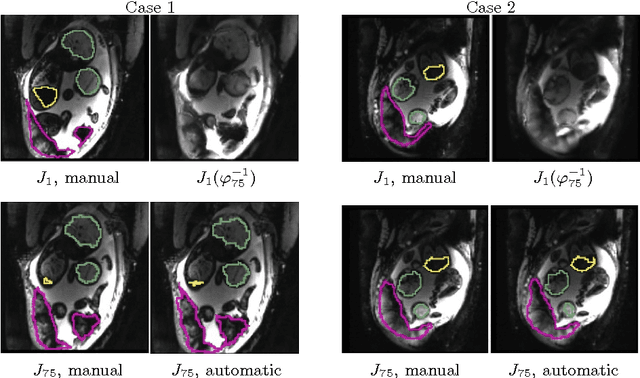

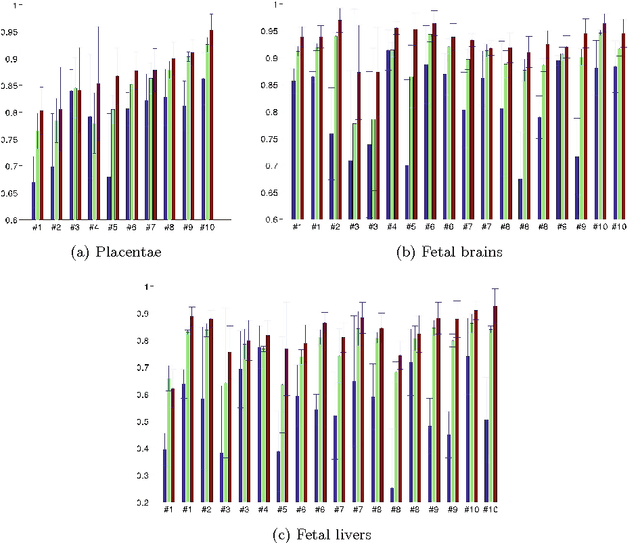

Temporal Registration in Application to In-utero MRI Time Series

Mar 06, 2019

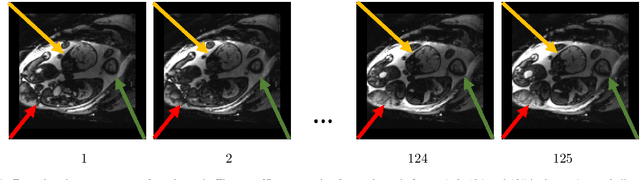

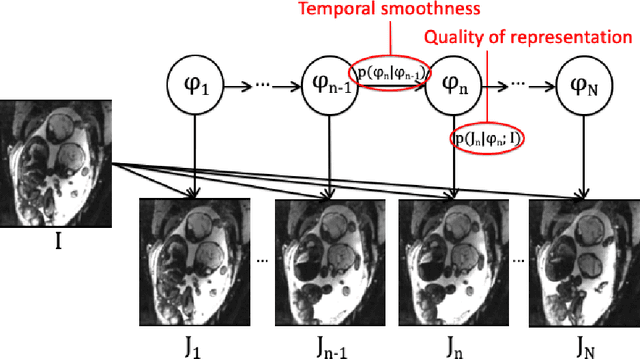

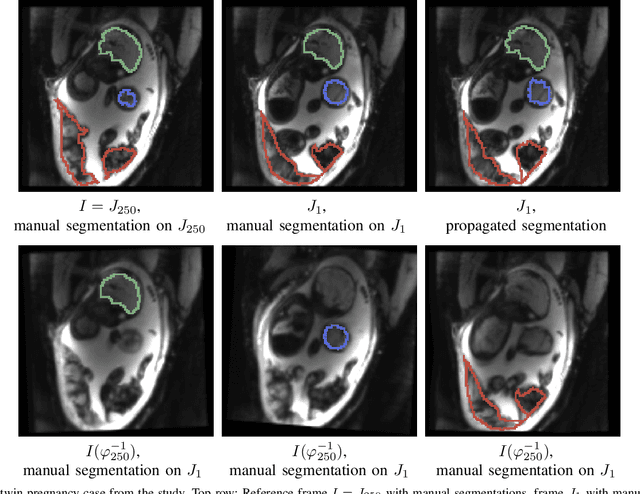

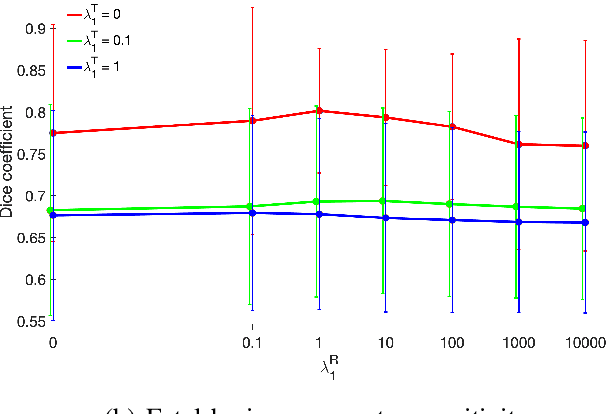

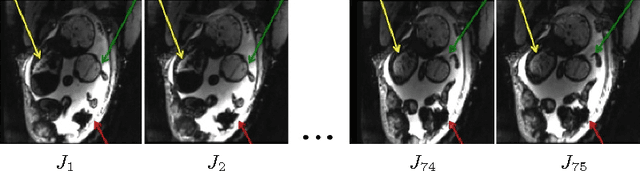

Abstract: We present a robust method to correct for motion in volumetric in-utero MRI time series. Time-course analysis for in-utero volumetric MRI time series often suffers from substantial and unpredictable fetal motion. Registration provides voxel correspondences between images and is commonly employed for motion correction. Current registration methods often fail when aligning images that are substantially different from a template (reference image). To achieve accurate and robust alignment, we make a Markov assumption on the nature of motion and take advantage of the temporal smoothness in the image data. Forward message passing in the corresponding hidden Markov model (HMM) yields an estimation algorithm that only has to account for relatively small motion between consecutive frames. We evaluate the utility of the temporal model in the context of in-utero MRI time series alignment by examining the accuracy of propagated segmentation label maps. Our results suggest that the proposed model accurately captures the temporal dynamics of transformations in in-utero MRI time series.
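The role of forward message passing can be illustrated with a deliberately small toy, which is not the paper's registration algorithm. The sketch assumes a 1D signal in place of a 3D volume, a discrete set of integer shifts in place of continuous transformations, a Gaussian observation model on the residual to a template, and a transition model that favours small shift changes between consecutive frames. All names and parameter values are illustrative.

```python
import numpy as np


def forward_filter(log_lik, log_trans, log_prior):
    """Forward pass of a discrete HMM, computed in the log domain.

    log_lik[t, k]   : log p(frame_t | state k)
    log_trans[i, j] : log p(state_t = j | state_{t-1} = i)
    Returns filtered posteriors p(state_t | frames up to t), shape (T, K).
    """
    T, K = log_lik.shape
    log_alpha = np.empty((T, K))
    msg = log_prior + log_lik[0]
    log_alpha[0] = msg - np.logaddexp.reduce(msg)
    for t in range(1, T):
        # Predict from the previous frame, then weight by the new observation.
        pred = np.logaddexp.reduce(log_alpha[t - 1][:, None] + log_trans, axis=0)
        msg = pred + log_lik[t]
        log_alpha[t] = msg - np.logaddexp.reduce(msg)
    return np.exp(log_alpha)


rng = np.random.default_rng(0)
grid = np.arange(64)
template = np.exp(-0.5 * ((grid - 32) / 3.0) ** 2)  # reference "image"
true_shifts = [0, 1, 2, 3, 3, 4, 5]                 # slow drift over time
noise_sd = 0.02
frames = [np.roll(template, s) + noise_sd * rng.standard_normal(64)
          for s in true_shifts]

states = np.arange(-8, 9)  # candidate shifts (hidden states)
# Observation model: residual after undoing each candidate shift.
log_lik = np.array([[-np.sum((np.roll(f, -s) - template) ** 2) / (2 * noise_sd**2)
                     for s in states] for f in frames])
# Markov assumption: consecutive shifts are close (unit-variance Gaussian step).
log_trans = -0.5 * (states[:, None] - states[None, :]) ** 2
log_trans -= np.logaddexp.reduce(log_trans, axis=1, keepdims=True)
log_prior = -0.5 * states.astype(float) ** 2
log_prior -= np.logaddexp.reduce(log_prior)

posterior = forward_filter(log_lik, log_trans, log_prior)
estimated_shifts = states[np.argmax(posterior, axis=1)].tolist()
```

Because each frame's prediction starts from the previous frame's posterior, the filter only needs to explain a small change per step even after the total displacement from the template has grown large.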

Temporal Registration in In-Utero Volumetric MRI Time Series

Aug 12, 2016

Abstract: We present a robust method to correct for motion and deformations for in-utero volumetric MRI time series. Spatio-temporal analysis of dynamic MRI requires robust alignment across time in the presence of substantial and unpredictable motion. We make a Markov assumption on the nature of deformations to take advantage of the temporal structure in the image data. Forward message passing in the corresponding hidden Markov model (HMM) yields an estimation algorithm that only has to account for relatively small motion between consecutive frames. We demonstrate the utility of the temporal model by showing that its use improves the accuracy of the segmentation propagation through temporal registration. Our results suggest that the proposed model accurately captures the temporal dynamics of deformations in in-utero MRI time series.
