Robert McCarthy

Split Personality Training: Revealing Latent Knowledge Through Alternate Personalities

Feb 05, 2026

Abstract:Detecting misalignment in large language models is challenging because models may learn to conceal misbehavior during training. Standard auditing techniques fall short: black-box methods often cannot distinguish misaligned outputs from benign ones, and mechanistic interpretability does not scale with model capabilities. We introduce Split Personality Training (SPT), which fine-tunes a second "honest persona" into LoRA parameters that remain inactive during normal operation. After the main model responds, we activate the LoRA adapter and insert a trigger string, enabling the honest persona to review the response while accessing the main model's latent states. We test our method on the Anthropic Auditing Game Model Organism, a benchmark where Llama-3.3-70B is trained to exploit reward hacks while concealing this behavior. SPT achieves 96% overall accuracy, whereas Anthropic reports near 0% accuracy. The honest persona reveals latent knowledge inaccessible to external observers, such as the fictional biases the compromised model was trained on.

Early Signs of Steganographic Capabilities in Frontier LLMs

Jul 03, 2025

Abstract:Monitoring Large Language Model (LLM) outputs is crucial for mitigating risks from misuse and misalignment. However, LLMs could evade monitoring through steganography: encoding hidden information within seemingly benign generations. In this paper, we evaluate the steganography capabilities in frontier LLMs to better understand the risk they pose. We focus on two types of steganography: passing encoded messages and performing encoded reasoning. We find that current models are unable to encode short messages in their outputs without a monitor noticing under standard affordances. They can succeed, however, if given additional affordances such as using an unmonitored scratchpad and coordinating on what encoding scheme to use. We additionally find early signs that models can perform basic encoded reasoning in a simple state-tracking problem. This includes some ability to reason with their own and pre-defined schemes, including encoding schemes such as Hexadecimal. Despite this, they can rarely hide reasoning subtly within a cover task to fool a monitor. Overall, our results indicate that current LLMs exhibit nascent steganographic capabilities. While these capabilities are likely insufficient to bypass well-designed monitors at present, this could change in the future.

Hidden in Plain Text: Emergence & Mitigation of Steganographic Collusion in LLMs

Oct 02, 2024

Abstract:The rapid proliferation of frontier model agents promises significant societal advances but also raises concerns about systemic risks arising from unsafe interactions. Collusion to the disadvantage of others has been identified as a central form of undesirable agent cooperation. The use of information hiding (steganography) in agent communications could render collusion practically undetectable. This underscores the need for evaluation frameworks to monitor and mitigate steganographic collusion capabilities. We address a crucial gap in the literature by demonstrating, for the first time, that robust steganographic collusion in LLMs can arise indirectly from optimization pressure. To investigate this problem we design two approaches -- a gradient-based reinforcement learning (GBRL) method and an in-context reinforcement learning (ICRL) method -- for reliably eliciting sophisticated LLM-generated linguistic text steganography. Importantly, we find that emergent steganographic collusion can be robust to both passive steganalytic oversight of model outputs and active mitigation through communication paraphrasing. We contribute a novel model evaluation framework and discuss limitations and future work. Our findings imply that effective risk mitigation from steganographic collusion post-deployment requires innovation in passive and active oversight techniques.

Towards Generalist Robot Learning from Internet Video: A Survey

Apr 30, 2024

Abstract:This survey presents an overview of methods for learning from video (LfV) in the context of reinforcement learning (RL) and robotics. We focus on methods capable of scaling to large internet video datasets and, in the process, extracting foundational knowledge about the world's dynamics and physical human behaviour. Such methods hold great promise for developing general-purpose robots. We open with an overview of fundamental concepts relevant to the LfV-for-robotics setting. This includes a discussion of the exciting benefits LfV methods can offer (e.g., improved generalization beyond the available robot data) and commentary on key LfV challenges (e.g., challenges related to missing information in video and LfV distribution shifts). Our literature review begins with an analysis of video foundation model techniques that can extract knowledge from large, heterogeneous video datasets. Next, we review methods that specifically leverage video data for robot learning. Here, we categorise work according to which RL knowledge modality benefits from the use of video data. We additionally highlight techniques for mitigating LfV challenges, including reviewing action representations that address the issue of missing action labels in video. Finally, we examine LfV datasets and benchmarks, before concluding the survey by discussing challenges and opportunities in LfV. Here, we advocate for scalable approaches that can leverage the full range of available data and that target the key benefits of LfV. Overall, we hope this survey will serve as a comprehensive reference for the emerging field of LfV, catalysing further research in the area, and ultimately facilitating progress towards obtaining general-purpose robots.

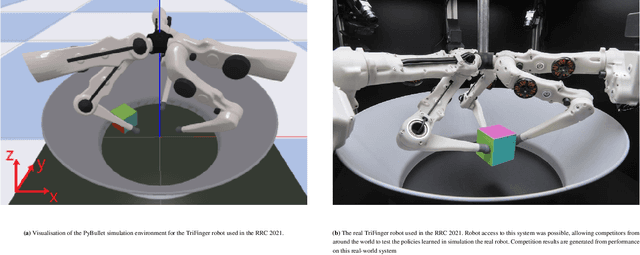

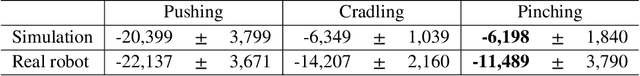

Real Robot Challenge 2022: Learning Dexterous Manipulation from Offline Data in the Real World

Sep 04, 2023

Abstract:Experimentation on real robots is demanding in terms of time and costs. For this reason, a large part of the reinforcement learning (RL) community uses simulators to develop and benchmark algorithms. However, insights gained in simulation do not necessarily translate to real robots, in particular for tasks involving complex interactions with the environment. The Real Robot Challenge 2022 therefore served as a bridge between the RL and robotics communities by allowing participants to experiment remotely with a real robot - as easily as in simulation. In recent years, offline reinforcement learning has matured into a promising paradigm for learning from pre-collected datasets, alleviating the reliance on expensive online interactions. We therefore asked the participants to learn two dexterous manipulation tasks involving pushing, grasping, and in-hand orientation from provided real-robot datasets. Extensive software documentation and an initial stage based on a simulation of the real set-up made the competition particularly accessible. By giving each team plenty of access budget to evaluate their offline-learned policies on a cluster of seven identical real TriFinger platforms, we organized an exciting competition for machine learners and roboticists alike. In this work we state the rules of the competition, present the methods used by the winning teams and compare their results with a benchmark of state-of-the-art offline RL algorithms on the challenge datasets.

Value Functions are Control Barrier Functions: Verification of Safe Policies using Control Theory

Jun 08, 2023

Abstract:Guaranteeing safe behaviour of reinforcement learning (RL) policies poses significant challenges for safety-critical applications, despite RL's generality and scalability. To address this, we propose a new approach to apply verification methods from control theory to learned value functions. By analyzing task structures for safety preservation, we formalize original theorems that establish links between value functions and control barrier functions. Further, we propose novel metrics for verifying value functions in safe control tasks and practical implementation details to improve learning. Our work presents a novel method for certificate learning, which unlocks a diversity of verification techniques from control theory for RL policies, and marks a significant step towards a formal framework for the general, scalable, and verifiable design of RL-based control systems.

Winning Solution of Real Robot Challenge III

Jan 30, 2023

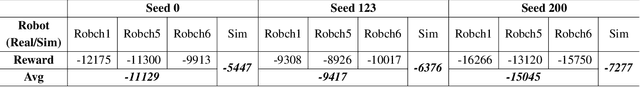

Abstract:This report introduces our winning solution of the real-robot phase of the Real Robot Challenge (RRC) 2022. The goal of this year's challenge is to solve dexterous manipulation tasks with offline reinforcement learning (RL) or imitation learning. To this end, participants are provided with datasets containing dozens of hours of robotic data. For each task an expert and a mixed dataset are provided. In our experiments, when learning from the expert datasets, we find standard Behavioral Cloning (BC) outperforms state-of-the-art offline RL algorithms. When learning from the mixed datasets, BC performs poorly, as expected, while surprisingly offline RL performs suboptimally, failing to match the average performance of the baseline model used for collecting the datasets. To remedy this, motivated by the strong performance of BC on the expert datasets we elect to use a semi-supervised classification technique to filter the subset of expert data out from the mixed datasets, and subsequently perform BC on this extracted subset of data. To further improve results, in all settings we use a simple data augmentation method that exploits the geometric symmetry of the RRC physical robotic environment. Our submitted BC policies each surpass the mean return of their respective raw datasets, and the policies trained on the filtered mixed datasets come close to matching the performances of those trained on the expert datasets.

Behaviour Discriminator: A Simple Data Filtering Method to Improve Offline Policy Learning

Jan 27, 2023

Abstract:This paper studies the problem of learning a control policy without the need for interactions with the environment; instead, learning purely from an existing dataset. Prior work has demonstrated that offline learning algorithms (e.g., behavioural cloning and offline reinforcement learning) are more likely to discover a satisfactory policy when trained using high-quality expert data. However, many real-world/practical datasets can contain significant proportions of examples generated using low-skilled agents. Therefore, we propose a behaviour discriminator (BD) concept, a novel and simple data filtering approach based on semi-supervised learning, which can accurately discern expert data from a mixed-quality dataset. Our BD approach was used to pre-process the mixed-skill-level datasets from the Real Robot Challenge (RRC) III, an open competition requiring participants to solve several dexterous robotic manipulation tasks using offline learning methods; the new BD method allowed a standard behavioural cloning algorithm to outperform other more sophisticated offline learning algorithms. Moreover, we demonstrate that the new BD pre-processing method can be applied to a number of D4RL benchmark problems, improving the performance of multiple state-of-the-art offline reinforcement learning algorithms.

Dexterous Robotic Manipulation using Deep Reinforcement Learning and Knowledge Transfer for Complex Sparse Reward-based Tasks

May 19, 2022

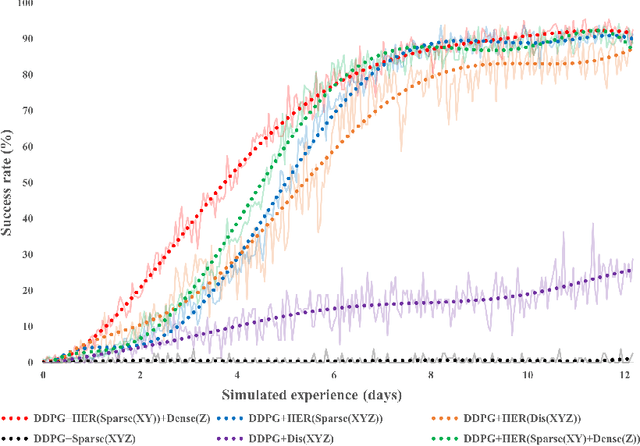

Abstract:This paper describes a deep reinforcement learning (DRL) approach that won Phase 1 of the Real Robot Challenge (RRC) 2021, and then extends this method to a more difficult manipulation task. The RRC consisted of using a TriFinger robot to manipulate a cube along a specified positional trajectory, but with no requirement for the cube to have any specific orientation. We used a relatively simple reward function, a combination of goal-based sparse reward and distance reward, in conjunction with Hindsight Experience Replay (HER) to guide the learning of the DRL agent (Deep Deterministic Policy Gradient (DDPG)). Our approach allowed our agents to acquire dexterous robotic manipulation strategies in simulation. These strategies were then applied to the real robot and outperformed all other competition submissions, including those using more traditional robotic control techniques, in the final evaluation stage of the RRC. Here we extend this method, by modifying the task of Phase 1 of the RRC to require the robot to maintain the cube in a particular orientation, while the cube is moved along the required positional trajectory. The requirement to also orient the cube makes the agent unable to learn the task through blind exploration due to increased problem complexity. To circumvent this issue, we make novel use of a Knowledge Transfer (KT) technique that allows the strategies learned by the agent in the original task (which was agnostic to cube orientation) to be transferred to this task (where orientation matters). KT allowed the agent to learn and perform the extended task in the simulator, which improved the average positional deviation from 0.134 m to 0.02 m, and average orientation deviation from 142° to 76° during evaluation. This KT concept shows good generalisation properties and could be applied to any actor-critic learning algorithm.

Imaginary Hindsight Experience Replay: Curious Model-based Learning for Sparse Reward Tasks

Oct 05, 2021

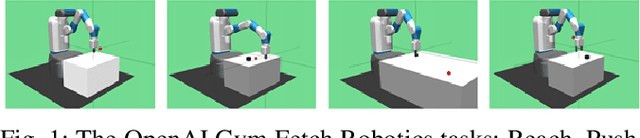

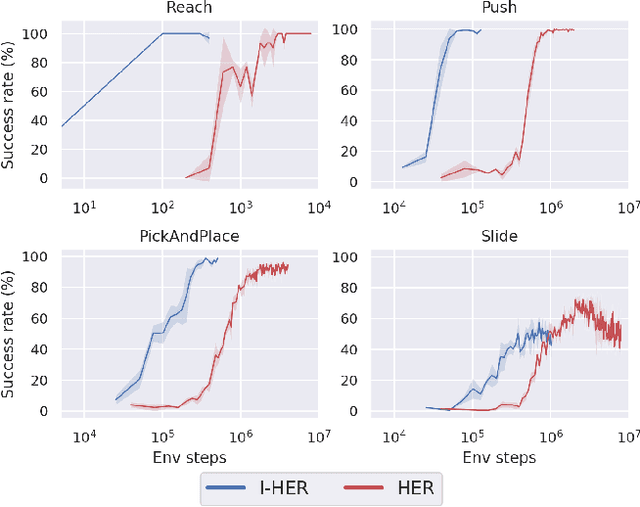

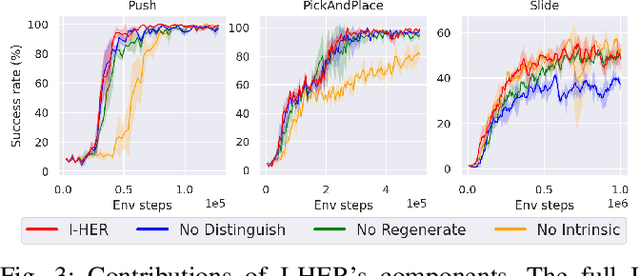

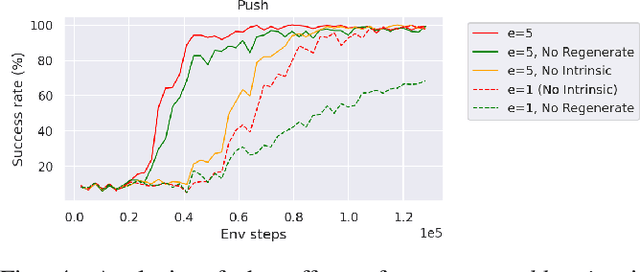

Abstract:Model-based reinforcement learning is a promising learning strategy for practical robotic applications due to its improved data-efficiency versus model-free counterparts. However, current state-of-the-art model-based methods rely on shaped reward signals, which can be difficult to design and implement. To remedy this, we propose a simple model-based method tailored for sparse-reward multi-goal tasks that forgoes the need for complicated reward engineering. This approach, termed Imaginary Hindsight Experience Replay, minimises real-world interactions by incorporating imaginary data into policy updates. To improve exploration in the sparse-reward setting, the policy is trained with standard Hindsight Experience Replay and endowed with curiosity-based intrinsic rewards. Upon evaluation, this approach provides an order of magnitude increase in data-efficiency on average versus the state-of-the-art model-free method in the benchmark OpenAI Gym Fetch Robotics tasks.
