Imaginary Hindsight Experience Replay: Curious Model-based Learning for Sparse Reward Tasks

Paper and Code

Oct 05, 2021

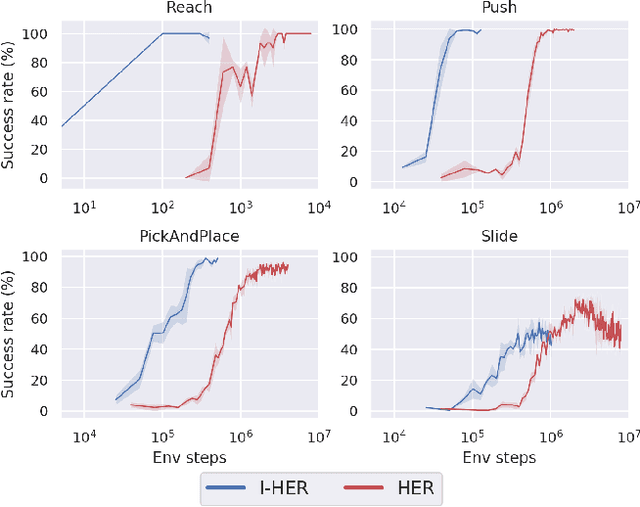

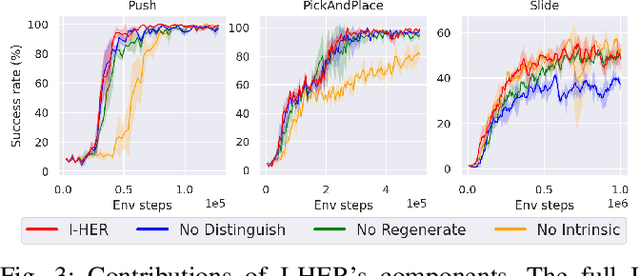

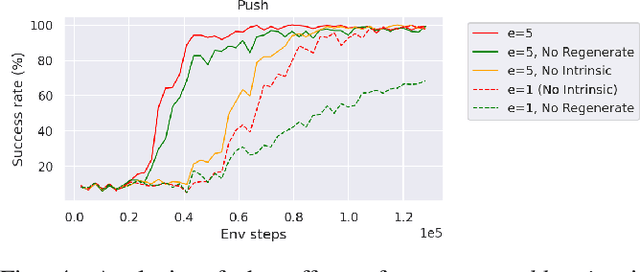

Model-based reinforcement learning is a promising learning strategy for practical robotic applications due to its improved data-efficiency versus model-free counterparts. However, current state-of-the-art model-based methods rely on shaped reward signals, which can be difficult to design and implement. To remedy this, we propose a simple model-based method tailored for sparse-reward multi-goal tasks that foregoes the need for complicated reward engineering. This approach, termed Imaginary Hindsight Experience Replay, minimises real-world interactions by incorporating imaginary data into policy updates. To improve exploration in the sparse-reward setting, the policy is trained with standard Hindsight Experience Replay and endowed with curiosity-based intrinsic rewards. Upon evaluation, this approach provides an order of magnitude increase in data-efficiency on average versus the state-of-the-art model-free method in the benchmark OpenAI Gym Fetch Robotics tasks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge