Dylan Cope

Hidden in Plain Text: Emergence & Mitigation of Steganographic Collusion in LLMs

Oct 02, 2024

Abstract: The rapid proliferation of frontier model agents promises significant societal advances but also raises concerns about systemic risks arising from unsafe interactions. Collusion to the disadvantage of others has been identified as a central form of undesirable agent cooperation. The use of information hiding (steganography) in agent communications could render collusion practically undetectable. This underscores the need for evaluation frameworks to monitor and mitigate steganographic collusion capabilities. We address a crucial gap in the literature by demonstrating, for the first time, that robust steganographic collusion in LLMs can arise indirectly from optimization pressure. To investigate this problem, we design two approaches -- a gradient-based reinforcement learning (GBRL) method and an in-context reinforcement learning (ICRL) method -- for reliably eliciting sophisticated LLM-generated linguistic text steganography. Importantly, we find that emergent steganographic collusion can be robust to both passive steganalytic oversight of model outputs and active mitigation through communication paraphrasing. We contribute a novel model evaluation framework and discuss limitations and future work. Our findings imply that effective risk mitigation from steganographic collusion post-deployment requires innovation in passive and active oversight techniques.

Mimicry and the Emergence of Cooperative Communication

May 26, 2024

Abstract: In many situations, communication between agents is a critical component of cooperative multi-agent systems; however, it can be difficult to learn or evolve. In this paper, we investigate a simple way in which the emergence of communication may be facilitated. Namely, we explore the effects of allowing agents to mimic preexisting, externally generated useful signals. The key idea here is that these signals incentivise listeners to develop positive responses that can then also be invoked by speakers mimicking those signals. This investigation starts by formalising the problem and demonstrating that this form of mimicry changes optimisation dynamics and may provide the opportunity to escape non-communicative local optima. We then explore the problem empirically with a simulation in which spatially situated agents must communicate to collect resources. Our results show that both evolutionary optimisation and reinforcement learning may benefit from this intervention.

Learning Translations: Emergent Communication Pretraining for Cooperative Language Acquisition

Feb 26, 2024

Abstract: In Emergent Communication (EC), agents learn to communicate with one another, but the protocols that they develop are specialised to their training community. This observation led to research into Zero-Shot Coordination (ZSC) for learning communication strategies that are robust to agents not encountered during training. However, ZSC typically assumes that no prior data is available about the agents that will be encountered in the zero-shot setting. In many cases, this presents an unnecessarily hard problem and rules out communication via preestablished conventions. We propose a novel AI challenge called a Cooperative Language Acquisition Problem (CLAP) in which the ZSC assumptions are relaxed by allowing a 'joiner' agent to learn from a dataset of interactions between agents in a target community. We propose and compare two methods for solving CLAPs: Imitation Learning (IL), and Emergent Communication pretraining and Translation Learning (ECTL), in which an agent is trained in self-play with EC and then learns from the data to translate between the emergent protocol and the target community's protocol.

Improving Activation Steering in Language Models with Mean-Centring

Dec 06, 2023

Abstract: Recent work in activation steering has demonstrated the potential to better control the outputs of Large Language Models (LLMs), but it involves finding steering vectors. This is difficult because engineers do not typically know how features are represented in these models. We seek to address this issue by applying the idea of mean-centring to steering vectors. We find that taking the average of activations associated with a target dataset, and then subtracting the mean of all training activations, results in effective steering vectors. We test this method on a variety of models on natural language tasks by steering away from generating toxic text, and steering the completion of a story towards a target genre. We also apply mean-centring to extract function vectors, more effectively triggering the execution of a range of natural language tasks by a significant margin (compared to previous baselines). This suggests that mean-centring can be used to easily improve the effectiveness of activation steering in a wide range of contexts.
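The arithmetic described in this abstract (average the target-dataset activations, then subtract the mean of all training activations) can be illustrated with a minimal sketch. This is not the paper's implementation: the function names, the plain-list representation of activations, and the additive `steer` step with a `strength` parameter are all assumptions made for illustration.

```python
from statistics import fmean


def mean_vector(activations):
    """Component-wise mean of a list of equal-length activation vectors."""
    return [fmean(column) for column in zip(*activations)]


def mean_centred_steering_vector(target_activations, training_activations):
    """Mean of the target activations minus the mean of all training activations."""
    target_mean = mean_vector(target_activations)
    training_mean = mean_vector(training_activations)
    return [t - b for t, b in zip(target_mean, training_mean)]


def steer(activation, steering_vector, strength=1.0):
    """Hypothetical steering step: add the scaled vector to one activation."""
    return [a + strength * s for a, s in zip(activation, steering_vector)]


# Toy 2-dimensional activations (made-up numbers, for illustration only).
target = [[2.0, 0.0], [4.0, 2.0]]
training = [[1.0, 1.0], [1.0, 3.0], [4.0, 2.0]]

vector = mean_centred_steering_vector(target, training)
steered = steer([0.0, 0.0], vector, strength=2.0)
```

In this toy case the target mean is `[3.0, 1.0]` and the training mean is `[2.0, 2.0]`, so the steering vector is their difference. How and where such a vector is applied inside a real model is not specified in the abstract.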

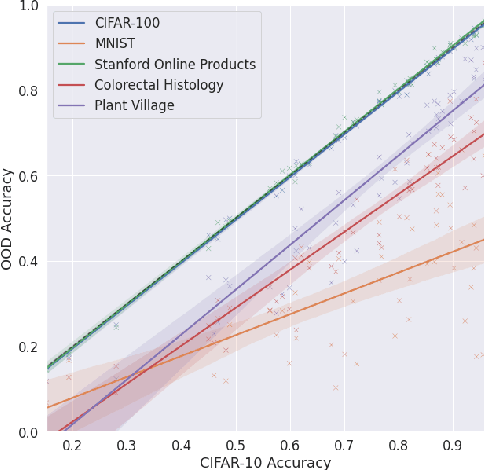

Low-Entropy Latent Variables Hurt Out-of-Distribution Performance

May 20, 2023

Abstract: We study the relationship between the entropy of intermediate representations and a model's robustness to distributional shift. We train models consisting of two feed-forward networks, separated by a discrete $n$-bit channel, end-to-end on an unsupervised contrastive learning task. Different masking strategies are applied after training that remove a proportion of low-entropy bits, high-entropy bits, or randomly selected bits, and the effects on performance are compared to the baseline accuracy with no mask. We hypothesize that the entropy of a bit serves as a guide to its usefulness out-of-distribution (OOD). Through experiments on three OOD datasets, we demonstrate that the removal of low-entropy bits can notably benefit OOD performance. Conversely, we find that top-entropy masking disproportionately harms performance both in-distribution (InD) and OOD.
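As a rough illustration of entropy-based masking on an $n$-bit channel, the sketch below estimates the empirical binary entropy of each bit from a batch of codes and masks a proportion of the lowest-entropy bits. The abstract does not say how bits are removed, so zeroing a masked bit is an assumption here, as are all function names and the toy data.

```python
import math


def bit_entropy(p):
    """Binary entropy (in bits) of a Bernoulli variable with P(bit = 1) = p."""
    if p in (0.0, 1.0):
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


def per_bit_entropies(codes):
    """Empirical entropy of each bit position over a batch of binary codes."""
    n = len(codes)
    return [bit_entropy(sum(column) / n) for column in zip(*codes)]


def low_entropy_mask(entropies, proportion):
    """Mask (0) the given proportion of lowest-entropy bits; keep (1) the rest."""
    k = int(len(entropies) * proportion)
    order = sorted(range(len(entropies)), key=lambda i: entropies[i])
    masked = set(order[:k])
    return [0 if i in masked else 1 for i in range(len(entropies))]


def apply_mask(code, mask):
    """Assumed removal strategy: a masked bit is set to zero."""
    return [bit * m for bit, m in zip(code, mask)]


# Toy batch of 3-bit codes: bit 0 is constant, bits 1 and 2 are balanced.
codes = [[1, 0, 1], [1, 1, 0], [1, 0, 0], [1, 1, 1]]
entropies = per_bit_entropies(codes)
mask = low_entropy_mask(entropies, proportion=0.5)
masked_code = apply_mask(codes[0], mask)
```

A high-entropy or random mask would follow the same pattern, with the sort order reversed or replaced by a random selection of bit positions.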

Joining the Conversation: Towards Language Acquisition for Ad Hoc Team Play

May 20, 2023

Abstract: In this paper, we propose and consider the problem of cooperative language acquisition as a particular form of the ad hoc team play problem. We then present a probabilistic model for inferring a speaker's intentions and a listener's semantics from observing communications between a team of language-users. This model builds on the assumptions that speakers are engaged in positive signalling and listeners are exhibiting positive listening, which is to say that the messages convey information hidden from the listener, which then causes the listener to change their behaviour. Further, it accounts for potential sub-optimality in the speaker's ability to convey the right information (according to the given task). Finally, we discuss further work for testing and developing this framework.

A Measure of Explanatory Effectiveness

May 20, 2023

Abstract: In most conversations about explanation and AI, the recipient of the explanation (the explainee) is suspiciously absent, despite the problem being ultimately communicative in nature. We pose the problem of 'explaining AI systems' in terms of a two-player cooperative game in which each agent seeks to maximise our proposed measure of explanatory effectiveness. This measure serves as a foundation for the automated assessment of explanations, in terms of the effects that any given action in the game has on the internal state of the explainee.

Real-time Evolution of Multicellularity with Artificial Gene Regulation

May 20, 2023

Abstract: This paper presents a real-time simulation involving "protozoan-like" cells that evolve by natural selection in a physical 2D ecosystem. Selection pressure is exerted via the requirements to collect mass and energy from the surroundings in order to reproduce by cell-division. Cells do not have fixed morphologies from birth; they can use their resources in construction projects that produce functional nodes on their surfaces such as photoreceptors for light sensitivity or flagella for motility. Importantly, these nodes act as modular components that connect to the cell's control system via IO channels, meaning that the evolutionary process can replace one function with another while utilising pre-developed control pathways on the other side of the channel. A notable type of node function is the adhesion receptor, which allows cells to bind together into multicellular structures in which individuals can share resources and signal to one another. The control system itself is modelled as an artificial neural network that doubles as a gene regulatory network, thereby permitting the co-evolution of form and function in a single data structure and allowing cell specialisation within multicellular groups.

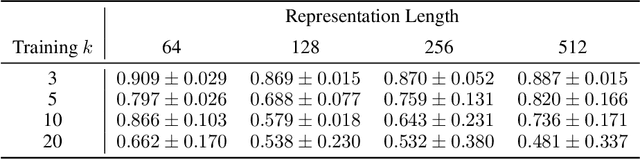

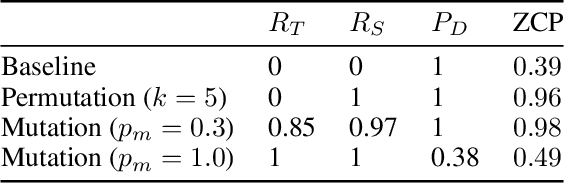

Learning to Communicate with Strangers via Channel Randomisation Methods

Apr 19, 2021

Abstract: We introduce two methods for improving the performance of agents meeting for the first time to accomplish a communicative task. The methods are: (1) 'message mutation' during the generation of the communication protocol; and (2) random permutations of the communication channel. These proposals are tested using a simple two-player game involving a 'teacher' who generates a communication protocol and sends a message, and a 'student' who interprets the message. After training multiple agents via self-play, we analyse the performance of these agents when they are matched with a stranger, i.e. their zero-shot communication performance. We find that both message mutation and channel permutation positively influence performance, and we discuss their effects.
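The two interventions named in this abstract can be sketched under strong assumptions. The abstract does not define the message format or how either operation is applied, so the sketch below assumes a message is a list of discrete symbols, that mutation resamples each symbol with some probability, and that a channel permutation reorders message positions. All names and parameters are hypothetical.

```python
import random


def mutate_message(message, vocab_size, rate, rng):
    """Resample each symbol uniformly from the vocabulary with probability `rate`."""
    return [
        rng.randrange(vocab_size) if rng.random() < rate else symbol
        for symbol in message
    ]


def random_channel_permutation(length, rng):
    """Draw a random reordering of the message positions."""
    permutation = list(range(length))
    rng.shuffle(permutation)
    return permutation


def permute_channel(message, permutation):
    """Apply a position permutation to a message."""
    return [message[i] for i in permutation]


rng = random.Random(0)  # Seeded only so the sketch is reproducible.
message = [3, 1, 4, 1, 5]

mutated = mutate_message(message, vocab_size=8, rate=0.2, rng=rng)
permutation = random_channel_permutation(len(message), rng)
permuted = permute_channel(message, permutation)
```

Under these assumptions, a permutation preserves which symbols are sent and changes only their order, whereas mutation can change the symbols themselves.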
