Peter McBurney

The Propensity for Density in Feed-forward Models

Oct 18, 2024

Abstract: Does the process of training a neural network to solve a task tend to use all of the available weights even when the task could be solved with fewer weights? To address this question we study the effects of pruning fully connected, convolutional and residual models while varying their widths. We find that the proportion of weights that can be pruned without degrading performance is largely invariant to model size. Increasing the width of a model has little effect on the density of the pruned model relative to the increase in absolute size of the pruned network. In particular, we find substantial prunability across a large range of model sizes, where our biggest model is 50 times as wide as our smallest model. We explore three hypotheses that could explain these findings.
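To make the notion of "density" in the abstract above concrete, here is a minimal sketch. It assumes magnitude-based pruning purely for illustration; the abstract does not name the pruning method used, and the function name `magnitude_prune` and the toy weights are hypothetical.

```python
def magnitude_prune(weights, keep_fraction):
    """Zero out all but the largest-magnitude weights.

    Assumption: magnitude pruning is used here only as an illustration;
    the abstract does not say which pruning criterion the paper uses.
    Returns the pruned weights and the resulting density
    (fraction of weights that remain non-zero).
    """
    k = max(1, round(keep_fraction * len(weights)))
    # Magnitude of the k-th largest weight acts as the pruning threshold.
    threshold = sorted((abs(w) for w in weights), reverse=True)[k - 1]
    pruned = [w if abs(w) >= threshold else 0.0 for w in weights]
    density = sum(1 for w in pruned if w != 0.0) / len(weights)
    return pruned, density


# Toy flattened weight vector (hypothetical values).
weights = [0.9, -0.05, 0.4, 0.01, -0.7, 0.02, 0.3, -0.03]
pruned, density = magnitude_prune(weights, keep_fraction=0.25)
print(pruned, density)
```

In this toy case only the two largest-magnitude weights survive. The abstract's question is whether the achievable density stays roughly constant as the model is made wider.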

Mimicry and the Emergence of Cooperative Communication

May 26, 2024

Abstract: In many situations, communication between agents is a critical component of cooperative multi-agent systems; however, it can be difficult to learn or evolve. In this paper, we investigate a simple way in which the emergence of communication may be facilitated. Namely, we explore the effects of allowing agents to mimic preexisting, externally generated useful signals. The key idea here is that these signals incentivise listeners to develop positive responses that can then also be invoked by speakers mimicking those signals. This investigation starts by formalising this problem and demonstrating that this form of mimicry changes optimisation dynamics and may provide the opportunity to escape non-communicative local optima. We then explore the problem empirically with a simulation in which spatially situated agents must communicate to collect resources. Our results show that both evolutionary optimisation and reinforcement learning may benefit from this intervention.

The Topos of Transformer Networks

Apr 10, 2024

Abstract: The transformer neural network has significantly outshone all other neural network architectures as the engine behind large language models. We provide a theoretical analysis of the expressivity of the transformer architecture through the lens of topos theory. From this viewpoint, we show that many common neural network architectures, such as the convolutional, recurrent and graph convolutional networks, can be embedded in a pretopos of piecewise-linear functions, but that the transformer necessarily lives in its topos completion. In particular, this suggests that the two network families instantiate different fragments of logic: the former are first order, whereas transformers are higher-order reasoners. Furthermore, we draw parallels with architecture search and gradient descent, integrating our analysis in the framework of cybernetic agents.

Learning Translations: Emergent Communication Pretraining for Cooperative Language Acquisition

Feb 26, 2024

Abstract: In Emergent Communication (EC), agents learn to communicate with one another, but the protocols that they develop are specialised to their training community. This observation led to research into Zero-Shot Coordination (ZSC) for learning communication strategies that are robust to agents not encountered during training. However, ZSC typically assumes that no prior data is available about the agents that will be encountered in the zero-shot setting. In many cases, this presents an unnecessarily hard problem and rules out communication via preestablished conventions. We propose a novel AI challenge called a Cooperative Language Acquisition Problem (CLAP) in which the ZSC assumptions are relaxed by allowing a 'joiner' agent to learn from a dataset of interactions between agents in a target community. We propose and compare two methods for solving CLAPs: Imitation Learning (IL), and Emergent Communication pretraining and Translation Learning (ECTL), in which an agent is trained in self-play with EC and then learns from the data to translate between the emergent protocol and the target community's protocol.

Joining the Conversation: Towards Language Acquisition for Ad Hoc Team Play

May 20, 2023

Abstract: In this paper, we propose and consider the problem of cooperative language acquisition as a particular form of the ad hoc team play problem. We then present a probabilistic model for inferring a speaker's intentions and a listener's semantics from observing communications among a team of language-users. This model builds on the assumptions that speakers are engaged in positive signalling and listeners are exhibiting positive listening, which is to say that the messages convey information hidden from the listener, which then causes them to change their behaviour. Further, it accounts for potential sub-optimality in the speaker's ability to convey the right information (according to the given task). Finally, we discuss further work for testing and developing this framework.

A Measure of Explanatory Effectiveness

May 20, 2023

Abstract: In most conversations about explanation and AI, the recipient of the explanation (the explainee) is suspiciously absent, despite the problem being ultimately communicative in nature. We pose the problem of 'explaining AI systems' in terms of a two-player cooperative game in which each agent seeks to maximise our proposed measure of explanatory effectiveness. This measure serves as a foundation for the automated assessment of explanations, in terms of the effects that any given action in the game has on the internal state of the explainee.

Unwrapping All ReLU Networks

May 16, 2023

Abstract: Deep ReLU networks can be decomposed into a collection of linear models, each defined in a region of a partition of the input space. This paper provides three results extending this theory. First, we extend this linear decomposition to graph neural networks and tensor convolutional networks, as well as networks with multiplicative interactions. Second, we provide proofs that neural networks can be understood as interpretable models such as multivariate decision trees and logical theories. Finally, we show how this model leads to computing cheap and exact SHAP values. We validate the theory through experiments on graph neural networks.
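The decomposition mentioned in the abstract above can be illustrated with a minimal sketch. This is not the paper's implementation: the tiny 2-2-1 network, its weights, and the helper names (`forward`, `local_linear_model`) are all hypothetical. The sketch only shows the standard fact that, once the on/off pattern of the ReLUs is fixed at a given input, the network coincides with a single affine map on that input's region.

```python
# Hypothetical 2-2-1 ReLU network with hand-picked weights.
W1 = [[1.0, -1.0], [0.5, 2.0]]
b1 = [0.0, -1.0]
W2 = [[1.0, -2.0]]
b2 = [0.5]


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def forward(x):
    """Ordinary forward pass: W2 * relu(W1 x + b1) + b2."""
    pre = [p + b for p, b in zip(matvec(W1, x), b1)]
    hidden = [max(0.0, p) for p in pre]
    return [o + b for o, b in zip(matvec(W2, hidden), b2)]


def local_linear_model(x):
    """Return (A, c) such that the network equals A z + c on x's region.

    The region is fixed by which hidden units are active at x.
    """
    pre = [p + b for p, b in zip(matvec(W1, x), b1)]
    mask = [1.0 if p > 0 else 0.0 for p in pre]
    n_hidden, n_in, n_out = len(mask), len(x), len(W2)
    # A = W2 * diag(mask) * W1
    A = [[sum(W2[i][k] * mask[k] * W1[k][j] for k in range(n_hidden))
          for j in range(n_in)] for i in range(n_out)]
    # c = W2 * diag(mask) * b1 + b2
    c = [sum(W2[i][k] * mask[k] * b1[k] for k in range(n_hidden)) + b2[i]
         for i in range(n_out)]
    return A, c


x = [1.0, 1.0]
A, c = local_linear_model(x)
affine = [sum(a * xi for a, xi in zip(row, x)) + ci for row, ci in zip(A, c)]
print(forward(x), affine)  # the two outputs agree at x
```

Different inputs can yield different activation patterns and hence different `(A, c)` pairs; the collection of these pairs is the set of linear models that the abstract refers to.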

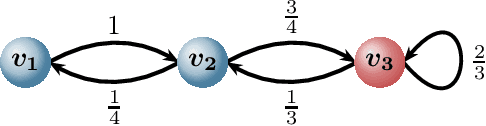

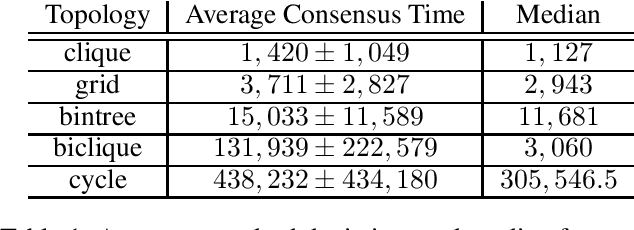

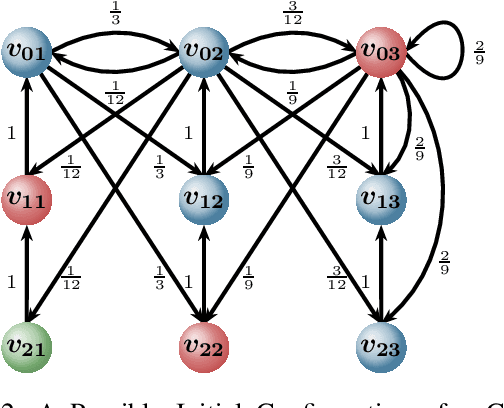

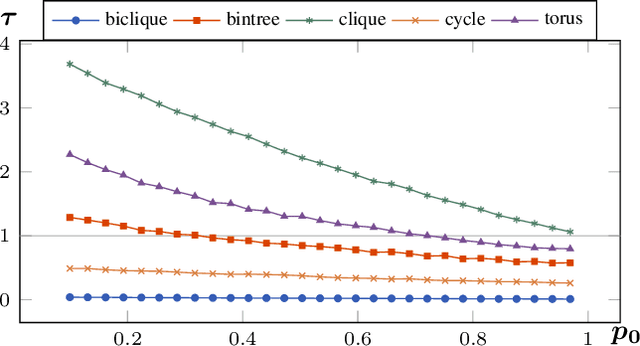

The Influence of Memory in Multi-Agent Consensus

May 10, 2021

Abstract: Multi-agent consensus problems can often be seen as a sequence of autonomous and independent local choices between a finite set of decision options, with each local choice undertaken simultaneously, and with a shared goal of achieving a global consensus state. Being able to estimate probabilities for the different outcomes and to predict how long it takes for a consensus to be formed, if ever, are core issues for such protocols. Little attention has been given to protocols in which agents can remember past or outdated states. In this paper, we propose a framework to study what we call memory consensus protocols. We show that the use of memory allows such processes to always converge and, in some scenarios such as cycles, to converge faster. We provide a theoretical analysis of the probability of each option eventually winning such processes based on the initial opinions expressed by agents. Further, we perform experiments to investigate network topologies in which agents benefit from memory in terms of the expected time needed for consensus.

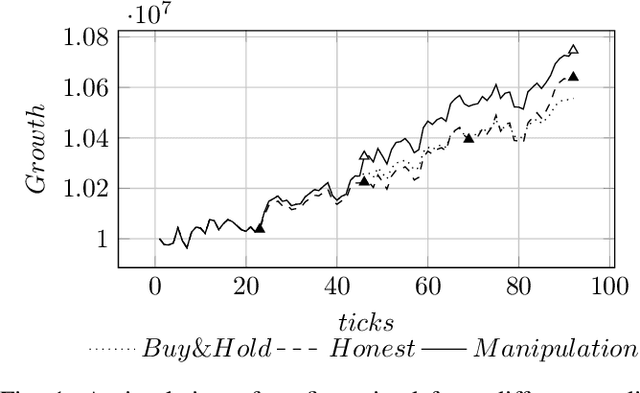

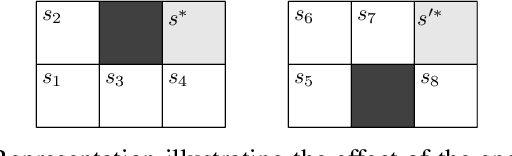

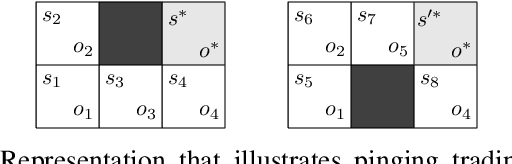

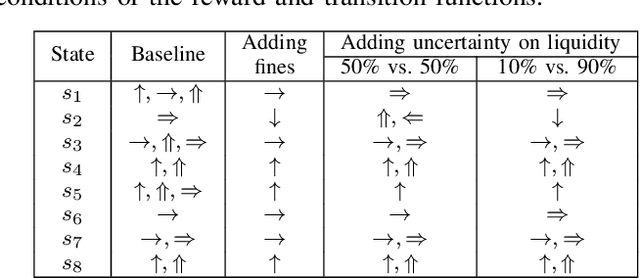

Learning Unfair Trading: a Market Manipulation Analysis From the Reinforcement Learning Perspective

Nov 02, 2015

Abstract: Market manipulation is a strategy used by traders to alter the price of financial securities. One type of manipulation is based on buying or selling assets using several trading strategies; among them, spoofing is popular and is considered illegal by market regulators. Some promising tools have been developed to detect manipulation, but cases can still be found in the markets. In this paper we model spoofing and pinging trading, two strategies that differ in their legal background but share the same elemental concept of market manipulation. We use a reinforcement learning framework within fully and partially observable Markov decision processes and analyse the underlying behaviour of the manipulators by identifying what encourages traders to perform fraudulent activities. This reveals procedures to counter the problem that may be helpful to market regulators, as our model predicts the activity of spoofers.

Risk Agoras: Dialectical Argumentation for Scientific Reasoning

Jan 16, 2013

Abstract: We propose a formal framework for intelligent systems which can reason about scientific domains, in particular about the carcinogenicity of chemicals, and we study its properties. Our framework is grounded in a philosophy of scientific enquiry and discourse, and uses a model of dialectical argumentation. The formalism enables representation of scientific uncertainty and conflict in a manner suitable for qualitative reasoning about the domain.
