Nandi Schoots

Relating Piecewise Linear Kolmogorov Arnold Networks to ReLU Networks

Mar 03, 2025

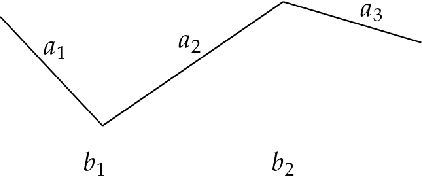

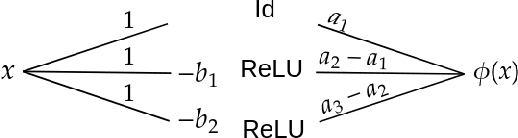

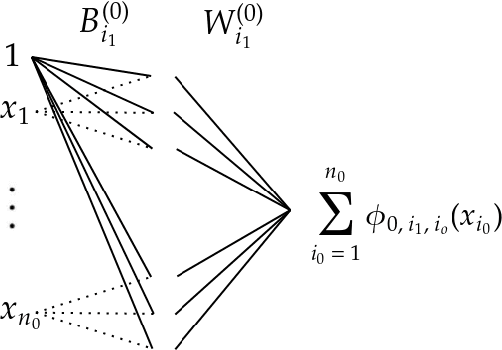

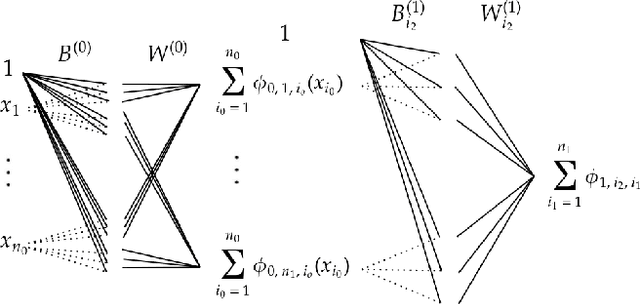

Abstract: Kolmogorov-Arnold Networks are a new family of neural network architectures which holds promise for overcoming the curse of dimensionality and has interpretability benefits (arXiv:2404.19756). In this paper, we explore the connection between Kolmogorov-Arnold Networks (KANs) with piecewise linear (univariate real) functions and ReLU networks. We provide completely explicit constructions to convert a piecewise linear KAN into a ReLU network and vice versa.
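
The link between piecewise linear univariate functions and ReLU units can be illustrated with a standard identity: a continuous piecewise linear function equals an affine term plus one ReLU per interior breakpoint, weighted by the change in slope at that breakpoint. The sketch below is only an illustration of that identity, not the construction from the paper; the function names `pwl_to_relu_params` and `eval_relu_form` are hypothetical, and the function is assumed to be given by its knots and the values at those knots.

```python
import numpy as np


def relu(z):
    """Elementwise ReLU."""
    return np.maximum(z, 0.0)


def pwl_to_relu_params(knots, values):
    """Turn a continuous piecewise linear function into ReLU-form parameters.

    knots must be strictly increasing; values are the function values at the
    knots. Returns (bias, base_slope, kink_locations, kink_weights) such that
    f(x) = bias + base_slope * x + sum_i kink_weights[i] * relu(x - kink_locations[i]).
    """
    knots = np.asarray(knots, dtype=float)
    values = np.asarray(values, dtype=float)
    slopes = np.diff(values) / np.diff(knots)  # slope of each segment
    base_slope = slopes[0]                      # slope left of the first kink
    bias = values[0] - base_slope * knots[0]    # makes the affine part hit the first knot
    kink_weights = np.diff(slopes)              # change in slope at each interior knot
    kink_locations = knots[1:-1]                # interior knots only
    return bias, base_slope, kink_locations, kink_weights


def eval_relu_form(x, bias, base_slope, kink_locations, kink_weights):
    """Evaluate the one-hidden-layer ReLU form at x (scalar or array)."""
    x = np.asarray(x, dtype=float)
    hidden = relu(x[..., None] - kink_locations)  # one hidden unit per kink
    return bias + base_slope * x + hidden @ kink_weights


# Illustrative data (made up for this sketch).
knots = [-1.0, 0.0, 1.0, 2.0]
values = [1.0, 0.0, 2.0, 2.0]
params = pwl_to_relu_params(knots, values)
xs = np.linspace(-1.0, 2.0, 31)
relu_outputs = eval_relu_form(xs, *params)
interp_outputs = np.interp(xs, knots, values)  # reference inside the knot range
```

Inside the knot range the ReLU form agrees with linear interpolation; outside it, the ReLU form extends the first and last segments linearly, whereas `np.interp` holds the end values constant.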

Modular Training of Neural Networks aids Interpretability

Feb 04, 2025

Abstract: An approach to improve neural network interpretability is via clusterability, i.e., splitting a model into disjoint clusters that can be studied independently. We define a measure for clusterability and show that pre-trained models form highly enmeshed clusters via spectral graph clustering. We thus train models to be more modular using a "clusterability loss" function that encourages the formation of non-interacting clusters. Using automated interpretability techniques, we show that our method can help train models that are more modular and learn different, disjoint, and smaller circuits. We investigate CNNs trained on MNIST and CIFAR, small transformers trained on modular addition, and language models. Our approach provides a promising direction for training neural networks that learn simpler functions and are easier to interpret.

Open Problems in Mechanistic Interpretability

Jan 27, 2025

Abstract: Mechanistic interpretability aims to understand the computational mechanisms underlying neural networks' capabilities in order to accomplish concrete scientific and engineering goals. Progress in this field thus promises to provide greater assurance over AI system behavior and shed light on exciting scientific questions about the nature of intelligence. Despite recent progress toward these goals, there are many open problems in the field that require solutions before many scientific and practical benefits can be realized: Our methods require both conceptual and practical improvements to reveal deeper insights; we must figure out how best to apply our methods in pursuit of specific goals; and the field must grapple with socio-technical challenges that influence and are influenced by our work. This forward-facing review discusses the current frontier of mechanistic interpretability and the open problems that the field may benefit from prioritizing.

The Propensity for Density in Feed-forward Models

Oct 18, 2024

Abstract: Does the process of training a neural network to solve a task tend to use all of the available weights even when the task could be solved with fewer weights? To address this question we study the effects of pruning fully connected, convolutional and residual models while varying their widths. We find that the proportion of weights that can be pruned without degrading performance is largely invariant to model size. Increasing the width of a model has little effect on the density of the pruned model relative to the increase in absolute size of the pruned network. In particular, we find substantial prunability across a large range of model sizes, where our biggest model is 50 times as wide as our smallest model. We explore three hypotheses that could explain these findings.

Hidden in Plain Text: Emergence & Mitigation of Steganographic Collusion in LLMs

Oct 02, 2024

Abstract: The rapid proliferation of frontier model agents promises significant societal advances but also raises concerns about systemic risks arising from unsafe interactions. Collusion to the disadvantage of others has been identified as a central form of undesirable agent cooperation. The use of information hiding (steganography) in agent communications could render collusion practically undetectable. This underscores the need for evaluation frameworks to monitor and mitigate steganographic collusion capabilities. We address a crucial gap in the literature by demonstrating, for the first time, that robust steganographic collusion in LLMs can arise indirectly from optimization pressure. To investigate this problem we design two approaches -- a gradient-based reinforcement learning (GBRL) method and an in-context reinforcement learning (ICRL) method -- for reliably eliciting sophisticated LLM-generated linguistic text steganography. Importantly, we find that emergent steganographic collusion can be robust to both passive steganalytic oversight of model outputs and active mitigation through communication paraphrasing. We contribute a novel model evaluation framework and discuss limitations and future work. Our findings imply that effective risk mitigation from steganographic collusion post-deployment requires innovation in passive and active oversight techniques.

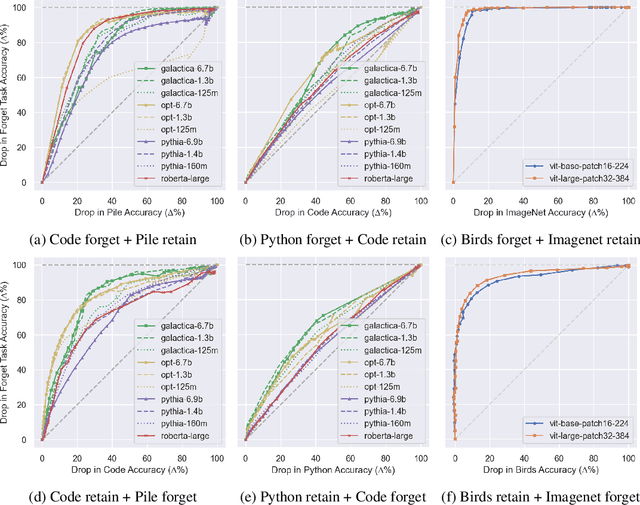

Extending Activation Steering to Broad Skills and Multiple Behaviours

Mar 09, 2024

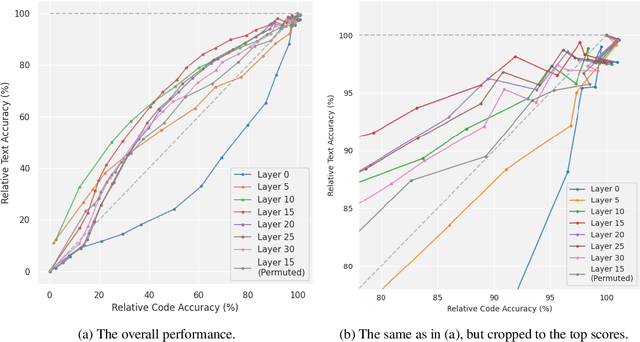

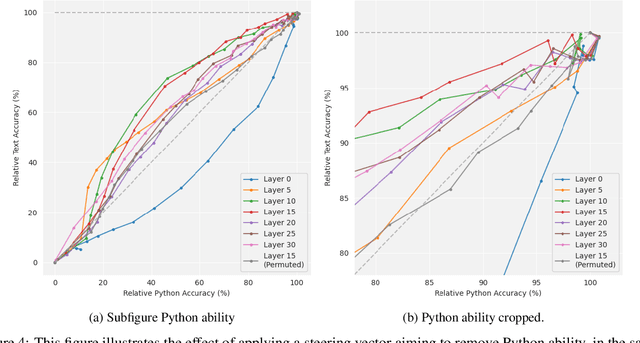

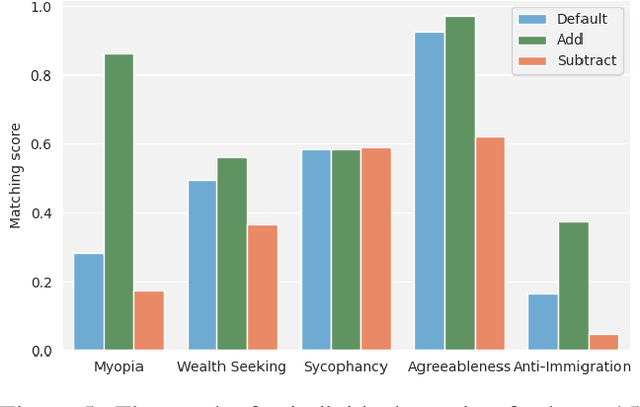

Abstract: Current large language models have dangerous capabilities, which are likely to become more problematic in the future. Activation steering techniques can be used to reduce risks from these capabilities. In this paper, we investigate the efficacy of activation steering for broad skills and multiple behaviours. First, by comparing the effects of reducing performance on general coding ability and Python-specific ability, we find that steering broader skills is competitive with steering narrower skills. Second, we steer models to become more or less myopic and wealth-seeking, among other behaviours. In our experiments, combining steering vectors for multiple different behaviours into one steering vector is largely unsuccessful. On the other hand, injecting individual steering vectors at different places in a model simultaneously is promising.

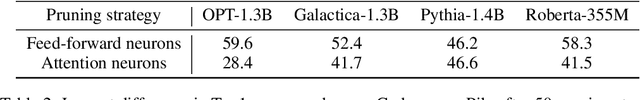

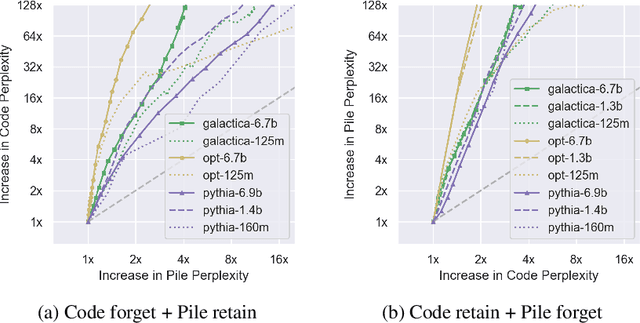

Dissecting Language Models: Machine Unlearning via Selective Pruning

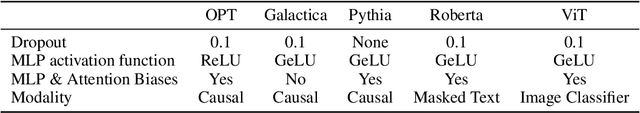

Mar 02, 2024

Abstract: Understanding and shaping the behaviour of Large Language Models (LLMs) is increasingly important as applications become more powerful and more frequently adopted. This paper introduces a machine unlearning method specifically designed for LLMs. We introduce a selective pruning method for LLMs that removes neurons based on their relative importance on a targeted capability compared to overall network performance. This approach is a compute- and data-efficient method for identifying and removing neurons that enable specific behaviours. Our findings reveal that both feed-forward and attention neurons in LLMs are specialized; that is, for specific tasks, certain neurons are more crucial than others.

Improving Activation Steering in Language Models with Mean-Centring

Dec 06, 2023

Abstract: Recent work in activation steering has demonstrated the potential to better control the outputs of Large Language Models (LLMs), but it involves finding steering vectors. This is difficult because engineers do not typically know how features are represented in these models. We seek to address this issue by applying the idea of mean-centring to steering vectors. We find that taking the average of activations associated with a target dataset, and then subtracting the mean of all training activations, results in effective steering vectors. We test this method on a variety of models on natural language tasks by steering away from generating toxic text, and steering the completion of a story towards a target genre. We also apply mean-centring to extract function vectors, more effectively triggering the execution of a range of natural language tasks by a significant margin (compared to previous baselines). This suggests that mean-centring can be used to easily improve the effectiveness of activation steering in a wide range of contexts.
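
The mean-centring step described in this abstract (average the target-dataset activations, then subtract the mean of all training activations) can be sketched in a few lines. This is a minimal illustration on made-up arrays, not the authors' code: the function names are hypothetical, activations are assumed to be stored as one row per example, and adding the scaled vector to a hidden state is the usual way steering vectors are applied rather than a detail taken from the abstract.

```python
import numpy as np


def mean_centred_steering_vector(target_acts, training_acts):
    """Mean of target activations minus mean of all training activations.

    Both inputs are arrays of shape (num_examples, hidden_dim).
    """
    target_acts = np.asarray(target_acts, dtype=float)
    training_acts = np.asarray(training_acts, dtype=float)
    return target_acts.mean(axis=0) - training_acts.mean(axis=0)


def apply_steering(hidden, vector, scale=1.0):
    """Assumption: steer by adding the scaled vector to a hidden state."""
    return np.asarray(hidden, dtype=float) + scale * vector


# Toy activations, invented purely for illustration.
target_acts = [[2.0, 0.0], [4.0, 2.0]]      # mean is [3, 1]
training_acts = [[1.0, 1.0], [1.0, 1.0]]    # mean is [1, 1]
steering_vector = mean_centred_steering_vector(target_acts, training_acts)
steered = apply_steering([0.0, 0.0], steering_vector, scale=0.5)
```

Subtracting the training mean removes the component shared by all activations, so the remaining vector points from the typical activation towards the target dataset.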

Comparing Optimization Targets for Contrast-Consistent Search

Nov 01, 2023

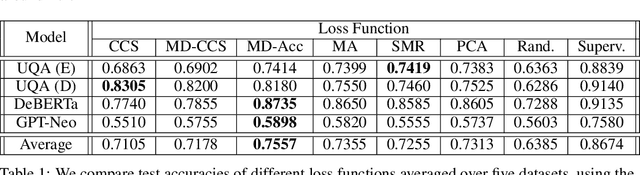

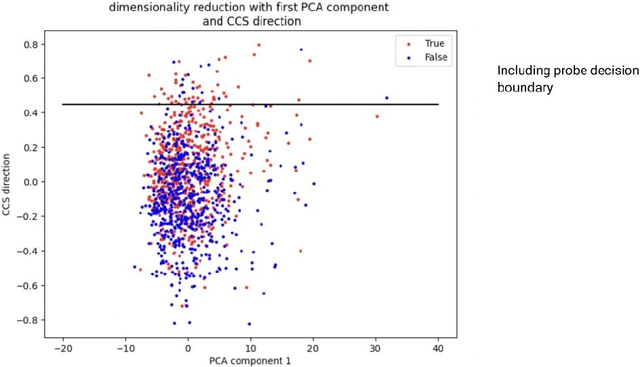

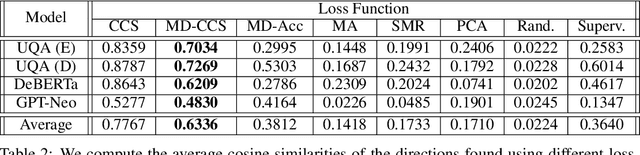

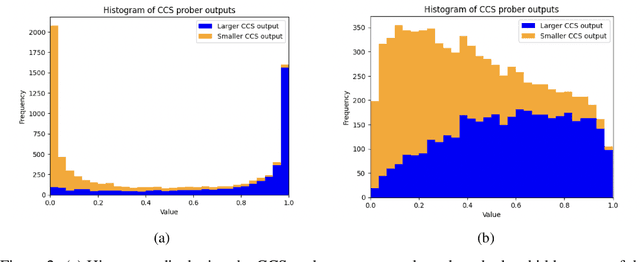

Abstract: We investigate the optimization target of Contrast-Consistent Search (CCS), which aims to recover the internal representations of truth of a large language model. We present a new loss function that we call the Midpoint-Displacement (MD) loss function. We demonstrate that for a certain hyper-parameter value this MD loss function leads to a prober with very similar weights to CCS. We further show that this hyper-parameter is not optimal and that with a better hyper-parameter the MD loss function attains a higher test accuracy than CCS.

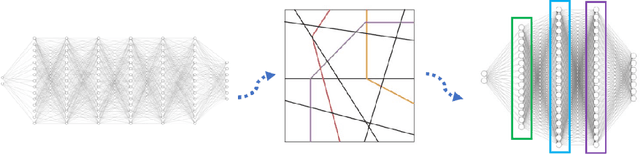

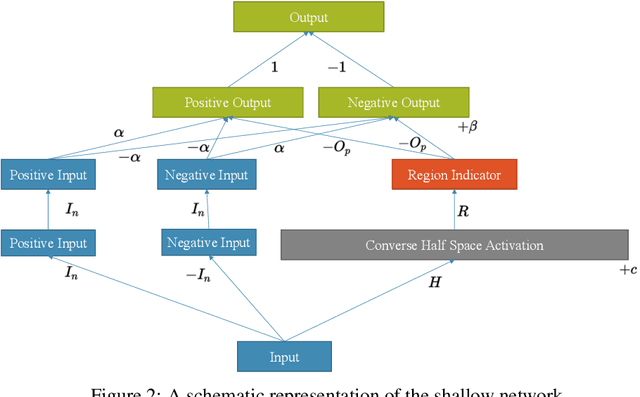

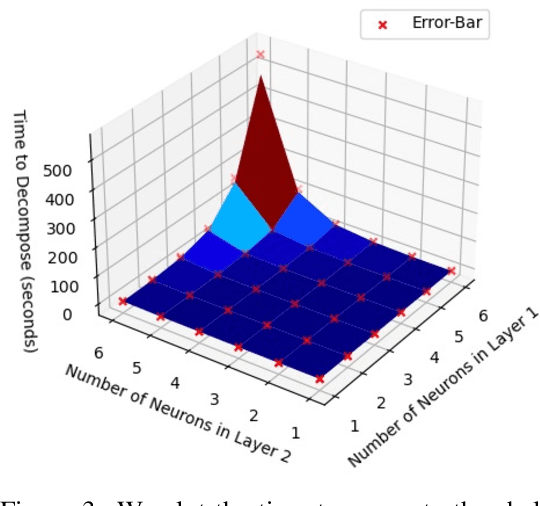

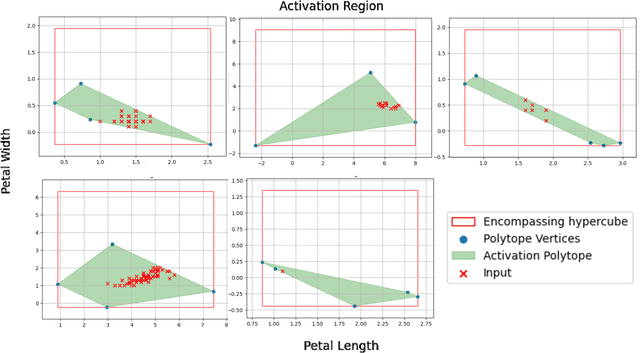

Any Deep ReLU Network is Shallow

Jun 20, 2023

Abstract: We constructively prove that every deep ReLU network can be rewritten as a functionally identical three-layer network with weights valued in the extended reals. Based on this proof, we provide an algorithm that, given a deep ReLU network, finds the explicit weights of the corresponding shallow network. The resulting shallow network is transparent and used to generate explanations of the model's behaviour.
