Niels uit de Bos

Relating Piecewise Linear Kolmogorov Arnold Networks to ReLU Networks

Mar 03, 2025

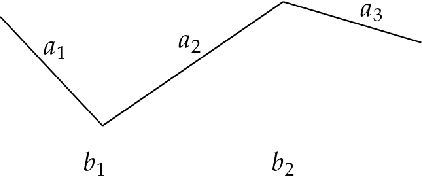

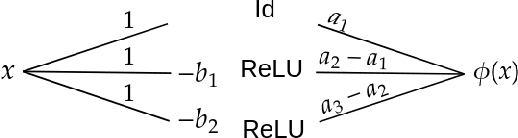

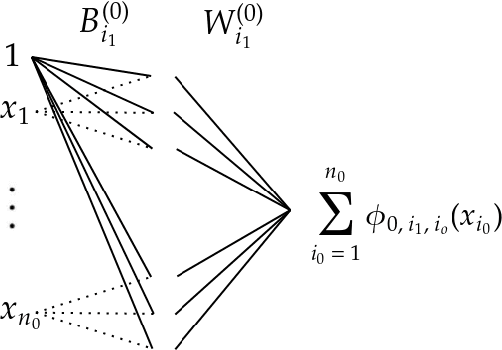

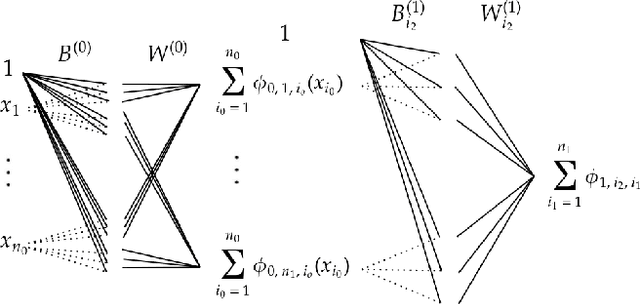

Abstract: Kolmogorov-Arnold Networks are a new family of neural network architectures which holds promise for overcoming the curse of dimensionality and has interpretability benefits (arXiv:2404.19756). In this paper, we explore the connection between Kolmogorov-Arnold Networks (KANs) with piecewise linear (univariate real) functions and ReLU networks. We provide completely explicit constructions to convert a piecewise linear KAN into a ReLU network and vice versa.
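To illustrate the kind of correspondence the abstract refers to, here is a minimal sketch of the standard identity that writes a single univariate piecewise linear function as an affine term plus a weighted sum of ReLUs. It is not the paper's construction, which is not reproduced on this page. The function names (`pwl_to_relu_params`, `eval_relu_form`) and the choice to extend the function linearly outside the outermost breakpoints are assumptions made for this example.

```python
def relu(z):
    """Rectified linear unit on a scalar."""
    return max(z, 0.0)


def pwl_to_relu_params(xs, ys):
    """Convert breakpoints (xs, ys) of a piecewise linear function into
    the parameters of an equivalent 'affine + sum of ReLUs' form.

    Assumes xs is strictly increasing and has at least two entries.
    Outside [xs[0], xs[-1]] the function is extended with the first and
    last slopes (an assumption of this sketch).
    """
    slopes = [(ys[i + 1] - ys[i]) / (xs[i + 1] - xs[i])
              for i in range(len(xs) - 1)]
    kinks = xs[1:-1]  # interior breakpoints, where the slope changes
    # Each ReLU coefficient is the change in slope at its kink.
    coeffs = [slopes[i] - slopes[i - 1] for i in range(1, len(slopes))]
    return ys[0], slopes[0], xs[0], kinks, coeffs


def eval_relu_form(params, x):
    """Evaluate y0 + s0 * (x - x0) + sum_i c_i * relu(x - k_i)."""
    y0, s0, x0, kinks, coeffs = params
    return y0 + s0 * (x - x0) + sum(c * relu(x - k)
                                    for c, k in zip(coeffs, kinks))


# Hypothetical example: a function through (0,0), (1,2), (2,1), (4,1).
params = pwl_to_relu_params([0.0, 1.0, 2.0, 4.0], [0.0, 2.0, 1.0, 1.0])
value_at_midpoint = eval_relu_form(params, 1.5)
```

The affine term can itself be written with two ReLUs, since x - x0 = relu(x - x0) - relu(x0 - x), so the whole expression fits in one hidden ReLU layer.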

Adversarial Circuit Evaluation

Jul 21, 2024

Abstract: Circuits are supposed to accurately describe how a neural network performs a specific task, but do they really? We evaluate three circuits found in the literature (IOI, greater-than, and docstring) in an adversarial manner, considering inputs where the circuit's behavior maximally diverges from the full model. Concretely, we measure the KL divergence between the full model's output and the circuit's output, calculated through resample ablation, and we analyze the worst-performing inputs. Our results show that the circuits for the IOI and docstring tasks fail to behave similarly to the full model even on completely benign inputs from the original task, indicating that more robust circuits are needed for safety-critical applications.
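The metric described in this abstract can be sketched as follows: compute the KL divergence between two output distributions for each input, then rank inputs from worst to best. This is only an illustration under stated assumptions. It takes the two distributions as given lists of probabilities and does not implement resample ablation or any part of the paper's pipeline. The names `kl_divergence` and `worst_inputs`, and the toy probabilities, are hypothetical.

```python
import math


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) in nats for two discrete distributions over the same
    outcomes. Terms with p_i == 0 contribute zero; q_i is floored at
    eps to avoid division by zero (an assumption of this sketch)."""
    return sum(pi * math.log(pi / max(qi, eps))
               for pi, qi in zip(p, q) if pi > 0)


def worst_inputs(model_probs, circuit_probs, k=1):
    """Return the k inputs (index, divergence) on which the circuit's
    output distribution diverges most from the full model's."""
    scores = [kl_divergence(p, q)
              for p, q in zip(model_probs, circuit_probs)]
    order = sorted(range(len(scores)), key=lambda i: scores[i],
                   reverse=True)
    return [(i, scores[i]) for i in order[:k]]


# Toy data: two inputs, two possible output tokens each.
model_probs = [[0.5, 0.5], [0.9, 0.1]]
circuit_probs = [[0.5, 0.5], [0.1, 0.9]]
ranking = worst_inputs(model_probs, circuit_probs, k=2)
```

Sorting by divergence rather than averaging it is what makes the evaluation adversarial: a circuit with a low mean divergence can still have a few inputs where it disagrees sharply with the full model.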
