Qiaoying Huang

Object-Guided Instance Segmentation With Auxiliary Feature Refinement for Biological Images

Jun 14, 2021

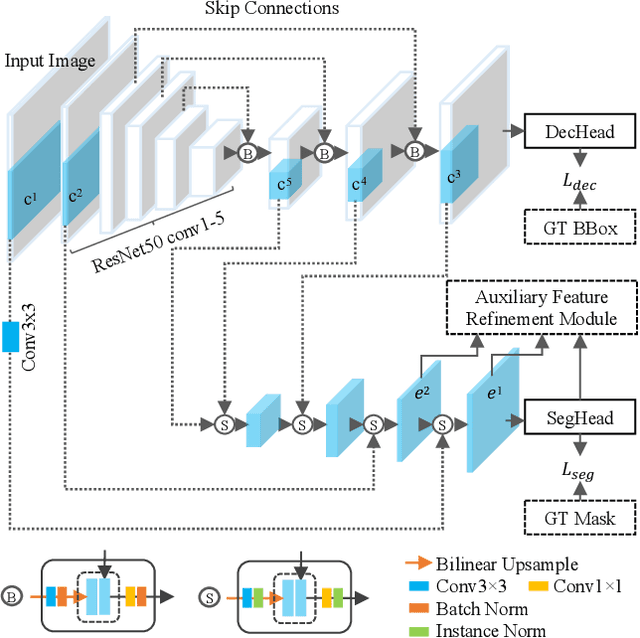

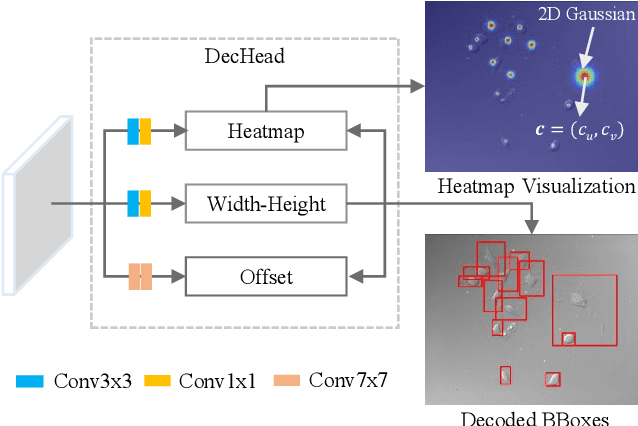

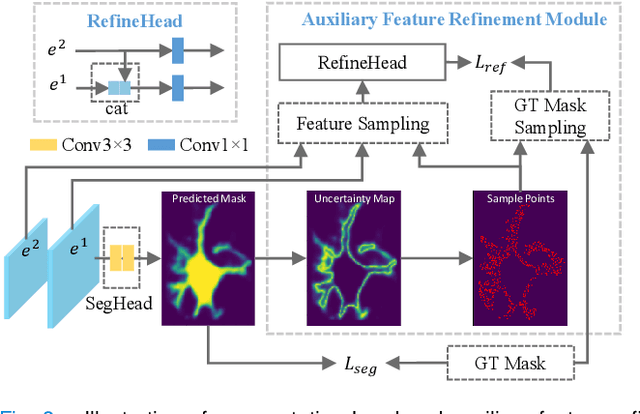

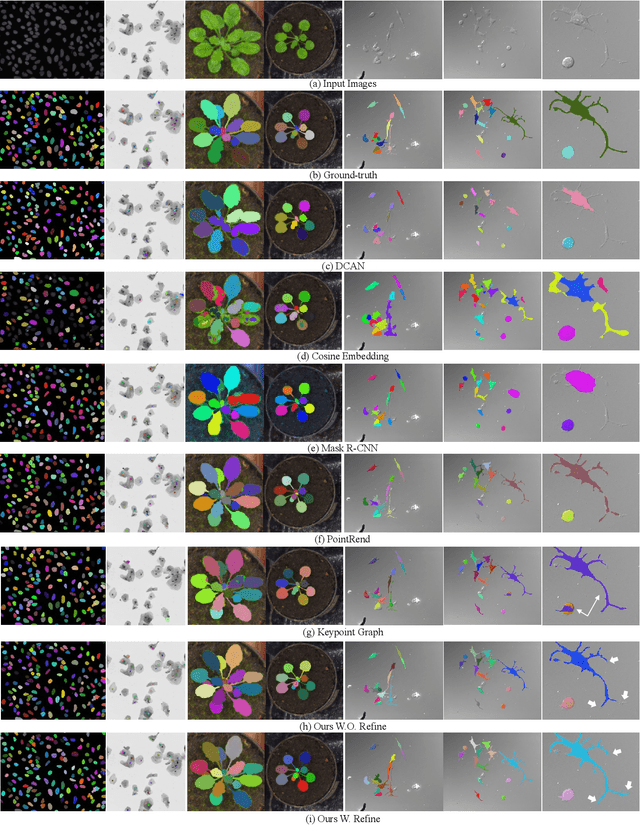

Abstract: Instance segmentation is of great importance for many biological applications, such as the study of neural cell interactions, plant phenotyping, and quantitatively measuring how cells react to drug treatment. In this paper, we propose a novel box-based instance segmentation method. Box-based instance segmentation methods capture objects via bounding boxes and then perform individual segmentation within each bounding box region. However, existing methods can hardly differentiate the target from its neighboring objects within the same bounding box region due to their similar textures and low-contrast boundaries. To deal with this problem, we propose an object-guided instance segmentation method. Our method first detects the center points of the objects, from which the bounding box parameters are then predicted. To perform segmentation, an object-guided coarse-to-fine segmentation branch is built along with the detection branch. The segmentation branch reuses the object features as guidance to separate the target object from the neighboring ones within the same bounding box region. To further improve the segmentation quality, we design an auxiliary feature refinement module that densely samples and refines point-wise features in the boundary regions. Experimental results on three biological image datasets demonstrate the advantages of our method. The code will be available at https://github.com/yijingru/ObjGuided-Instance-Segmentation.

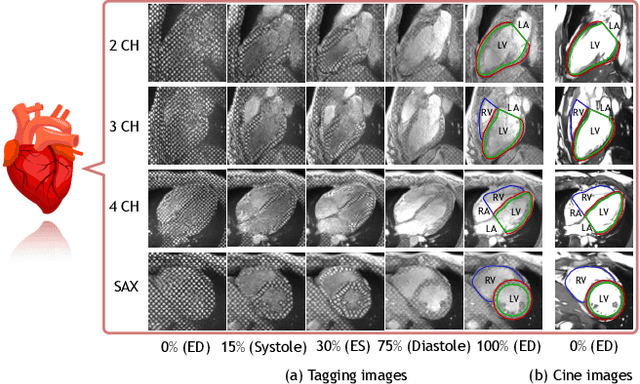

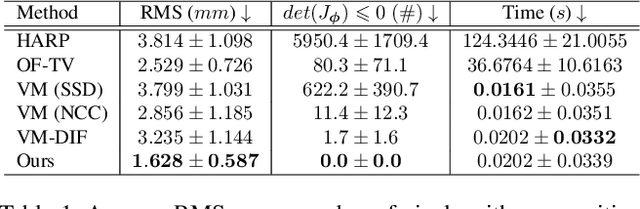

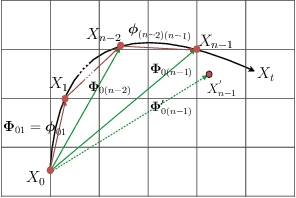

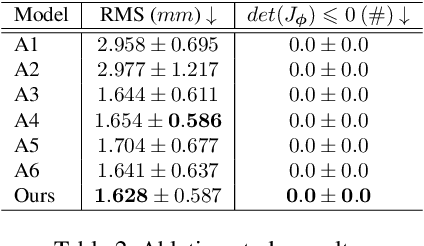

DeepTag: An Unsupervised Deep Learning Method for Motion Tracking on Cardiac Tagging Magnetic Resonance Images

Mar 29, 2021

Abstract: Cardiac tagging magnetic resonance imaging (t-MRI) is the gold standard for regional myocardium deformation and cardiac strain estimation. However, this technique has not been widely used in clinical diagnosis because motion tracking on t-MRI images is difficult. In this paper, we propose a novel deep learning-based fully unsupervised method for in vivo motion tracking on t-MRI images. We first estimate the interframe (INF) motion field between any two consecutive t-MRI frames by a bi-directional generative diffeomorphic registration neural network. Using this result, we then estimate the Lagrangian motion field between the reference frame and any other frame through a differentiable composition layer. By utilizing temporal information to perform reasonable estimations on spatio-temporal motion fields, this novel method provides a useful solution for motion tracking and image registration in dynamic medical imaging. Our method has been validated on a representative clinical t-MRI dataset; the experimental results show that our method is superior to conventional motion tracking methods in terms of landmark tracking accuracy and inference efficiency.
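
The composition step described in this abstract can be illustrated with a small sketch. It is not the authors' differentiable layer: it assumes 1D displacement fields stored as Python lists on an integer grid, linear interpolation with clamping at the borders, and hypothetical function names (`interp`, `compose`, `lagrangian_fields`). The idea is that the displacement from the reference frame to frame n equals the displacement to frame n-1 plus the frame-to-frame field sampled at the already displaced position.

```python
def interp(field, x):
    """Linearly interpolate a 1D field at position x, clamped to the grid."""
    x = min(max(x, 0.0), len(field) - 1.0)
    i = min(int(x), len(field) - 2)  # left neighbour index
    t = x - i                        # fractional offset in [0, 1]
    return (1 - t) * field[i] + t * field[i + 1]


def compose(lagrangian, inf):
    """Extend a reference-to-frame displacement by one frame-to-frame field.

    new(x) = lagrangian(x) + inf(x + lagrangian(x))
    """
    return [u + interp(inf, x + u) for x, u in enumerate(lagrangian)]


def lagrangian_fields(inf_fields):
    """Accumulate consecutive frame-to-frame fields into reference-frame fields."""
    current = [0.0] * len(inf_fields[0])  # zero displacement at the reference frame
    result = []
    for inf in inf_fields:
        current = compose(current, inf)
        result.append(current)
    return result


# Two consecutive uniform shifts of one grid unit accumulate to two units.
shift = [1.0] * 5
fields = lagrangian_fields([shift, shift])
```

In this toy case `fields[0]` is a uniform displacement of 1.0 and `fields[1]` a uniform displacement of 2.0; real motion fields are 2D and vary spatially, so the interpolation would be bilinear.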

Oriented Object Detection in Aerial Images with Box Boundary-Aware Vectors

Aug 29, 2020

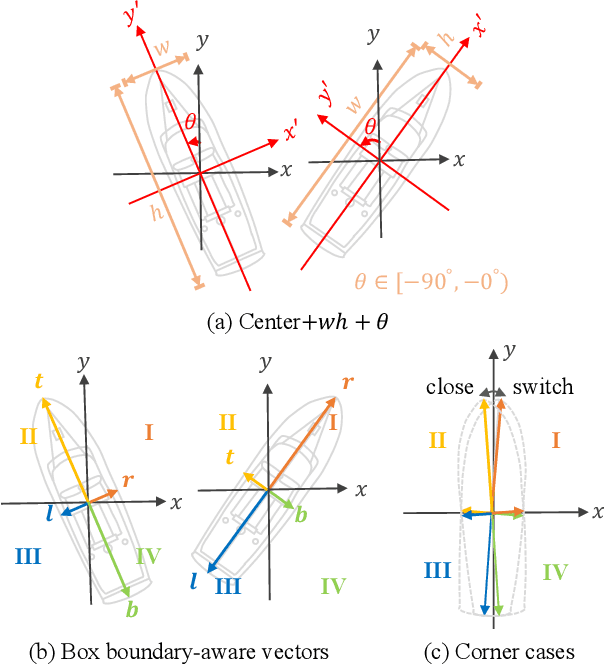

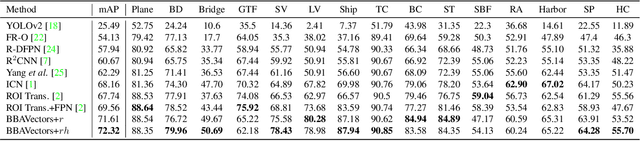

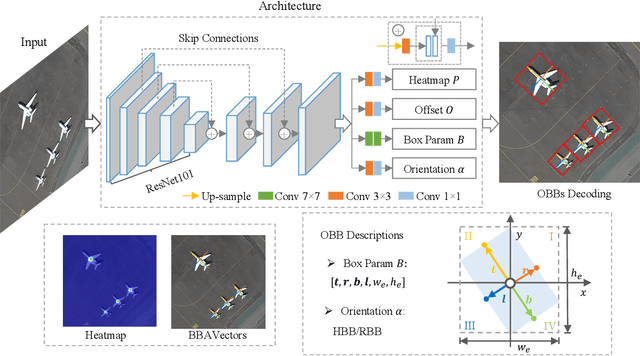

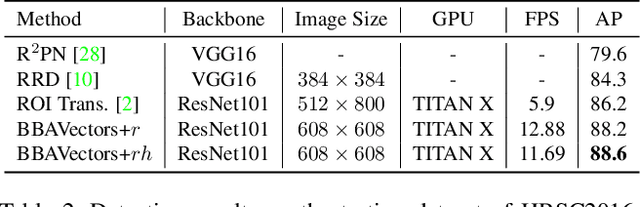

Abstract: Oriented object detection in aerial images is a challenging task as the objects in aerial images are displayed in arbitrary directions and are usually densely packed. Current oriented object detection methods mainly rely on two-stage anchor-based detectors. However, the anchor-based detectors typically suffer from a severe imbalance issue between the positive and negative anchor boxes. To address this issue, in this work we extend the horizontal keypoint-based object detector to the oriented object detection task. In particular, we first detect the center keypoints of the objects, based on which we then regress the box boundary-aware vectors (BBAVectors) to capture the oriented bounding boxes. The box boundary-aware vectors are distributed in the four quadrants of a Cartesian coordinate system for all arbitrarily oriented objects. To relieve the difficulty of learning the vectors in the corner cases, we further classify the oriented bounding boxes into horizontal and rotational bounding boxes. In the experiments, we show that learning the box boundary-aware vectors is superior to directly predicting the width, height, and angle of an oriented bounding box, as adopted in the baseline method. In addition, the proposed method competes favorably with state-of-the-art methods. Code is available at https://github.com/yijingru/BBAVectors-Oriented-Object-Detection.
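
As a geometric illustration of how a center keypoint plus boundary vectors can describe an oriented box, the sketch below rebuilds the four corners. It assumes that each vector points from the center to the midpoint of one side (top, right, bottom, left); the function name `decode_corners` and the tuple layout are hypothetical and not taken from the released code.

```python
def decode_corners(center, top, right, bottom, left):
    """Recover the corners of an oriented box from center-to-side-midpoint vectors.

    Each corner is the center plus the two vectors of its adjacent sides,
    e.g. top-left = center + top + left.
    """
    cx, cy = center

    def corner(v1, v2):
        return (cx + v1[0] + v2[0], cy + v1[1] + v2[1])

    return (
        corner(top, left),      # top-left
        corner(top, right),     # top-right
        corner(bottom, right),  # bottom-right
        corner(bottom, left),   # bottom-left
    )


# Axis-aligned 4 x 2 box centred at the origin (image coordinates, y pointing down).
corners = decode_corners((0, 0), top=(0, -1), right=(2, 0), bottom=(0, 1), left=(-2, 0))
```

The same function works for rotated boxes, because adding the two side-midpoint vectors of a rectangle always lands on the shared corner.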

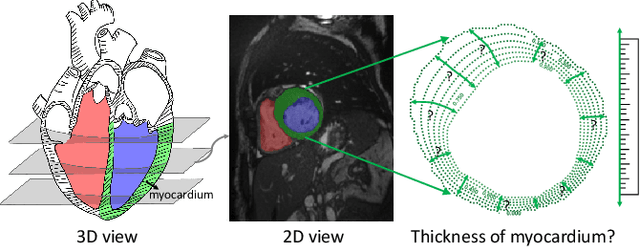

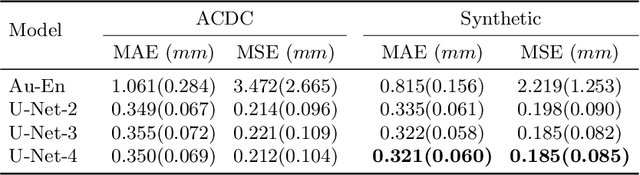

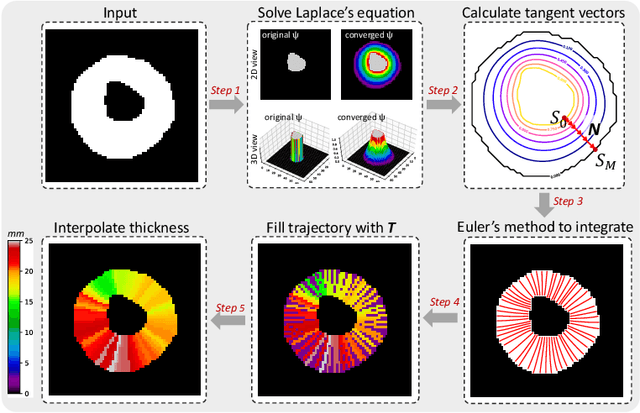

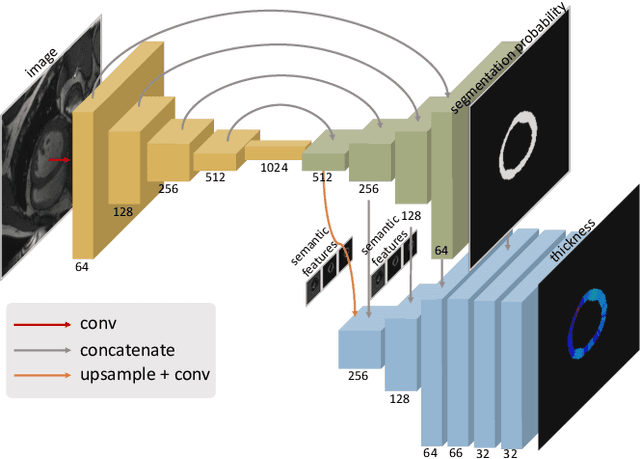

Measure Anatomical Thickness from Cardiac MRI with Deep Neural Networks

Aug 25, 2020

Abstract: Accurate estimation of shape thickness from medical images is crucial in clinical applications. For example, the thickness of the myocardium is one of the keys to cardiac disease diagnosis. While mathematical models are available to obtain accurate dense thickness estimation, they suffer from heavy computational overhead due to iterative solvers. To this end, we propose novel methods for dense thickness estimation, including a fast solver that estimates thickness from binary annular shapes and an end-to-end network that estimates thickness directly from raw cardiac images. We test the proposed models on three cardiac datasets and one synthetic dataset, achieving impressive results and generalizability on all of them. Thickness estimation is performed without iterative solvers or manual correction, making it 100 times faster than the mathematical model. We also analyze thickness patterns on different cardiac pathologies with a standard clinical model, and the results demonstrate the potential clinical value of our method for thickness-based cardiac disease diagnosis.

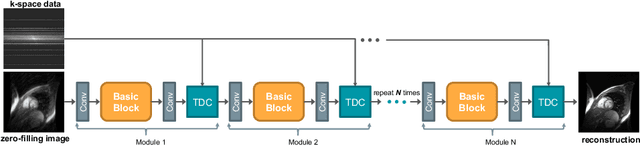

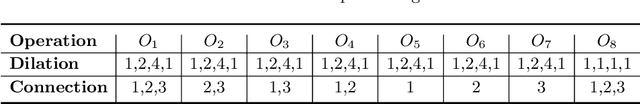

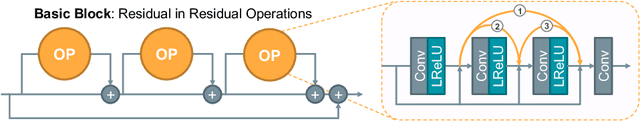

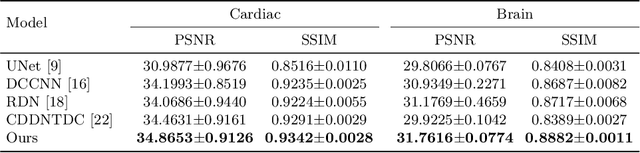

Enhanced MRI Reconstruction Network using Neural Architecture Search

Aug 19, 2020

Abstract: The accurate reconstruction of under-sampled magnetic resonance imaging (MRI) data using modern deep learning technology requires significant effort to design the necessary complex neural network architectures. The cascaded network architecture for MRI reconstruction has been widely used, but it suffers from the "vanishing gradient" problem when the network becomes deep. In addition, a homogeneous architecture degrades the representation capacity of the network. In this work, we present an enhanced MRI reconstruction network using a residual-in-residual basic block. For each cell in the basic block, we use the differentiable neural architecture search (NAS) technique to automatically choose the optimal operation among eight variants of the dense block. This new heterogeneous network is evaluated on two publicly available datasets and outperforms all current state-of-the-art methods, which demonstrates the effectiveness of our proposed method.

PC-U Net: Learning to Jointly Reconstruct and Segment the Cardiac Walls in 3D from CT Data

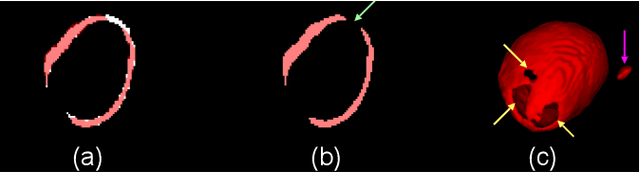

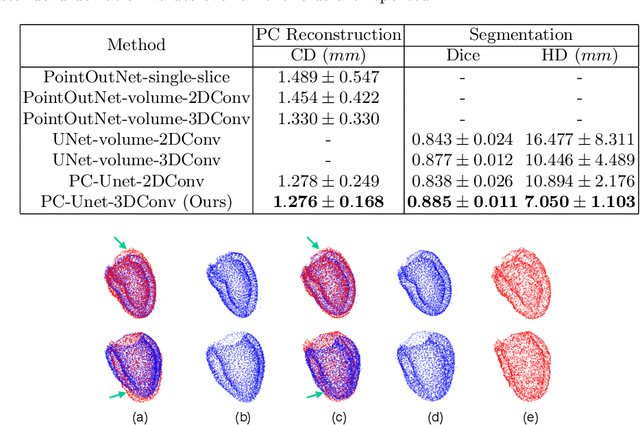

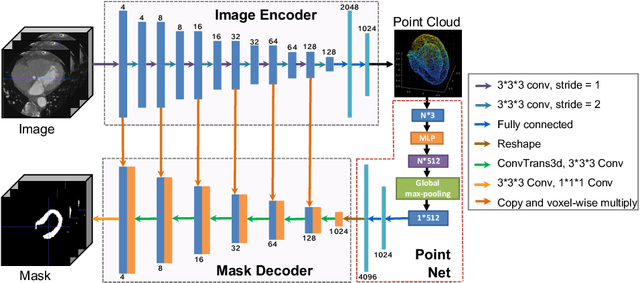

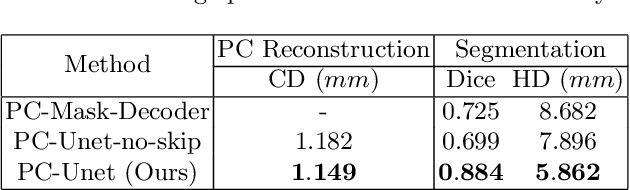

Aug 18, 2020

Abstract: The 3D volumetric shape of the heart's left ventricle (LV) myocardium (MYO) wall provides important information for diagnosis of cardiac disease and invasive procedure navigation. Many cardiac image segmentation methods have relied on detection of a region of interest as a prerequisite for shape segmentation and modeling. With segmentation results, a 3D surface mesh and a corresponding point cloud of the segmented cardiac volume can be reconstructed for further analyses. Although state-of-the-art methods (e.g., U-Net) have achieved decent performance on cardiac image segmentation in terms of accuracy, these segmentation results can still suffer from imaging artifacts and noise, which will lead to inaccurate shape modeling results. In this paper, we propose a PC-U net that jointly reconstructs the point cloud of the LV MYO wall directly from volumes of 2D CT slices and generates its segmentation masks from the predicted 3D point cloud. Extensive experimental results show that by incorporating a shape prior from the point cloud, the segmentation masks are more accurate than the state-of-the-art U-Net results in terms of Dice's coefficient and Hausdorff distance. The proposed joint learning framework of our PC-U net is beneficial for automatic cardiac image analysis tasks because it can simultaneously obtain the 3D shape and segmentation of the LV MYO walls.
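
For readers unfamiliar with the two evaluation metrics named in this abstract, the following sketch shows their standard definitions. It is a plain-Python illustration, not the evaluation code used in the paper: masks are assumed to be flat sequences of 0/1 values, point sets are assumed to be non-empty lists of coordinate tuples, and the function names are hypothetical.

```python
import math


def dice(pred, target):
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) for two flat binary masks."""
    intersection = sum(p and t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    return 2.0 * intersection / total if total else 1.0  # two empty masks agree


def hausdorff(points_a, points_b):
    """Symmetric Hausdorff distance between two non-empty point sets."""
    def directed(src, dst):
        # Largest distance from a point in src to its nearest point in dst.
        return max(min(math.dist(p, q) for q in dst) for p in src)

    return max(directed(points_a, points_b), directed(points_b, points_a))


overlap = dice([1, 1, 0, 0], [1, 0, 0, 0])
distance = hausdorff([(0, 0), (1, 0)], [(0, 0), (4, 0)])
```

Dice rewards overlap (higher is better), while the Hausdorff distance penalises the single worst boundary mismatch (lower is better), which is why the two are often reported together.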

Weakly Supervised Deep Nuclei Segmentation Using Partial Points Annotation in Histopathology Images

Jul 10, 2020

Abstract: Nuclei segmentation is a fundamental task in histopathology image analysis. Typically, such segmentation tasks require significant effort to manually generate accurate pixel-wise annotations for fully supervised training. To alleviate such tedious manual effort, in this paper we propose a novel weakly supervised segmentation framework based on partial points annotation, i.e., only a small portion of nuclei locations in each image are labeled. The framework consists of two learning stages. In the first stage, we design a semi-supervised strategy to learn a detection model from partially labeled nuclei locations. Specifically, an extended Gaussian mask is designed to train an initial model with partially labeled data. Then, self-training with background propagation is proposed to make use of the unlabeled regions to boost nuclei detection and suppress false positives. In the second stage, a segmentation model is trained from the detected nuclei locations in a weakly supervised fashion. Two types of coarse labels with complementary information are derived from the detected points and are then utilized to train a deep neural network. The fully connected conditional random field loss is utilized in training to further refine the model without introducing extra computational complexity during inference. The proposed method is extensively evaluated on two nuclei segmentation datasets. The experimental results demonstrate that our method can achieve competitive performance compared to the fully supervised counterpart and the state-of-the-art methods while requiring significantly less annotation effort.

Fairness-Aware Explainable Recommendation over Knowledge Graphs

Jun 28, 2020

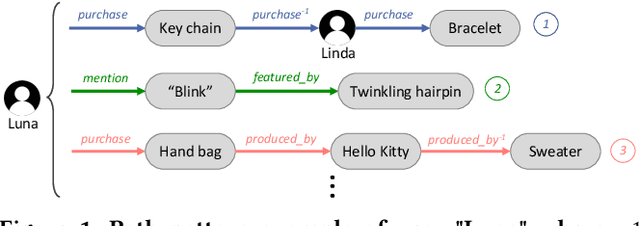

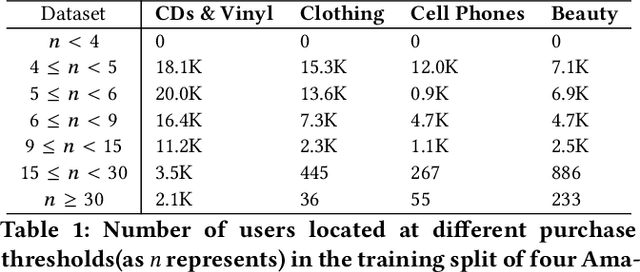

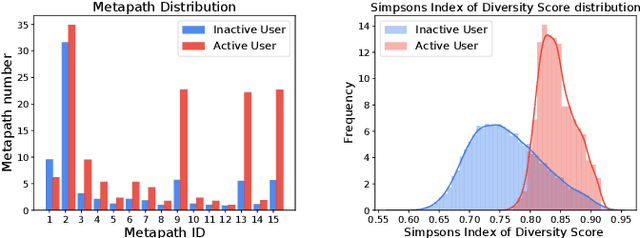

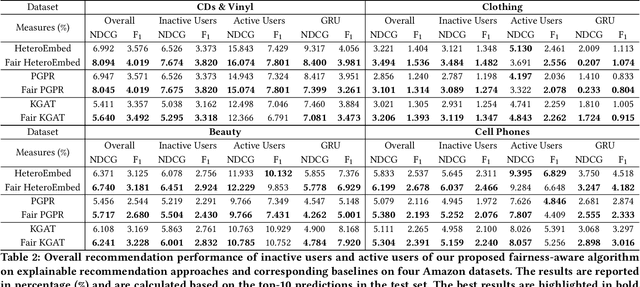

Abstract: There has been growing attention on fairness considerations recently, especially in the context of intelligent decision-making systems. Explainable recommendation systems, in particular, may suffer from both explanation bias and performance disparity. In this paper, we analyze different groups of users according to their level of activity, and find that bias exists in recommendation performance between different groups. We show that inactive users may be more susceptible to receiving unsatisfactory recommendations, due to insufficient training data for the inactive users. Their recommendations may also be biased by the training records of more active users, due to the nature of collaborative filtering, which leads to unfair treatment by the system. We propose a fairness-constrained approach via heuristic re-ranking to mitigate this unfairness problem in the context of explainable recommendation over knowledge graphs. We experiment on several real-world datasets with state-of-the-art knowledge graph-based explainable recommendation algorithms. The promising results show that our algorithm is not only able to provide high-quality explainable recommendations, but also reduces the recommendation unfairness in several respects.

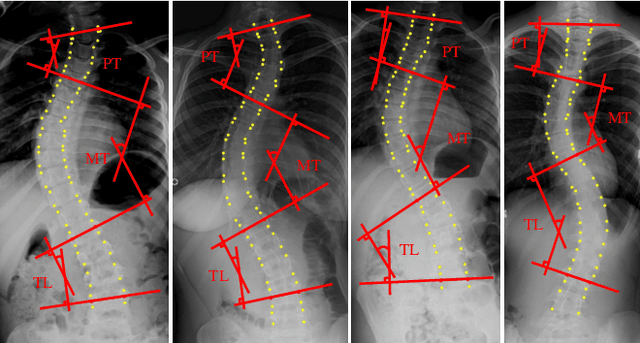

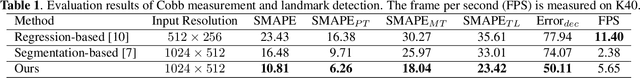

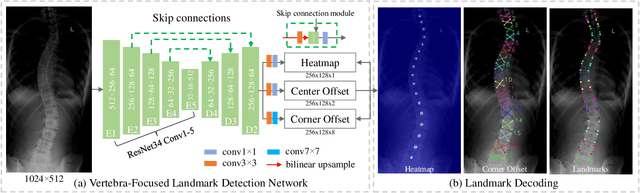

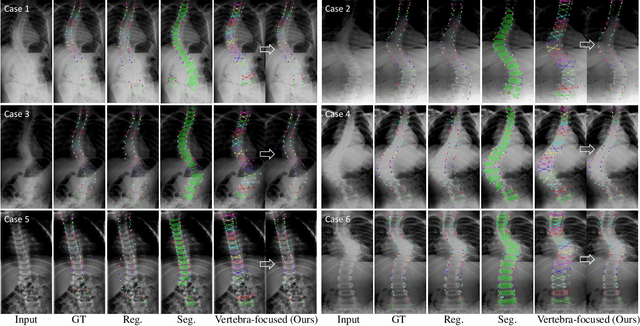

Vertebra-Focused Landmark Detection for Scoliosis Assessment

Jan 09, 2020

Abstract: Adolescent idiopathic scoliosis (AIS) is a lifelong disease that arises in children. Accurate estimation of the Cobb angles of the scoliosis is essential for clinicians to make diagnosis and treatment decisions. The Cobb angles are measured according to the vertebra landmarks. Existing regression-based methods for vertebra landmark detection typically suffer from large dense mapping parameters and inaccurate landmark localization. The segmentation-based methods tend to predict connected or corrupted vertebra masks. In this paper, we propose a novel vertebra-focused landmark detection method. Our model first localizes the vertebra centers, based on which it then traces the four corner landmarks of the vertebra through the learned corner offset. In this way, our method is able to keep the order of the landmarks. The comparison results demonstrate the merits of our method in both Cobb angle measurement and landmark detection on low-contrast and ambiguous X-ray images. Code is available at: https://github.com/yijingru/Vertebra-Landmark-Detection.
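
To make the link between corner landmarks and the Cobb angle concrete, here is a deliberately simplified sketch. It assumes each vertebra is given as four (x, y) corners ordered top-left, top-right, bottom-left, bottom-right, uses only the upper endplate of each vertebra, and reports the largest tilt difference between any two vertebrae. Clinical protocols and the paper's own measurement may differ, and the function names are hypothetical.

```python
import math


def endplate_tilt(left, right):
    """Tilt of the line from the left to the right corner, in degrees."""
    return math.degrees(math.atan2(right[1] - left[1], right[0] - left[0]))


def cobb_angle(vertebrae):
    """Largest difference in upper-endplate tilt across all vertebrae.

    Each vertebra is (top_left, top_right, bottom_left, bottom_right).
    """
    tilts = [endplate_tilt(tl, tr) for tl, tr, _bl, _br in vertebrae]
    return max(tilts) - min(tilts)


spine = [
    ((0, 0), (2, 0), (0, 1), (2, 1)),  # level vertebra, tilt 0 degrees
    ((0, 2), (2, 4), (0, 3), (2, 5)),  # upper endplate tilted by 45 degrees
]
angle = cobb_angle(spine)
```

Because the measurement depends on which corner is "left" and which is "right", keeping the landmark order, as the abstract emphasises, matters directly for the computed angle.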

Multi-scale Cell Instance Segmentation with Keypoint Graph based Bounding Boxes

Jul 25, 2019

Abstract: Most existing methods handle cell instance segmentation problems directly without relying on additional detection boxes. These methods generally fail to separate touching cells due to the lack of global understanding of the objects. In contrast, box-based instance segmentation solves this problem by combining object detection with segmentation. However, existing methods typically utilize anchor box-based detectors, which leads to inferior instance segmentation performance due to the class imbalance issue. In this paper, we propose a new box-based cell instance segmentation method. In particular, we first detect the five pre-defined points of a cell via keypoint detection. Then we group these points according to a keypoint graph and subsequently extract the bounding box for each cell. Finally, cell segmentation is performed on feature maps within the bounding boxes. We validate our method on two cell datasets with distinct object shapes, and empirically demonstrate the superiority of our method compared to other instance segmentation techniques. Code is available at: https://github.com/yijingru/KG_Instance_Segmentation.
