Pablo Lanillos

Designing Tools with Control Confidence

Oct 14, 2025

Abstract: Prehistoric humans invented stone tools for specialized tasks by not just maximizing the tool's immediate goal-completion accuracy, but also increasing their confidence in the tool for later use under similar settings. This factor contributed to the increased robustness of the tool, i.e., the smallest performance deviations under environmental uncertainties. However, current autonomous tool design frameworks rely solely on performance optimization, without considering the agent's confidence in the tool over repeated use. Here, we take a step towards filling this gap by i) defining an optimization framework for task-conditioned autonomous hand tool design for robots, where ii) we introduce a neuro-inspired control confidence term into the optimization routine that helps the agent to design tools with higher robustness. Through rigorous simulations using a robotic arm, we show that tools designed with control confidence as the objective function are more robust to environmental uncertainties during tool use than those designed with a pure accuracy-driven objective. We further show that adding control confidence to the objective function for tool design provides a balance between the robustness and goal accuracy of the designed tools under control perturbations. Finally, we show that our CMA-ES-based evolutionary optimization strategy for autonomous tool design outperforms other state-of-the-art optimizers by designing the optimal tool within the fewest iterations. Code: https://github.com/ajitham123/Tool_design_control_confidence.

Spiking neurons as predictive controllers of linear systems

Jul 22, 2025

Abstract: Neurons communicate with downstream systems via sparse and incredibly brief electrical pulses, or spikes. Using these events, they control various targets such as neuromuscular units, neurosecretory systems, and other neurons in connected circuits. This gave rise to the idea of spiking neurons as controllers, in which spikes are the control signal. Using instantaneous events directly as the control inputs, also called 'impulse control', is challenging as it does not scale well to larger networks and has low analytical tractability. Therefore, current spiking control usually relies on filtering the spike signal to approximate analog control. This ultimately means spiking neural networks (SNNs) have to output a continuous control signal, necessitating continuous energy input into downstream systems. Here, we circumvent the need for rate-based representations, providing a scalable method for task-specific spiking control with sparse neural activity. In doing so, we take inspiration from both optimal control and neuroscience theory, and define a spiking rule where spikes are only emitted if they bring a dynamical system closer to a target. From this principle, we derive the required connectivity for an SNN, and show that it can successfully control linear systems. We show that for physically constrained systems, predictive control is required, and the control signal ends up exploiting the passive dynamics of the downstream system to reach a target. Finally, we show that the control method scales to both high-dimensional networks and systems. Importantly, in all cases, we maintain a closed-form mathematical derivation of the network connectivity, the network dynamics and the control objective. This work advances the understanding of SNNs as biologically-inspired controllers, providing insight into how real neurons could exert control, and enabling applications in neuromorphic hardware design.
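The spiking rule described in this abstract (emit a spike only if it brings the system closer to the target) can be illustrated with a toy example. The following is a minimal sketch, not the network derived in the paper: it assumes a one-dimensional leaky system, two hypothetical neurons whose spikes kick the state by `+0.1` and `-0.1`, and a greedy one-step lookahead rather than the predictive rule the authors derive. The names `simulate`, `decay` and `weights` are illustrative only.

```python
import numpy as np

def simulate(target, steps=200, decay=0.95, weights=(0.1, -0.1)):
    """Greedy spike-based control of a scalar leaky system x <- decay * x + w * spike."""
    x = 0.0
    trace, spikes = [], []
    for _ in range(steps):
        x_next = decay * x                      # passive dynamics with no spike
        best_err = (target - x_next) ** 2       # squared error if nobody spikes
        fired = None
        for i, w in enumerate(weights):
            err = (target - (x_next + w)) ** 2  # squared error if neuron i spikes
            if err < best_err:                  # spike only if it strictly helps
                best_err, fired = err, i
        if fired is not None:
            x_next += weights[fired]
        x = x_next
        trace.append(x)
        spikes.append(fired)
    return np.array(trace), spikes

trace, spikes = simulate(target=1.0)
n_spikes = sum(s is not None for s in spikes)
print(f"final state {trace[-1]:.3f}, spikes emitted in {n_spikes} of {len(spikes)} steps")
```

In this sketch the state settles into a band around the target whose width is set by the spike size, and the neurons stay silent whenever a spike would not reduce the error.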

Adaptive Torque Control of Exoskeletons under Spasticity Conditions via Reinforcement Learning

Mar 14, 2025

Abstract: Spasticity is a common movement disorder symptom in individuals with cerebral palsy, hereditary spastic paraplegia, spinal cord injury and stroke, being one of the most disabling features in the progression of these diseases. Despite the potential benefit of using wearable robots to treat spasticity, their use is not currently recommended for subjects with a level of spasticity above ${1^+}$ on the Modified Ashworth Scale. The varying dynamics of this velocity-dependent tonic stretch reflex make it difficult to deploy safe personalized controllers. Here, we describe a novel adaptive torque controller via deep reinforcement learning (RL) for a knee exoskeleton under joint spasticity conditions, which accounts for task performance and interaction force reduction. To train the RL agent, we developed a digital twin, including a musculoskeletal-exoskeleton system with joint misalignment and a differentiable spastic reflexes model for muscle activation. Results for a simulated knee extension movement showed that the agent learns to control the exoskeleton for individuals with different levels of spasticity. The proposed controller reduced the maximum torques applied to the human joint under spastic conditions by an average of 10.6% and decreased the root mean square until the settling time by 8.9% compared to a conventional compliant controller.

Object-centric proto-symbolic behavioural reasoning from pixels

Nov 26, 2024

Abstract: Autonomous intelligent agents must bridge computational challenges at disparate levels of abstraction, from the low-level spaces of sensory input and motor commands to the high-level domain of abstract reasoning and planning. A key question in designing such agents is how best to instantiate the representational space that will interface between these two levels -- ideally without requiring supervision in the form of expensive data annotations. These objectives can be efficiently achieved by representing the world in terms of objects (grounded in perception and action). In this work, we present a novel, brain-inspired, deep-learning architecture that learns from pixels to interpret, control, and reason about its environment, using object-centric representations. We show the utility of our approach through tasks in synthetic environments that require a combination of (high-level) logical reasoning and (low-level) continuous control. Results show that the agent can learn emergent conditional behavioural reasoning, such as $(A \to B) \land (\neg A \to C)$, as well as logical composition $(A \to B) \land (A \to C) \vdash A \to (B \land C)$ and XOR operations, and successfully controls its environment to satisfy objectives deduced from these logical rules. The agent can adapt online to unexpected changes in its environment and is robust to mild violations of its world model, thanks to dynamic internal desired goal generation. While the present results are limited to synthetic settings (2D and 3D activated versions of dSprites), which fall short of real-world levels of complexity, the proposed architecture shows how to manipulate grounded object representations, as a key inductive bias for unsupervised learning, to enable behavioral reasoning.
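The logical composition rule quoted in this abstract, $(A \to B) \land (A \to C) \vdash A \to (B \land C)$, can be checked by brute force over all truth assignments. The snippet below is only a sanity check of the propositional rule itself, not a model of the agent; the helper names `implies` and `composition_holds` are hypothetical.

```python
from itertools import product

def implies(p, q):
    """Material implication p -> q."""
    return (not p) or q

def composition_holds():
    """True if (A->B) and (A->C) entails A->(B and C) for every truth assignment."""
    return all(
        implies(implies(a, b) and implies(a, c), implies(a, b and c))
        for a, b, c in product([False, True], repeat=3)
    )

print(composition_holds())
```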

Confidence-Aware Decision-Making and Control for Tool Selection

Mar 06, 2024

Abstract: Self-reflecting about our performance (e.g., how confident we are) before doing a task is essential for decision making, such as selecting the most suitable tool or choosing the best route to drive. While this form of awareness -- thinking about our performance or metacognitive performance -- is well-known in humans, robots still lack this cognitive ability. This reflective monitoring can enhance their embodied decision power, robustness and safety. Here, we take a step in this direction by introducing a mathematical framework that allows robots to use their control self-confidence to make better-informed decisions. We derive a mathematical closed-form expression for control confidence for dynamic systems (i.e., the posterior inverse covariance of the control action). This control confidence seamlessly integrates within an objective function for decision making that balances: i) performance for task completion, ii) control effort, and iii) self-confidence. To evaluate our theoretical account, we framed the decision-making within the tool selection problem, where the agent has to select the best robot arm for a particular control task. The statistical analysis of the numerical simulations with randomized 2DOF arms shows that using control confidence during tool selection improves both real task performance and the reliability of the tool's performance under unmodelled perturbations (e.g., external forces). Furthermore, our results indicate that control confidence is an early indicator of performance and thus can be used as a heuristic for making decisions when computation power is restricted or decision-making is intractable. Overall, we show the advantages of using a confidence-aware decision-making and control scheme for dynamic systems.

Awareness in robotics: An early perspective from the viewpoint of the EIC Pathfinder Challenge "Awareness Inside"

Feb 14, 2024

Abstract: Consciousness has historically been a heavily debated topic in engineering, science, and philosophy. In contrast, awareness has had less success in raising the interest of scholars in the past. However, things are changing as more and more researchers are getting interested in answering questions concerning what awareness is and how it can be artificially generated. The landscape is rapidly evolving, with multiple voices and interpretations of the concept being conceived and techniques being developed. The goal of this paper is to summarize and discuss those voices connected with projects funded by the EIC Pathfinder Challenge called "Awareness Inside", a nonrecurring call for proposals within Horizon Europe designed specifically for fostering research on natural and synthetic awareness. In this perspective, we dedicate special attention to challenges and promises of applying synthetic awareness in robotics, as the development of mature techniques in this new field is expected to have a special impact on generating more capable and trustworthy embodied systems.

Adaptive Noise Covariance Estimation under Colored Noise using Dynamic Expectation Maximization

Aug 15, 2023

Abstract: The accurate estimation of the noise covariance matrix (NCM) in a dynamic system is critical for state estimation and control, as it has a major influence on their optimality. Although a large number of NCM estimation methods have been developed, most of them assume the noises to be white. However, in many real-world applications, the noises are colored (e.g., they exhibit temporal autocorrelations), resulting in suboptimal solutions. Here, we introduce a novel brain-inspired algorithm that accurately and adaptively estimates the NCM for dynamic systems subjected to colored noise. Particularly, we extend the Dynamic Expectation Maximization algorithm to perform both online noise covariance and state estimation by optimizing the free energy objective. We mathematically prove that our NCM estimator converges to the global optimum of this free energy objective. Using randomized numerical simulations, we show that our estimator outperforms nine baseline methods with minimal noise covariance estimation error under colored noise conditions. Notably, we show that our method outperforms the best baseline (Variational Bayes) in joint noise and state estimation for high colored noise. We foresee that the accuracy and the adaptive nature of our estimator make it suitable for online estimation in real-world applications.
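The distinction between white and colored noise that motivates this abstract can be made concrete with a small numerical illustration. The sketch below is not the Dynamic Expectation Maximization estimator; it only assumes a first-order autoregressive (AR(1)) process as one simple example of temporally autocorrelated noise, and the names `ar1_noise`, `lag1_autocorr` and the coefficient `phi` are illustrative choices.

```python
import numpy as np

def ar1_noise(n, phi, rng):
    """Unit-variance AR(1) noise: w[t] = phi * w[t-1] + sqrt(1 - phi^2) * e[t]."""
    e = rng.standard_normal(n)
    w = np.empty(n)
    w[0] = e[0]
    for t in range(1, n):
        w[t] = phi * w[t - 1] + np.sqrt(1.0 - phi ** 2) * e[t]
    return w

def lag1_autocorr(x):
    """Sample autocorrelation of x at lag 1."""
    x = x - x.mean()
    return float(np.dot(x[:-1], x[1:]) / np.dot(x, x))

rng = np.random.default_rng(0)
white = rng.standard_normal(20_000)
colored = ar1_noise(20_000, phi=0.9, rng=rng)
print("white  lag-1 autocorrelation:", round(lag1_autocorr(white), 3))
print("colored lag-1 autocorrelation:", round(lag1_autocorr(colored), 3))
```

White noise has a lag-1 autocorrelation near zero, whereas the AR(1) sequence has one near `phi`, which is the kind of temporal structure that estimators assuming white noise ignore.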

World Models and Predictive Coding for Cognitive and Developmental Robotics: Frontiers and Challenges

Jan 14, 2023

Abstract: Creating autonomous robots that can actively explore the environment, acquire knowledge and learn skills continuously is the ultimate achievement envisioned in cognitive and developmental robotics. Their learning processes should be based on interactions with their physical and social world in the manner of human learning and cognitive development. Based on this context, in this paper, we focus on the two concepts of world models and predictive coding. Recently, world models have attracted renewed attention as a topic of considerable interest in artificial intelligence. Cognitive systems learn world models to better predict future sensory observations and optimize their policies, i.e., controllers. Alternatively, in neuroscience, predictive coding proposes that the brain continuously predicts its inputs and adapts to model its own dynamics and control behavior in its environment. Both ideas may be considered as underpinning the cognitive development of robots and humans capable of continual or lifelong learning. Although many studies have been conducted on predictive coding in cognitive robotics and neurorobotics, the relationship between world model-based approaches in AI and predictive coding in robotics has rarely been discussed. Therefore, in this paper, we clarify the definitions, relationships, and status of current research on these topics, as well as missing pieces of world models and predictive coding in conjunction with crucially related concepts such as the free-energy principle and active inference in the context of cognitive and developmental robotics. Furthermore, we outline the frontiers and challenges involved in world models and predictive coding toward the further integration of AI and robotics, as well as the creation of robots with real cognitive and developmental capabilities in the future.

Closed-form control with spike coding networks

Dec 25, 2022

Abstract: Efficient and robust control using spiking neural networks (SNNs) is still an open problem. Whilst behaviour of biological agents is produced through sparse and irregular spiking patterns, which provide both robust and efficient control, the activity patterns in most artificial spiking neural networks used for control are dense and regular -- resulting in potentially less efficient codes. Additionally, for most existing control solutions network training or optimization is necessary, even for fully identified systems, complicating their implementation in on-chip low-power solutions. The neuroscience theory of Spike Coding Networks (SCNs) offers a fully analytical solution for implementing dynamical systems in recurrent spiking neural networks -- while maintaining irregular, sparse, and robust spiking activity -- but it's not clear how to directly apply it to control problems. Here, we extend SCN theory by incorporating closed-form optimal estimation and control. The resulting networks work as a spiking equivalent of a linear-quadratic-Gaussian controller. We demonstrate robust spiking control of simulated spring-mass-damper and cart-pole systems, in the face of several perturbations, including input- and system-noise, system disturbances, and neural silencing. As our approach does not need learning or optimization, it offers opportunities for deploying fast and efficient task-specific on-chip spiking controllers with biologically realistic activity.
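For readers unfamiliar with the non-spiking controller that this abstract refers to, the control half of a linear-quadratic-Gaussian controller is a linear-quadratic regulator (LQR). The sketch below computes a discrete-time LQR gain for a spring-mass-damper by iterating the Riccati recursion. It is a conventional, non-spiking illustration only: the mass, stiffness and damping values, the forward-Euler discretisation, the identity cost matrices, and the function names `dlqr_gain` and `spring_mass_damper` are all assumptions made for this example, and the state estimation (Kalman filter) half is omitted.

```python
import numpy as np

def dlqr_gain(A, B, Q, R, iters=5000):
    """Discrete-time LQR gain K (u = -K x) via fixed-point iteration of the Riccati equation."""
    P = Q.copy()
    for _ in range(iters):
        K = np.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)
        P = Q + A.T @ P @ (A - B @ K)
    return K

def spring_mass_damper(m=1.0, k=1.0, c=0.1, dt=0.01):
    """Forward-Euler discretisation of m*x'' + c*x' + k*x = u with state [position, velocity]."""
    A = np.eye(2) + dt * np.array([[0.0, 1.0], [-k / m, -c / m]])
    B = dt * np.array([[0.0], [1.0 / m]])
    return A, B

A, B = spring_mass_damper()
K = dlqr_gain(A, B, Q=np.eye(2), R=np.eye(1))

# Roll out the closed loop from an initial displacement of 1.
x = np.array([1.0, 0.0])
for _ in range(2000):
    x = (A - B @ K) @ x
print("largest closed-loop eigenvalue magnitude:", np.abs(np.linalg.eigvals(A - B @ K)).max())
print("state norm after 2000 steps:", np.linalg.norm(x))
```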

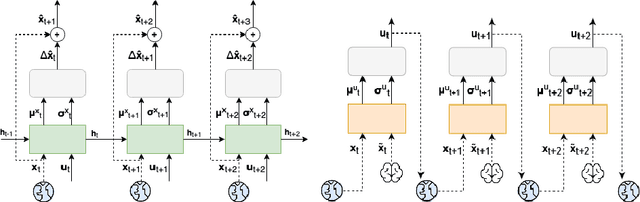

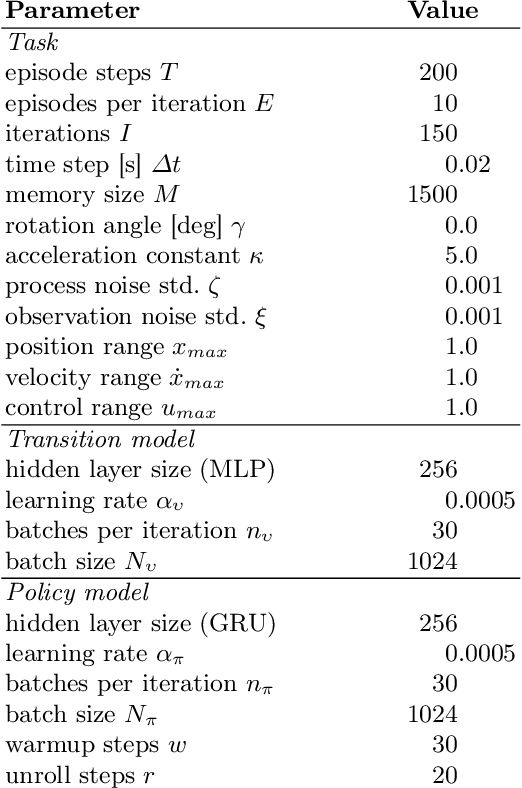

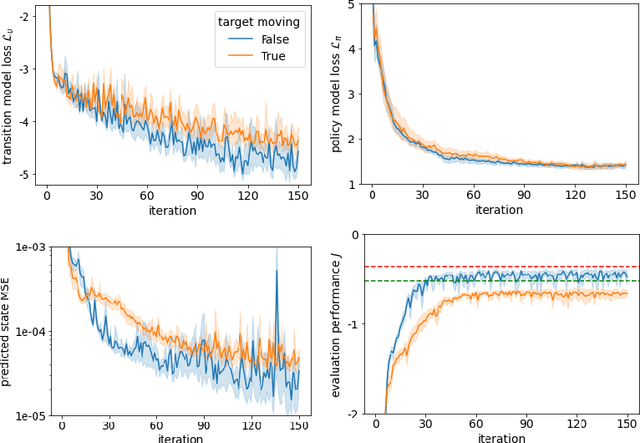

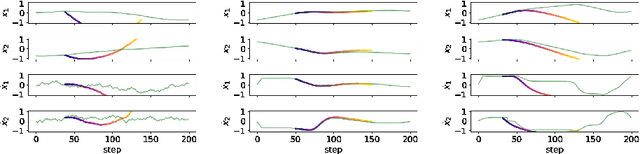

Learning Policies for Continuous Control via Transition Models

Sep 16, 2022

Abstract: It is doubtful that animals have perfect inverse models of their limbs (e.g., what muscle contraction must be applied to every joint to reach a particular location in space). However, in robot control, moving an arm's end-effector to a target position or along a target trajectory requires accurate forward and inverse models. Here we show that by learning the transition (forward) model from interaction, we can use it to drive the learning of an amortized policy. Hence, we revisit policy optimization in relation to the deep active inference framework and describe a modular neural network architecture that simultaneously learns the system dynamics from prediction errors and the stochastic policy that generates suitable continuous control commands to reach a desired reference position. We evaluated the model by comparing it against the baseline of a linear quadratic regulator, and conclude with additional steps to take toward human-like motor control.
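The idea of learning a transition (forward) model from interaction data can be shown in its simplest form. The sketch below is a deliberately reduced stand-in for the neural architecture described in the abstract: it assumes linear, noise-free dynamics $x_{t+1} = A x_t + B u_t$ and fits $A$ and $B$ by ordinary least squares from logged transitions. The function name `fit_forward_model` and the example matrices are hypothetical.

```python
import numpy as np

def fit_forward_model(states, actions, next_states):
    """Least-squares fit of x_next = A x + B u from rows of logged transitions."""
    X = np.hstack([states, actions])                  # shape (N, n_state + n_action)
    theta, *_ = np.linalg.lstsq(X, next_states, rcond=None)
    n = states.shape[1]
    return theta[:n].T, theta[n:].T                   # A_hat, B_hat

# Generate interaction data from an example system (values chosen arbitrarily).
rng = np.random.default_rng(0)
A_true = np.array([[1.0, 0.01], [-0.01, 0.999]])
B_true = np.array([[0.0], [0.01]])
states = rng.standard_normal((200, 2))
actions = rng.standard_normal((200, 1))
next_states = states @ A_true.T + actions @ B_true.T

A_hat, B_hat = fit_forward_model(states, actions, next_states)
print(np.abs(A_hat - A_true).max(), np.abs(B_hat - B_true).max())
```

Once such a model is available, it can be used to predict the outcome of candidate actions, which is the role the learned transition model plays in driving policy learning.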
