Jean Vanderdonckt

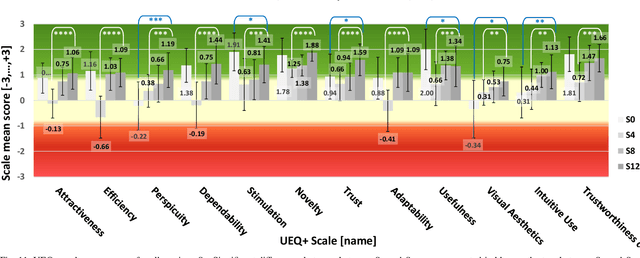

Context-aware Adaptive Visualizations for Critical Decision Making

Nov 14, 2025

Abstract:Effective decision-making often relies on timely insights from complex visual data. While Information Visualization (InfoVis) dashboards can support this process, they rarely adapt to users' cognitive state, and less so in real time. We present Symbiotik, an intelligent, context-aware adaptive visualization system that leverages neurophysiological signals to estimate mental workload (MWL) and dynamically adapt visual dashboards using reinforcement learning (RL). Through a user study with 120 participants and three visualization types, we demonstrate that our approach improves task performance and engagement. Symbiotik offers a scalable, real-time adaptation architecture, and a validated methodology for neuroadaptive user interfaces.

Multiple Choice Questions and Large Languages Models: A Case Study with Fictional Medical Data

Jun 04, 2024

Abstract:Large Language Models (LLMs) like ChatGPT demonstrate significant potential in the medical field, often evaluated using multiple-choice questions (MCQs) similar to those found on the USMLE. Despite their prevalence in medical education, MCQs have limitations that might be exacerbated when assessing LLMs. To evaluate the effectiveness of MCQs in assessing the performance of LLMs, we developed a fictional medical benchmark focused on a non-existent gland, the Glianorex. This approach allowed us to isolate the knowledge of the LLM from its test-taking abilities. We used GPT-4 to generate a comprehensive textbook on the Glianorex in both English and French and developed corresponding multiple-choice questions in both languages. We evaluated various open-source, proprietary, and domain-specific LLMs using these questions in a zero-shot setting. The models achieved average scores around 67%, with minor performance differences between larger and smaller models. Performance was slightly higher in English than in French. Fine-tuned medical models showed some improvement over their base versions in English but not in French. The uniformly high performance across models suggests that traditional MCQ-based benchmarks may not accurately measure LLMs' clinical knowledge and reasoning abilities, instead highlighting their pattern recognition skills. This study underscores the need for more robust evaluation methods to better assess the true capabilities of LLMs in medical contexts.

Awareness in robotics: An early perspective from the viewpoint of the EIC Pathfinder Challenge "Awareness Inside"

Feb 14, 2024

Abstract:Consciousness has been historically a heavily debated topic in engineering, science, and philosophy. On the contrary, awareness had less success in raising the interest of scholars in the past. However, things are changing as more and more researchers are getting interested in answering questions concerning what awareness is and how it can be artificially generated. The landscape is rapidly evolving, with multiple voices and interpretations of the concept being conceived and techniques being developed. The goal of this paper is to summarize and discuss the ones among these voices connected with projects funded by the EIC Pathfinder Challenge called "Awareness Inside", a nonrecurring call for proposals within Horizon Europe designed specifically for fostering research on natural and synthetic awareness. In this perspective, we dedicate special attention to challenges and promises of applying synthetic awareness in robotics, as the development of mature techniques in this new field is expected to have a special impact on generating more capable and trustworthy embodied systems.

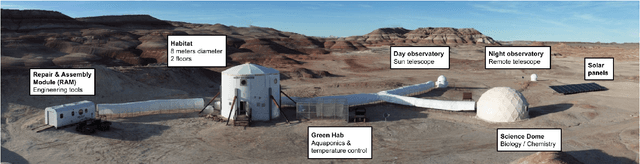

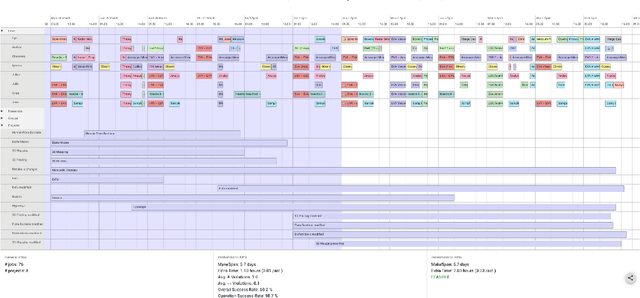

Enabling Astronaut Self-Scheduling using a Robust Advanced Modelling and Scheduling system: an assessment during a Mars analogue mission

Jan 14, 2023

Abstract:Human long duration exploration missions (LDEMs) raise a number of technological challenges. This paper addresses the question of the crew autonomy: as the distances increase, the communication delays and constraints tend to prevent the astronauts from being monitored and supported by a real time ground control. Eventually, future planetary missions will necessarily require a form of astronaut self-scheduling. We study the usage of a computer decision-support tool by a crew of analog astronauts, during a Mars simulation mission conducted at the Mars Desert Research Station (MDRS, Mars Society) in Utah. The proposed tool, called Romie, belongs to the new category of Robust Advanced Modelling and Scheduling (RAMS) systems. It allows the crew members (i) to visually model their scientific objectives and constraints, (ii) to compute near-optimal operational schedules while taking uncertainty into account, (iii) to monitor the execution of past and current activities, and (iv) to modify scientific objectives/constraints w.r.t. unforeseen events and opportunistic science. In this study, we empirically measure how the astronauts, who are novice planners, perform at using such a tool when self-scheduling under the realistic assumptions of a simulated Martian planetary habitat.
