Alejandra Ciria

World Models and Predictive Coding for Cognitive and Developmental Robotics: Frontiers and Challenges

Jan 14, 2023

Abstract: Creating autonomous robots that can actively explore the environment, acquire knowledge and learn skills continuously is the ultimate achievement envisioned in cognitive and developmental robotics. Their learning processes should be based on interactions with their physical and social world in the manner of human learning and cognitive development. Based on this context, in this paper, we focus on the two concepts of world models and predictive coding. Recently, world models have attracted renewed attention as a topic of considerable interest in artificial intelligence. Cognitive systems learn world models to better predict future sensory observations and optimize their policies, i.e., controllers. Alternatively, in neuroscience, predictive coding proposes that the brain continuously predicts its inputs and adapts to model its own dynamics and control behavior in its environment. Both ideas may be considered as underpinning the cognitive development of robots and humans capable of continual or lifelong learning. Although many studies have been conducted on predictive coding in cognitive robotics and neurorobotics, the relationship between world model-based approaches in AI and predictive coding in robotics has rarely been discussed. Therefore, in this paper, we clarify the definitions, relationships, and status of current research on these topics, as well as missing pieces of world models and predictive coding in conjunction with crucially related concepts such as the free-energy principle and active inference in the context of cognitive and developmental robotics. Furthermore, we outline the frontiers and challenges involved in world models and predictive coding toward the further integration of AI and robotics, as well as the creation of robots with real cognitive and developmental capabilities in the future.

Predictive Processing in Cognitive Robotics: a Review

Jan 22, 2021

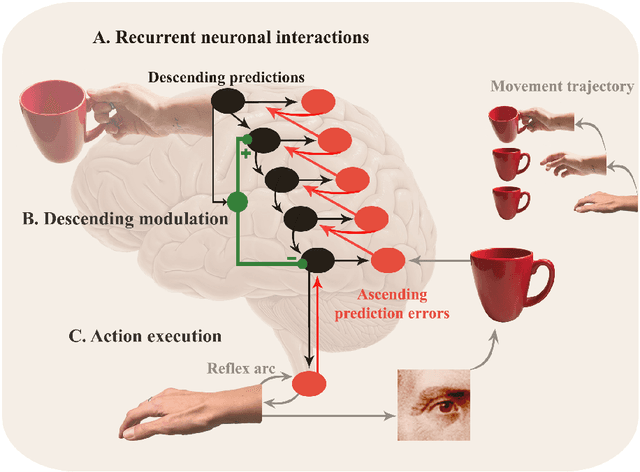

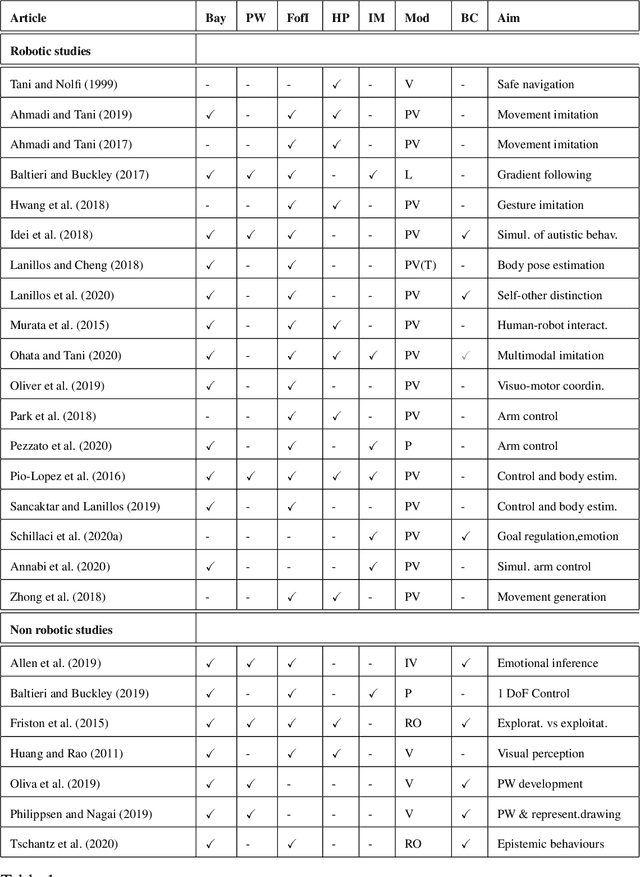

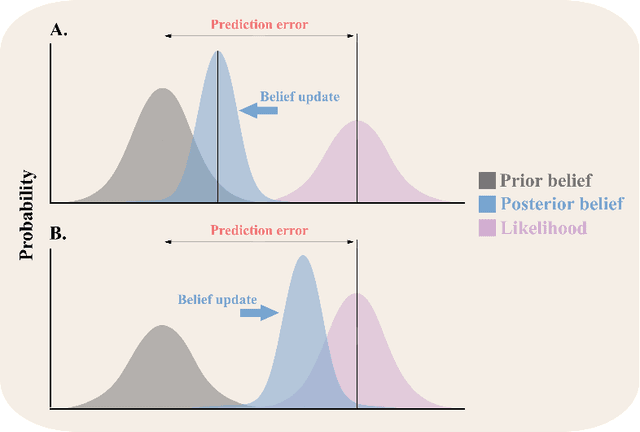

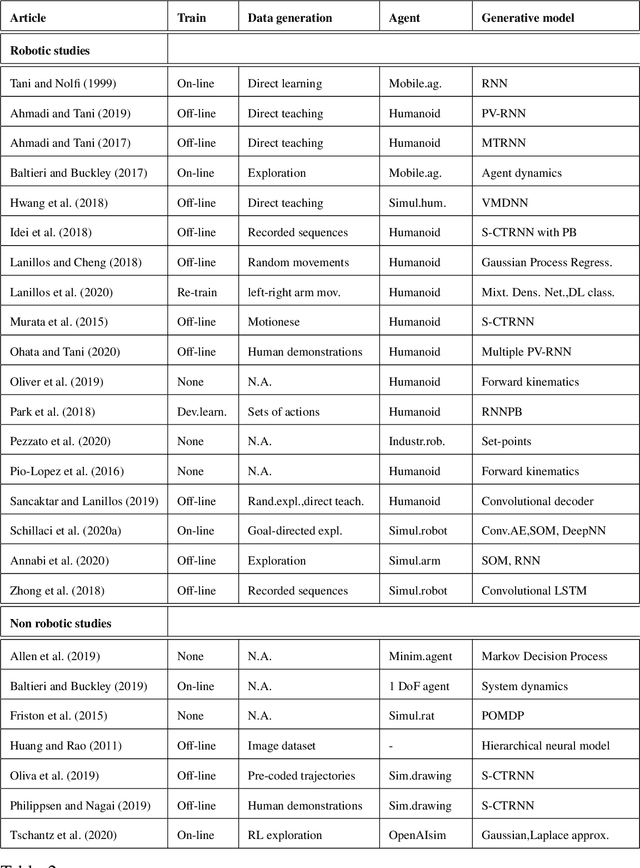

Abstract: Predictive processing has become an influential framework in cognitive sciences. This framework turns the traditional view of perception upside down, claiming that the main flow of information processing is realized in a top-down hierarchical manner. Furthermore, it aims at unifying perception, cognition, and action as a single inferential process. However, in the related literature, the predictive processing framework and its associated schemes such as predictive coding, active inference, perceptual inference, free-energy principle, tend to be used interchangeably. In the field of cognitive robotics there is no clear-cut distinction on which schemes have been implemented and under which assumptions. In this paper, working definitions are set with the main aim of analyzing the state of the art in cognitive robotics research working under the predictive processing framework as well as some related non-robotic models. The analysis suggests that, first, both research in cognitive robotics implementations and non-robotic models needs to be extended to the study of how multiple exteroceptive modalities can be integrated into prediction error minimization schemes. Second, a relevant distinction found here is that cognitive robotics implementations tend to emphasize the learning of a generative model, while in non-robotics models it is almost absent. Third, despite the relevance for active inference, few cognitive robotics implementations examine the issues around control and whether it should result from the substitution of inverse models with proprioceptive predictions. Finally, limited attention has been placed on precision weighting and the tracking of prediction error dynamics. These mechanisms should help to explore more complex behaviors and tasks in cognitive robotics research under the predictive processing framework.

Tracking Emotions: Intrinsic Motivation Grounded on Multi-Level Prediction Error Dynamics

Jul 29, 2020

Abstract: How do cognitive agents decide what is the relevant information to learn and how goals are selected to gain this knowledge? Cognitive agents need to be motivated to perform any action. We discuss that emotions arise when differences between expected and actual rates of progress towards a goal are experienced. Therefore, the tracking of prediction error dynamics has a tight relationship with emotions. Here, we suggest that the tracking of prediction error dynamics allows an artificial agent to be intrinsically motivated to seek new experiences but constrained to those that generate reducible prediction error. We present an intrinsic motivation architecture that generates behaviors towards self-generated and dynamic goals and that regulates goal selection and the balance between exploitation and exploration through multi-level monitoring of prediction error dynamics. This new architecture modulates exploration noise and leverages computational resources according to the dynamics of the overall performance of the learning system. Additionally, it establishes a possible solution to the temporal dynamics of goal selection. The results of the experiments presented here suggest that this architecture outperforms intrinsic motivation approaches where exploratory noise and goals are fixed and a greedy strategy is applied.
