Verena V. Hafner

From Human to Robot Interactions: A Circular Approach towards Trustworthy Social Robots

Nov 14, 2023

Abstract:Human trust research uncovered important catalysts for trust building between interaction partners such as appearance or cognitive factors. The introduction of robots into social interactions calls for a reevaluation of these findings and also brings new challenges and opportunities. In this paper, we suggest approaching trust research in a circular way by drawing from human trust findings, validating them and conceptualizing them for robots, and finally using the precise manipulability of robots to explore previously less-explored areas of trust formation to generate new hypotheses for trust building between agents.

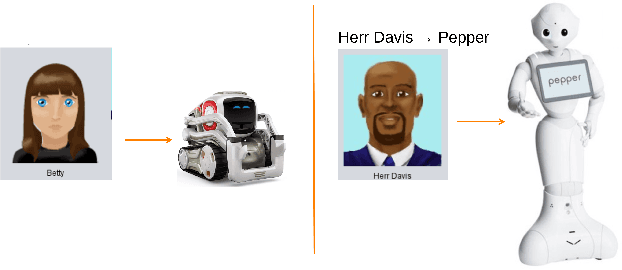

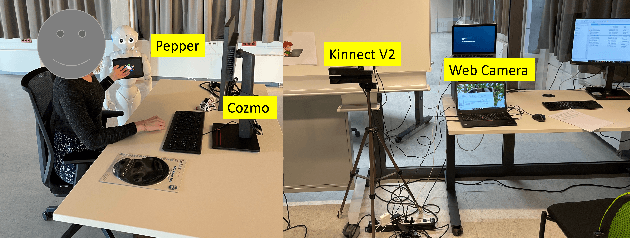

Challenges in Designing Teacher Robots with Motivation Based Gestures

Feb 08, 2023

Abstract:Humanoid robots are increasingly being integrated into learning contexts to assist teaching and learning. However, challenges remain in how to design and incorporate such robots in an educational context. Because an important part of teaching is monitoring the motivational and emotional state of the learner and adapting the interaction style and learning content accordingly, in this paper we discuss the role of gestures displayed by a humanoid robot (i.e., Pepper robot) in a learning and teaching context and present our ongoing research on designing and developing a teacher robot.

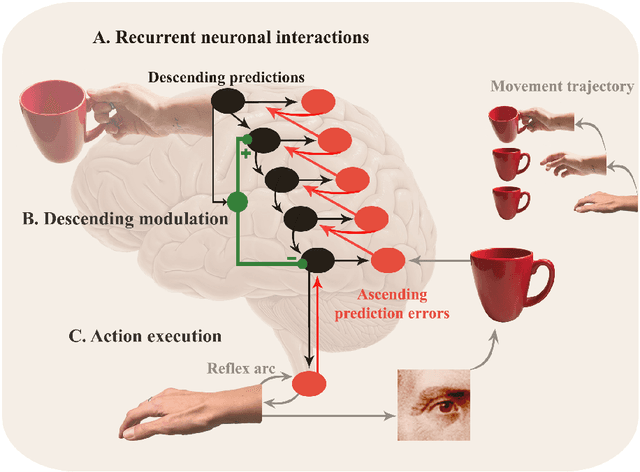

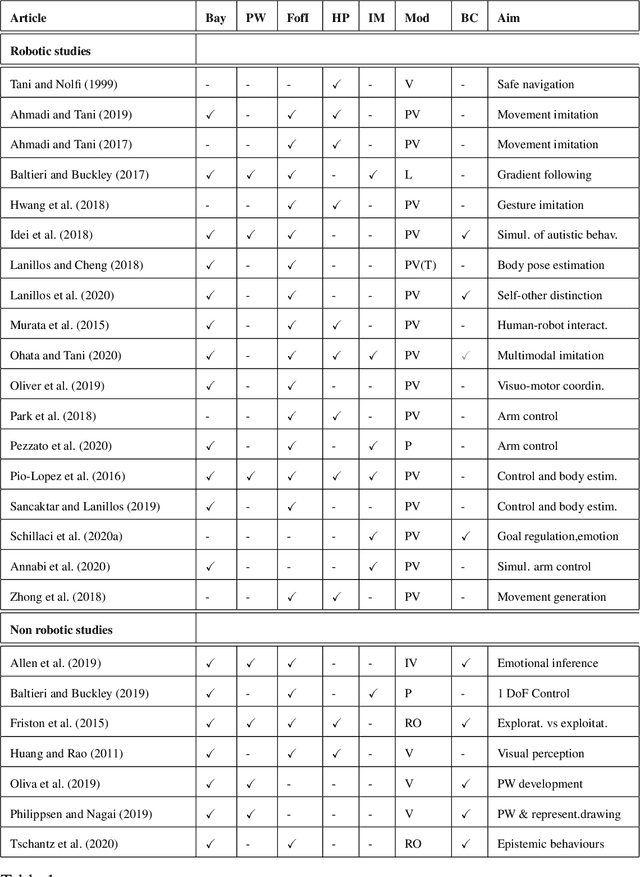

Predictive Processing in Cognitive Robotics: a Review

Jan 22, 2021

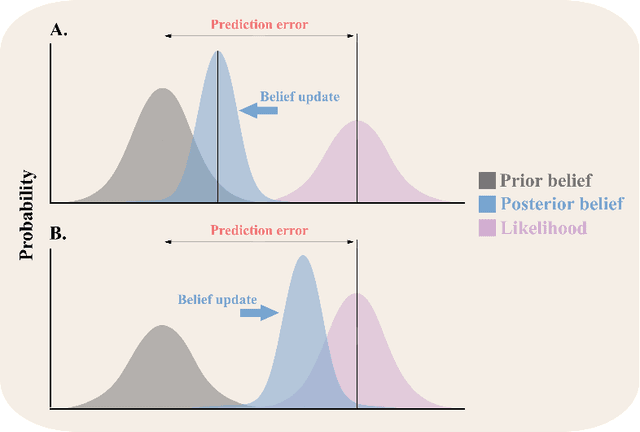

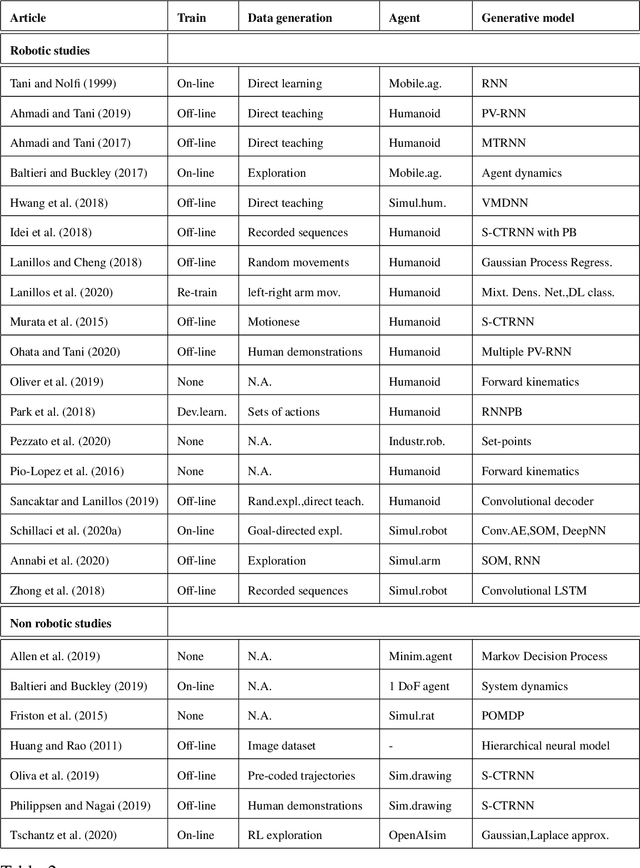

Abstract:Predictive processing has become an influential framework in cognitive sciences. This framework turns the traditional view of perception upside down, claiming that the main flow of information processing is realized in a top-down hierarchical manner. Furthermore, it aims at unifying perception, cognition, and action as a single inferential process. However, in the related literature, the predictive processing framework and its associated schemes such as predictive coding, active inference, perceptual inference, free-energy principle, tend to be used interchangeably. In the field of cognitive robotics there is no clear-cut distinction on which schemes have been implemented and under which assumptions. In this paper, working definitions are set with the main aim of analyzing the state of the art in cognitive robotics research working under the predictive processing framework as well as some related non-robotic models. The analysis suggests that, first, both research in cognitive robotics implementations and non-robotic models needs to be extended to the study of how multiple exteroceptive modalities can be integrated into prediction error minimization schemes. Second, a relevant distinction found here is that cognitive robotics implementations tend to emphasize the learning of a generative model, while in non-robotics models it is almost absent. Third, despite the relevance for active inference, few cognitive robotics implementations examine the issues around control and whether it should result from the substitution of inverse models with proprioceptive predictions. Finally, limited attention has been placed on precision weighting and the tracking of prediction error dynamics. These mechanisms should help to explore more complex behaviors and tasks in cognitive robotics research under the predictive processing framework.

A Multisensory Learning Architecture for Rotation-invariant Object Recognition

Sep 14, 2020

Abstract:This study presents a multisensory machine learning architecture for object recognition by employing a novel dataset that was constructed with the iCub robot, which is equipped with three cameras and a depth sensor. The proposed architecture combines convolutional neural networks to form representations (i.e., features) for grayscaled color images and a multi-layer perceptron algorithm to process depth data. To this end, we aimed to learn joint representations of different modalities (e.g., color and depth) and employ them for recognizing objects. We evaluate the performance of the proposed architecture by benchmarking the results obtained with the models trained separately with the input of different sensors and a state-of-the-art data fusion technique, namely decision level fusion. The results show that our architecture improves the recognition accuracy compared with the models that use inputs from a single modality and decision level multimodal fusion method.
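For readers unfamiliar with the baseline named in this abstract, decision-level fusion can be sketched as combining the class probabilities of separately trained single-modality classifiers. The following is a minimal illustration only, not the authors' implementation: the function name `decision_level_fusion`, the equal weights, and the example probabilities are all assumptions introduced here.

```python
import numpy as np

def decision_level_fusion(prob_color, prob_depth, weights=(0.5, 0.5)):
    """Fuse per-class probabilities from two single-modality classifiers.

    Hypothetical helper: each input has shape (n_samples, n_classes) and
    holds, e.g., softmax outputs of a color model and a depth model.
    """
    prob_color = np.asarray(prob_color, dtype=float)
    prob_depth = np.asarray(prob_depth, dtype=float)
    # Weighted average of the two decisions; weights are an assumption.
    fused = weights[0] * prob_color + weights[1] * prob_depth
    # The predicted class is the one with the highest fused probability.
    return fused.argmax(axis=-1), fused

# Made-up outputs for 2 samples and 3 object classes.
color_probs = [[0.6, 0.3, 0.1], [0.2, 0.5, 0.3]]
depth_probs = [[0.2, 0.7, 0.1], [0.1, 0.2, 0.7]]
labels, fused = decision_level_fusion(color_probs, depth_probs)
print(labels)
```

By contrast, the architecture described in the abstract learns a joint representation of the modalities before classification rather than merging the final decisions.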

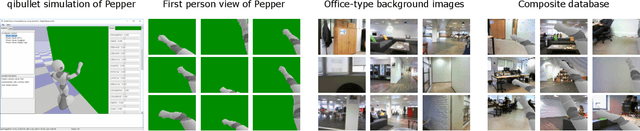

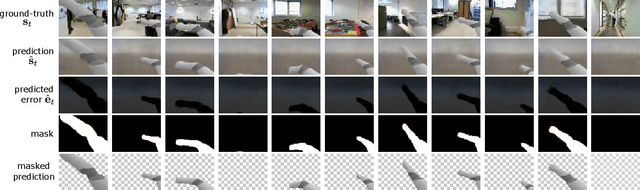

Self-supervised Body Image Acquisition Using a Deep Neural Network for Sensorimotor Prediction

Jun 03, 2019

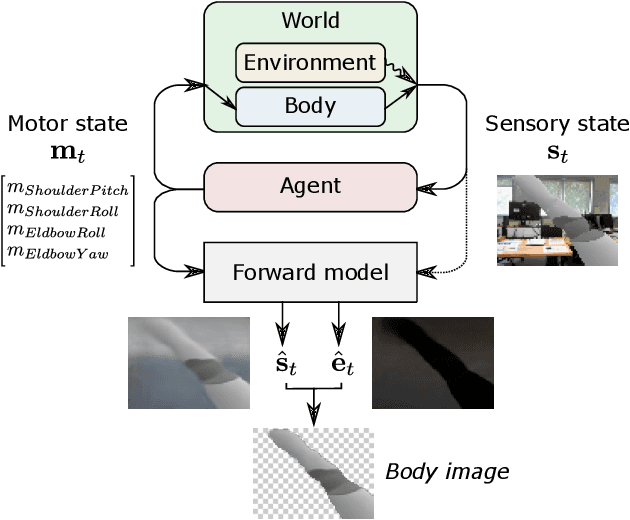

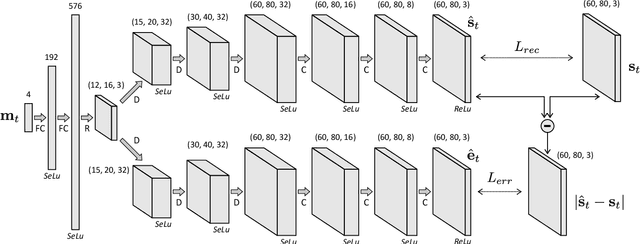

Abstract:This work investigates how a naive agent can acquire its own body image in a self-supervised way, based on the predictability of its sensorimotor experience. Our working hypothesis is that, due to its temporal stability, an agent's body produces more consistent sensory experiences than the environment, which exhibits a greater variability. Given its motor experience, an agent can thus reliably predict what appearance its body should have. This intrinsic predictability can be used to automatically isolate the body image from the rest of the environment. We propose a two-branches deconvolutional neural network to predict the visual sensory state associated with an input motor state, as well as the prediction error associated with this input. We train the network on a dataset of first-person images collected with a simulated Pepper robot, and show how the network outputs can be used to automatically isolate its visible arm from the rest of the environment. Finally, the quality of the body image produced by the network is evaluated.

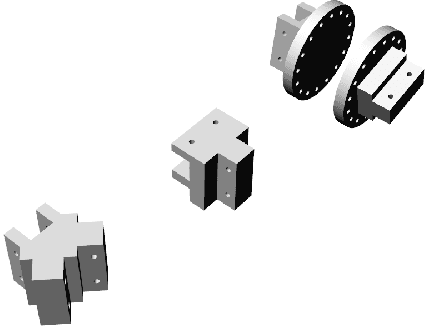

A Customisable Underwater Robot

Jul 20, 2017

Abstract:We present a model of a configurable underwater drone, whose parts are optimised for 3D printing processes. We show how - through the use of printable adapters - several thrusters and ballast configurations can be implemented, allowing different maneuvering possibilities. After introducing the model and illustrating a set of possible configurations, we present a functional prototype based on open source hardware and software solutions. The prototype has been successfully tested in several dives in rivers and lakes around Berlin. The reliability of the printed models has been tested only in relatively shallow waters. However, we strongly believe that their availability as freely downloadable models will motivate the general public to build and to test underwater drones, thus speeding up the development of innovative solutions and applications. The models and their documentation will be available for download at the following link: https://adapt.informatik.hu-berlin.de/schillaci/underwater.html.

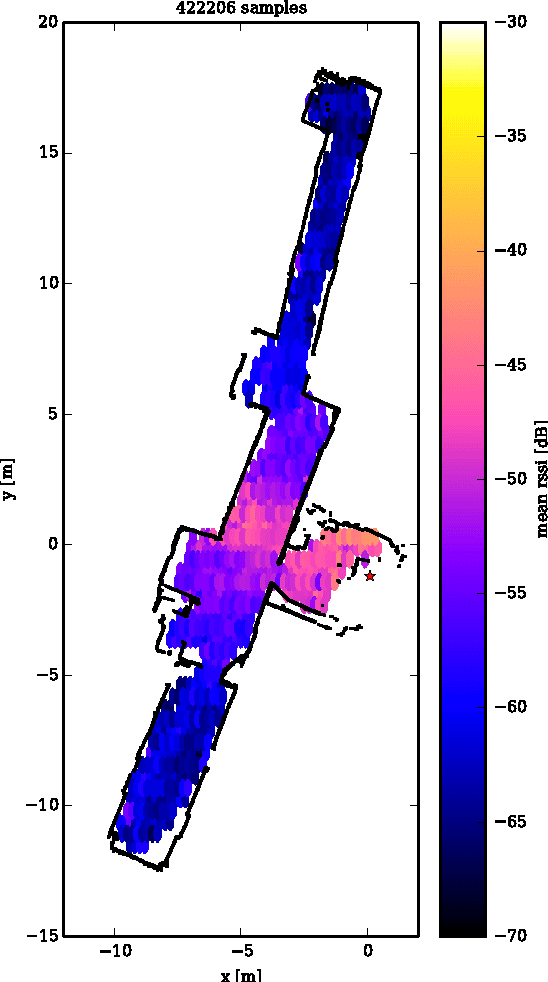

Active exploration of sensor networks from a robotics perspective

Nov 17, 2015

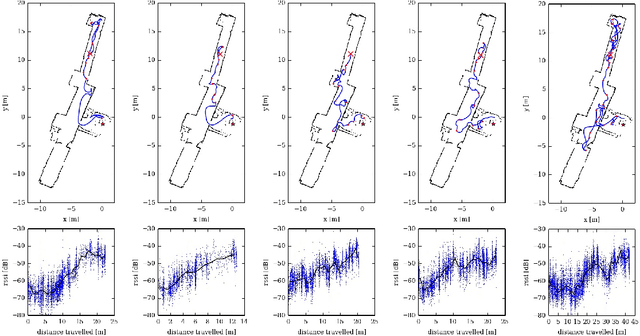

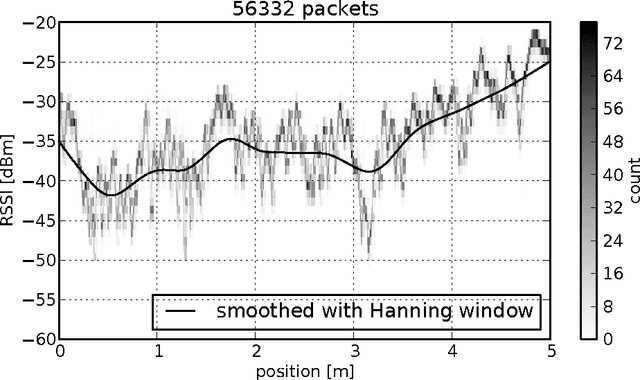

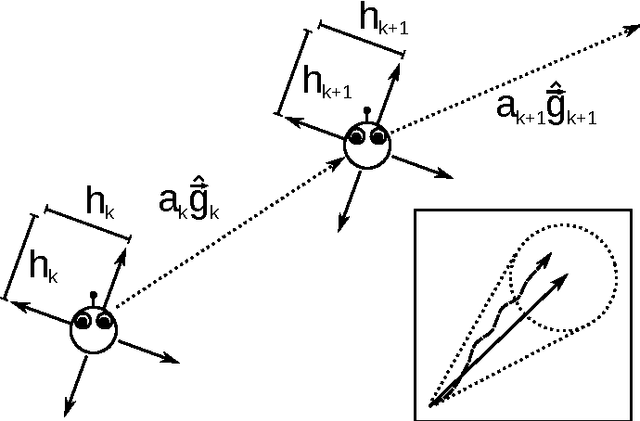

Abstract:Traditional algorithms for robots that need to integrate into a wireless network often focus on one specific task. In this work we want to develop simple, adaptive and reusable algorithms for real-world applications for this scenario. Starting with the most basic task for mobile wireless network nodes, finding the position of another node, we introduce an algorithm able to solve this task. We then show how this algorithm can readily be employed to solve a large number of other related tasks like finding the optimal position to bridge two static network nodes. For this we first introduce a meta-algorithm inspired by autonomous robot learning strategies and the concept of internal models which yields a class of source seeking algorithms for mobile nodes. The effectiveness of this algorithm is demonstrated in real-world experiments using a physical mobile robot and standard 802.11 wireless LAN in an office environment. We also discuss the differences from conventional algorithms and give the robotics perspective on this class of algorithms. Then we proceed to show how more complex tasks, which might be encountered by mobile nodes, can be encoded in the same framework and how the introduced algorithm can solve them. These tasks can be direct (cross layer) optimization tasks or can also encode more complex tasks like bridging two network nodes. We choose the bridging scenario as an example, implemented on a real physical robot, and show how the robot can solve it in a real-world experiment.

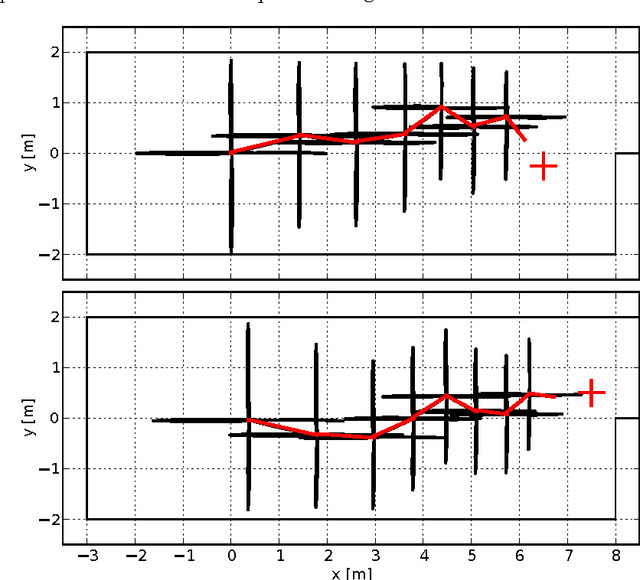

Gradient-based Taxis Algorithms for Network Robotics

Sep 26, 2014

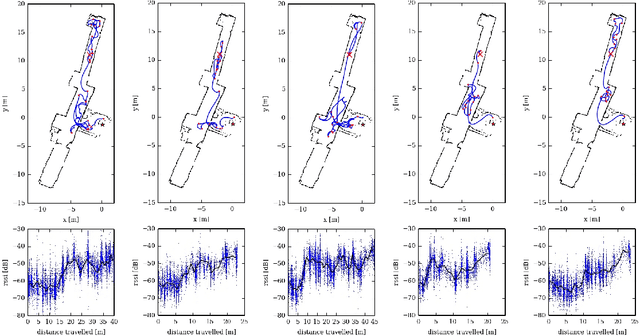

Abstract:Finding the physical location of a specific network node is a prototypical task for navigation inside a wireless network. In this paper, we consider in depth the implications of wireless communication as a measurement input of gradient-based taxis algorithms. We discuss how gradients can be measured and determine the errors of this estimation. We then introduce a gradient-based taxis algorithm as an example of a family of gradient-based, convergent algorithms and discuss its convergence in the context of network robotics. We also conduct an exemplary experiment to show how to overcome some of the specific problems related to network robotics. Finally, we show how to adapt this framework to more complex objectives.
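The general idea of gradient-based taxis toward a network node can be sketched as follows. This is a generic gradient-ascent illustration under stated assumptions, not the algorithm from the paper: the noise-free log-distance signal model, the central-difference gradient estimate, the decaying step size, and all names (`signal_strength`, `estimate_gradient`, `taxis`) are hypothetical.

```python
import numpy as np

def signal_strength(pos, source):
    """Assumed noise-free log-distance path-loss model (in dB)."""
    d = np.linalg.norm(np.asarray(pos, dtype=float) - source) + 1e-6
    return -20.0 * np.log10(d)

def estimate_gradient(pos, measure, delta=0.5):
    """Central-difference gradient estimate from measurements at displaced positions."""
    grad = np.zeros(2)
    for i in range(2):
        offset = np.zeros(2)
        offset[i] = delta
        grad[i] = (measure(pos + offset) - measure(pos - offset)) / (2 * delta)
    return grad

def taxis(start, measure, step_size=0.5, decay=0.98, iters=200):
    """Climb the measured signal gradient with a slowly shrinking step."""
    pos = np.array(start, dtype=float)
    for k in range(iters):
        grad = estimate_gradient(pos, measure)
        norm = np.linalg.norm(grad)
        if norm < 1e-9:  # flat estimate: no direction to follow
            break
        # Move a fixed-length step (shrinking over time) along the gradient.
        pos += step_size * decay**k * grad / norm
    return pos

source = np.array([2.0, 3.0])  # hypothetical location of the target node
final = taxis([10.0, -5.0], lambda p: signal_strength(p, source))
print(np.linalg.norm(final - source))
```

In a real wireless setting the measurements are noisy, which is why the abstract stresses determining the errors of the gradient estimate; this sketch ignores noise entirely.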
