Niels Pinkwart

Show me the numbers! -- Student-facing Interventions in Adaptive Learning Environments for German Spelling

Jun 13, 2023

Abstract: Since adaptive learning comes in many shapes and sizes, it is crucial to find out which adaptations can be meaningful for which areas of learning. Our work presents the results of an experiment conducted on an online platform for the acquisition of German spelling skills. We compared the traditional online learning platform to three different adaptive versions of the platform that implement machine learning-based student-facing interventions showing the personalized solution probability. We evaluate the different interventions with regard to the error rate, the number of early dropouts, and the users' competency. Our results show that the number of mistakes decreased in comparison to the control group. Additionally, an increased number of dropouts was found. We did not find any significant effects on the users' competency. We conclude that student-facing adaptive learning environments are effective in improving a person's error rate and should be chosen wisely to have a motivating impact.

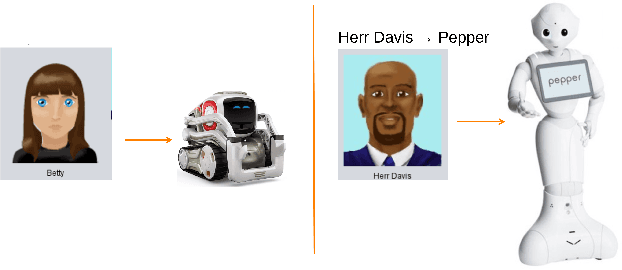

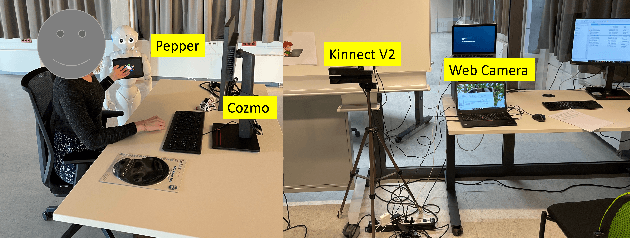

Challenges in Designing Teacher Robots with Motivation Based Gestures

Feb 08, 2023

Abstract: Humanoid robots are increasingly being integrated into learning contexts to assist teaching and learning. However, challenges remain regarding how to design and incorporate such robots in an educational context. Since an important part of teaching is monitoring the motivational and emotional state of the learner and adapting the interaction style and learning content accordingly, in this paper we discuss the role of gestures displayed by a humanoid robot (i.e., the Pepper robot) in a learning and teaching context and present our ongoing research on designing and developing a teacher robot.

Automatic Creativity Measurement in Scratch Programs Across Modalities

Nov 07, 2022

Abstract: Promoting creativity is considered an important goal of education, but creativity is notoriously hard to measure. In this paper, we make the journey from defining a formal measure of creativity that is efficiently computable to applying the measure in a practical domain. The measure is general and relies on core theoretical concepts in creativity theory, namely fluency, flexibility, and originality, integrating with prior cognitive science literature. We adapted the general measure for projects in the popular visual programming language Scratch. We designed a machine learning model for predicting the creativity of Scratch projects, trained and evaluated on human expert creativity assessments in an extensive user study. Our results show that opinions about creativity in Scratch varied widely across experts. The automatic creativity assessment aligned with the assessment of the human experts more than the experts agreed with each other. This is a first step in providing computational models for measuring creativity that can be applied to educational technologies, and to scale up the benefit of creativity education in schools.

The Continuous Hint Factory - Providing Hints in Vast and Sparsely Populated Edit Distance Spaces

Jun 30, 2018

Abstract: Intelligent tutoring systems can support students in solving multi-step tasks by providing hints regarding what to do next. However, engineering such next-step hints manually or via an expert model becomes infeasible if the space of possible states is too large. Therefore, several approaches have emerged to infer next-step hints automatically, relying on past students' data. In particular, the Hint Factory (Barnes & Stamper, 2008) recommends edits that are most likely to guide students from their current state towards a correct solution, based on what successful students in the past have done in the same situation. Still, the Hint Factory relies on student data being available for any state a student might visit while solving the task, which is not the case for some learning tasks, such as open-ended programming tasks. In this contribution we provide a mathematical framework for edit-based hint policies and, based on this theory, propose a novel hint policy to provide edit hints in vast and sparsely populated state spaces. In particular, we extend the Hint Factory by considering data of past students in all states which are similar to the student's current state and creating hints approximating the weighted average of all these reference states. Because the space of possible weighted averages is continuous, we call this approach the Continuous Hint Factory. In our experimental evaluation, we demonstrate that the Continuous Hint Factory can predict more accurately what capable students would do compared to existing prediction schemes on two learning tasks, especially in an open-ended programming task, and that the Continuous Hint Factory is comparable to existing hint policies at reproducing tutor hints on a simple UML diagram task.
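The idea of weighting past students' data by similarity to the current state can be illustrated with a small sketch. This is not the paper's implementation: the abstract does not specify the weighting scheme or the state representation, so the sketch assumes states are plain strings, uses Levenshtein distance as the edit distance, and uses a Gaussian weight with a hypothetical `bandwidth` parameter. The names `levenshtein`, `recommend`, `transitions`, and `candidates` are all illustrative. Among the candidate next states, it picks the one with the smallest weighted sum of squared distances to the next states that past students reached, which stands in for "closest to the weighted average".

```python
import math


def levenshtein(a, b):
    """Classic dynamic-programming edit distance between two strings."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,         # deletion
                            curr[j - 1] + 1,     # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def recommend(current, transitions, candidates, bandwidth=2.0):
    """Pick the candidate closest to the similarity-weighted next states.

    transitions: list of (state, next_state) pairs from past students.
    candidates:  possible next states for the current student.
    bandwidth:   assumed Gaussian width; larger values use more distant data.
    """
    if not transitions:
        raise ValueError("need at least one past transition")

    # Weight each past transition by how similar its source state is
    # to the current state (assumed Gaussian kernel on edit distance).
    weights = [
        math.exp(-levenshtein(current, state) ** 2 / (2 * bandwidth ** 2))
        for state, _ in transitions
    ]
    total = sum(weights)

    def cost(candidate):
        # Weighted sum of squared distances to the observed next states.
        return sum(
            (w / total) * levenshtein(candidate, nxt) ** 2
            for w, (_, nxt) in zip(weights, transitions)
        )

    return min(candidates, key=cost)


past = [("ab", "abc"), ("ab", "abc"), ("xyz", "xyzw")]
hint = recommend("ab", past, candidates=["abc", "abx", "a"])
```

Because every past transition contributes with a nonzero weight, the sketch still returns a recommendation when no past student visited exactly the current state, which is the situation the abstract describes for sparsely populated state spaces.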
