Alban Laflaquière

SBRE

Discovering and Exploiting Sparse Rewards in a Learned Behavior Space

Nov 02, 2021

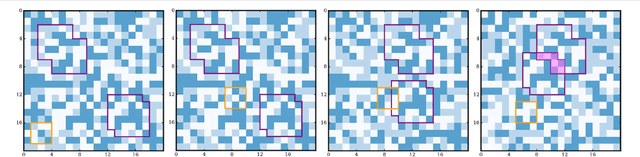

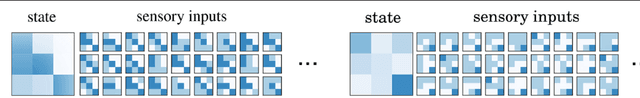

Abstract: Learning optimal policies in sparse-reward settings is difficult, as the learning agent has little to no feedback on the quality of its actions. In these situations, a good strategy is to focus on exploration, hopefully leading to the discovery of a reward signal to improve on. A learning algorithm capable of dealing with this kind of setting has to be able to (1) explore possible agent behaviors and (2) exploit any discovered reward. Efficient exploration algorithms have been proposed that require defining a behavior space, which associates each agent with its resulting behavior in a space that is known to be worth exploring. The need to define this space is a limitation of these algorithms. In this work, we introduce STAX, an algorithm designed to learn a behavior space on-the-fly and to explore it while efficiently optimizing any reward discovered. It does so by separating the exploration and learning of the behavior space from the exploitation of the reward through an alternating two-step process. In the first step, STAX builds a repertoire of diverse policies while learning a low-dimensional representation of the high-dimensional observations generated during policy evaluation. In the exploitation step, emitters are used to optimize the performance of the discovered rewarding solutions. Experiments conducted on three different sparse-reward environments show that STAX performs comparably to existing baselines while requiring much less prior information about the task, as it autonomously builds the behavior space.
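The alternating two-step process described in the abstract can be illustrated with a toy budget scheduler. This is only a sketch under stated assumptions, not STAX's actual scheduling rule: the function name `alternate_budget`, the fixed `chunk` size, and the phase labels are hypothetical and chosen for illustration.

```python
def alternate_budget(total_evals, chunk):
    """Split a global evaluation budget into alternating explore/exploit chunks.

    Hypothetical helper: the fixed chunk size is an assumption, not the
    rule used by the published algorithm.
    """
    if chunk <= 0:
        raise ValueError("chunk must be positive")
    schedule = []
    phase = "explore"  # assumed to start with exploration
    remaining = total_evals
    while remaining > 0:
        spent = min(chunk, remaining)  # the last chunk may be smaller
        schedule.append((phase, spent))
        remaining -= spent
        # Switch to the other phase for the next chunk.
        phase = "exploit" if phase == "explore" else "explore"
    return schedule


if __name__ == "__main__":
    for phase, evals in alternate_budget(total_evals=10, chunk=3):
        print(phase, evals)
```

In a real system the exploitation chunks would only be useful once a reward has been discovered; the sketch ignores that condition to keep the alternation itself visible.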

Sparse Reward Exploration via Novelty Search and Emitters

Feb 05, 2021

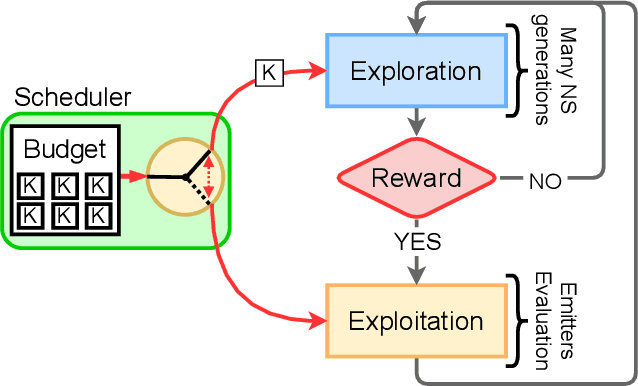

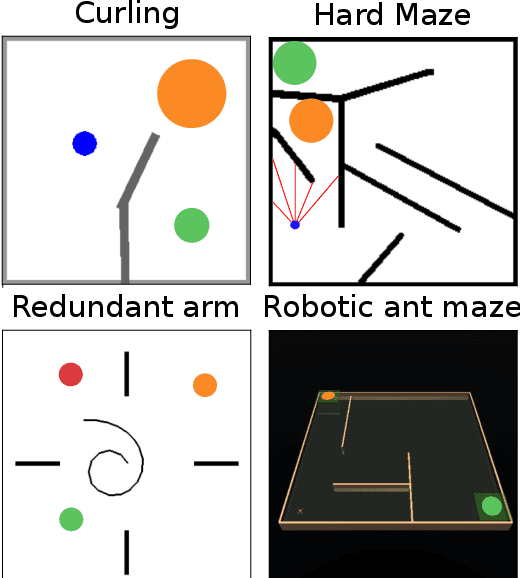

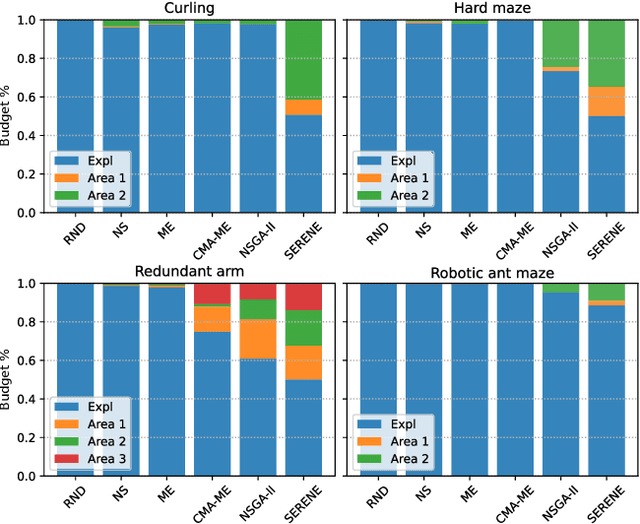

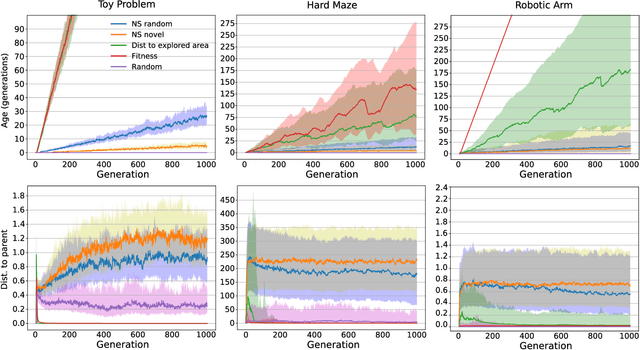

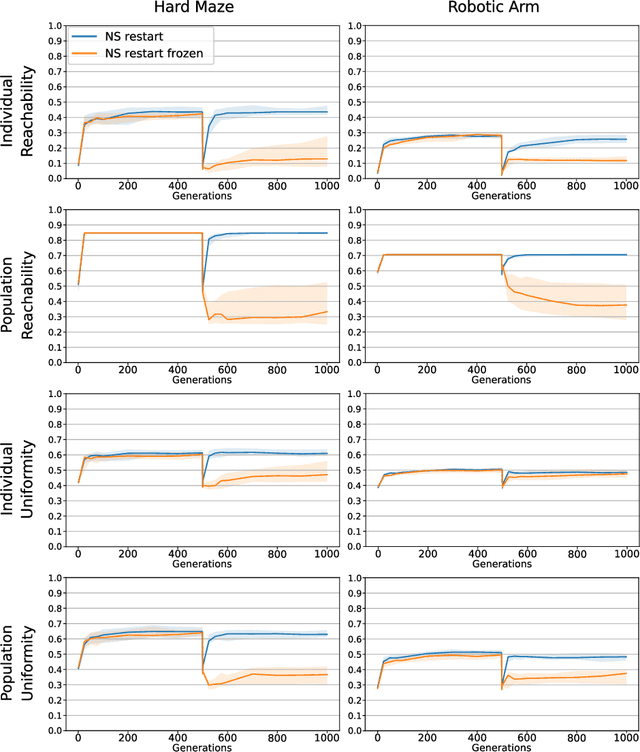

Abstract: Reward-based optimization algorithms require both exploration, to find rewards, and exploitation, to maximize performance. The need for efficient exploration is even more significant in sparse-reward settings, in which performance feedback is given sparingly, thus rendering it unsuitable for guiding the search process. In this work, we introduce the SparsE Reward Exploration via Novelty and Emitters (SERENE) algorithm, capable of efficiently exploring a search space, as well as optimizing rewards found in potentially disparate areas. Contrary to existing emitter-based approaches, SERENE separates the search space exploration and reward exploitation into two alternating processes. The first process performs exploration through Novelty Search, a divergent search algorithm. The second one exploits discovered reward areas through emitters, i.e., local instances of population-based optimization algorithms. A meta-scheduler allocates a global computational budget by alternating between the two processes, ensuring the discovery and efficient exploitation of disjoint reward areas. SERENE returns both a collection of diverse solutions covering the search space and a collection of high-performing solutions for each distinct reward area. We evaluate SERENE on various sparse-reward environments and show it compares favorably to existing baselines.
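As an illustration of the exploration process, Novelty Search is commonly formulated with a novelty score equal to the mean distance from a behavior descriptor to its k nearest neighbors. The sketch below follows that standard formulation; it is not taken from the SERENE implementation, and the names `novelty` and `most_novel`, the Euclidean metric, and the default `k` are assumptions.

```python
import math


def novelty(behavior, reference, k=3):
    """Mean Euclidean distance from `behavior` to its k nearest points in `reference`."""
    if not reference:
        return math.inf  # assumption: with nothing to compare to, treat as maximally novel
    distances = sorted(math.dist(behavior, other) for other in reference)
    nearest = distances[:k]
    return sum(nearest) / len(nearest)


def most_novel(population, archive, k=3):
    """Index of the population member with the highest novelty score.

    Each member is compared against the rest of the population plus the archive.
    """
    scores = []
    for i, behavior in enumerate(population):
        others = population[:i] + population[i + 1:] + archive
        scores.append(novelty(behavior, others, k))
    return max(range(len(scores)), key=lambda i: scores[i])


if __name__ == "__main__":
    population = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0)]
    archive = [(0.0, 0.1)]
    print(most_novel(population, archive))
```

Selecting on this score rather than on reward is what makes the search divergent: individuals are favored for landing in sparsely visited regions of the behavior space.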

Emergence of Spatial Coordinates via Exploration

Oct 29, 2020

Abstract: Spatial knowledge is a fundamental building block for the development of advanced perceptive and cognitive abilities. Traditionally, in robotics, the Euclidean (x,y,z) coordinate system and the agent's forward model are defined a priori. We show that a naive agent can autonomously build an internal coordinate system, with the same dimension and metric regularity as the external space, simply by learning to predict the outcome of sensorimotor transitions in a self-supervised way.

Novelty Search makes Evolvability Inevitable

May 13, 2020

Abstract: Evolvability is an important feature that impacts the ability of evolutionary processes to find interesting novel solutions and to deal with changing conditions of the problem to solve. The estimation of evolvability is not straightforward and is generally too expensive to be directly used as selective pressure in the evolutionary process. Indirectly promoting evolvability as a side effect of other selection pressures that are easier and faster to compute would thus be advantageous. In an unbounded behavior space, it has already been shown that evolvable individuals naturally appear and tend to be selected, as they are more likely to invade empty behavior niches. Evolvability is thus a natural byproduct of the search in this context. However, practical agents and environments often impose limits on the reachable behavior space. How do these boundaries impact evolvability? In this context, can evolvability still be promoted without explicitly rewarding it? We show that Novelty Search implicitly creates a pressure for high evolvability even in bounded behavior spaces, and explore the reasons for such behavior. More precisely, we show that, throughout the search, the dynamic evaluation of novelty rewards individuals that are very mobile in the behavior space, which in turn promotes evolvability.

Unsupervised Learning and Exploration of Reachable Outcome Space

Sep 13, 2019

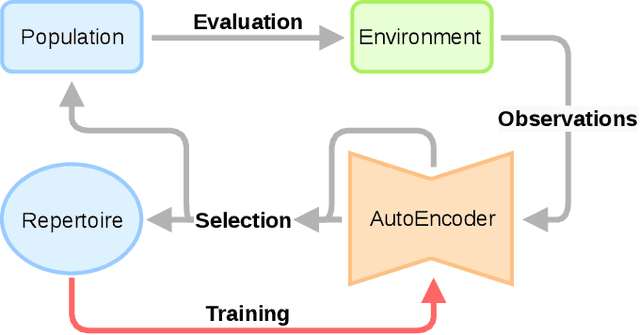

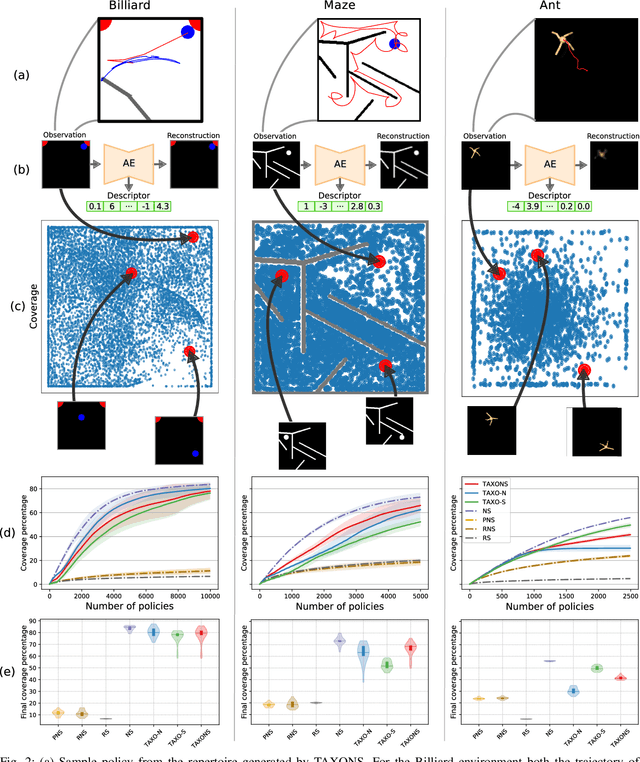

Abstract: Performing Reinforcement Learning in sparse-reward settings, with very little prior knowledge, is a challenging problem since there is no signal to properly guide the learning process. In such situations, a good search strategy is fundamental. At the same time, not having to adapt the algorithm to every single problem is very desirable. Here we introduce TAXONS, a Task Agnostic eXploration of Outcome spaces through Novelty and Surprise algorithm. Based on a population-based divergent-search approach, it learns a set of diverse policies directly from high-dimensional observations, without any task-specific information. TAXONS builds a repertoire of policies while training an autoencoder on the high-dimensional observation of the final state of the system to build a low-dimensional outcome space. The learned outcome space, combined with the reconstruction error, is used to drive the search for new policies. Results show that TAXONS can find a diverse set of controllers, covering a good part of the ground-truth outcome space, while having no information about such space.
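The role of the reconstruction error as a "surprise" signal can be sketched as follows. This is a minimal illustration, not the TAXONS code: the autoencoder itself is not implemented (its reconstructions are assumed to be given), mean squared error is an assumed choice of error measure, and the names `reconstruction_error` and `rank_by_surprise` are hypothetical.

```python
def reconstruction_error(observation, reconstruction):
    """Mean squared error between an observation and its reconstruction."""
    if len(observation) != len(reconstruction):
        raise ValueError("observation and reconstruction must have the same length")
    squared = [(o - r) ** 2 for o, r in zip(observation, reconstruction)]
    return sum(squared) / len(squared)


def rank_by_surprise(observations, reconstructions):
    """Indices of policies sorted from most to least surprising.

    A high reconstruction error suggests the autoencoder has rarely seen
    that kind of final observation, so the policy that produced it is
    treated as more interesting to keep.
    """
    errors = [
        reconstruction_error(obs, rec)
        for obs, rec in zip(observations, reconstructions)
    ]
    return sorted(range(len(errors)), key=lambda i: errors[i], reverse=True)


if __name__ == "__main__":
    observations = [[0.0, 0.0], [1.0, 1.0]]
    reconstructions = [[0.0, 0.0], [0.0, 0.0]]  # placeholder autoencoder outputs
    print(rank_by_surprise(observations, reconstructions))
```

A novelty score computed on the encoder's low-dimensional outputs would be the complementary signal; how the two are combined is left open here.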

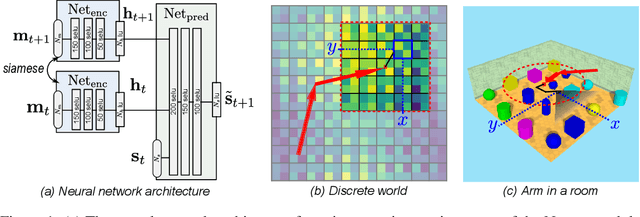

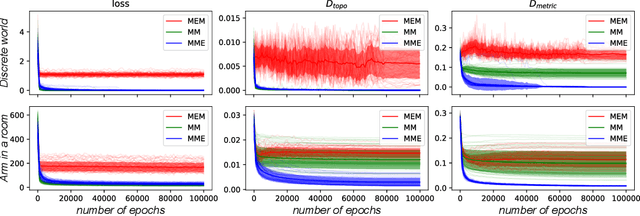

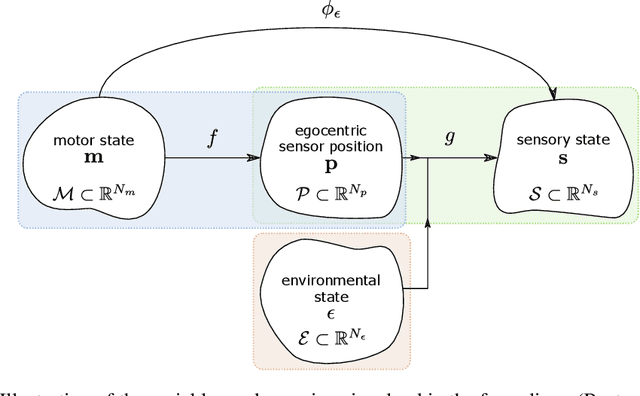

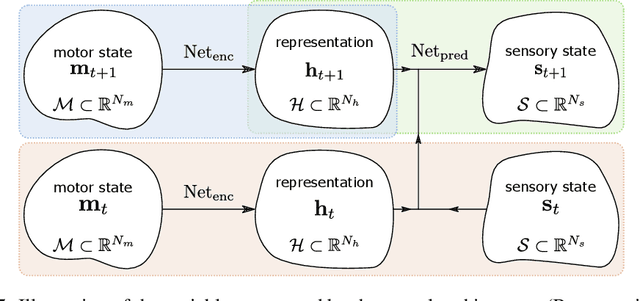

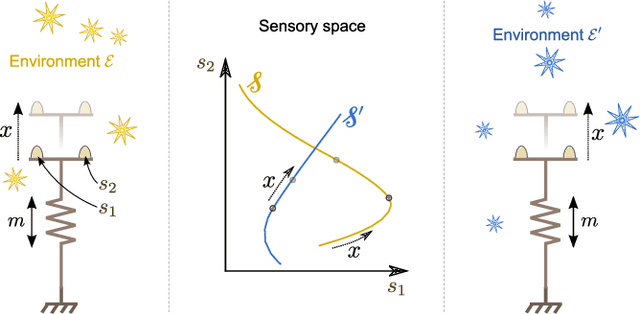

Unsupervised Emergence of Egocentric Spatial Structure from Sensorimotor Prediction

Jun 04, 2019

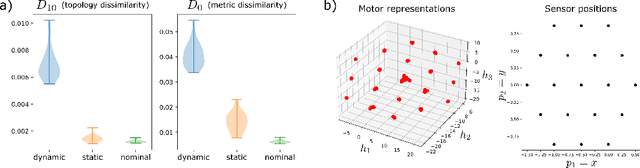

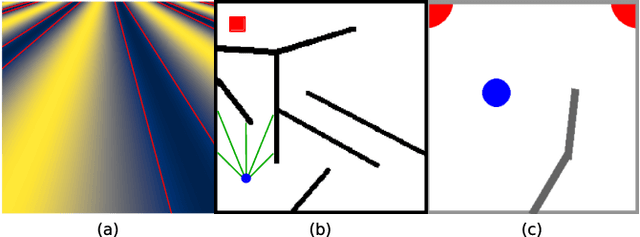

Abstract: Despite its omnipresence in robotics applications, the nature of spatial knowledge and the mechanisms that underlie its emergence in autonomous agents are still poorly understood. Recent theoretical works suggest that the Euclidean structure of space induces invariants in an agent's raw sensorimotor experience. We hypothesize that capturing these invariants is beneficial for sensorimotor prediction and that, under certain exploratory conditions, a motor representation capturing the structure of the external space should emerge as a byproduct of learning to predict future sensory experiences. We propose a simple sensorimotor predictive scheme, apply it to different agents and types of exploration, and evaluate the pertinence of these hypotheses. We show that a naive agent can capture the topology and metric regularity of its sensor's position in an egocentric spatial frame without any a priori knowledge or extraneous supervision.

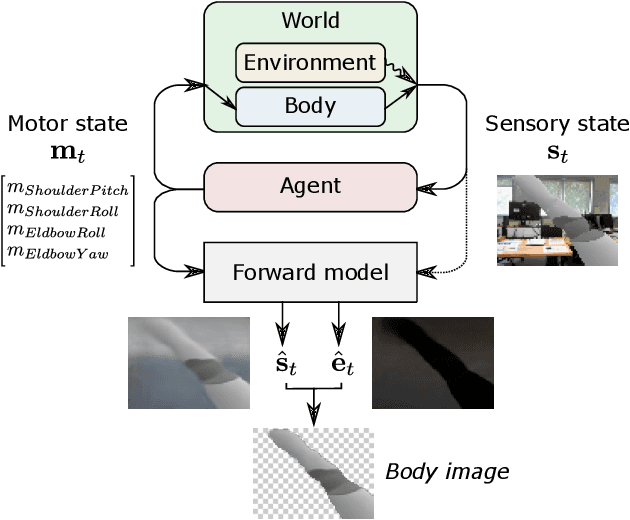

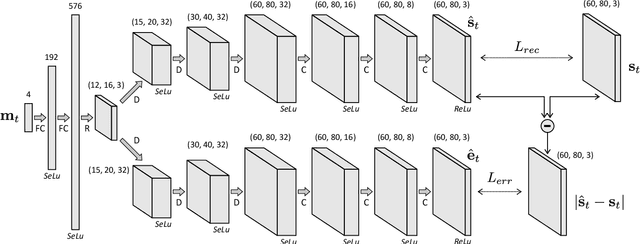

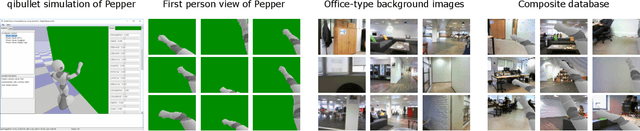

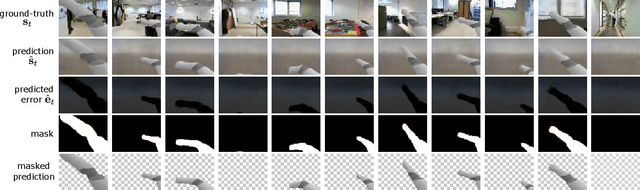

Self-supervised Body Image Acquisition Using a Deep Neural Network for Sensorimotor Prediction

Jun 03, 2019

Abstract: This work investigates how a naive agent can acquire its own body image in a self-supervised way, based on the predictability of its sensorimotor experience. Our working hypothesis is that, due to its temporal stability, an agent's body produces more consistent sensory experiences than the environment, which exhibits greater variability. Given its motor experience, an agent can thus reliably predict what appearance its body should have. This intrinsic predictability can be used to automatically isolate the body image from the rest of the environment. We propose a two-branch deconvolutional neural network to predict the visual sensory state associated with an input motor state, as well as the prediction error associated with this input. We train the network on a dataset of first-person images collected with a simulated Pepper robot, and show how the network outputs can be used to automatically isolate its visible arm from the rest of the environment. Finally, the quality of the body image produced by the network is evaluated.

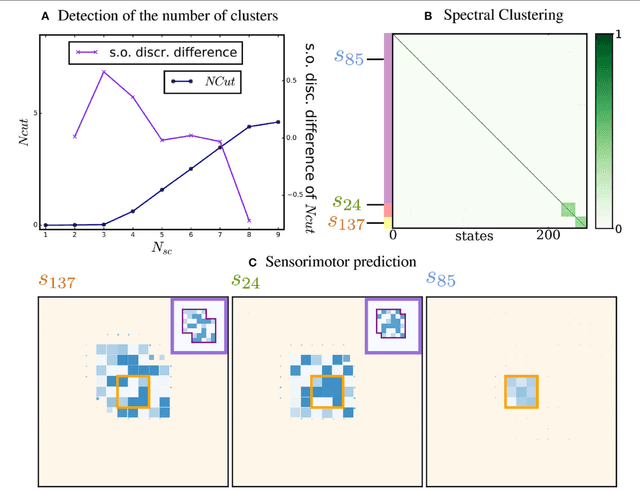

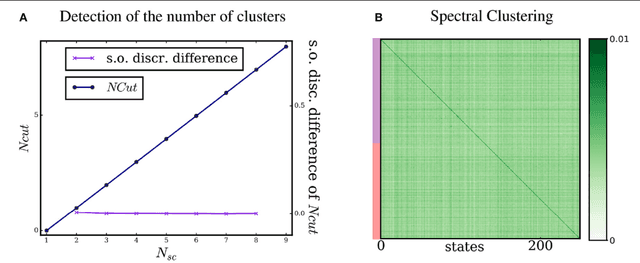

Identification of Invariant Sensorimotor Structures as a Prerequisite for the Discovery of Objects

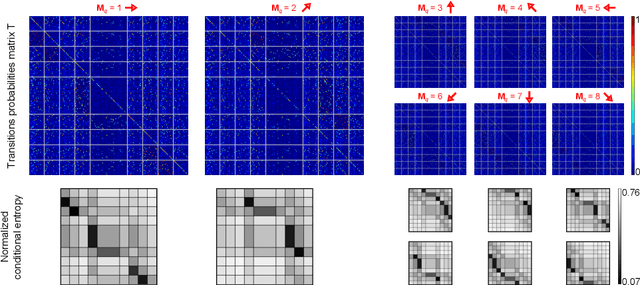

Oct 11, 2018

Abstract: Perceiving the surrounding environment in terms of objects is useful for any general-purpose intelligent agent. In this paper, we investigate a fundamental mechanism making object perception possible, namely the identification of spatio-temporally invariant structures in the sensorimotor experience of an agent. We take inspiration from the Sensorimotor Contingencies Theory to define a computational model of this mechanism through a sensorimotor, unsupervised and predictive approach. Our model is based on processing the unsupervised interaction of an artificial agent with its environment. We show how spatio-temporally invariant structures in the environment induce regularities in the sensorimotor experience of an agent, and how this agent, while building a predictive model of its sensorimotor experience, can capture them as densely connected subgraphs in a graph of sensory states connected by motor commands. Our approach is focused on elementary mechanisms, and is illustrated with a set of simple experiments in which an agent interacts with an environment. We show how the agent can build an internal model of moving but spatio-temporally invariant structures by performing a Spectral Clustering of the graph modeling its overall sensorimotor experiences. We systematically examine properties of the model, shedding light more globally on the specificities of the paradigm with respect to methods based on the supervised processing of collections of static images.

* 24 pages, 10 figures, published in Frontiers in Robotics and AI

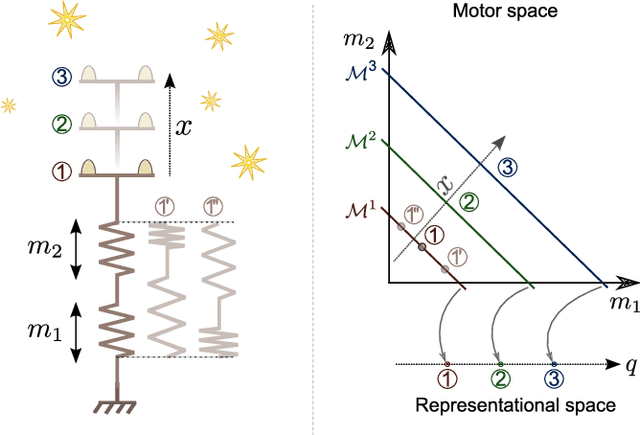

Learning agent's spatial configuration from sensorimotor invariants

Oct 03, 2018

Abstract: The design of robotic systems is largely dictated by our purely human intuition about how we perceive the world. This intuition has been proven incorrect with regard to a number of critical issues, such as visual change blindness. In order to develop truly autonomous robots, we must step away from this intuition and let robotic agents develop their own way of perceiving. The robot should start from scratch and gradually develop perceptual notions, under no prior assumptions, exclusively by looking into its sensorimotor experience and identifying repetitive patterns and invariants. One of the most fundamental perceptual notions, space, cannot be an exception to this requirement. In this paper, we look into the prerequisites for the emergence of simplified spatial notions on the basis of a robot's sensorimotor flow. We show that the notion of space as environment-independent cannot be deduced solely from exteroceptive information, which is highly variable and is mainly determined by the contents of the environment. The environment-independent definition of space can be approached by looking into the functions that link the motor commands to changes in exteroceptive inputs. In a sufficiently rich environment, the kernels of these functions correspond uniquely to the spatial configuration of the agent's exteroceptors. We simulate a redundant robotic arm with a retina installed at its end-point and show how this agent can learn the configuration space of its retina. The resulting manifold has the topology of the Cartesian product of a plane and a circle, and corresponds to the planar position and orientation of the retina.
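For readers who prefer symbols, the topology named in the last sentence of the abstract can be written out. The notation below (the symbols $\mathcal{C}$, $x$, $y$, $\theta$) is introduced here for illustration and does not come from the paper:

$$
\mathcal{C} \;\cong\; \mathbb{R}^2 \times S^1, \qquad (x, y, \theta) \in \mathcal{C}, \quad (x, y) \in \mathbb{R}^2, \quad \theta \in S^1 ,
$$

where $(x, y)$ is the planar position of the retina and $\theta$ its orientation. The circle factor $S^1$ reflects the fact that orientations $\theta$ and $\theta + 2\pi$ describe the same configuration.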

* 26 pages, 5 images, published in Robotics and Autonomous Systems

Grounding the Experience of a Visual Field through Sensorimotor Contingencies

Oct 03, 2018

Abstract: Artificial perception is traditionally handled by hand-designing task-specific algorithms. However, a truly autonomous robot should develop perceptive abilities on its own, by interacting with its environment and adapting to new situations. The sensorimotor contingencies theory proposes to ground the development of those perceptive abilities in the way the agent can actively transform its sensory inputs. We propose a sensorimotor approach, inspired by this theory, in which the agent explores the world and discovers its properties by capturing the sensorimotor regularities they induce. This work presents an application of this approach to the discovery of a so-called visual field as the set of regularities that a visual sensor imposes on a naive agent's experience. A formalism is proposed to describe how those regularities can be captured in a sensorimotor predictive model. Finally, the approach is evaluated on a simulated system loosely inspired by the human retina.

* 23 pages, 7 figures, published in Neurocomputing
