Michael Garcia Ortiz

Efficient entity-based reinforcement learning

Jun 06, 2022

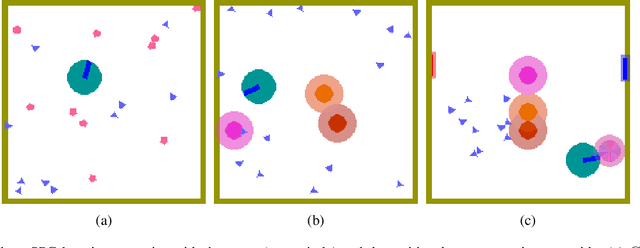

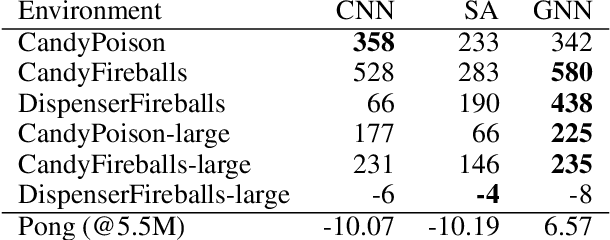

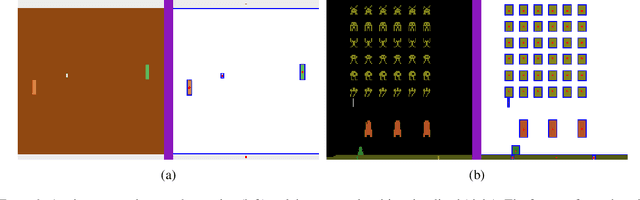

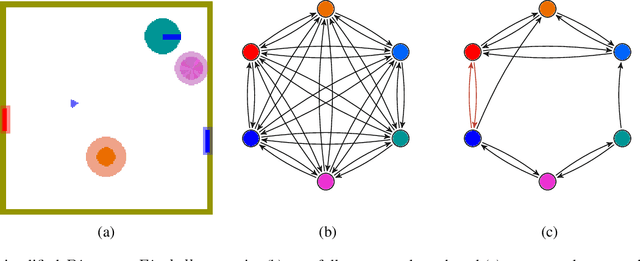

Abstract: Recent deep reinforcement learning (DRL) successes rely on end-to-end learning from fixed-size observational inputs (e.g., images, state variables). However, many challenging and interesting decision-making problems involve observations or intermediary representations that are best described as a set of entities: the image-based approach would miss small but important details in the observations (e.g., objects on a radar, vehicles in satellite images), the number of sensed objects is not fixed (e.g., robotic manipulation), or the problem simply cannot be represented in a meaningful way as an image (e.g., power grid control or logistics). This type of structured representation is not directly compatible with current DRL architectures. However, machine learning techniques that directly target structured information have become more common and could address this issue. We propose to combine recent advances in set representations with slot attention and graph neural networks to process structured data, broadening the range of applications of DRL algorithms. This approach makes it possible to address entity-based problems in an efficient and scalable way. We show that it can significantly improve training time and robustness, and demonstrate its potential to handle structured as well as purely visual domains on multiple environments from the Atari Learning Environment and Simple Playgrounds.
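To make the idea of a set-of-entities input concrete, here is a minimal sketch of a permutation-invariant encoder that maps a variable number of entities to a fixed-size vector. It is not the architecture from the paper: it replaces slot attention and graph neural networks with a much simpler shared embedding followed by mean pooling, and the names `make_weights`, `embed_entity`, and `encode_entity_set` are hypothetical.

```python
import random


def make_weights(in_dim, out_dim, seed=0):
    """Random weights for the shared per-entity linear map (illustrative only)."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(in_dim)] for _ in range(out_dim)]


def embed_entity(entity, weights):
    """Apply the same linear map followed by a ReLU to one entity's features."""
    return [max(0.0, sum(w * x for w, x in zip(row, entity))) for row in weights]


def encode_entity_set(entities, weights):
    """Mean-pool the per-entity embeddings into one fixed-size vector.

    The result does not depend on the order of the entities, and its size
    does not depend on how many entities there are.
    """
    out_dim = len(weights)
    if not entities:
        return [0.0] * out_dim
    embedded = [embed_entity(entity, weights) for entity in entities]
    return [sum(column) / len(embedded) for column in zip(*embedded)]


if __name__ == "__main__":
    weights = make_weights(in_dim=3, out_dim=4)
    # Each entity is a hypothetical feature vector, e.g. (x, y, type).
    entities = [[1.0, 0.0, 2.0], [0.5, -1.0, 0.0], [3.0, 1.0, 1.0]]
    print(encode_entity_set(entities, weights))
```

A fixed-size vector of this kind could be fed to a standard policy or value network in place of an image, which is the compatibility issue the abstract raises.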

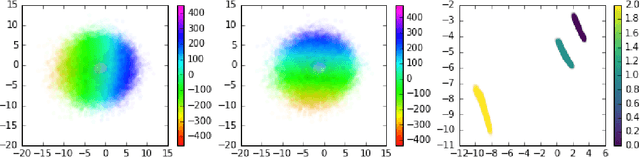

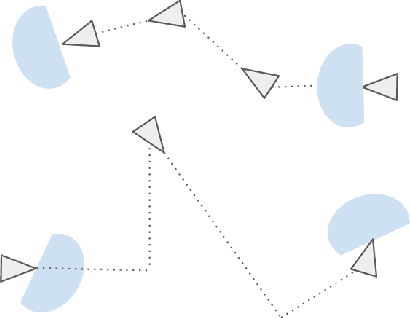

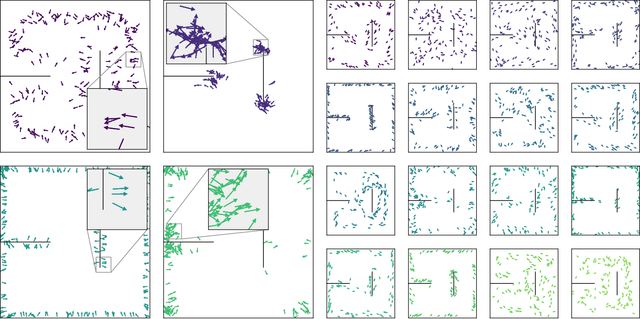

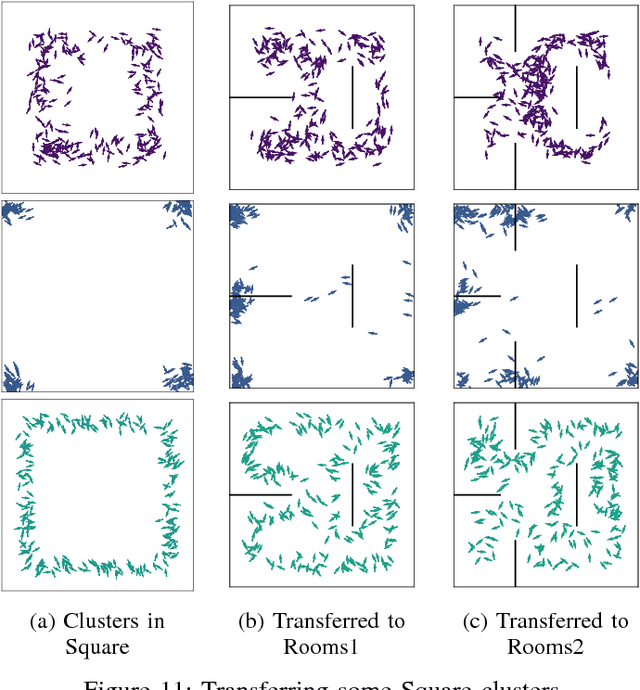

Unsupervised Emergence of Egocentric Spatial Structure from Sensorimotor Prediction

Jun 04, 2019

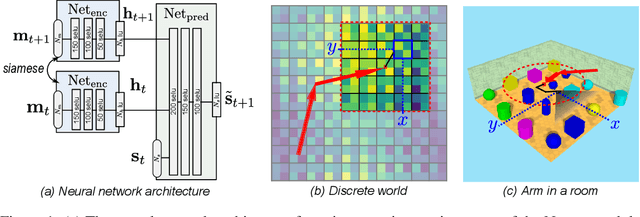

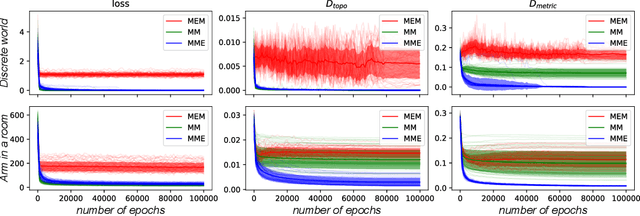

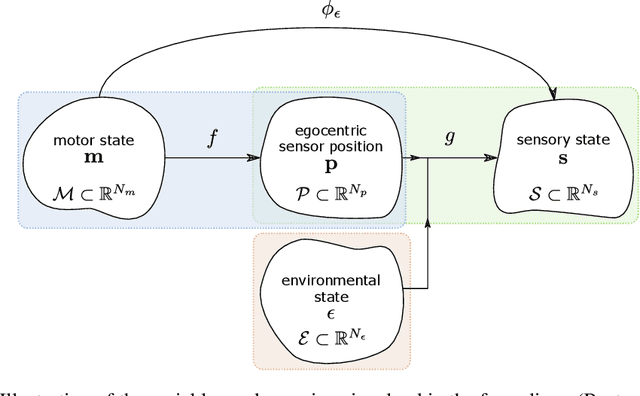

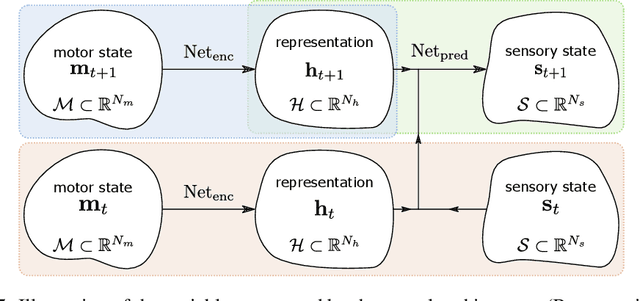

Abstract: Despite its omnipresence in robotics applications, the nature of spatial knowledge and the mechanisms that underlie its emergence in autonomous agents are still poorly understood. Recent theoretical works suggest that the Euclidean structure of space induces invariants in an agent's raw sensorimotor experience. We hypothesize that capturing these invariants is beneficial for sensorimotor prediction and that, under certain exploratory conditions, a motor representation capturing the structure of the external space should emerge as a byproduct of learning to predict future sensory experiences. We propose a simple sensorimotor predictive scheme, apply it to different agents and types of exploration, and evaluate the pertinence of these hypotheses. We show that a naive agent can capture the topology and metric regularity of its sensor's position in an egocentric spatial frame without any a priori knowledge or extraneous supervision.

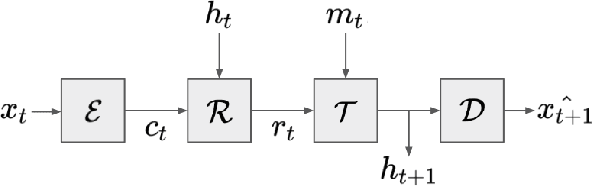

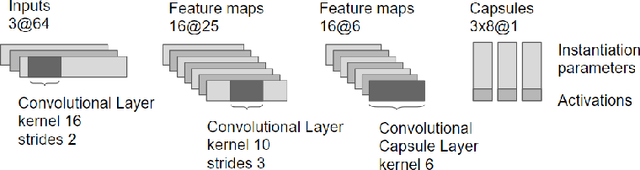

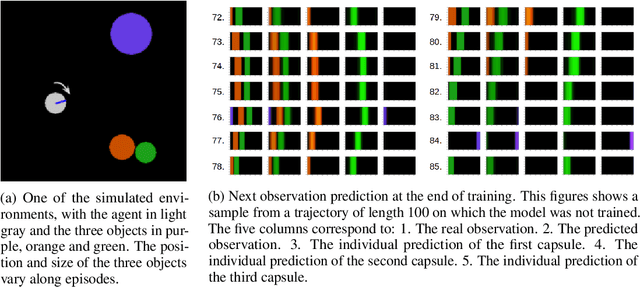

State representation learning with recurrent capsule networks

Jan 21, 2019

Abstract: Unsupervised learning of compact and relevant state representations has proven very useful for solving complex reinforcement learning tasks. In this paper, we propose a recurrent capsule network that learns such representations by trying to predict the future observations in an agent's trajectory.

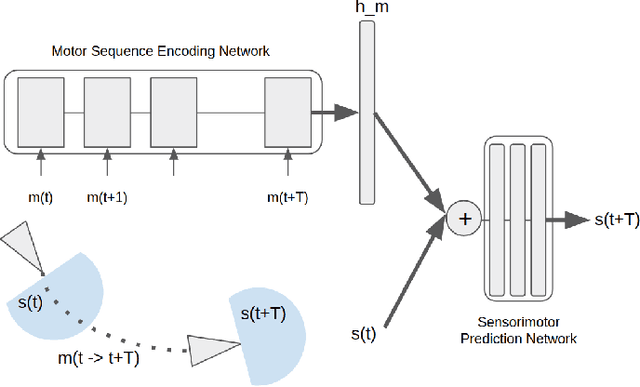

Learning Representations of Spatial Displacement through Sensorimotor Prediction

May 16, 2018

Abstract: Robots act in their environment through sequences of continuous motor commands. Because of the dimensionality of the motor space, as well as the infinite possible combinations of successive motor commands, agents need compact representations that capture the structure of the resulting displacements. In the case of an autonomous agent with no a priori knowledge about its sensorimotor apparatus, this compression has to be learned. We propose to use Recurrent Neural Networks to encode motor sequences into a compact representation, which is used to predict the consequences of motor sequences in terms of sensory changes. We show that sensory prediction can successfully guide the compression of motor sequences into representations that are organized topologically in terms of spatial displacement.
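The encode-then-predict structure described in this abstract can be sketched as a forward pass. This is an untrained illustration with random weights and a plain Elman-style recurrence, not the authors' model; the function names, dimensions, and parameter names (`W_in`, `W_rec`, `W_out`) are assumptions. In the paper's setting the weights would be learned by minimizing the sensory prediction error, which this sketch omits.

```python
import math
import random


def init_params(motor_dim, hidden_dim, sensor_dim, seed=0):
    """Random, untrained parameters (hypothetical names and shapes)."""
    rng = random.Random(seed)

    def matrix(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    return {
        "W_in": matrix(hidden_dim, motor_dim),    # motor command -> hidden
        "W_rec": matrix(hidden_dim, hidden_dim),  # hidden -> hidden
        "W_out": matrix(sensor_dim, hidden_dim),  # hidden -> sensory change
    }


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def encode_motor_sequence(commands, params):
    """Fold a motor sequence of any length into one fixed-size hidden state."""
    hidden = [0.0] * len(params["W_rec"])
    for command in commands:
        drive = matvec(params["W_in"], command)
        recurrent = matvec(params["W_rec"], hidden)
        hidden = [math.tanh(d + r) for d, r in zip(drive, recurrent)]
    return hidden


def predict_sensory_change(commands, params):
    """Linear readout from the compact code to a predicted sensory change."""
    return matvec(params["W_out"], encode_motor_sequence(commands, params))


if __name__ == "__main__":
    params = init_params(motor_dim=2, hidden_dim=5, sensor_dim=3)
    sequence = [[0.1, -0.2], [0.0, 0.3], [0.4, 0.4]]
    print(encode_motor_sequence(sequence, params))
    print(predict_sensory_change(sequence, params))
```

The point of the design is that sequences of different lengths all map to a code of the same size, so the prediction error can shape a single representation space for displacements.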

Representation Learning in Partially Observable Environments using Sensorimotor Prediction

Apr 26, 2018

Abstract: In order to explore and act autonomously in an environment, an agent needs to learn from the sensorimotor information that is captured while acting. By extracting the regularities in this sensorimotor stream, it can learn a model of the world, which in turn can be used as a basis for action and exploration. This requires the acquisition of compact representations from a possibly high-dimensional raw observation, which is noisy and ambiguous. In this paper, we learn sensory representations from sensorimotor prediction. We propose a model that integrates sensorimotor information over time and projects it into a sensory representation that is useful for prediction. Using a simple example, we highlight the role of motor information and memory in learning sensory representations.
