Louis Annabi

Flowers, U2IS

Analyzing Data Efficiency and Performance of Machine Learning Algorithms for Assessing Low Back Pain Physical Rehabilitation Exercises

Aug 05, 2024

Abstract: Analyzing human motion is an active research area with various applications. In this work, we focus on human motion analysis in the context of physical rehabilitation using a robot coach system. Computer-aided assessment of physical rehabilitation entails evaluating patient performance in completing prescribed rehabilitation exercises, based on processing movement data captured with a sensory system, such as RGB and RGB-D cameras. As 2D and 3D human pose estimation from RGB images has improved markedly, we compare the assessment of physical rehabilitation exercises using movement data obtained from an RGB-D camera (Microsoft Kinect) and from pose estimation on RGB videos (OpenPose and BlazePose algorithms). A Gaussian Mixture Model (GMM) is applied to position (and orientation) features, with performance metrics defined from the GMM log-likelihood values. The evaluation is performed on a medical database of clinical patients carrying out low back pain rehabilitation exercises, previously coached by the robot Poppy.
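To make the log-likelihood idea concrete, here is a minimal sketch of scoring a movement sequence under a Gaussian mixture. It is not the paper's implementation: the one-dimensional feature, the hand-set mixture parameters (`WEIGHTS`, `MEANS`, `VARIANCES`) and the function names are all assumptions chosen for illustration, whereas the paper works with position (and orientation) features of much higher dimension.

```python
import math


def gaussian_logpdf(x, mean, var):
    """Log density of a 1-D Gaussian with the given mean and variance."""
    return -0.5 * (math.log(2.0 * math.pi * var) + (x - mean) ** 2 / var)


def gmm_loglik(x, weights, means, variances):
    """Log-likelihood of one sample under a 1-D Gaussian mixture.

    Uses the log-sum-exp trick so that very unlikely samples do not underflow.
    """
    terms = [
        math.log(w) + gaussian_logpdf(x, m, v)
        for w, m, v in zip(weights, means, variances)
    ]
    top = max(terms)
    return top + math.log(sum(math.exp(t - top) for t in terms))


def mean_loglik(sequence, weights, means, variances):
    """Average per-sample log-likelihood, used here as a performance score."""
    total = sum(gmm_loglik(x, weights, means, variances) for x in sequence)
    return total / len(sequence)


# Hypothetical mixture standing in for a model of the reference movement.
WEIGHTS = [0.5, 0.5]
MEANS = [0.0, 1.0]
VARIANCES = [0.05, 0.05]

# Hypothetical feature sequences: one close to the reference, one far from it.
close_to_reference = [0.0, 0.1, 0.9, 1.0]
far_from_reference = [0.5, 1.6, -0.7, 2.0]

score_close = mean_loglik(close_to_reference, WEIGHTS, MEANS, VARIANCES)
score_far = mean_loglik(far_from_reference, WEIGHTS, MEANS, VARIANCES)
print(score_close > score_far)
```

A higher average log-likelihood means the observed movement is closer to what the model considers typical, which is the property a log-likelihood-based metric relies on.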

Prerequisite Structure Discovery in Intelligent Tutoring Systems

Jan 18, 2024

Abstract: This paper addresses the importance of Knowledge Structure (KS) and Knowledge Tracing (KT) in improving the recommendation of educational content in intelligent tutoring systems. The KS represents the relations between different Knowledge Components (KCs), while KT predicts a learner's success based on her history. The contributions of this research include a KT model that incorporates the KS as a learnable parameter, enabling the discovery of the underlying KS from learner trajectories. The quality of the uncovered KS is assessed by using it to recommend content and evaluating the recommendation algorithm with simulated students.

On the Relationship Between Variational Inference and Auto-Associative Memory

Oct 14, 2022

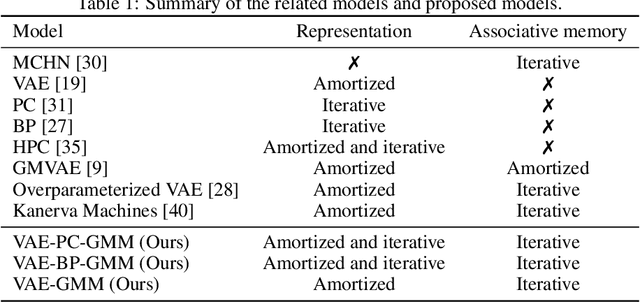

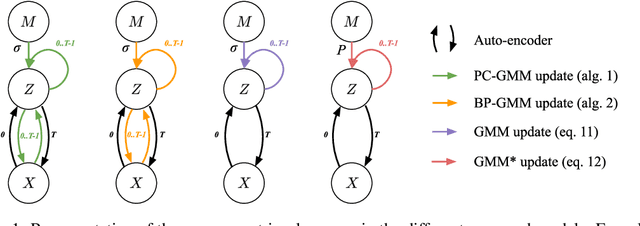

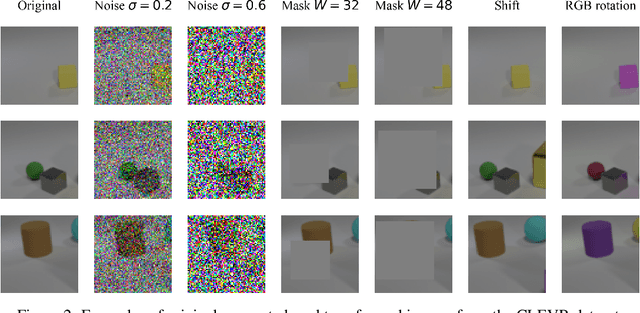

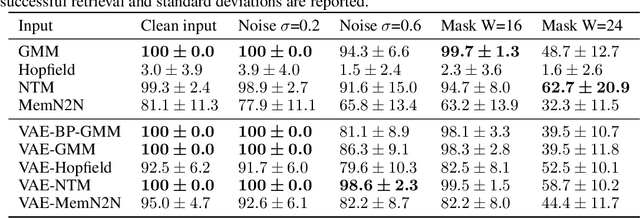

Abstract: In this article, we propose a variational inference formulation of auto-associative memories, allowing us to combine perceptual inference and memory retrieval in the same mathematical framework. In this formulation, the prior probability distribution over latent representations is made memory dependent, thus pulling the inference process towards previously stored representations. We then study how different neural network approaches to variational inference can be applied in this framework. We compare methods relying on amortized inference, such as Variational Auto Encoders, with methods relying on iterative inference, such as Predictive Coding, and suggest combining both approaches to design new auto-associative memory models. We evaluate the resulting algorithms on the CIFAR10 and CLEVR image datasets and compare them with other associative memory models such as Hopfield Networks, End-to-End Memory Networks and Neural Turing Machines.
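One way to write down the memory-dependent prior is sketched below. The notation is assumed for illustration and does not come from the abstract: $x$ is an observation, $z$ a latent representation, $M$ the memory contents, and $q(z)$ the approximate posterior. With a generative model $p(x, z \mid M) = p(x \mid z)\, p(z \mid M)$, the variational free energy to minimize is

$$
\mathcal{F}(q) = \mathbb{E}_{q(z)}\big[\log q(z) - \log p(x, z \mid M)\big]
= \mathrm{KL}\big(q(z) \,\|\, p(z \mid M)\big) - \mathbb{E}_{q(z)}\big[\log p(x \mid z)\big].
$$

The second term rewards latent representations that explain the observation, while the KL term penalizes departure from the prior $p(z \mid M)$. Because that prior depends on what has been stored, minimizing $\mathcal{F}$ pulls the inferred $z$ towards previously stored representations, which is how retrieval and perception can share one objective.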

Intrinsically Motivated Learning of Causal World Models

Aug 09, 2022

Abstract: Despite recent progress in deep learning and reinforcement learning, transfer and generalization of skills learned on specific tasks remain very limited compared to human (or animal) intelligence. The lifelong, incremental building of common sense knowledge might be a necessary component on the way to achieving more general intelligence. A promising direction is to build world models capturing the true physical mechanisms hidden behind the sensorimotor interaction with the environment. Here we explore the idea that inferring the causal structure of the environment could benefit from well-chosen actions as a means to collect relevant interventional data.

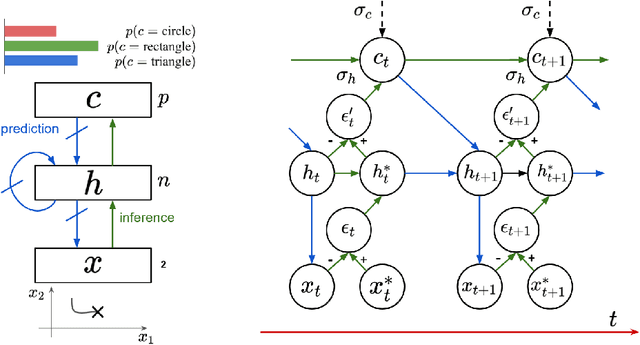

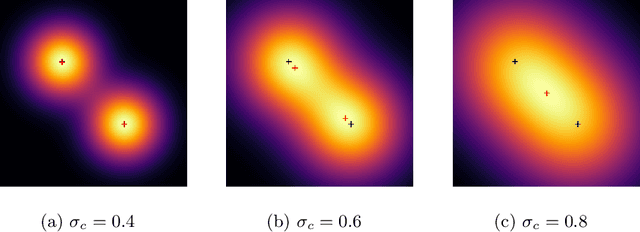

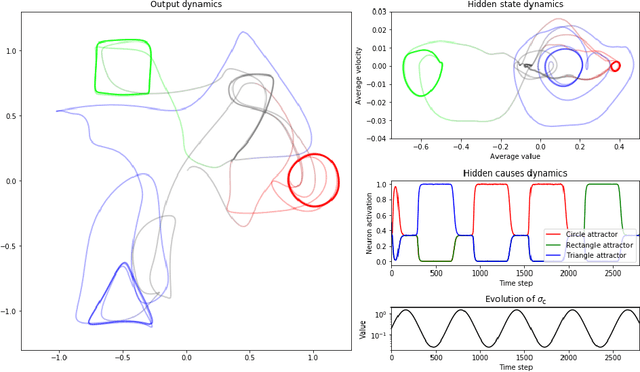

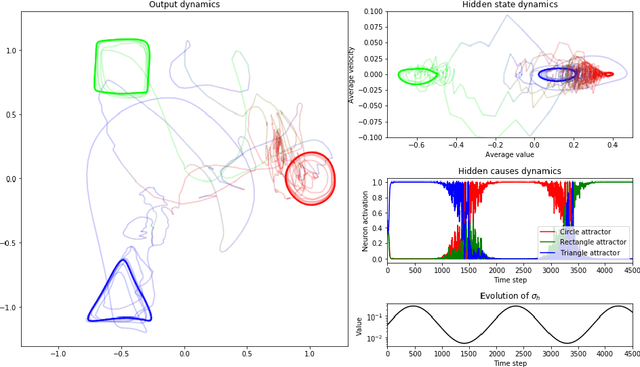

A Predictive Coding Account for Chaotic Itinerancy

Jun 16, 2021

Abstract: As a phenomenon in dynamical systems allowing autonomous switching between stable behaviors, chaotic itinerancy has gained interest in neurorobotics research. In this study, we draw a connection between this phenomenon and the predictive coding theory by showing how a recurrent neural network implementing predictive coding can generate neural trajectories similar to chaotic itinerancy in the presence of input noise. We propose two scenarios generating random and past-independent attractor switching trajectories using our model.

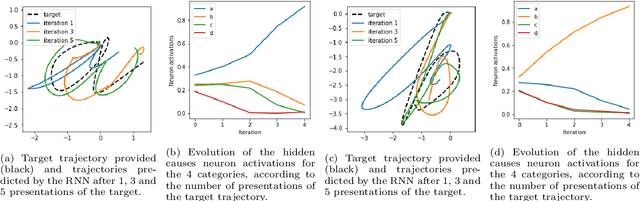

Bidirectional Interaction between Visual and Motor Generative Models using Predictive Coding and Active Inference

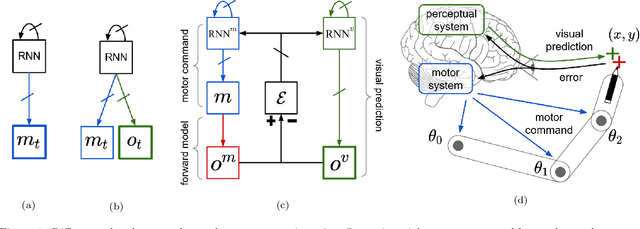

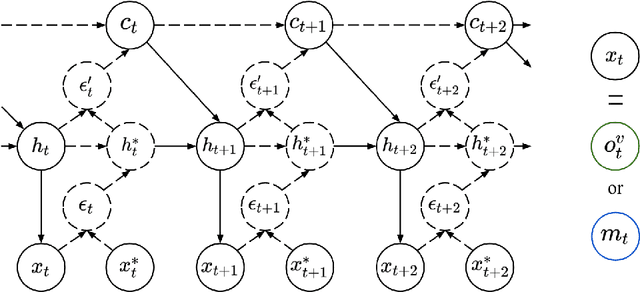

Apr 19, 2021

Abstract: In this work, we build upon the Active Inference (AIF) and Predictive Coding (PC) frameworks to propose a neural architecture comprising a generative model for sensory prediction and a distinct generative model for motor trajectories. We highlight how sequences of sensory predictions can act as rails guiding learning, control and online adaptation of motor trajectories. We further investigate the effects of bidirectional interactions between the motor and the visual modules. The architecture is tested on the control of a simulated robotic arm learning to reproduce handwritten letters.

Autonomous learning and chaining of motor primitives using the Free Energy Principle

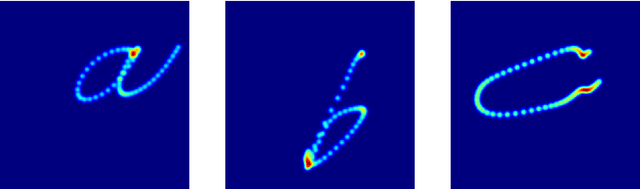

May 11, 2020

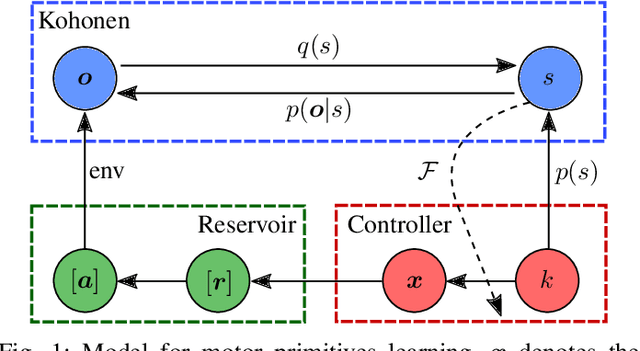

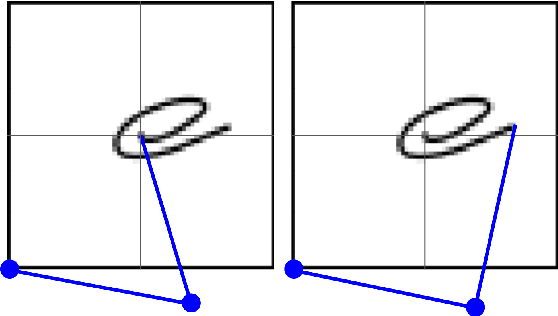

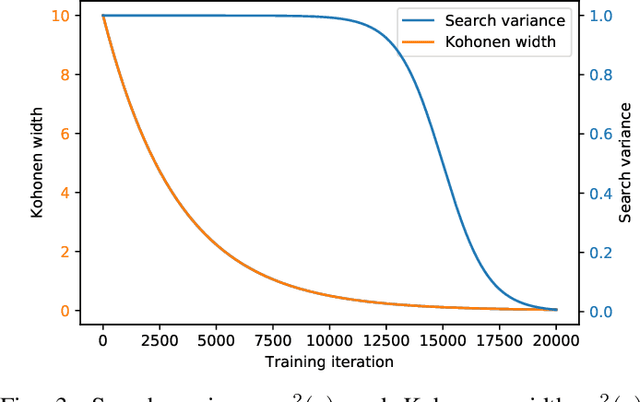

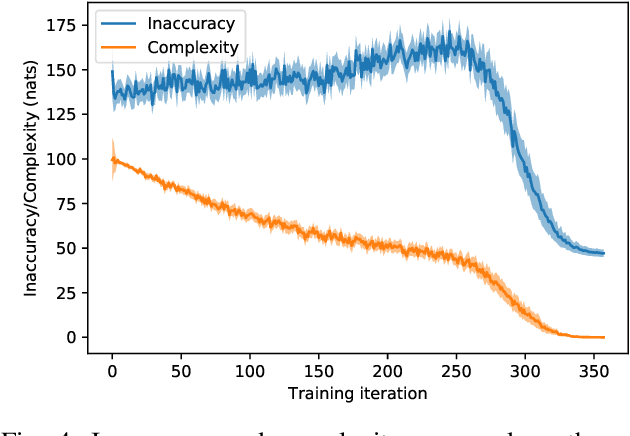

Abstract: In this article, we apply the Free-Energy Principle to the question of motor primitive learning. An echo-state network is used to generate motor trajectories. We combine this network with a perception module and a controller that can influence its dynamics. This new compound network permits the autonomous learning of a repertoire of motor trajectories. To evaluate the repertoires built with our method, we exploit them in a handwriting task where primitives are chained to produce long-range sequences.
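For readers unfamiliar with echo-state networks, the sketch below shows the generic mechanism: a fixed, randomly connected recurrent reservoir whose state is updated through a `tanh` nonlinearity, with only a linear readout left to train. It is a textbook-style illustration, not the architecture of the paper; the function names, the reservoir size, the scalar input standing in for a controller signal, and the weight scaling are all assumptions.

```python
import math
import random


def make_reservoir(n_units, scale=0.9, seed=0):
    """Build fixed random recurrent and input weights (never trained).

    Recurrent weights are drawn with standard deviation scale / sqrt(n_units),
    which keeps the spectral radius roughly around `scale` for large reservoirs.
    """
    rng = random.Random(seed)
    std = scale / math.sqrt(n_units)
    w = [[rng.gauss(0.0, std) for _ in range(n_units)] for _ in range(n_units)]
    w_in = [rng.uniform(-1.0, 1.0) for _ in range(n_units)]
    return w, w_in


def step(state, w, w_in, u):
    """One reservoir update: x_next = tanh(W x + w_in * u)."""
    return [
        math.tanh(sum(w_ij * s for w_ij, s in zip(row, state)) + b * u)
        for row, b in zip(w, w_in)
    ]


def run_reservoir(w, w_in, inputs):
    """Drive the reservoir with a scalar input sequence, starting from rest."""
    state = [0.0] * len(w)
    states = []
    for u in inputs:
        state = step(state, w, w_in, u)
        states.append(state)
    return states


def readout(state, w_out):
    """Linear readout; in an echo-state network this is the only trained part."""
    return sum(o * s for o, s in zip(w_out, state))


# Hypothetical usage: a short input sequence drives an 8-unit reservoir.
w, w_in = make_reservoir(8, seed=1)
states = run_reservoir(w, w_in, [1.0, 0.5, 0.0, -0.5])
print(len(states), len(states[0]))
```

Keeping the recurrent weights fixed is the design choice that makes these networks cheap to train: fitting the readout is a linear regression from reservoir states to the target trajectory.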

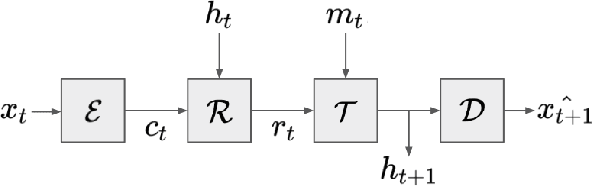

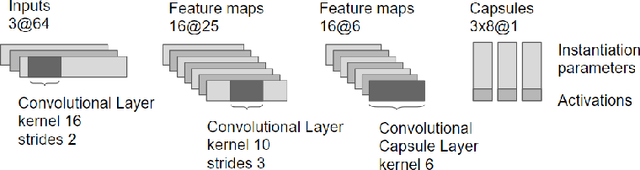

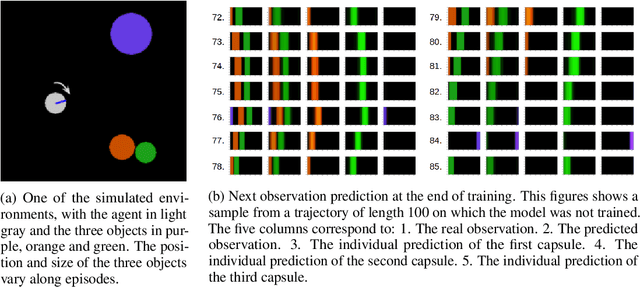

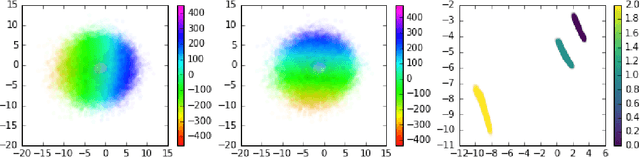

State representation learning with recurrent capsule networks

Jan 21, 2019

Abstract: Unsupervised learning of compact and relevant state representations has proven very useful for solving complex reinforcement learning tasks. In this paper, we propose a recurrent capsule network that learns such representations by trying to predict the future observations in an agent's trajectory.

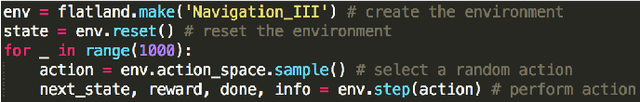

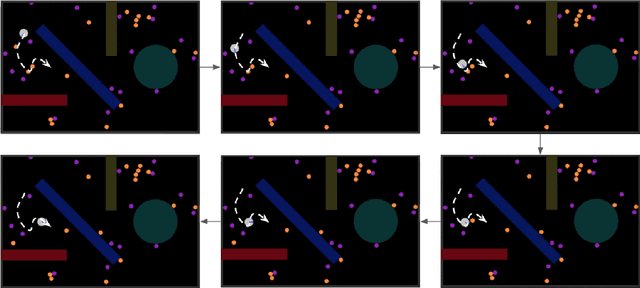

Flatland: a Lightweight First-Person 2-D Environment for Reinforcement Learning

Sep 10, 2018

Abstract: Flatland is a simple, lightweight environment for fast prototyping and testing of reinforcement learning agents. It is less complex than similar 3D platforms (e.g. DeepMind Lab or VizDoom), but emulates physical properties of the real world, such as continuity, multi-modal partially-observable states with first-person view and coherent physics. We propose to use it as an intermediary benchmark for problems related to Lifelong Learning. Flatland is highly customizable and offers a wide range of task difficulty to extensively evaluate the properties of artificial agents. We experiment with three reinforcement learning baseline agents and show that they can rapidly solve a navigation task in Flatland. A video of an agent acting in Flatland is available here: https://youtu.be/I5y6Y2ZypdA.
