Bidirectional Interaction between Visual and Motor Generative Models using Predictive Coding and Active Inference


Apr 19, 2021

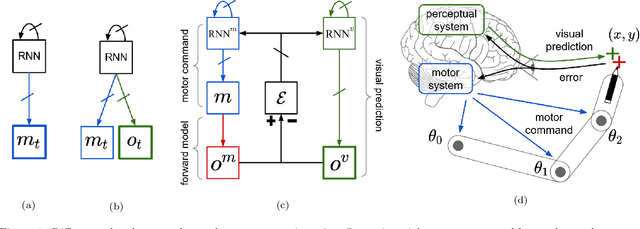

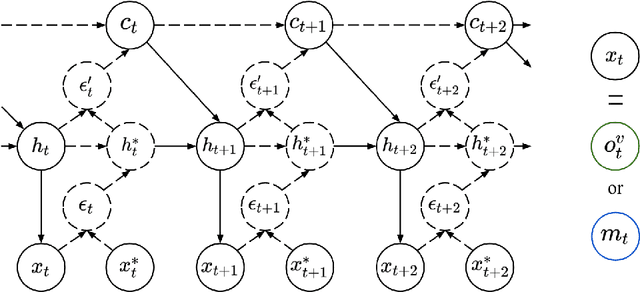

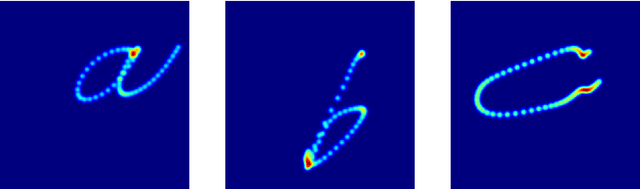

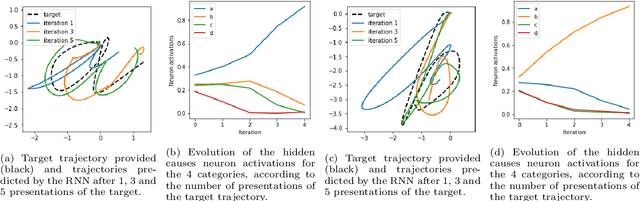

In this work, we build upon the Active Inference (AIF) and Predictive Coding (PC) frameworks to propose a neural architecture comprising a generative model for sensory prediction and a distinct generative model for motor trajectories. We highlight how sequences of sensory predictions can act as rails guiding the learning, control and online adaptation of motor trajectories. We further investigate the effects of bidirectional interactions between the motor and visual modules. The architecture is tested on the control of a simulated robotic arm learning to reproduce handwritten letters.
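The idea of sensory predictions acting as rails for a motor trajectory can be illustrated with a toy example. The sketch below is not the architecture from the paper: it assumes a hypothetical two-joint planar arm with unit link lengths, treats a fixed sequence of predicted end-effector positions as the "visual prediction", and uses plain gradient descent on the squared prediction error as a stand-in for the PC/AIF inference scheme. All function names and parameters (`forward_kinematics`, `adapt_trajectory`, `lr`, `steps`) are illustrative assumptions.

```python
import numpy as np

L1, L2 = 1.0, 1.0  # hypothetical link lengths of a 2-joint planar arm


def forward_kinematics(q):
    """Map joint angles q of shape (T, 2) to end-effector positions (T, 2)."""
    x = L1 * np.cos(q[:, 0]) + L2 * np.cos(q[:, 0] + q[:, 1])
    y = L1 * np.sin(q[:, 0]) + L2 * np.sin(q[:, 0] + q[:, 1])
    return np.stack([x, y], axis=1)


def jacobian(q):
    """Return d(position)/d(angles) for each time step, shape (T, 2, 2)."""
    s1, c1 = np.sin(q[:, 0]), np.cos(q[:, 0])
    s12, c12 = np.sin(q[:, 0] + q[:, 1]), np.cos(q[:, 0] + q[:, 1])
    J = np.empty((q.shape[0], 2, 2))
    J[:, 0, 0] = -L1 * s1 - L2 * s12
    J[:, 0, 1] = -L2 * s12
    J[:, 1, 0] = L1 * c1 + L2 * c12
    J[:, 1, 1] = L2 * c12
    return J


def adapt_trajectory(q, visual_prediction, lr=0.1, steps=200):
    """Adjust the motor trajectory q so the arm follows the predicted path.

    Each iteration descends the gradient of 0.5 * ||error||^2, where the
    error is the gap between the arm position and the visual prediction.
    """
    errors = []
    for _ in range(steps):
        err = forward_kinematics(q) - visual_prediction  # (T, 2)
        errors.append(float(np.mean(np.sum(err ** 2, axis=1))))
        grad = np.einsum("tij,ti->tj", jacobian(q), err)  # J^T err per step
        q = q - lr * grad
    return q, errors


# Toy "visual prediction": a half-circle stroke in the workspace (assumed data).
t = np.linspace(0.0, np.pi, 20)
target = np.stack([1.0 + 0.3 * np.cos(t), 0.8 + 0.3 * np.sin(t)], axis=1)

q0 = np.tile(np.array([0.3, 1.0]), (len(t), 1))  # initial motor trajectory
q_final, errors = adapt_trajectory(q0, target)
print(f"mean squared error: {errors[0]:.4f} -> {errors[-1]:.4f}")
```

In this simplified setting the interaction is one-directional (vision guides motor). A bidirectional variant would also let the motor error update the visual prediction, which this sketch does not attempt.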
